Junyang Jiang

Convolutional Gaussian Embeddings for Personalized Recommendation with Uncertainty

Jun 19, 2020

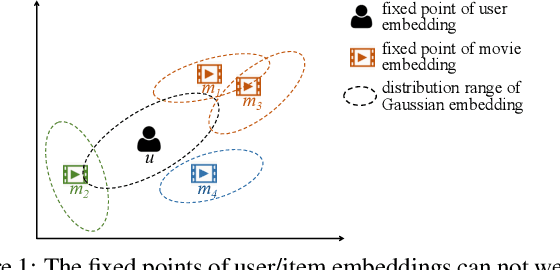

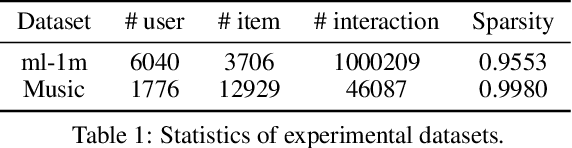

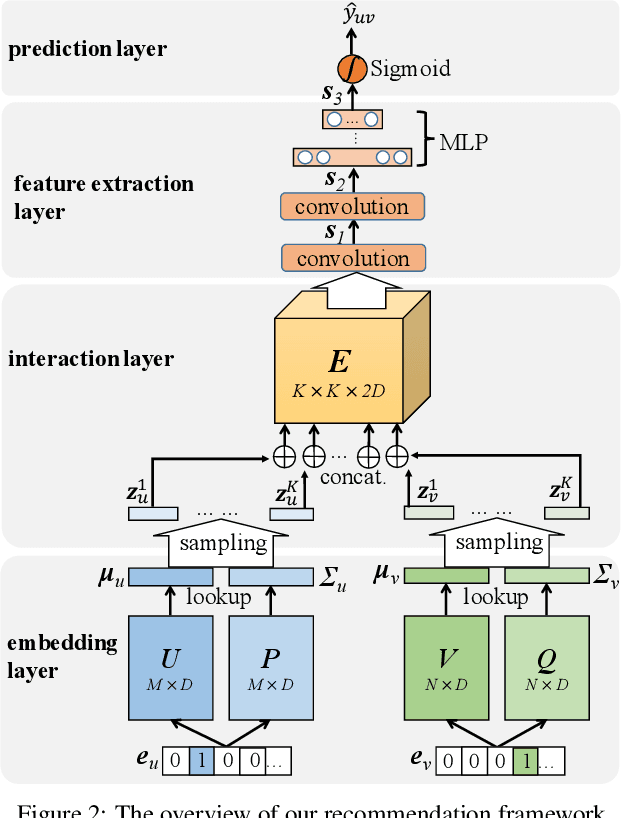

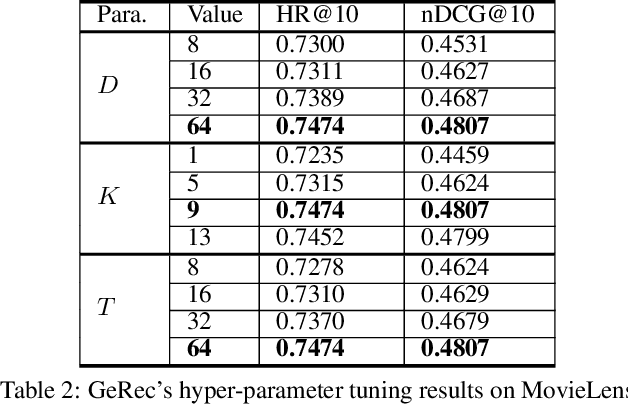

Abstract: Most existing embedding-based recommendation models represent users and items with embeddings (vectors) that correspond to a single fixed point in a low-dimensional space. Such embeddings fail to precisely represent users and items with uncertainty, which is often observed in recommender systems. To address this problem, we propose a unified deep recommendation framework employing Gaussian embeddings, which are proven adaptive to the uncertain preferences exhibited by some users, resulting in better user representations and recommendation performance. Furthermore, our framework adopts Monte-Carlo sampling and convolutional neural networks to compute the correlation between the objective user and the candidate item, based on which precise recommendations are achieved. Our extensive experiments on two benchmark datasets not only justify that the proposed Gaussian embeddings capture the uncertainty of users very well, but also demonstrate the framework's superior performance over state-of-the-art recommendation models.

Deeper Insights into Weight Sharing in Neural Architecture Search

Jan 06, 2020

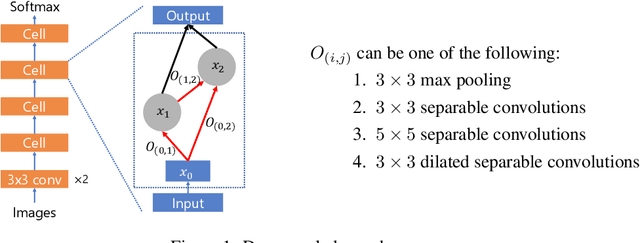

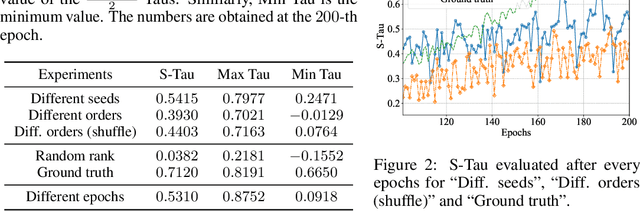

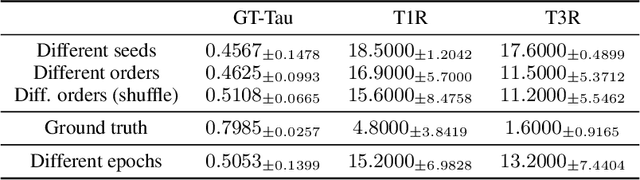

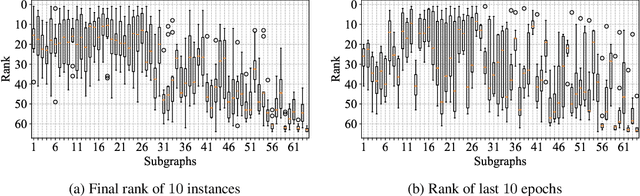

Abstract: With the success of deep neural networks, Neural Architecture Search (NAS), as a way of automatic model design, has attracted wide attention. As training every child model from scratch is very time-consuming, recent works leverage weight-sharing to speed up the model evaluation procedure. These approaches greatly reduce computation by maintaining a single copy of weights on the super-net and sharing the weights among all child models. However, weight-sharing has no theoretical guarantee, and its impact has not been well studied. In this paper, we conduct comprehensive experiments to reveal the impact of weight-sharing: (1) the best-performing models from different runs, or even from consecutive epochs within the same run, have significant variance; (2) even with high variance, we can extract valuable information from training the super-net with shared weights; (3) the interference between child models is a main factor that induces high variance; (4) properly reducing the degree of weight-sharing can effectively reduce variance and improve performance.
