Junlin Lv

Identify Critical KV Cache in LLM Inference from an Output Perturbation Perspective

Feb 06, 2025

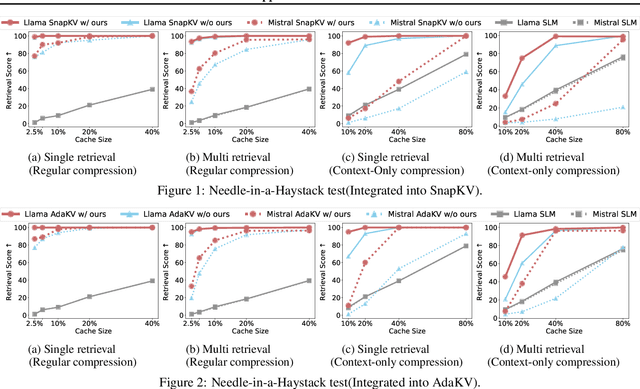

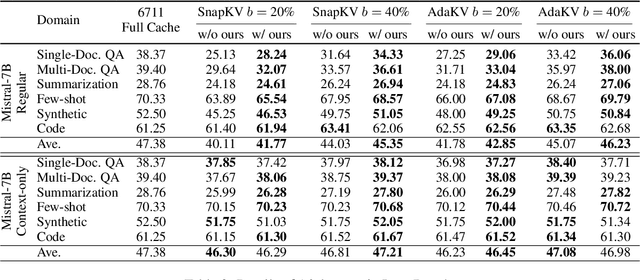

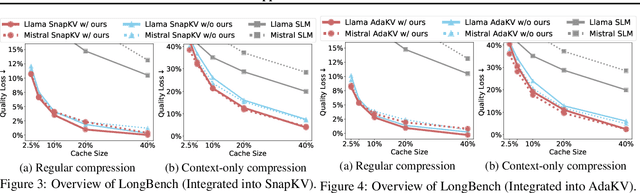

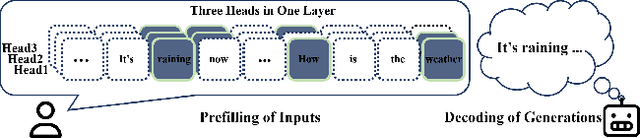

Abstract:Large language models have revolutionized natural language processing but face significant challenges of high storage and runtime costs, due to the transformer architecture's reliance on self-attention, particularly the large Key-Value (KV) cache for long-sequence inference. Recent efforts to reduce KV cache size by pruning less critical entries based on attention weights remain empirical and lack formal grounding. This paper presents a formal study on identifying critical KV cache entries by analyzing attention output perturbation. Our analysis reveals that, beyond attention weights, the value states within KV entries and pretrained parameter matrices are also crucial. Based on this, we propose a perturbation-constrained selection algorithm that optimizes the worst-case output perturbation to identify critical entries. Evaluations on the Needle-in-a-Haystack test and Longbench benchmark show our algorithm enhances state-of-the-art cache eviction methods. Further empirical analysis confirms that our algorithm achieves lower output perturbations in over 92% attention heads in Llama model, thereby providing a significant improvement over existing methods.

Optimizing KV Cache Eviction in LLMs: Adaptive Allocation for Enhanced Budget Utilization

Jul 16, 2024

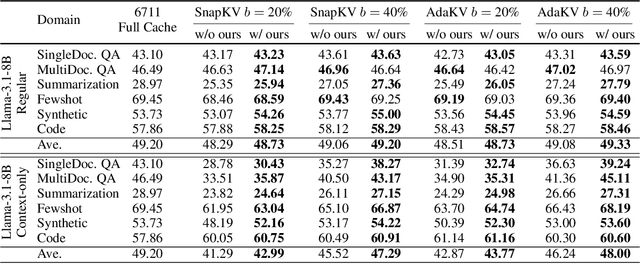

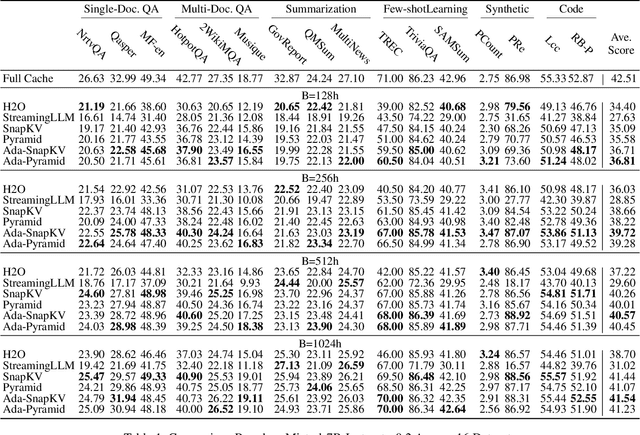

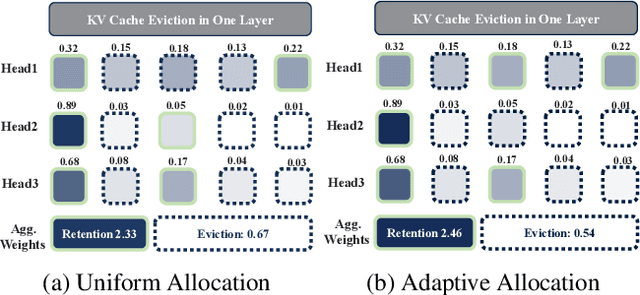

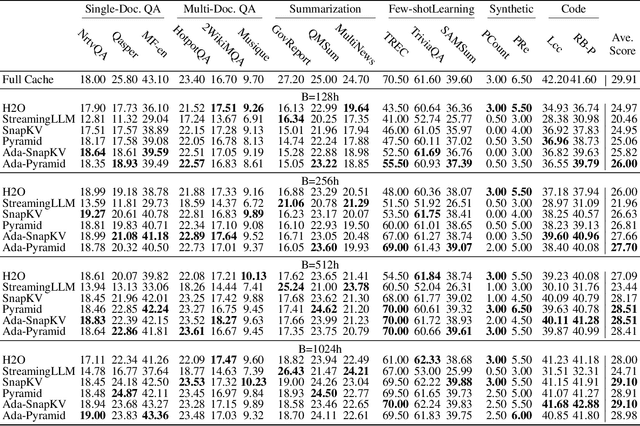

Abstract:Large Language Models have excelled in various fields but encounter efficiency limitations due to the extensive KV cache required for long sequences inference. Many efforts try to evict non-critical cache elements during runtime, thereby reducing cache size within a given memory budget while preserving generation quality. Our reexamination of their underlying principles discerns that prevailing strategies essentially aim to minimize an upper bound of eviction loss within a specific budget allocation. However, we observe that the current practice of uniformly allocating budgets across different attention heads during the eviction procedure tends to degrade the quality of generation posten-eviction. In light of these findings, we propose a simple yet effective adaptive allocation algorithm that not only theoretically ensures its loss upper bound does not exceed that of previous uniform allocation methods, but also effectively aligns with the characteristics of the self-attention mechanism, thus practically reducing the upper bound. Further, integrating this algorithm with two of the most advanced methods yields Ada-SnapKV and Ada-Pyramid. Extensive experimental validation across 16 datasets and the Needle-in-a-Haystack test confirm that Ada-SnapKV and Ada-Pyramid achieve further enhancements, establishing new benchmarks in state-of-the-art performance.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge