Julius Pesonen

Road Grip Uncertainty Estimation Through Surface State Segmentation

Apr 11, 2025

Abstract:Slippery road conditions pose significant challenges for autonomous driving. Beyond predicting road grip, it is crucial to estimate its uncertainty reliably to ensure safe vehicle control. In this work, we benchmark several uncertainty prediction methods to assess their effectiveness for grip uncertainty estimation. Additionally, we propose a novel approach that leverages road surface state segmentation to predict grip uncertainty. Our method estimates a pixel-wise grip probability distribution based on inferred road surface conditions. Experimental results indicate that the proposed approach enhances the robustness of grip uncertainty prediction.
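One way to picture a pixel-wise grip distribution derived from surface states is as a mixture: each surface class carries its own grip distribution, and the segmentation probabilities at a pixel act as mixture weights. The sketch below is only an illustration of that idea, not the method of the paper. The class names, the per-class mean and variance values in `GRIP_STATS`, and the function `pixel_grip_distribution` are all hypothetical.

```python
# Hypothetical per-class grip statistics as (mean, variance).
# These numbers are illustrative assumptions, not values from the paper.
GRIP_STATS = {
    "dry": (0.80, 0.002),
    "wet": (0.60, 0.005),
    "snow": (0.30, 0.010),
}


def pixel_grip_distribution(class_probs):
    """Return (mean, variance) of the grip mixture for one pixel.

    class_probs maps a surface class name to its segmentation probability.
    The probabilities are renormalised so that they sum to one.
    """
    total = sum(class_probs.values())
    weights = {c: p / total for c, p in class_probs.items()}

    # Mixture mean: probability-weighted average of the class means.
    mean = sum(w * GRIP_STATS[c][0] for c, w in weights.items())

    # Mixture variance (law of total variance): within-class variance
    # plus the spread of the class means around the mixture mean.
    var = sum(
        w * (GRIP_STATS[c][1] + (GRIP_STATS[c][0] - mean) ** 2)
        for c, w in weights.items()
    )
    return mean, var


confident = pixel_grip_distribution({"dry": 1.0})
ambiguous = pixel_grip_distribution({"dry": 0.5, "snow": 0.5})
```

Under these assumptions, a pixel whose surface state is ambiguous gets a wider grip distribution than a pixel confidently labelled as one class, which is the behaviour one would want from an uncertainty estimate.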

Detecting Wildfires on UAVs with Real-time Segmentation Trained by Larger Teacher Models

Aug 19, 2024

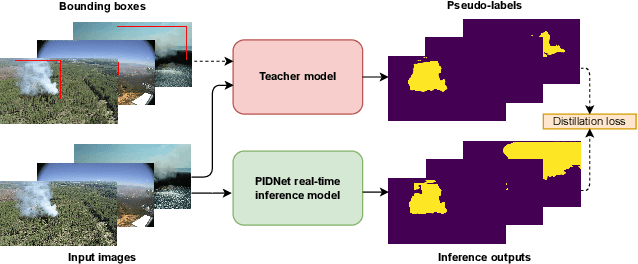

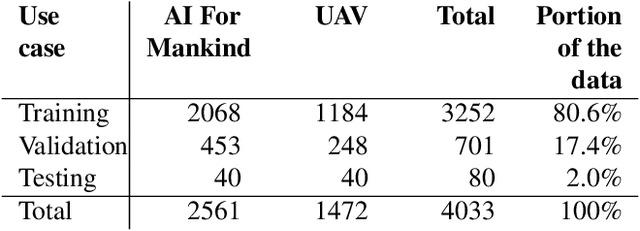

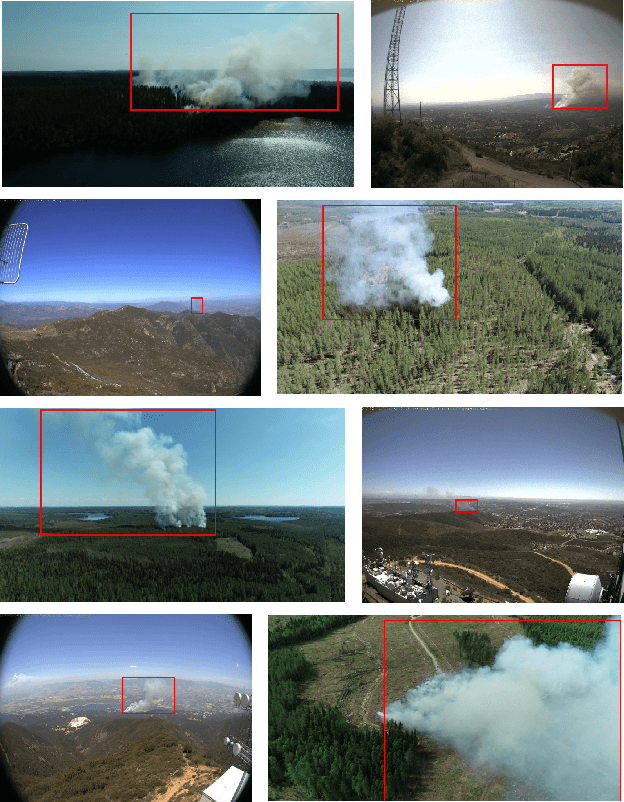

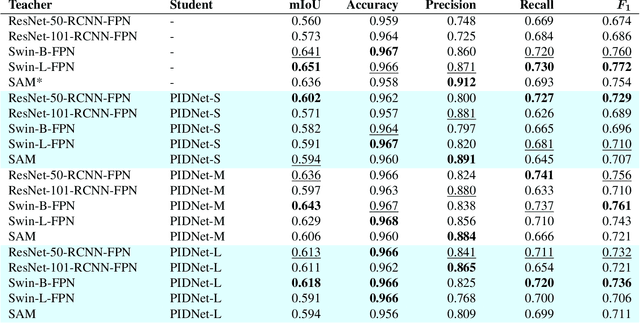

Abstract:Early detection of wildfires is essential to prevent large-scale fires that result in extensive environmental, structural, and societal damage. Uncrewed aerial vehicles (UAVs) can cover large remote areas effectively, can be deployed quickly, and require minimal infrastructure, and equipping them with small cameras and computers enables autonomous real-time detection. In remote areas, however, the UAVs are limited to on-board computing for detection due to the lack of high-bandwidth mobile networks. This limits detection to methods that are light enough to run on the on-board computer alone. For accurate camera-based localisation, segmentation of the detected smoke is essential, but training data for deep learning-based wildfire smoke segmentation is limited. This study shows how small specialised segmentation models can be trained using only bounding box labels, leveraging zero-shot foundation model supervision. The method has two advantages: it needs only bounding box labels, which are fairly easy to obtain, and it requires training only the smaller student network. The proposed method achieved 63.3% mIoU on a manually annotated and diverse wildfire dataset. The model runs in real time at ~11 fps on a UAV-carried NVIDIA Jetson Orin NX computer while reliably recognising smoke, as demonstrated at real-world forest burning events. Code is available at https://gitlab.com/fgi_nls/public/wildfire-real-time-segmentation
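For readers unfamiliar with the mIoU figure quoted above, the following is a minimal sketch of how mean intersection over union is commonly computed for segmentation masks. It assumes flattened label lists and skips classes absent from both prediction and ground truth; the function name `mean_iou` is illustrative, and the paper's exact evaluation protocol may differ (for example, in how scores are aggregated across images).

```python
def mean_iou(pred, target, num_classes):
    """Mean intersection over union for two flat lists of class labels.

    Classes that appear in neither pred nor target are skipped so that
    they do not distort the average.
    """
    ious = []
    for c in range(num_classes):
        # Pixels where both prediction and ground truth are class c.
        inter = sum(1 for p, t in zip(pred, target) if p == c and t == c)
        # Pixels where either prediction or ground truth is class c.
        union = sum(1 for p, t in zip(pred, target) if p == c or t == c)
        if union > 0:
            ious.append(inter / union)
    return sum(ious) / len(ious) if ious else float("nan")


# Toy example: 0 = background, 1 = smoke.
pred = [0, 0, 1, 1]
target = [0, 1, 1, 1]
score = mean_iou(pred, target, num_classes=2)
```

In the toy example the background IoU is 1/2 and the smoke IoU is 2/3, so the mean is their average.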

Dense Road Surface Grip Map Prediction from Multimodal Image Data

Apr 26, 2024

Abstract:Slippery road weather conditions are prevalent in many regions and pose a regular risk to traffic. Still, there has been relatively little research on how autonomous vehicles could detect slippery driving conditions on the road in order to drive safely. In this work, we propose a method to predict a dense grip map of the area in front of the car, based on postprocessed multimodal sensor data. We trained a convolutional neural network to predict pixelwise grip values from fused RGB camera, thermal camera, and LiDAR reflectance images, based on weakly supervised ground truth from an optical road weather sensor. The experiments show that it is possible to predict dense grip values with good accuracy from the used data modalities, as the produced grip map follows both ground truth measurements and local weather conditions, such as snowy areas on the road. Models using only the RGB camera or LiDAR reflectance modality provided good baseline results for grip prediction accuracy, while models fusing the RGB camera, thermal camera, and LiDAR modalities improved the grip predictions significantly.
