Julie Tores

MObyGaze: a film dataset of multimodal objectification densely annotated by experts

May 28, 2025

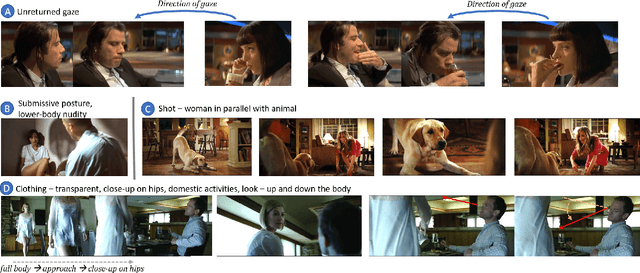

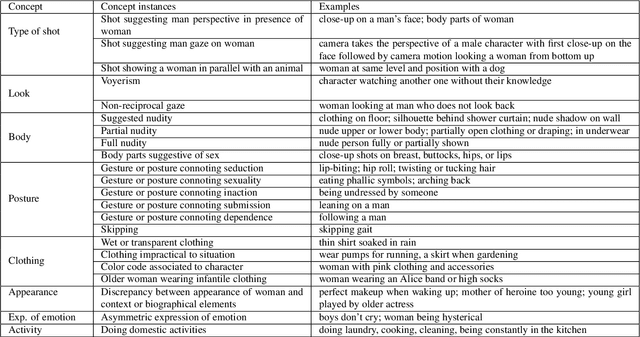

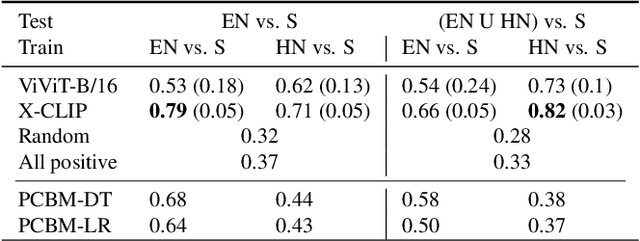

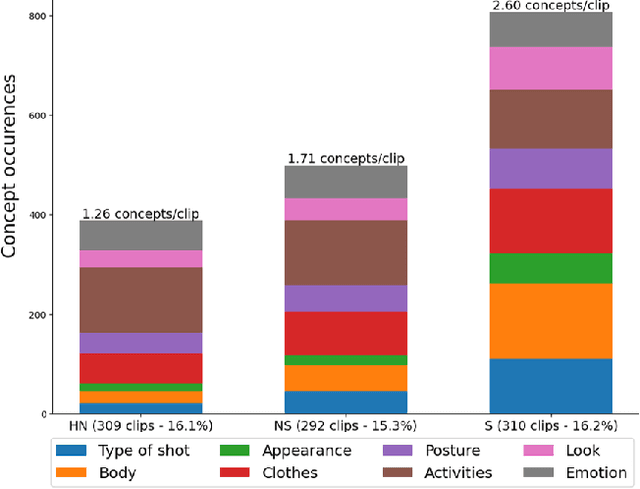

Abstract: Characterizing and quantifying gender representation disparities in audiovisual storytelling content is necessary to grasp how stereotypes may be perpetuated on screen. In this article, we consider the high-level construct of objectification and introduce a new AI task to the ML community: characterizing and quantifying the complex multimodal (visual, speech, audio) temporal patterns that produce objectification in films. Building on film studies and psychology, we define the construct of objectification in a structured thesaurus involving 5 sub-constructs manifesting through 11 concepts spanning 3 modalities. We introduce the Multimodal Objectifying Gaze (MObyGaze) dataset, made of 20 movies densely annotated by experts for objectification levels and concepts over freely delimited segments: it amounts to 6072 segments over 43 hours of video with fine-grained localization and categorization. We formulate different learning tasks, propose and investigate ways to learn from the diversity of labels among a low number of annotators, and benchmark recent vision, text and audio models, showing the feasibility of the task. We make our code and dataset available to the community, with the dataset described in the Croissant format: https://anonymous.4open.science/r/MObyGaze-F600/.

Leveraging multimodal explanatory annotations for video interpretation with Modality Specific Dataset

Apr 15, 2025

Abstract: We examine the impact of concept-informed supervision on multimodal video interpretation models using MOByGaze, a dataset containing human-annotated explanatory concepts. We introduce Concept Modality Specific Datasets (CMSDs), which consist of data subsets categorized by the modality (visual, textual, or audio) of annotated concepts. Models trained on CMSDs outperform those using traditional legacy training in both early and late fusion approaches. Notably, this approach enables late fusion models to achieve performance close to that of early fusion models. These findings underscore the importance of modality-specific annotations in developing robust, self-explainable video models and contribute to advancing interpretable multimodal learning in complex video analysis.

Visual Objectification in Films: Towards a New AI Task for Video Interpretation

Jan 24, 2024

Abstract: In film gender studies, the concept of 'male gaze' refers to the way characters are portrayed on-screen as objects of desire rather than subjects. In this article, we introduce a novel video-interpretation task: detecting character objectification in films. The purpose is to reveal and quantify the complex temporal patterns used in cinema to produce the cognitive perception of objectification. We introduce the ObyGaze12 dataset, made of 1914 movie clips densely annotated by experts for objectification concepts identified in film studies and psychology. We evaluate recent vision models, demonstrate the feasibility of the task, and show where challenges remain with concept bottleneck models. Our new dataset and code are made available to the community.
