Judy Shen

Algorithms with Calibrated Machine Learning Predictions

Feb 05, 2025

Abstract: The field of algorithms with predictions incorporates machine learning advice in the design of online algorithms to improve real-world performance. While this theoretical framework often assumes uniform reliability across all predictions, modern machine learning models can now provide instance-level uncertainty estimates. In this paper, we propose calibration as a principled and practical tool to bridge this gap, demonstrating the benefits of calibrated advice through two case studies: the ski rental and online job scheduling problems. For ski rental, we design an algorithm that achieves optimal prediction-dependent performance and prove that, in high-variance settings, calibrated advice offers more effective guidance than alternative methods for uncertainty quantification. For job scheduling, we demonstrate that using a calibrated predictor leads to significant performance improvements over existing methods. Evaluations on real-world data validate our theoretical findings, highlighting the practical impact of calibration for algorithms with predictions.

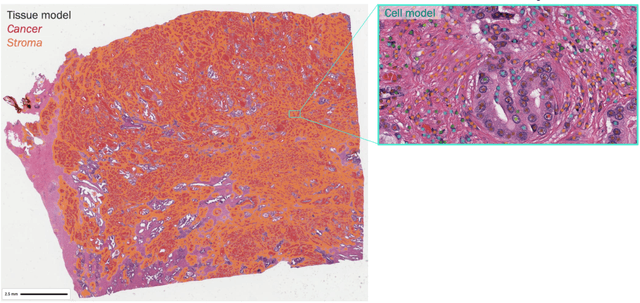

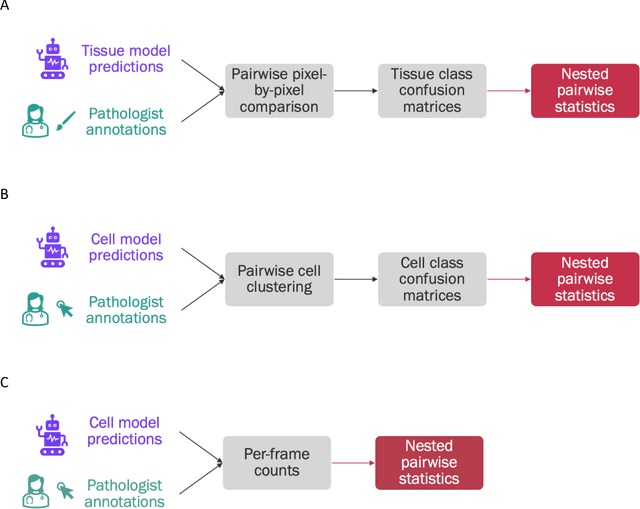

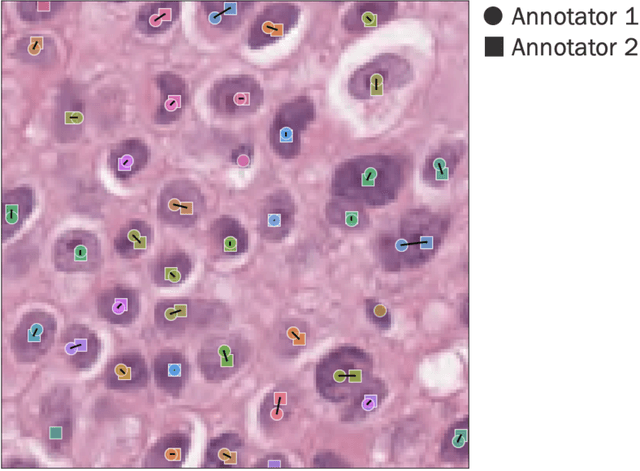

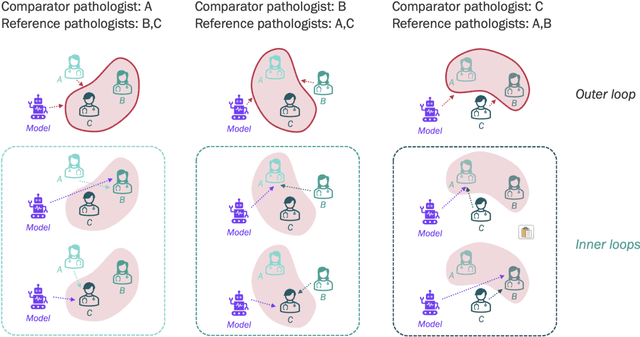

Improved statistical benchmarking of digital pathology models using pairwise frames evaluation

Jun 07, 2023

Abstract: Nested pairwise frames is a method for relative benchmarking of cell or tissue digital pathology models against manual pathologist annotations on a set of sampled patches. At a high level, the method compares agreement between a candidate model and pathologist annotations with agreement among pathologists' annotations. This evaluation framework addresses fundamental issues of data size and annotator variability in using manual pathologist annotations as a source of ground truth for model validation. We implemented nested pairwise frames evaluation for tissue classification, cell classification, and cell count prediction tasks and show results for cell and tissue models deployed on an H&E-stained melanoma dataset.
