Joshua Ransiek

Adversarial and Reactive Traffic Agents for Realistic Driving Simulation

Sep 21, 2024

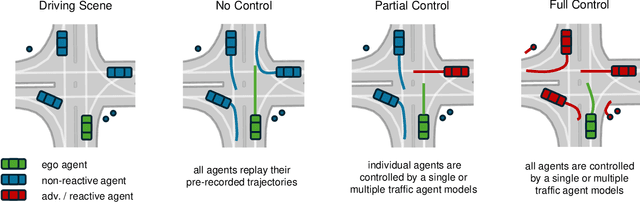

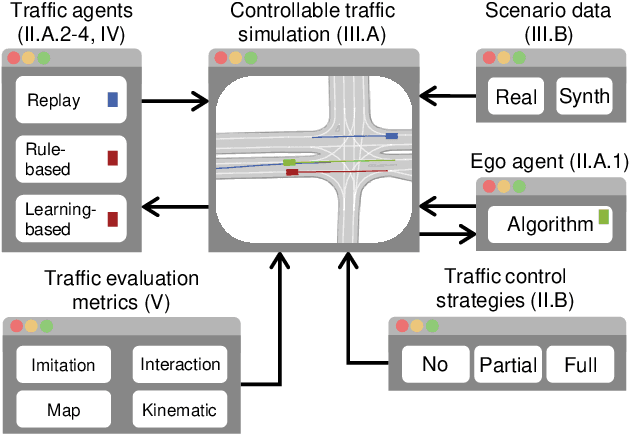

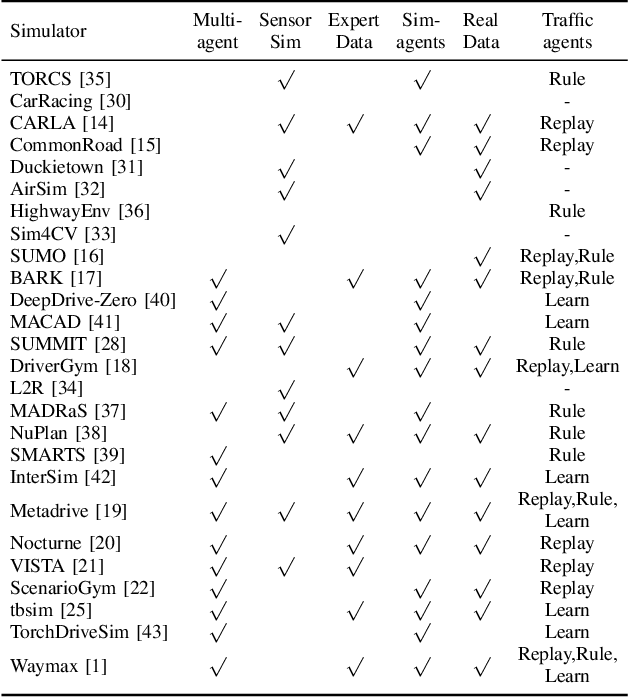

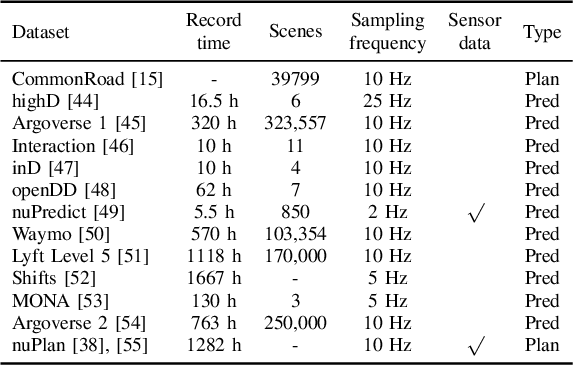

Abstract: Despite advancements in perception and planning for autonomous vehicles (AVs), validating their performance remains a significant challenge. The deployment of planning algorithms in real-world environments is often ineffective due to discrepancies between simulations and real traffic conditions. Evaluating AV planning algorithms in simulation typically involves replaying driving logs from recorded real-world traffic. However, agents replayed from offline data are not reactive, lack the ability to respond to arbitrary AV behavior, and cannot behave in an adversarial manner to test certain properties of the driving policy. Therefore, simulation with realistic and potentially adversarial agents represents a critical task for AV planning software validation. In this work, we aim to review current research efforts in the field of adversarial and reactive traffic agents, with a particular focus on the application of classical and adversarial learning-based techniques. The objective of this work is to categorize existing approaches based on the proposed scenario controllability, defined by the number of reactive or adversarial agents. Moreover, we examine existing traffic simulations with respect to their employed default traffic agents and potential extensions, collate datasets that provide initial driving data, and collect relevant evaluation metrics.

Disentangling Uncertainty for Safe Social Navigation using Deep Reinforcement Learning

Sep 16, 2024

Abstract: Autonomous mobile robots are increasingly employed in pedestrian-rich environments where safe navigation and appropriate human interaction are crucial. While Deep Reinforcement Learning (DRL) enables socially integrated robot behavior, it remains difficult to indicate when and why the policy is uncertain in novel or perturbed scenarios. Unknown uncertainty in decision-making can lead to collisions or human discomfort and is one reason why safe and risk-aware navigation is still an open problem. This work introduces a novel approach that integrates aleatoric, epistemic, and predictive uncertainty estimation into a DRL-based navigation framework to provide uncertainty estimates in decision-making. To this end, we incorporate Observation-Dependent Variance (ODV) and dropout into the Proximal Policy Optimization (PPO) algorithm. For different types of perturbations, we compare the ability of Deep Ensembles and Monte-Carlo Dropout (MC-Dropout) to estimate the uncertainties of the policy. In uncertain decision-making situations, we propose to change the robot's social behavior to conservative collision avoidance. The results show that the ODV-PPO algorithm converges faster with better generalization and disentangles the aleatoric and epistemic uncertainties. In addition, the MC-Dropout approach is more sensitive to perturbations and better able to correlate the uncertainty type with the perturbation type. With the proposed safe action selection scheme, the robot can navigate in perturbed environments with fewer collisions.
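
The split into aleatoric and epistemic uncertainty mentioned in this abstract can be illustrated with the standard variance decomposition over several stochastic forward passes (for example MC-Dropout samples or ensemble members). The following is only a minimal sketch under assumptions, not the paper's implementation: the function names, the array shapes, and the threshold-based fallback rule are all hypothetical.

```python
import numpy as np

def decompose_uncertainty(means, variances):
    """Split predictive variance using the law of total variance.

    means, variances: arrays of shape (num_samples, action_dim), one row per
    stochastic forward pass (hypothetical inputs, e.g. MC-Dropout samples).
    """
    means = np.asarray(means, dtype=float)
    variances = np.asarray(variances, dtype=float)
    aleatoric = variances.mean(axis=0)   # average of the predicted variances
    epistemic = means.var(axis=0)        # spread of the predicted means
    predictive = aleatoric + epistemic   # total predictive variance
    return aleatoric, epistemic, predictive

def select_action(social_action, conservative_action, epistemic, threshold=0.1):
    """Fall back to the conservative action when epistemic uncertainty is high.

    The threshold rule is an assumption made for illustration only.
    """
    if float(np.max(epistemic)) > threshold:
        return conservative_action
    return social_action
```

With two sampled passes whose means are 0 and 2 and whose variances are 1 and 3, the sketch yields an aleatoric term of 2, an epistemic term of 1, and a predictive variance of 3.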

1001 Ways of Scenario Generation for Testing of Self-driving Cars: A Survey

Apr 21, 2023

Abstract: Scenario generation is one of the essential steps in scenario-based testing and, therefore, a significant part of the verification and validation of driver assistance functions and autonomous driving systems. However, the term scenario generation is used for many different methods, e.g., extraction of scenarios from naturalistic driving data or variation of scenario parameters. This survey aims to give a systematic overview of different approaches, establish different categories of scenario acquisition and generation, and show that each group of methods has typical input and output types. It shows that although the term is often used throughout the literature, the evaluated methods use different inputs and the resulting scenarios differ in abstraction level and from a systematic point of view. Additionally, recent research and literature examples are given to underline this categorization.
