Joseph Z. Xu

Fiona

Assessing Post-Disaster Damage from Satellite Imagery using Semi-Supervised Learning Techniques

Nov 24, 2020

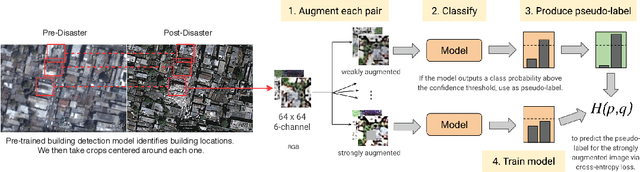

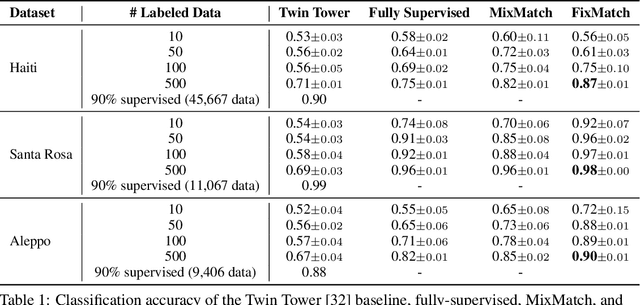

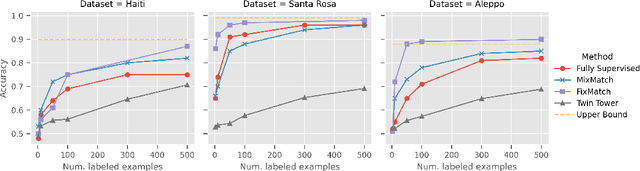

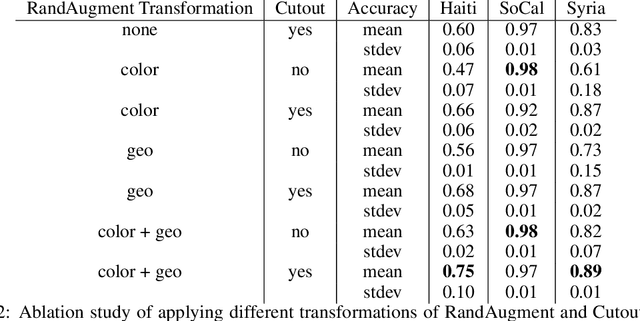

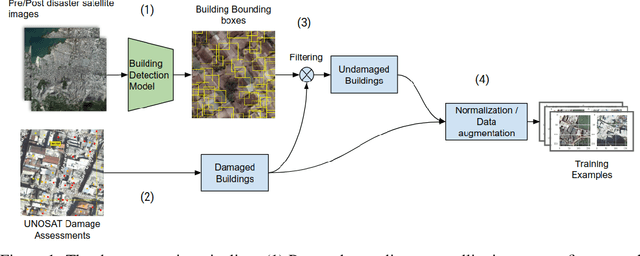

Abstract: To respond to disasters such as earthquakes, wildfires, and armed conflicts, humanitarian organizations require accurate and timely data in the form of damage assessments, which indicate which buildings and population centers have been most affected. Recent research combines machine learning with remote sensing to automatically extract such information from satellite imagery, reducing manual labor and turn-around time. A major impediment to using machine learning methods in real disaster response scenarios is the difficulty of obtaining a sufficient amount of labeled data to train a model for an unfolding disaster. This paper shows a novel application of semi-supervised learning (SSL) to train models for damage assessment with a minimal amount of labeled data and a large amount of unlabeled data. We compare the performance of state-of-the-art SSL methods, including MixMatch and FixMatch, to a supervised baseline for the 2010 Haiti earthquake, 2017 Santa Rosa wildfire, and 2016 armed conflict in Syria. We show how models trained with SSL methods can reach fully supervised performance despite using only a fraction of the labeled data, and we identify areas for further improvement.

Building Damage Detection in Satellite Imagery Using Convolutional Neural Networks

Oct 14, 2019

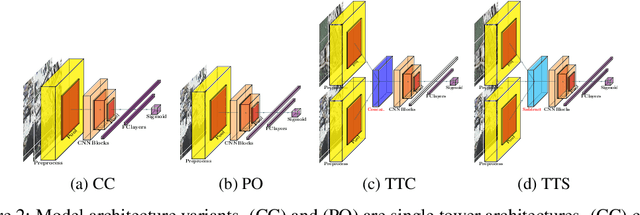

Abstract: In all types of disasters, from earthquakes to armed conflicts, aid workers need accurate and timely data such as damage to buildings and population displacement to mount an effective response. Remote sensing provides this data at an unprecedented scale, but extracting operationalizable information from satellite images is slow and labor-intensive. In this work, we use machine learning to automate the detection of building damage in satellite imagery. We compare the performance of four different convolutional neural network models in detecting damaged buildings in the 2010 Haiti earthquake. We also quantify how well the models will generalize to future disasters by training and testing models on different disaster events.
