Joseph K. Bradley

SPRIGHT: A Fast and Robust Framework for Sparse Walsh-Hadamard Transform

Aug 26, 2015

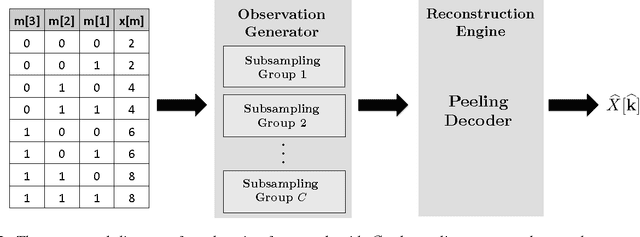

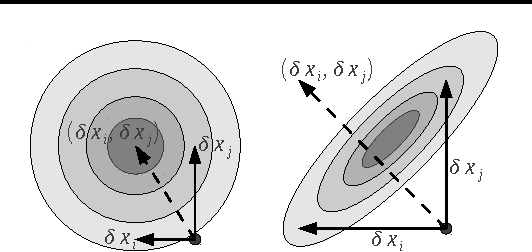

Abstract: We consider the problem of computing the Walsh-Hadamard Transform (WHT) of some $N$-length input vector in the presence of noise, where the $N$-point Walsh spectrum is $K$-sparse with $K = O(N^{\delta})$ scaling sub-linearly in the input dimension $N$ for some $0<\delta<1$. Over the past decade, there has been a resurgence in research related to the computation of the Discrete Fourier Transform (DFT) for some length-$N$ input signal that has a $K$-sparse Fourier spectrum. In particular, through a sparse-graph code design, our earlier work on the Fast Fourier Aliasing-based Sparse Transform (FFAST) algorithm computes the $K$-sparse DFT in time $O(K\log K)$ by taking $O(K)$ noiseless samples. Inspired by the coding-theoretic design framework, Scheibler et al. proposed the Sparse Fast Hadamard Transform (SparseFHT) algorithm that elegantly computes the $K$-sparse WHT in the absence of noise using $O(K\log N)$ samples in time $O(K\log^2 N)$. However, the SparseFHT algorithm explicitly exploits the noiseless nature of the problem, and is not equipped to deal with scenarios where the observations are corrupted by noise. Therefore, a question of critical interest is whether this coding-theoretic framework can be made robust to noise. Further, if the answer is yes, what is the extra price that needs to be paid for being robust to noise? In this paper, we show, quite interestingly, that there is *no extra price* that needs to be paid for being robust to noise other than a constant factor. In other words, we can maintain the same sample complexity $O(K\log N)$ and the computational complexity $O(K\log^2 N)$ as those of the noiseless case, using our SParse Robust Iterative Graph-based Hadamard Transform (SPRIGHT) algorithm.
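To make the problem setting concrete, here is a minimal sketch of the ordinary dense fast WHT, which costs $O(N\log N)$ and reads all $N$ samples. It is not SPRIGHT or SparseFHT; it only shows what a $K$-sparse Walsh spectrum looks like. The function names `fwht` and `sparse_signal` are hypothetical, and the unnormalized transform convention is an assumption rather than the paper's.

```python
def fwht(x):
    """Unnormalized fast Walsh-Hadamard transform of a list of length 2^n."""
    a = list(x)
    n = len(a)
    if n == 0 or n & (n - 1):
        raise ValueError("length must be a power of two")
    h = 1
    while h < n:
        # Butterfly step: combine blocks of size h pairwise.
        for start in range(0, n, 2 * h):
            for i in range(start, start + h):
                u, v = a[i], a[i + h]
                a[i], a[i + h] = u + v, u - v
        h *= 2
    return a


def sparse_signal(n, spectrum):
    """Build the length-n signal whose WHT has the given nonzero coefficients.

    `spectrum` maps a frequency index to its coefficient (illustrative input).
    """
    coeffs = [0.0] * n
    for k, value in spectrum.items():
        coeffs[k] = value
    # The Hadamard matrix is its own inverse up to a factor of 1/n.
    return [v / n for v in fwht(coeffs)]


if __name__ == "__main__":
    x = sparse_signal(16, {3: 2.0, 10: -1.0})  # K = 2 nonzero coefficients, N = 16
    X = fwht(x)
    print([k for k, v in enumerate(X) if abs(v) > 1e-12])
```

The dense transform recovers the two nonzero coefficients but touches every sample; the algorithms described in the abstract aim to do so from $O(K\log N)$ samples instead.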

Parallel Coordinate Descent for L1-Regularized Loss Minimization

May 26, 2011

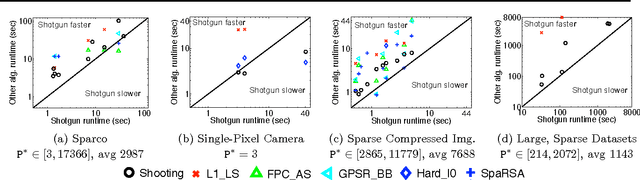

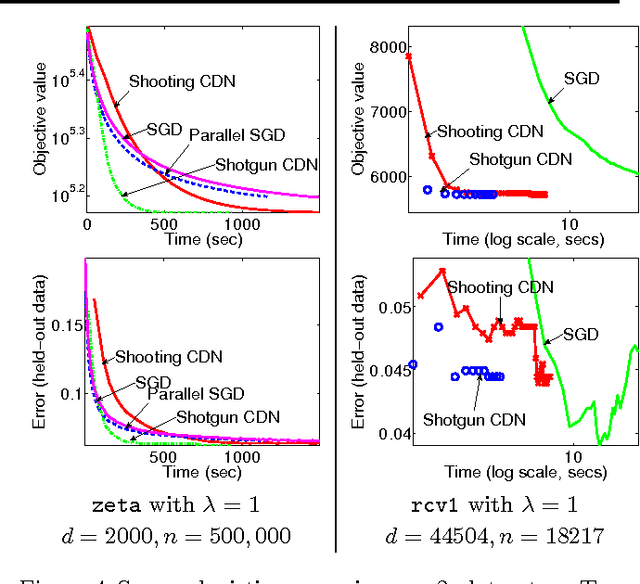

Abstract: We propose Shotgun, a parallel coordinate descent algorithm for minimizing L1-regularized losses. Though coordinate descent seems inherently sequential, we prove convergence bounds for Shotgun which predict linear speedups, up to a problem-dependent limit. We present a comprehensive empirical study of Shotgun for Lasso and sparse logistic regression. Our theoretical predictions on the potential for parallelism closely match behavior on real data. Shotgun outperforms other published solvers on a range of large problems, proving to be one of the most scalable algorithms for L1.
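The idea of updating several coordinates at once can be sketched for the Lasso objective $\tfrac{1}{2}\|Ax - y\|_2^2 + \lambda\|x\|_1$. The code below is an illustrative simulation, not the authors' implementation: each round picks `P` coordinates at random, computes their soft-thresholding updates from the same snapshot of the residual (as parallel workers would), and then applies them together. The function name `shotgun_lasso`, its parameters, and the soft-thresholding form of the update are assumptions made for this sketch.

```python
import random


def soft_threshold(z, t):
    """Shrink z toward zero by t (the proximal step for the L1 penalty)."""
    if z > t:
        return z - t
    if z < -t:
        return z + t
    return 0.0


def shotgun_lasso(A, y, lam, P=2, rounds=200, seed=0):
    """Simulated parallel coordinate descent for 0.5*||Ax - y||^2 + lam*||x||_1.

    A is a list of rows; P coordinates are updated per round from one snapshot.
    """
    rng = random.Random(seed)
    n, d = len(A), len(A[0])
    x = [0.0] * d
    col_sq = [sum(A[i][j] ** 2 for i in range(n)) for j in range(d)]
    residual = list(y)  # equals y - A x for the initial x = 0
    for _ in range(rounds):
        chosen = rng.sample(range(d), min(P, d))
        deltas = {}
        for j in chosen:  # every update reads the same residual snapshot
            if col_sq[j] == 0.0:
                continue
            rho = sum(A[i][j] * residual[i] for i in range(n)) + col_sq[j] * x[j]
            deltas[j] = soft_threshold(rho, lam) / col_sq[j] - x[j]
        for j, delta in deltas.items():  # apply all updates together
            x[j] += delta
            for i in range(n):
                residual[i] -= A[i][j] * delta
    return x


if __name__ == "__main__":
    identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
    print(shotgun_lasso(identity, [3.0, -0.5, 1.5], lam=1.0))
```

With orthogonal columns, as in this toy design, simultaneous updates do not interfere with each other. With strongly correlated columns, a large `P` can make the combined step overshoot, which is consistent with the problem-dependent limit on speedup mentioned in the abstract.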
