Joseph J. LaViola Jr.

AudioMiXR: Spatial Audio Object Manipulation with 6DoF for Sound Design in Augmented Reality

Feb 05, 2025

Abstract: We present AudioMiXR, an augmented reality (AR) interface deployed on a head-mounted display (HMD; Apple Vision Pro), intended to assess how users manipulate virtual audio objects situated in their physical space with six degrees of freedom (6DoF) for 3D sound design. Existing tools for 3D sound design are typically constrained to desktop displays, which may limit spatial awareness of mixing within the execution environment. Using an XR HMD to create soundscapes may provide a real-time test environment for 3D sound design, as modern HMDs can provide precise spatial localization assisted by cross-modal interactions. However, there is no research on design guidelines specific to 6DoF sound design in XR. As a first step toward identifying design-related research directions in this space, we conducted an exploratory study with 27 participants, including both expert and non-expert sound designers. The goal was to derive design lessons that can inform future research avenues in 3D sound design. In a within-subjects study, each participant designed both a music soundscape and a cinematic soundscape. After thematically analyzing participant data, we constructed two design lessons: (1) Proprioception for AR Sound Design, and (2) Balancing Audio-Visual Modalities in AR GUIs. Additionally, based on our results, we identify application domains that can benefit most from 6DoF sound design.

A Sketch-Based System for Human-Guided Constrained Object Manipulation

Nov 17, 2019

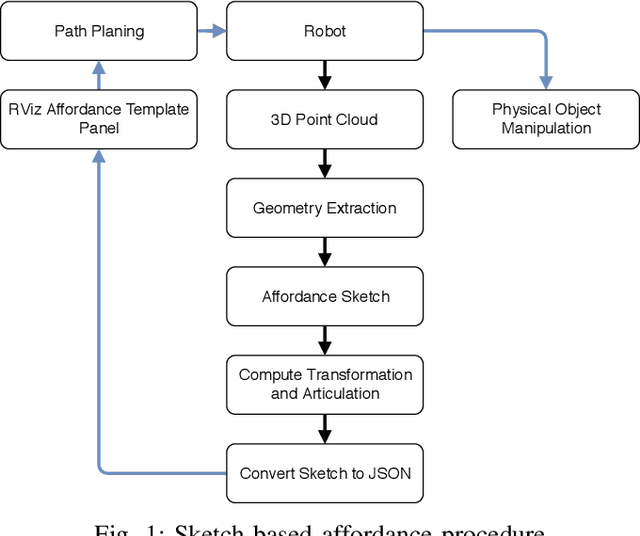

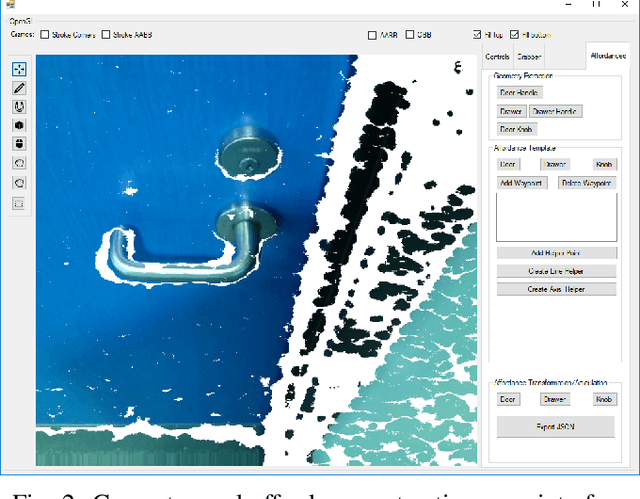

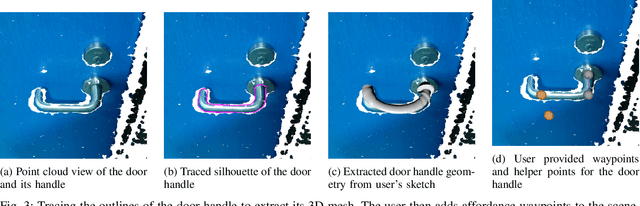

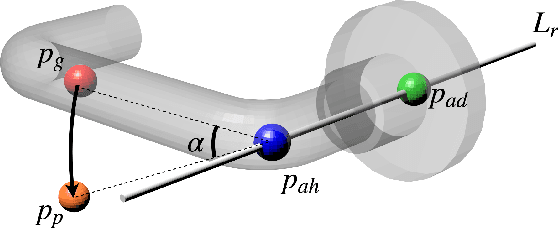

Abstract: In this paper, we present an easy-to-use sketch-based interface to extract geometries and generate affordance files from 3D point clouds for robot-object interaction tasks. With our system, even novice users can perform robot task planning using sketch tools. Our focus is on employing a human-in-the-loop approach to help generate more accurate affordance templates and to guide the robot through the task execution process. Since we do not employ any unsupervised learning to generate affordance templates, our system is much faster and more versatile for template generation. Our system is based on extracting geometries for generalized cylindrical and cuboid shapes; once the geometries are extracted, affordances are generated for objects by applying simple sketches. We evaluated our technique by asking users to define affordances with sketches on 3D scenes of a door handle and a drawer handle; the robot then used the resulting affordance template files to turn the door handle and open the drawer.
