Joseph Clements

Rallying Adversarial Techniques against Deep Learning for Network Security

Mar 27, 2019

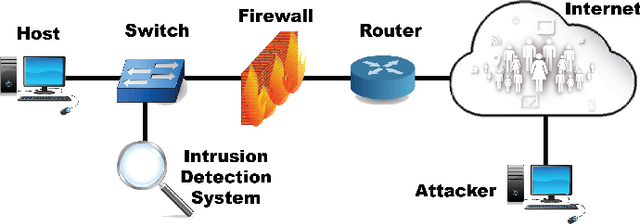

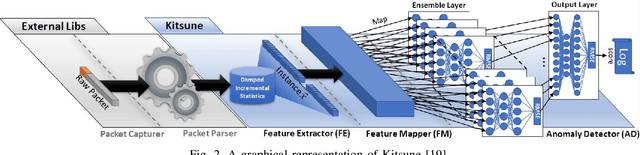

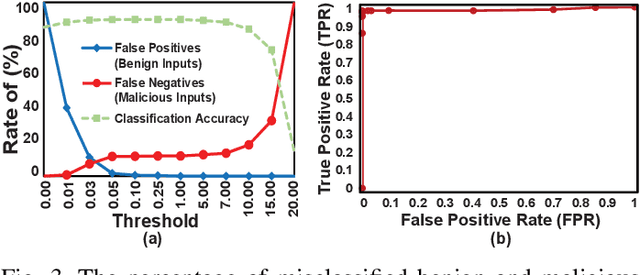

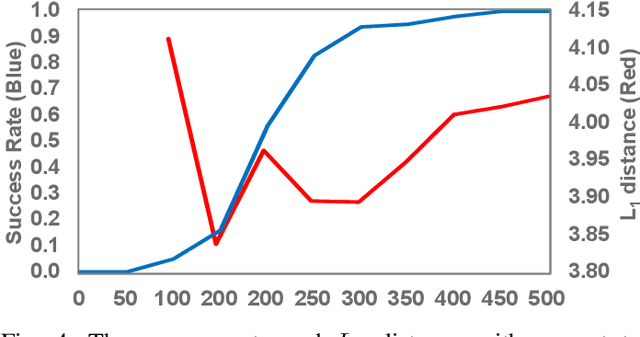

Abstract: Recent advances in artificial intelligence and the increasing need for powerful defensive measures in network security have led to the adoption of deep learning approaches in network intrusion detection systems (NIDS). These methods have achieved superior performance against conventional network attacks, enabling the deployment of practical security systems in unique and dynamic sectors. Unfortunately, adversarial machine learning has recently shown that deep learning models are inherently vulnerable to adversarial modifications of their input data. Because of this susceptibility, the deep learning models deployed to power a network defense could in fact be the weakest entry point for compromising a network system. In this paper, we show that by modifying on average as little as 1.38 of the input features, an adversary can generate malicious inputs that effectively fool a deep learning-based NIDS. Therefore, when designing such systems, it is crucial to consider performance not only from the conventional network security perspective but also from that of adversarial machine learning.

Hardware Trojan Attacks on Neural Networks

Jun 14, 2018

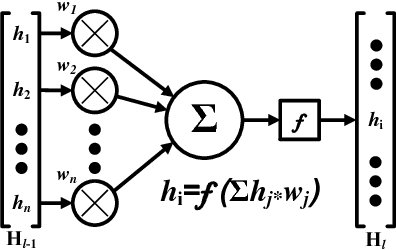

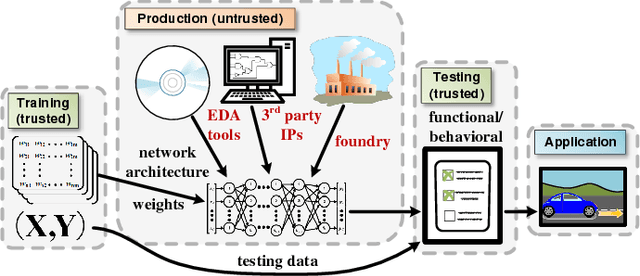

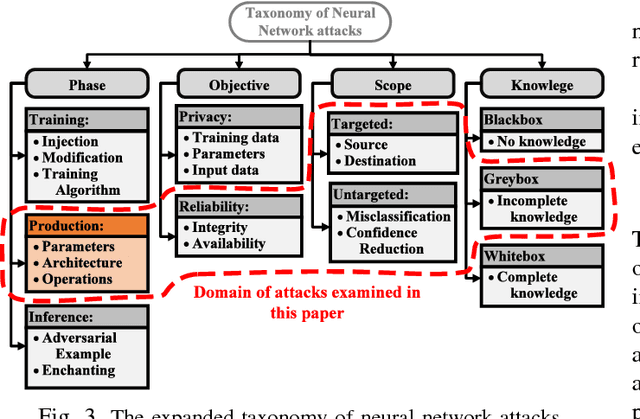

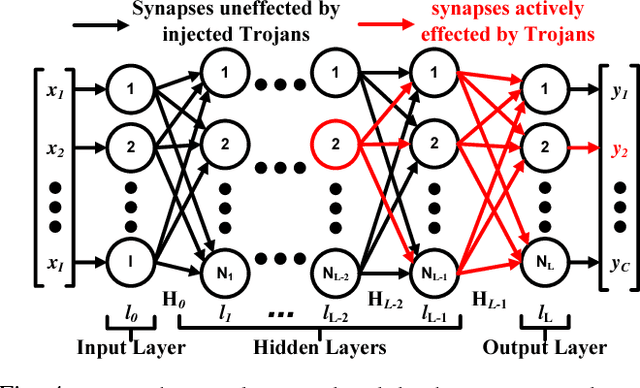

Abstract: With the rising popularity of machine learning and the ever-increasing demand for computational power, there is a growing need for hardware-optimized implementations of neural networks and other machine learning models. As the technology evolves, it is also plausible that machine learning or artificial intelligence will soon be built into consumer electronic products and military equipment in the form of well-trained models. Unfortunately, the modern fabless business model of manufacturing hardware, while economical, leads to security deficiencies throughout the supply chain. In this paper, we illuminate these security issues by introducing hardware Trojan attacks on neural networks, expanding the current taxonomy of neural network security to incorporate attacks of this nature. To aid in this, we develop a novel framework for inserting malicious hardware Trojans into the implementation of a neural network classifier. We evaluate the capabilities of the adversary in this setting by implementing the attack algorithm on convolutional neural networks while controlling a variety of parameters available to the adversary. Our experimental results show that the proposed algorithm can effectively classify a selected input trigger as a specified class on the MNIST dataset by injecting hardware Trojans into, on average, $0.03\%$ of the neurons in the 5th hidden layer of arbitrary 7-layer convolutional neural networks, while remaining undetectable on the test data. Finally, we discuss potential defenses to protect neural networks against hardware Trojan attacks.
