Jose R. Beltran

Permutation Invariant Recurrent Neural Networks for Sound Source Tracking Applications

Jun 14, 2023

Abstract:Many multi-source localization and tracking models based on neural networks use one or several recurrent layers at their final stages to track the movement of the sources. Conventional recurrent neural networks (RNNs), such as long short-term memory networks (LSTMs) or gated recurrent units (GRUs), take a vector as their input and use another vector to store their state. However, this approach results in the information from all the sources being contained in a single ordered vector, which is not optimal for permutation-invariant problems such as multi-source tracking. In this paper, we present a new recurrent architecture that uses unordered sets to represent both its input and its state and that is invariant to permutations of the input set and equivariant to permutations of the state set. Hence, the information of every sound source is represented in an individual embedding, and the new estimates are assigned to the tracked trajectories regardless of their order.
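The two symmetry properties named in the abstract can be illustrated with a small sketch. This is not the architecture from the paper: it swaps in a plain dot-product attention update with fixed (not learned) mixing weights, and the function name `set_recurrent_step` is hypothetical. It only shows what "invariant to the input order, equivariant to the state order" means in practice.

```python
import math

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def set_recurrent_step(state_set, input_set):
    """One update of a set-valued state from a set-valued input.

    Each state embedding attends over all input embeddings (softmax of
    dot products), so the result does not depend on the input order, and
    reordering the state set reorders the output in the same way.
    Illustrative assumption, not the paper's model.
    """
    new_state = []
    for s in state_set:
        scores = [dot(s, x) for x in input_set]
        m = max(scores)  # subtract the max for numerical stability
        weights = [math.exp(sc - m) for sc in scores]
        total = sum(weights)
        weights = [w / total for w in weights]
        # Attention-weighted summary of the inputs for this tracked source.
        context = [sum(w * x[d] for w, x in zip(weights, input_set))
                   for d in range(len(s))]
        # Hypothetical fixed mixing of old state and context (no learned weights).
        new_state.append([math.tanh(0.5 * s_d + 0.5 * c_d)
                          for s_d, c_d in zip(s, context)])
    return new_state
```

Reversing `input_set` leaves the output unchanged up to floating-point rounding, while reversing `state_set` reverses the output rows.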

Symbolic Music Structure Analysis with Graph Representations and Changepoint Detection Methods

Mar 24, 2023

Abstract:Music Structure Analysis is an open research task in Music Information Retrieval (MIR). Several past works have attempted to segment music in the audio and symbolic domains; however, the identification and segmentation of music structure at different levels is still an open research problem in this area. In this work we propose three methods, two of which are novel graph-based algorithms, that aim to segment symbolic music by its form or structure: Norm, G-PELT and G-Window. We performed an ablation study with two public datasets that have different forms or structures in order to compare these methods, varying their parameter values and comparing their performance across different music styles. We have found that encoding symbolic music with graph representations and computing the novelty of the adjacency matrices obtained from the graphs represents the structure of symbolic music pieces well without the need to extract features from it. We are able to detect the boundaries with an online unsupervised changepoint detection method with an $F_1$ of 0.5640 for a 1-bar tolerance in one of the public datasets that we used for testing our methods. We also provide the performance results of the algorithms at different levels of structure (high, medium and low) to show how the parameters of the proposed methods have to be adjusted depending on the level. We added the best-performing method with its parameters for each structure level to musicaiz, an open-source Python package, to facilitate the reproducibility and usability of this work. We hope that these methods can be used to improve other MIR tasks such as music generation with structure, music classification or key change detection.
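The idea of "computing the novelty" of a square matrix can be sketched with a checkerboard kernel slid along the main diagonal, a classic technique from audio structure analysis. Whether Norm, G-PELT or G-Window use this exact kernel is not stated in the abstract, so the code below is an assumption for illustration only; `novelty_curve` and `half_width` are hypothetical names.

```python
def novelty_curve(matrix, half_width):
    """Slide a checkerboard kernel along the main diagonal of a square matrix.

    High values mark positions where the matrix changes from one
    block-like region to another, i.e. candidate section boundaries.
    """
    n = len(matrix)
    curve = []
    for c in range(n):
        value = 0.0
        for di in range(-half_width, half_width):
            for dj in range(-half_width, half_width):
                i, j = c + di, c + dj
                if 0 <= i < n and 0 <= j < n:
                    # +1 inside the two same-side quadrants, -1 across them.
                    sign = 1.0 if (di < 0) == (dj < 0) else -1.0
                    value += sign * matrix[i][j]
        curve.append(value)
    return curve

# Toy adjacency-like matrix with two blocks of four nodes each.
toy = [[1.0 if (i < 4) == (j < 4) else 0.0 for j in range(8)] for i in range(8)]
curve = novelty_curve(toy, half_width=2)
boundary = curve.index(max(curve))  # index of the strongest novelty peak
```

On the toy matrix the peak falls at the first index of the second block, which is where the boundary lies.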

A Survey on Artificial Intelligence for Music Generation: Agents, Domains and Perspectives

Nov 03, 2022

Abstract:Music is one of the intelligences in Gardner's theory of multiple intelligences. How humans perceive and understand music is still being studied and is crucial to developing artificial intelligence models that imitate such processes. Music generation with Artificial Intelligence is an emerging field that has been gaining much attention in recent years. In this paper, we describe how humans compose music and how new AI systems could imitate this process by comparing past and recent advances in the field with music composition techniques. To understand how AI models and algorithms generate music and the potential applications that might appear in the future, we explore, analyze and describe the agents that take part in the music generation process: the datasets, models, interfaces, the users and the generated music. We mention possible applications that might benefit from this field and we also propose new trends and research directions that could be explored in the future.

musicaiz: A Python Library for Symbolic Music Generation, Analysis and Visualization

Sep 16, 2022

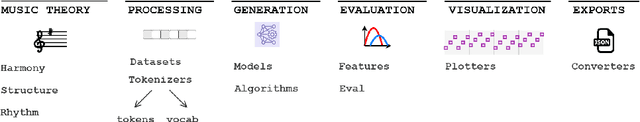

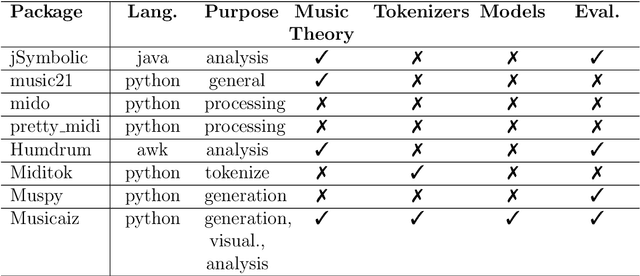

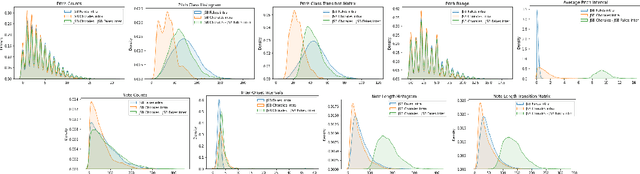

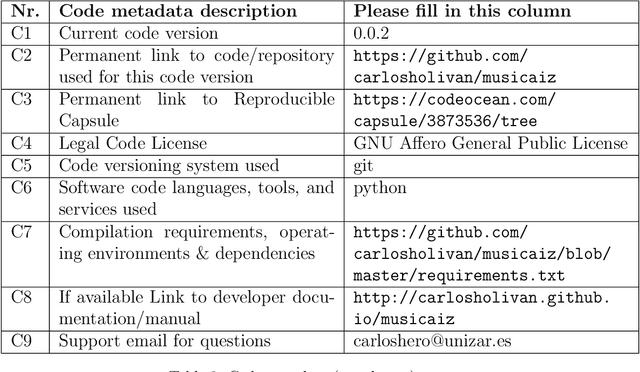

Abstract:In this article, we present musicaiz, an object-oriented library for analyzing, generating and evaluating symbolic music. The submodules of the package allow the user to create symbolic music data from scratch, build algorithms to analyze symbolic music, encode MIDI data as tokens to train deep learning sequence models, modify existing music data and evaluate music generation systems. The evaluation submodule builds on previous work to objectively measure music generation systems and to be able to reproduce the results of music generation models. The library is publicly available online. We encourage the community to contribute and provide feedback.

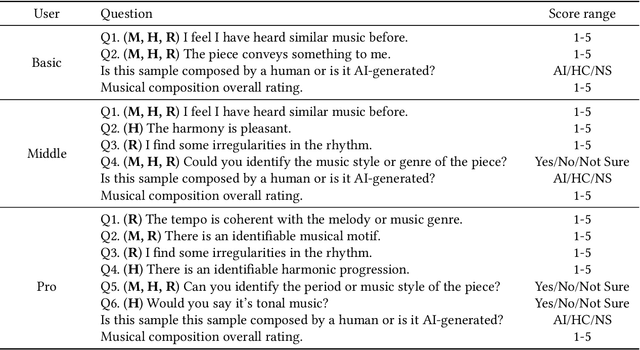

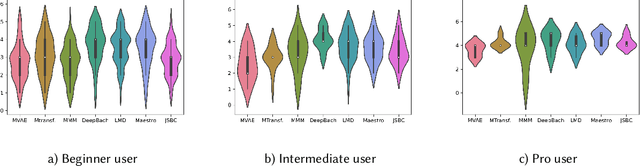

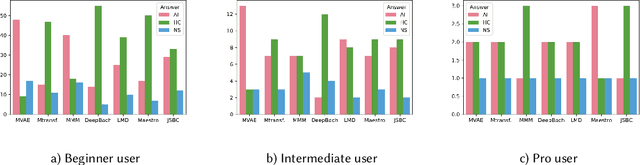

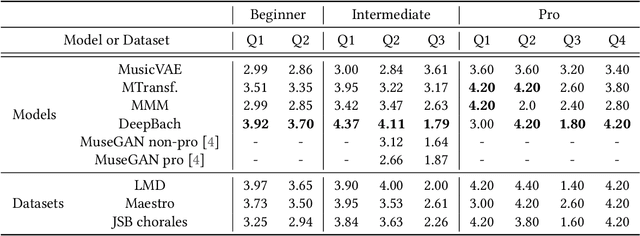

Subjective Evaluation of Deep Learning Models for Symbolic Music Composition

Apr 03, 2022

Abstract:Deep learning models are typically evaluated to measure and compare their performance on a given task. The metrics that are commonly used to evaluate these models are standard metrics that are used for different tasks. In the field of music composition or generation, the standard metrics used in other fields have no clear meaning in terms of music theory. In this paper, we propose a subjective method to evaluate AI-based music composition systems by asking questions related to basic music principles to different levels of users based on their musical experience and knowledge. We use this method to compare state-of-the-art models for music composition with deep learning. We give the results of this evaluation method and we compare the responses of each user level for each evaluated model.

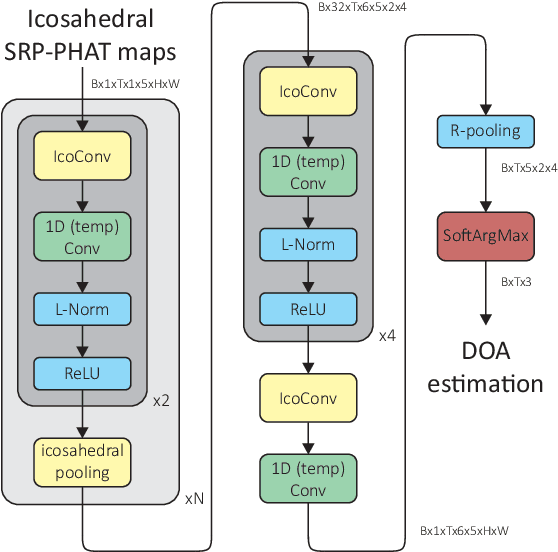

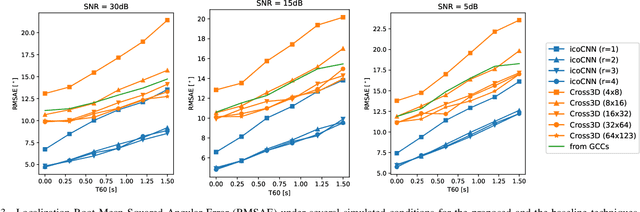

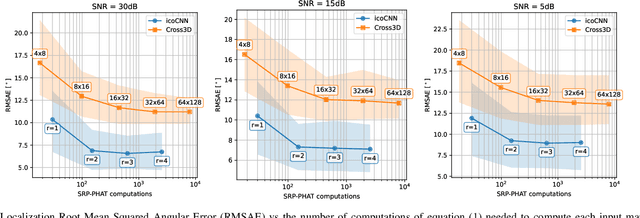

Direction of Arrival Estimation of Sound Sources Using Icosahedral CNNs

Mar 31, 2022

Abstract:In this paper, we present a new model for Direction of Arrival (DOA) estimation of sound sources based on an Icosahedral Convolutional Neural Network (CNN) applied over SRP-PHAT power maps computed from the signals received by a microphone array. This icosahedral CNN is equivariant to the 60 rotational symmetries of the icosahedron, which represent a good approximation of the continuous space of spherical rotations, and can be implemented using standard 2D convolutional layers, having a lower computational cost than most of the spherical CNNs. In addition, instead of using fully connected layers after the icosahedral convolutions, we propose a new soft-argmax function that can be seen as a differentiable version of the argmax function and allows us to solve the DOA estimation as a regression problem interpreting the output of the convolutional layers as a probability distribution. We prove that using models that fit the equivariances of the problem allows us to outperform other state-of-the-art models with a lower computational cost and more robustness, obtaining root mean square localization errors lower than $10^\circ$ even in scenarios with a reverberation time $T_{60}$ of 1.5 s.
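A soft-argmax is commonly written as the expected coordinate under a softmax of the scores. The sketch below follows that generic definition; the exact formulation used in the paper (for example, how it handles directions on the sphere) is not given in the abstract, and the `beta` sharpness parameter is an assumption added here.

```python
import math

def soft_argmax(scores, coords, beta=1.0):
    """Expected coordinate under softmax(beta * scores).

    scores: one value per grid point (e.g. network output per direction).
    coords: one coordinate vector per grid point.
    beta:   hypothetical sharpness factor; larger values approach argmax.
    """
    m = max(scores)  # subtract the max for numerical stability
    weights = [math.exp(beta * (s - m)) for s in scores]
    total = sum(weights)
    dim = len(coords[0])
    return [sum(w * c[d] for w, c in zip(weights, coords)) / total
            for d in range(dim)]
```

Because the output is a weighted average, it is differentiable with respect to the scores and can fall between grid points, which is what makes a regression loss applicable. When the coordinates are angles, averaging them directly breaks at the wrap-around, so averaging unit vectors and renormalizing is a common alternative.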

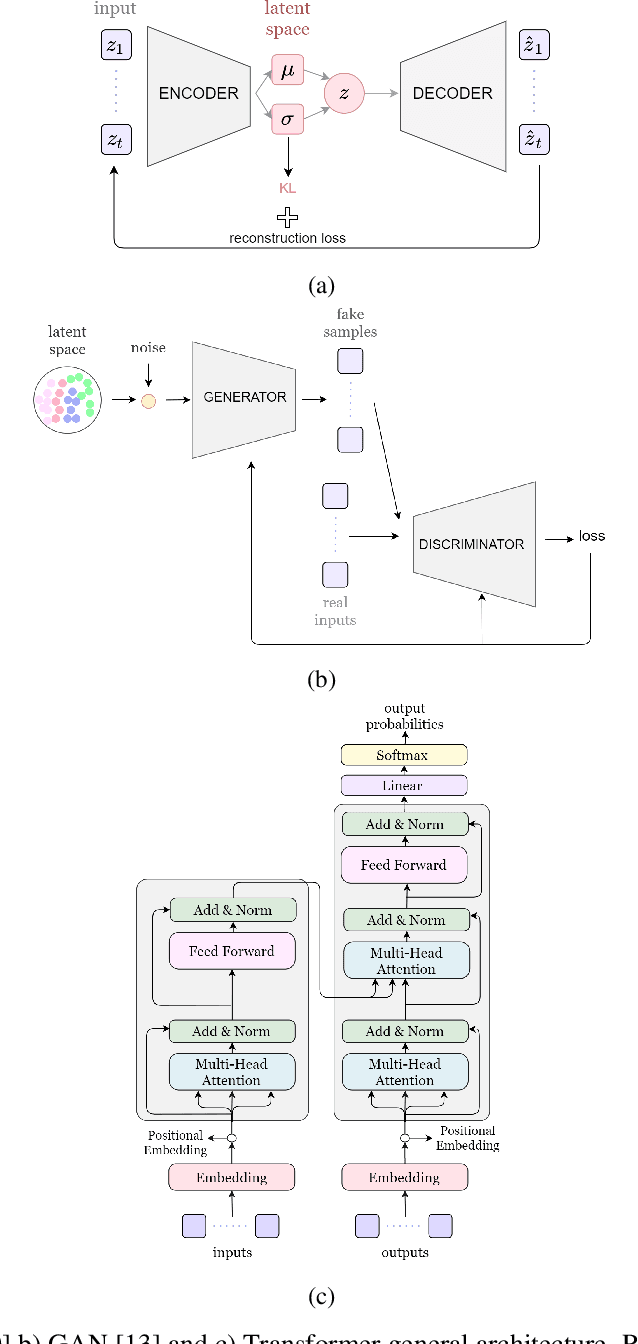

Music Composition with Deep Learning: A Review

Sep 07, 2021

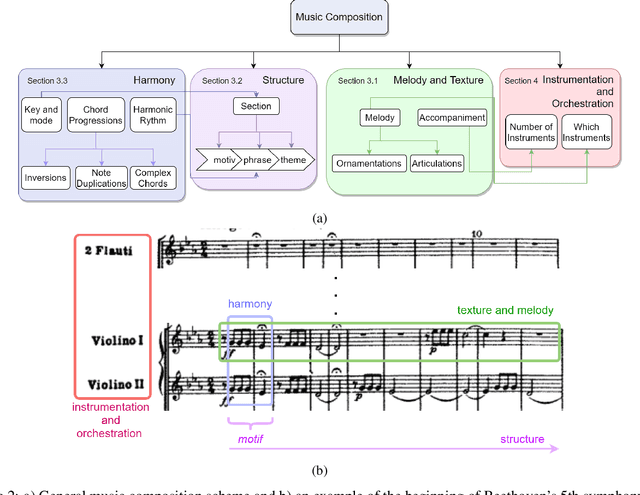

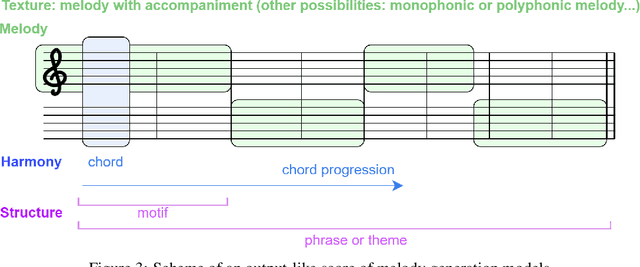

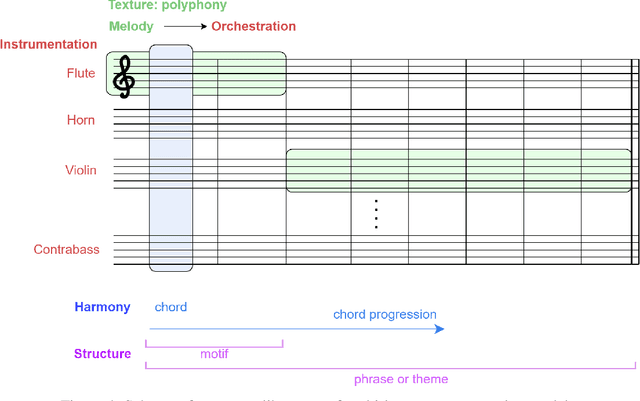

Abstract:Generating a complex work of art such as a musical composition requires exhibiting true creativity that depends on a variety of factors related to the hierarchy of musical language. Music generation has been approached with algorithmic methods and, more recently, with Deep Learning models that are also used in other fields such as Computer Vision. In this paper we put into context the existing relationships between AI-based music composition models and human musical composition and creativity processes. We give an overview of recent Deep Learning models for music composition and we compare these models to the music composition process from a theoretical point of view. We have tried to answer some of the most relevant open questions for this task by analyzing, among other aspects, the ability of current Deep Learning models to generate music with creativity and the similarity between AI and human composition processes.

Timbre Classification of Musical Instruments with a Deep Learning Multi-Head Attention-Based Model

Jul 13, 2021

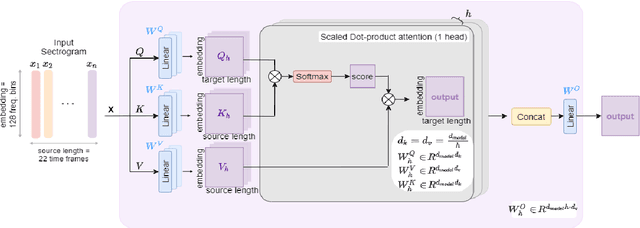

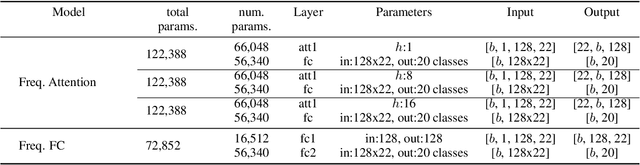

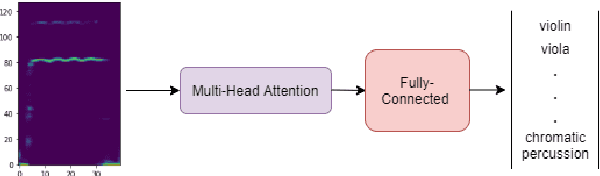

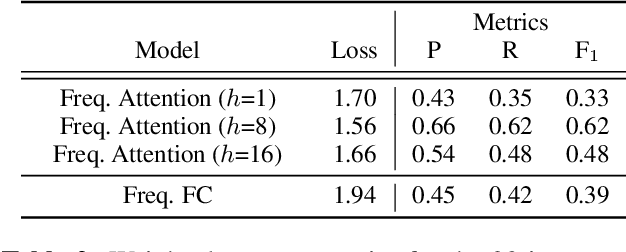

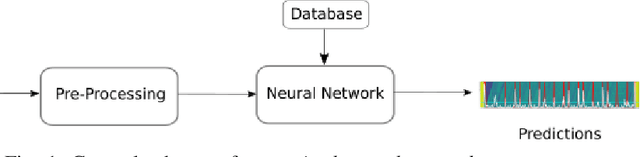

Abstract:The aim of this work is to define a model based on deep learning that is able to identify different instrument timbres with as few parameters as possible. For this purpose, we have worked with classical orchestral instruments played with different dynamics, which are part of a few instrument families and which play notes in the same pitch range. It has been possible to assess the ability to classify instruments by timbre even if the instruments are playing the same note with the same intensity. The network employed uses a multi-head attention mechanism, with 8 heads and a dense network at the output taking as input the log-mel magnitude spectrograms of the sound samples. This network allows the identification of 20 instrument classes of the classical orchestra, achieving an overall $F_1$ value of 0.62. An analysis of the weights of the attention layer has been performed and the confusion matrix of the model is presented, allowing us to assess the ability of the proposed architecture to distinguish timbre and to establish the aspects on which future work should focus.

Music Boundary Detection using Convolutional Neural Networks: A comparative analysis of combined input features

Aug 17, 2020

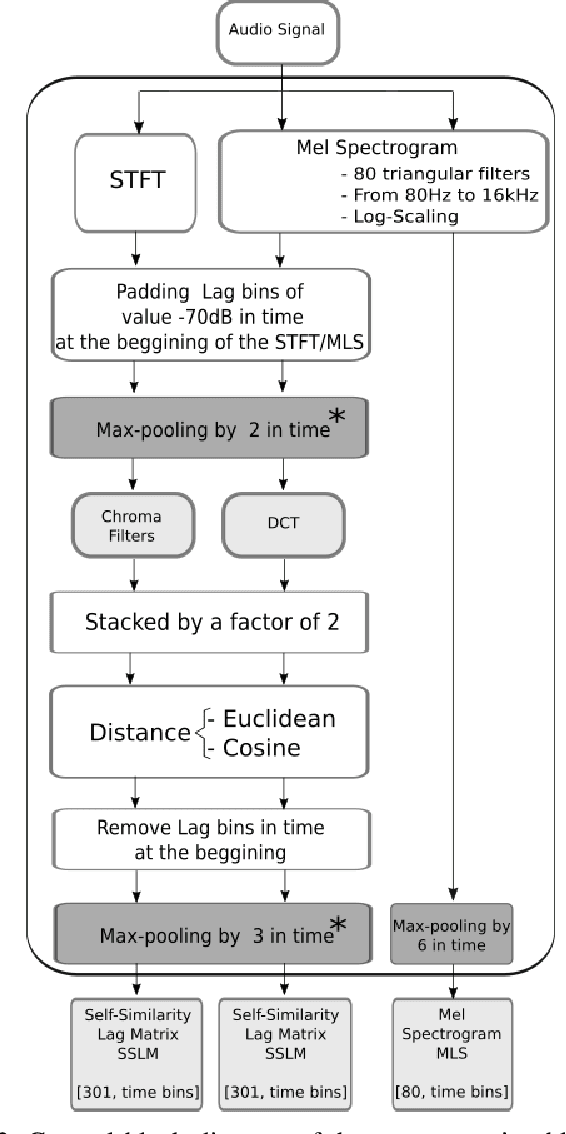

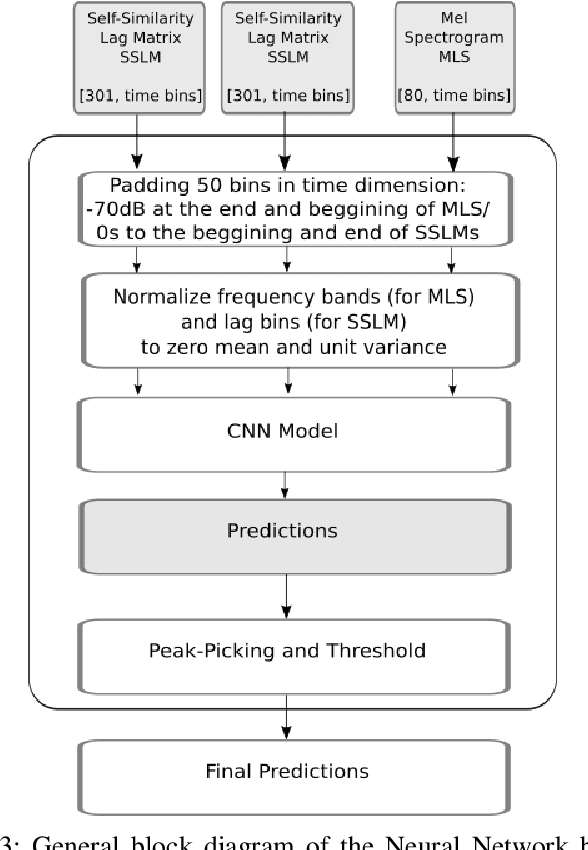

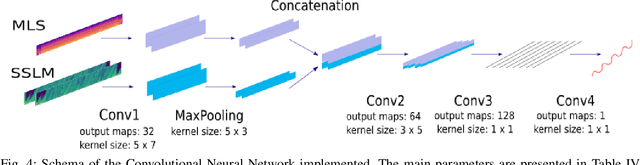

Abstract:The analysis of the structure of musical pieces is a task that remains a challenge for Artificial Intelligence, especially in the field of Deep Learning. It requires prior identification of the structural boundaries of the music pieces. This structural boundary analysis has recently been studied with unsupervised methods and end-to-end techniques such as Convolutional Neural Networks (CNN) using Mel-Scaled Log-magnitude Spectrogram features (MLS), Self-Similarity Matrices (SSM) or Self-Similarity Lag Matrices (SSLM) as inputs and trained with human annotations. Several studies have been published, divided into unsupervised and end-to-end methods, in which pre-processing is done in different ways, using different distance metrics and audio characteristics, so a generalized pre-processing method to compute model inputs is missing. The objective of this work is to establish a general method of pre-processing these inputs by comparing the inputs calculated from different pooling strategies, distance metrics and audio characteristics, also taking into account the computing time to obtain them. We also establish the most effective combination of inputs to be delivered to the CNN in order to establish the most efficient way to extract the limits of the structure of the music pieces. With an adequate combination of input matrices and pooling strategies we obtain a measurement accuracy $F_1$ of 0.411 that outperforms the current one obtained under the same conditions.
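The pre-processing chain mentioned above (pooling, a distance metric, a self-similarity matrix) can be sketched as follows. The choices here, max pooling along time and cosine distance, are only one of the combinations the abstract says were compared; the pooling factor and function names are hypothetical, and frames are assumed to be non-zero vectors.

```python
import math

def max_pool(frames, factor):
    """Max-pool a list of feature frames along time by an integer factor."""
    pooled = []
    for start in range(0, len(frames) - factor + 1, factor):
        block = frames[start:start + factor]
        pooled.append([max(col) for col in zip(*block)])
    return pooled

def cosine_distance(a, b):
    """1 - cosine similarity; assumes neither vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / (na * nb)

def self_similarity_matrix(frames):
    """Pairwise distance between every pair of (pooled) frames."""
    return [[cosine_distance(a, b) for b in frames] for a in frames]

# Toy "spectrogram": four frames with two frequency bins each.
frames = [[1.0, 0.0], [1.0, 0.0], [0.0, 1.0], [0.0, 1.0]]
ssm = self_similarity_matrix(max_pool(frames, factor=2))
```

In the toy example the two pooled frames are orthogonal, so the matrix has zeros on the diagonal and ones elsewhere.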

Robust Sound Source Tracking Using SRP-PHAT and 3D Convolutional Neural Networks

Jun 16, 2020

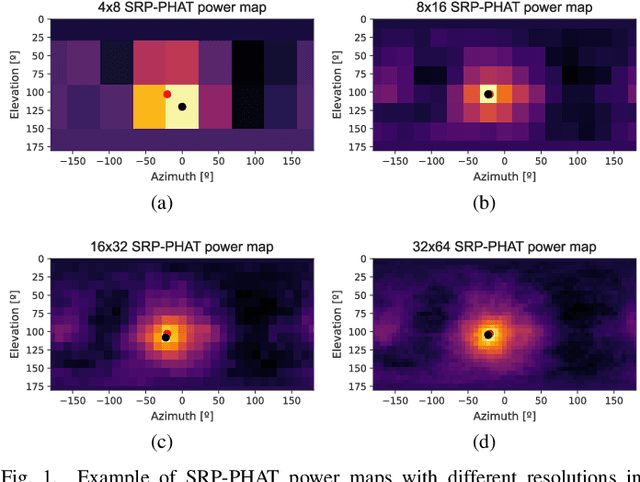

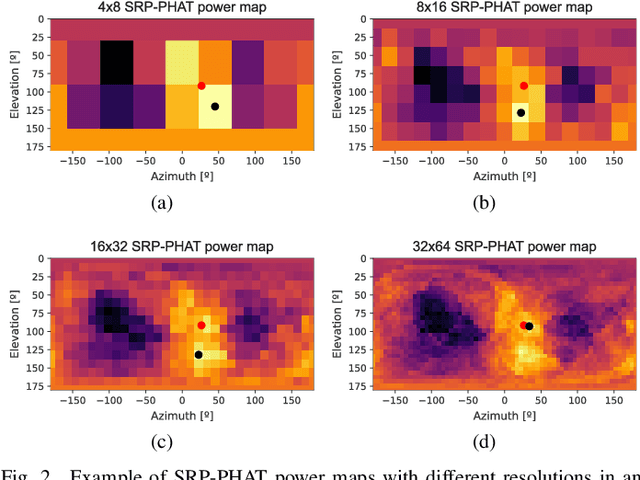

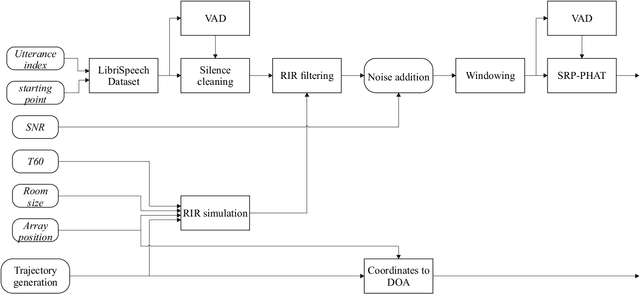

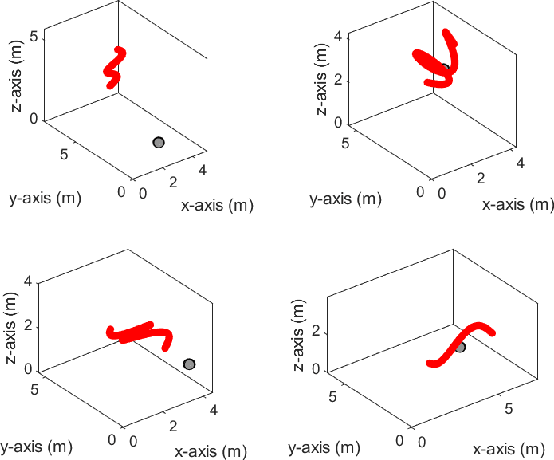

Abstract:In this paper, we present a new sound source DOA estimation and tracking system based on the well-known SRP-PHAT algorithm and a three-dimensional Convolutional Neural Network. It uses SRP-PHAT power maps as input features of a fully convolutional causal architecture that uses 3D convolutional layers to accurately track a sound source even in highly reverberant scenarios where most state-of-the-art techniques fail. Unlike previous methods, since we do not use bidirectional recurrent layers and all our convolutional layers are causal in the time dimension, our system is feasible for real-time applications and provides a new DOA estimate for each new SRP-PHAT map. To train the model, we introduce a new procedure to simulate random trajectories as they are needed during training, equivalent to an infinite-size dataset with high flexibility to modify its acoustical conditions such as the reverberation time. We use both acoustical simulations over a large range of reverberation times and the actual recordings of the LOCATA dataset to prove the robustness of our system and its good performance even when using low-resolution SRP-PHAT maps.
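Causality in the time dimension means that the output at frame t uses only frames up to t, which is typically achieved by padding only on the past side. The sketch below shows this for a one-dimensional signal; it is a simplification of the 3D convolutions described in the abstract, not the authors' implementation, and `causal_conv1d` is a hypothetical name.

```python
def causal_conv1d(signal, kernel):
    """Causal 1D convolution (cross-correlation form, as in most DL frameworks).

    output[t] depends only on signal[t - k + 1 .. t], where k = len(kernel).
    The signal is zero-padded with k - 1 samples on the past side only.
    """
    k = len(kernel)
    padded = [0.0] * (k - 1) + list(signal)
    return [sum(kernel[j] * padded[t + j] for j in range(k))
            for t in range(len(signal))]

out = causal_conv1d([1.0, 2.0, 3.0, 4.0], [0.5, 0.5])
# out == [0.5, 1.5, 2.5, 3.5]
```

Since no future frame enters any output, a new estimate can be emitted as soon as each new input frame arrives.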
