Jose L. Pons

Robot-mediated physical Human-Human Interaction in Neurorehabilitation: a position paper

Jul 23, 2025

Abstract: Neurorehabilitation conventionally relies on the interaction between a patient and a physical therapist. Robotic systems can improve and enrich the physical feedback provided to patients after neurological injury, but they under-utilize the adaptability and clinical expertise of trained therapists. In this position paper, we advocate for a novel approach that integrates the therapist's clinical expertise and nuanced decision-making with the strength, accuracy, and repeatability of robotics: Robot-mediated physical Human-Human Interaction. This framework, which enables two individuals to physically interact through robotic devices, has been studied across diverse research groups and has recently emerged as a promising link between conventional manual therapy and rehabilitation robotics, harmonizing the strengths of both approaches. This paper presents the rationale of a multidisciplinary team (including engineers, doctors, and physical therapists) for conducting research that uses a unified taxonomy to describe robot-mediated rehabilitation, a framework of interaction based on social psychology, and a technological approach that makes robotic systems seamless facilitators of natural human-human interaction.

Deep-Learning Control of Lower-Limb Exoskeletons via simplified Therapist Input

Dec 10, 2024

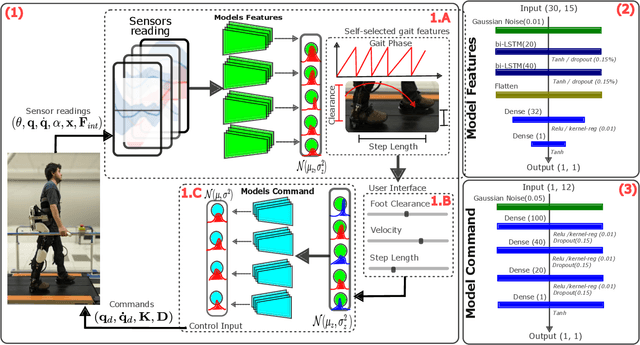

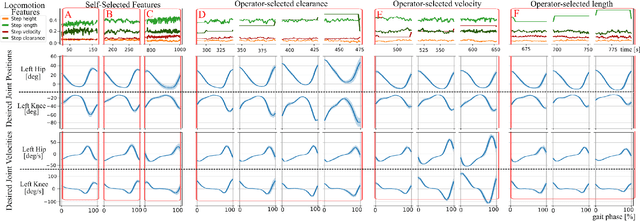

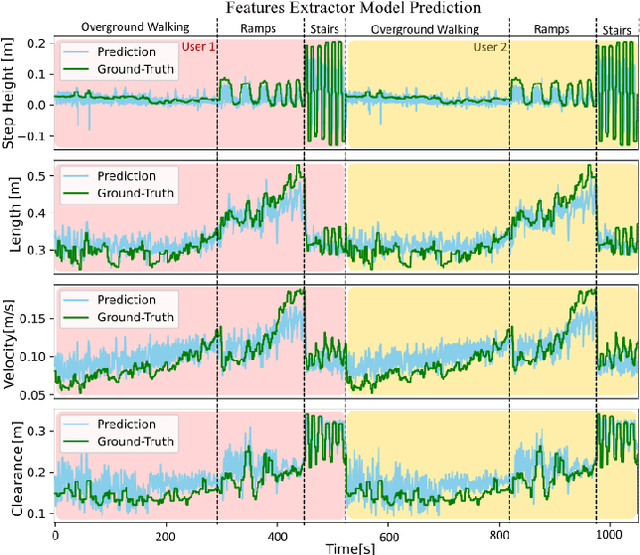

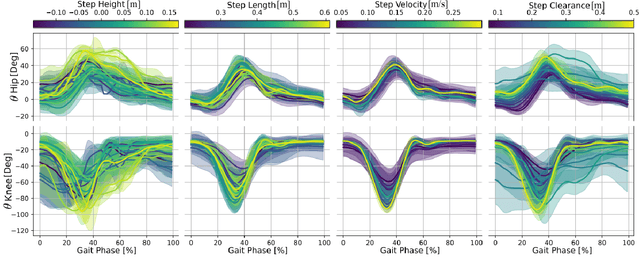

Abstract: Partial-assistance exoskeletons hold significant potential for gait rehabilitation by promoting active participation during (re)learning of normative walking patterns. Typically, the control of interaction torques in partial-assistance exoskeletons relies on a hierarchical control structure. These approaches require extensive calibration because of the complexity of the controller and the need for user-specific parameter tuning, especially for activities such as stair or ramp navigation. To address these limitations of hierarchical control, this work proposes a three-step, data-driven approach: (1) using recent sensor data to probabilistically infer locomotion features (landing step length, landing step height, walking velocity, step clearance, and gait phase), (2) allowing therapists to modify these features via a user interface, and (3) using the adjusted locomotion features to predict the desired joint posture and to set the stiffness of a spring-damper model according to the prediction uncertainty. We evaluated the proposed approach with two healthy participants performing treadmill walking and stair ascent and descent at varying speeds, with and without external modification of the gait features through the user interface. Results showed that kinematics varied according to the selected gait characteristics and that interaction power was negative across the different conditions, suggesting that the exoskeleton provided assistance.
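Steps (2) and (3) can be illustrated with a minimal sketch of a joint-level spring-damper law whose stiffness shrinks as the posture prediction becomes less certain. The abstract does not state how therapist edits are applied or how uncertainty maps to stiffness, so the feature names, the override helper `apply_therapist_overrides`, the inverse-quadratic mapping in `stiffness_from_uncertainty`, and all parameter values below are hypothetical assumptions, not the authors' implementation.

```python
"""Hypothetical sketch: uncertainty-scaled spring-damper assistance at one joint."""

# Assumed names for the locomotion features listed in the abstract.
GAIT_FEATURES = ("landing_step_length", "landing_step_height",
                 "walking_velocity", "step_clearance", "gait_phase")


def apply_therapist_overrides(inferred, overrides):
    """Step (2), assumed form: therapist-supplied values replace inferred features."""
    unknown = set(overrides) - set(GAIT_FEATURES)
    if unknown:
        raise KeyError(f"unknown gait features: {sorted(unknown)}")
    adjusted = dict(inferred)
    adjusted.update(overrides)
    return adjusted


def stiffness_from_uncertainty(sigma, k_max, sigma_ref):
    """Assumed mapping: full stiffness k_max when the predicted joint posture is
    certain (sigma = 0), half of k_max at sigma = sigma_ref, tending to zero beyond."""
    return k_max / (1.0 + (sigma / sigma_ref) ** 2)


def interaction_torque(q_des, q, dq, sigma, k_max, sigma_ref, damping):
    """Spring-damper torque pulling joint angle q (rad) toward q_des (rad)."""
    k = stiffness_from_uncertainty(sigma, k_max, sigma_ref)
    return k * (q_des - q) - damping * dq


if __name__ == "__main__":
    features = {"landing_step_length": 0.45, "landing_step_height": 0.0,
                "walking_velocity": 0.8, "step_clearance": 0.05, "gait_phase": 0.3}
    adjusted = apply_therapist_overrides(features, {"landing_step_length": 0.55})
    # In the described approach, q_des and sigma would come from a learned
    # posture predictor fed with `adjusted`; fixed numbers stand in for it here.
    tau = interaction_torque(q_des=0.50, q=0.40, dq=0.2, sigma=0.05,
                             k_max=60.0, sigma_ref=0.1, damping=2.0)
    print(adjusted["landing_step_length"], round(tau, 3))
```

The design intent sketched here is that a confident prediction yields firm guidance, while an uncertain one (for example, during an unfamiliar stair transition) lets the user move more freely.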

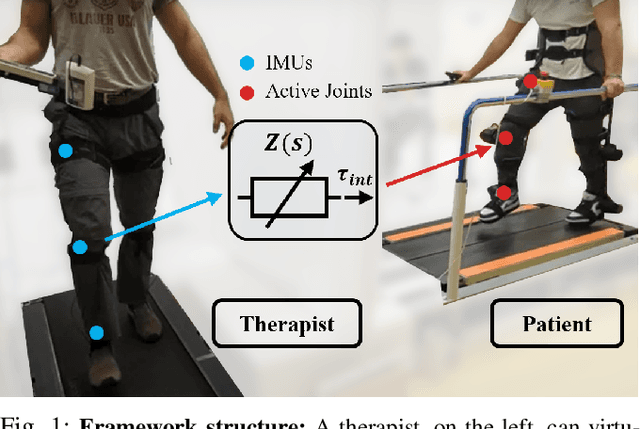

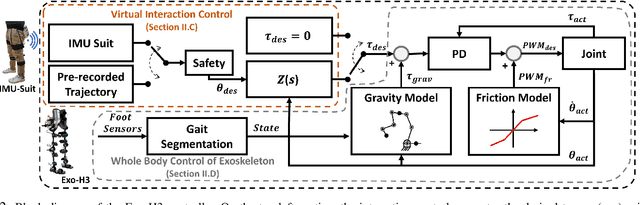

Unidirectional Human-Robot-Human Physical Interaction for Gait Training

Sep 17, 2024

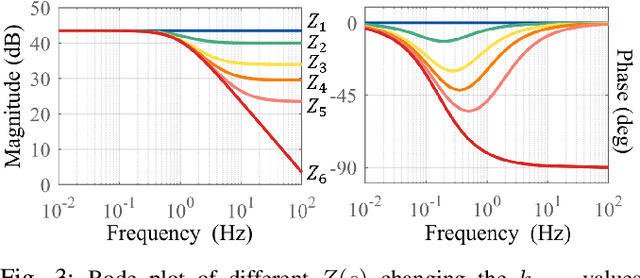

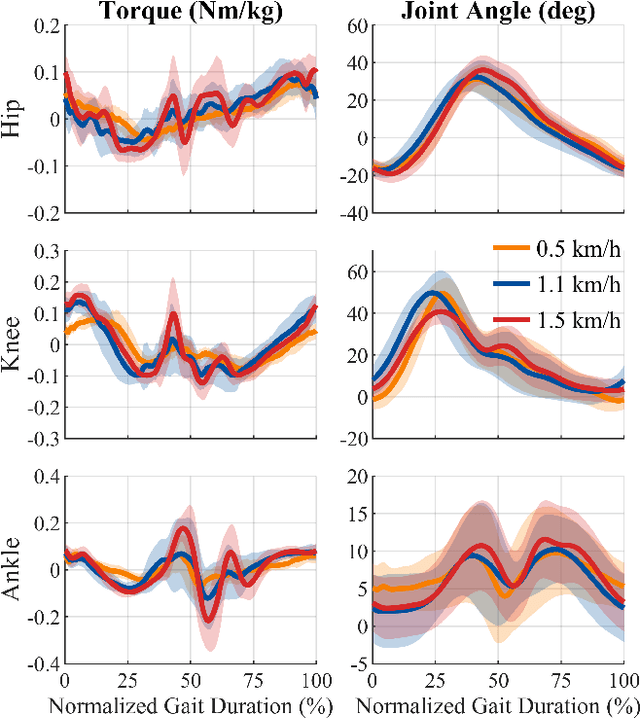

Abstract: This work presents a novel rehabilitation framework designed for a therapist, wearing an inertial measurement unit (IMU) suit, to virtually interact with a lower-limb exoskeleton worn by a patient with motor impairments. This framework aims to harmonize the skills and knowledge of the therapist with the capabilities of the exoskeleton. The therapist can guide the patient's movements by moving their own joints and making real-time adjustments to meet the patient's needs, while reducing the therapist's physical effort. This eliminates the need for the predefined trajectory that the patient must follow in conventional robotic gait training. For the virtual interaction medium between the therapist and the patient, we propose an impedance profile that is stiff at low frequencies and less stiff at high frequencies, and that can be tailored to individual patient needs and different stages of rehabilitation. The desired interaction torque from this medium is commanded to a whole-exoskeleton closed-loop compensation controller. The proposed virtual interaction framework was evaluated with a pair of unimpaired individuals in different teacher-student gait training exercises. Results show that the proposed interaction control effectively transmits haptic cues, informing future applications in rehabilitation scenarios.
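One way to obtain an impedance that is stiff at low frequencies and softer at high frequencies is to pass the therapist-patient joint-angle error through a lag-lead stiffness K(s) = k0 (1 + s/wz) / (1 + s/wp) with wp < wz, whose magnitude falls from k0 at DC to k0 * wp / wz at high frequency. This is an assumed realization for illustration only: the abstract does not give the authors' transfer function, and the class name `LagLeadStiffness`, the backward-Euler discretization, and the parameter values are hypothetical.

```python
"""Hypothetical virtual coupling: stiff at low frequency, softer at high frequency."""


def stiffness_magnitude(omega, k0, wz, wp):
    """|K(j*omega)| for the assumed K(s) = k0 * (1 + s/wz) / (1 + s/wp)."""
    s = 1j * omega
    return abs(k0 * (1 + s / wz) / (1 + s / wp))


class LagLeadStiffness:
    """Discrete-time K(s) using the backward-Euler substitution s ~ (1 - z^-1) / dt.

    Input: therapist and patient joint angles (rad). Output: desired interaction
    torque (N*m) that would be sent to the exoskeleton's torque controller.
    """

    def __init__(self, k0, wz, wp, dt):
        if not 0 < wp < wz:
            raise ValueError("need 0 < wp < wz for stiffness to drop with frequency")
        self.k0 = k0
        self.a = 1.0 / (wp * dt)  # pole term
        self.b = 1.0 / (wz * dt)  # zero term
        self.prev_err = 0.0
        self.prev_tau = 0.0

    def step(self, q_therapist, q_patient):
        err = q_therapist - q_patient
        # (1 + a) tau[n] = a tau[n-1] + k0 ((1 + b) err[n] - b err[n-1])
        tau = (self.a * self.prev_tau
               + self.k0 * ((1.0 + self.b) * err - self.b * self.prev_err)) / (1.0 + self.a)
        self.prev_err, self.prev_tau = err, tau
        return tau


if __name__ == "__main__":
    coupling = LagLeadStiffness(k0=100.0, wz=20.0, wp=2.0, dt=0.001)
    # A sudden 0.1 rad offset first meets the soft high-frequency stiffness,
    # then the torque settles toward the stiff low-frequency value.
    print(round(coupling.step(0.1, 0.0), 3))
    for _ in range(5000):
        tau = coupling.step(0.1, 0.0)
    print(round(tau, 3))
```

With `k0 = 100`, `wz = 20`, and `wp = 2`, a slow offset between therapist and patient joints is coupled at about 100 N·m/rad, while fast fluctuations (such as jitter in the IMU-derived therapist angle) see only about 10 N·m/rad.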

Deep-Learning Estimation of Weight Distribution Using Joint Kinematics for Lower-Limb Exoskeleton Control

Feb 06, 2024

Abstract: In the control of lower-limb exoskeletons with feet, the phase of the gait cycle can be identified by monitoring the weight distribution at the feet. This phase information can be used in the exoskeleton's controller to compensate for the dynamics of the exoskeleton and to assign impedance parameters. Typically, the weight distribution is calculated using data from sensors such as treadmill force plates or insole force sensors. However, these solutions increase both setup complexity and cost. For this reason, we propose a deep-learning approach that uses a short time window of joint kinematics to predict the weight distribution of an exoskeleton in real time. The model was trained on treadmill walking data from six users wearing a four-degree-of-freedom exoskeleton and tested in real time on three users wearing the same device. This test set includes two users not present in the training set to demonstrate the model's ability to generalize across individuals. Results show that the proposed method fits the actual weight distribution with R² = 0.9 and is suitable for real-time control, with prediction times of less than 1 ms. Experiments in closed-loop exoskeleton control show that deep-learning-based weight distribution estimation can replace force sensors in overground and treadmill walking.
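The data flow described in this abstract can be sketched as follows: a fixed-length buffer of recent joint angles forms the network input, force-sensor readings provide weight-distribution labels for training, thresholds on the (predicted) distribution yield a coarse gait phase, and R² scores the fit. The window length, the left-foot-fraction definition of weight distribution, the phase thresholds, and every name below are hypothetical assumptions; the trained network is replaced by a placeholder function.

```python
"""Hypothetical sketch of kinematics-windowed weight-distribution estimation."""
from collections import deque

N_JOINTS = 4  # four-degree-of-freedom exoskeleton, as in the abstract


class KinematicWindow:
    """Keeps the most recent `length` samples of joint angles (assumed input format)."""

    def __init__(self, length):
        self.buffer = deque(maxlen=length)

    def push(self, joint_angles):
        if len(joint_angles) != N_JOINTS:
            raise ValueError(f"expected {N_JOINTS} joint angles")
        self.buffer.append(tuple(joint_angles))

    def ready(self):
        return len(self.buffer) == self.buffer.maxlen

    def as_vector(self):
        """Flatten oldest-to-newest samples into one feature vector for the model."""
        return [angle for sample in self.buffer for angle in sample]


def weight_distribution(force_left, force_right):
    """Training label (assumed definition): fraction of body weight on the left foot."""
    total = force_left + force_right
    if total <= 0.0:
        raise ValueError("no ground contact force")
    return force_left / total


def gait_phase(w, single_support=0.9):
    """Assumed thresholds: mostly-left or mostly-right loading is single support."""
    if w >= single_support:
        return "left_single_support"
    if w <= 1.0 - single_support:
        return "right_single_support"
    return "double_support"


def r_squared(y_true, y_pred):
    """Coefficient of determination, the fit metric reported in the abstract."""
    mean = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot


def placeholder_model(features):
    """Stands in for the trained network (hypothetical); returns a fixed estimate."""
    return 0.95


if __name__ == "__main__":
    window = KinematicWindow(length=3)
    for sample in ([0.10, 0.20, -0.10, 0.00],
                   [0.12, 0.18, -0.08, 0.01],
                   [0.15, 0.15, -0.05, 0.02]):
        window.push(sample)
    if window.ready():
        w_hat = placeholder_model(window.as_vector())
        print(len(window.as_vector()), gait_phase(w_hat))
```

In a real-time loop, the estimate would be recomputed on every control tick once the window is full, which is where the sub-millisecond prediction time reported above matters.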
