Jordan Prosky

A Strong Baseline for Domain Adaptation and Generalization in Medical Imaging

Apr 02, 2019

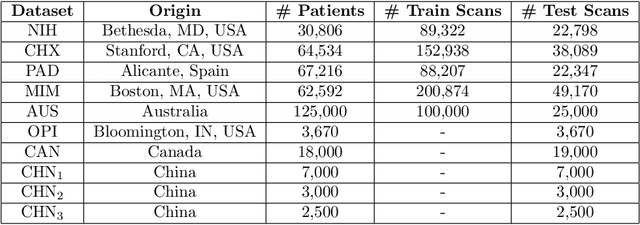

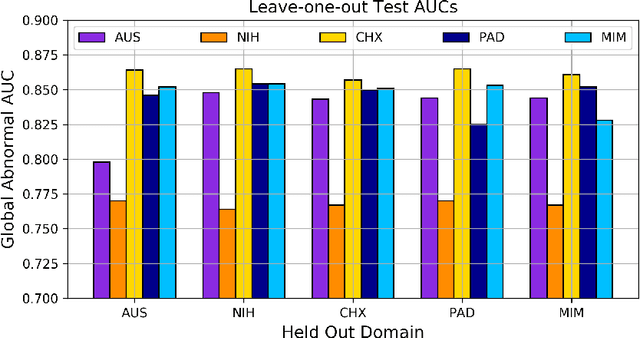

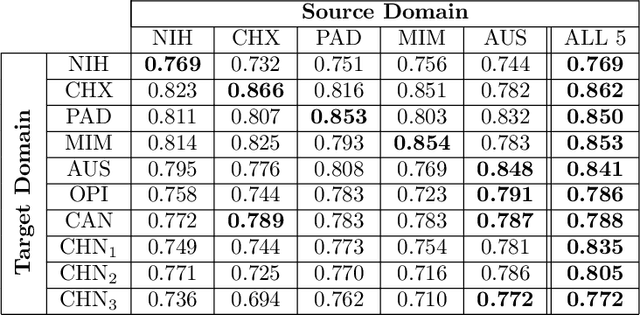

Abstract: This work provides a strong baseline for the problem of multi-source multi-target domain adaptation and generalization in medical imaging. Using a diverse collection of ten chest X-ray datasets, we empirically demonstrate the benefits of training medical imaging deep learning models on varied patient populations for generalization to out-of-sample domains.

Efficient and Accurate Abnormality Mining from Radiology Reports with Customized False Positive Reduction

Oct 01, 2018

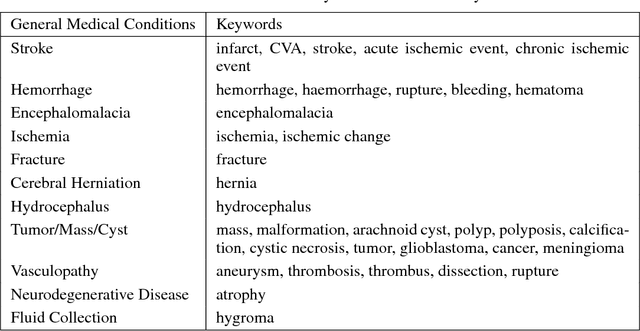

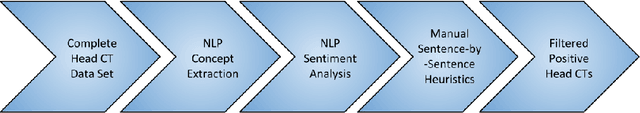

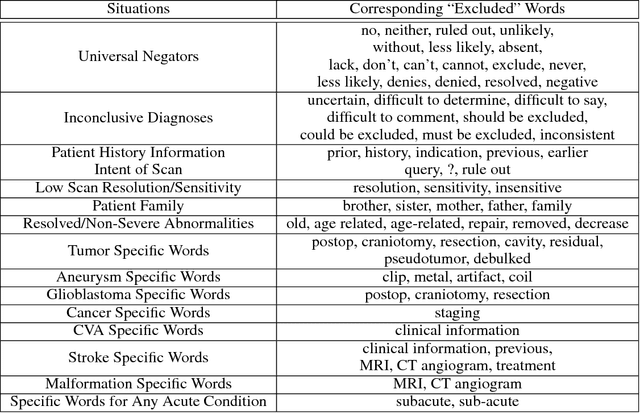

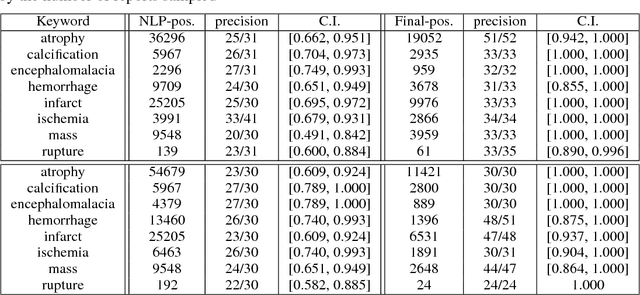

Abstract: Obtaining datasets labeled to facilitate model development is a challenge for most machine learning tasks. The difficulty is heightened for medical imaging, where the data itself is limited in accessibility and labeling requires costly time and effort by trained medical specialists. Medical imaging studies, however, are often accompanied by a medical report produced by a radiologist, identifying important features on the corresponding scan for other physicians not specifically trained in radiology. We propose a methodology for approximating image-level labels for radiology studies from associated reports using a general-purpose language processing tool for medical concept extraction and sentiment analysis, and simple manually crafted heuristics for false positive reduction. Using this approach, we label more than 175,000 Head CT studies for the presence of 33 features indicative of 11 clinically relevant conditions. For 27 of the 30 keywords that yielded positive results (3 had no occurrences), the lower bound of the confidence intervals created to estimate the percentage of accurately labeled reports was above 85%, with the average being above 95%. Though noisier than manual labeling, these results suggest this method to be a viable means of labeling medical images at scale.
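The per-keyword check described in this abstract, comparing the lower bound of a confidence interval on labeling accuracy against a threshold, can be illustrated with a minimal sketch. The abstract does not say which interval construction was used, so the Wilson score interval below is an assumption, and the function name and the sample counts are hypothetical.

```python
import math


def wilson_lower_bound(correct: int, total: int, z: float = 1.96) -> float:
    """Lower bound of the Wilson score interval for a proportion.

    correct: number of audited reports whose automatic label was right
    total:   number of audited reports for this keyword
    z:       normal quantile (1.96 corresponds to a 95% two-sided interval)

    Assumption: the paper may have used a different interval method.
    """
    if total <= 0:
        raise ValueError("total must be positive")
    p = correct / total
    denom = 1.0 + z * z / total
    center = p + z * z / (2.0 * total)
    margin = z * math.sqrt(p * (1.0 - p) / total + z * z / (4.0 * total * total))
    return (center - margin) / denom


# Hypothetical audit: 95 of 100 sampled reports were labeled correctly.
lower = wilson_lower_bound(95, 100)
passes_threshold = lower > 0.85  # threshold taken from the abstract
```

The lower bound sits below the raw accuracy, and the gap widens as the audited sample shrinks, which is why a keyword with few occurrences can miss the 85% threshold even when most of its labels are correct.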

Weakly Supervised Medical Diagnosis and Localization from Multiple Resolutions

Mar 21, 2018

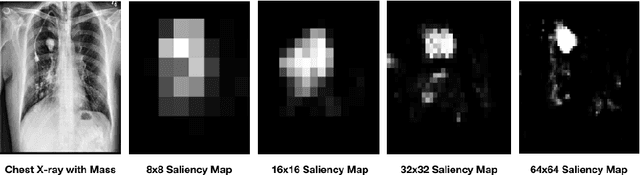

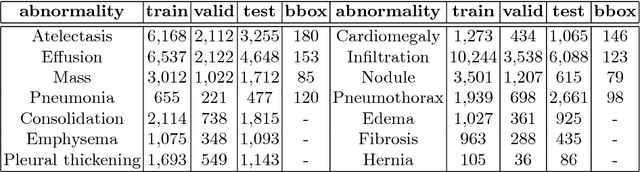

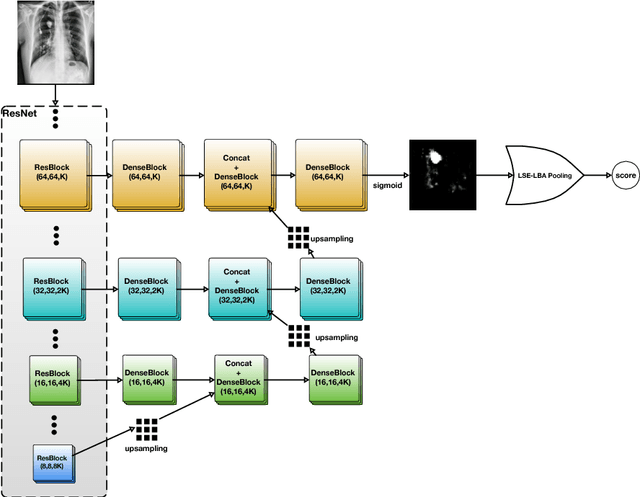

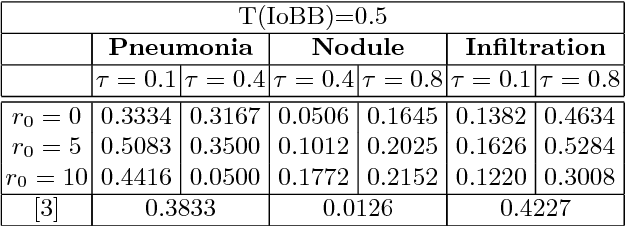

Abstract: Diagnostic imaging often requires the simultaneous identification of a multitude of findings of varied size and appearance. Beyond global indication of said findings, the prediction and display of localization information improves trust in and understanding of results when augmenting clinical workflow. Medical training data rarely includes more than global image-level labels, as segmentations are time-consuming and expensive to collect. We introduce an approach to managing these practical constraints by applying a novel architecture which learns at multiple resolutions while generating saliency maps with weak supervision. Further, we parameterize the Log-Sum-Exp pooling function with a learnable lower-bounded adaptation (LSE-LBA) to build in a sharpness prior and better handle localizing abnormalities of different sizes using only image-level labels. Applying this approach to interpreting chest X-rays, we set the state of the art on 9 abnormalities in the NIH's CXR14 dataset while generating saliency maps with the highest resolution to date.
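As a rough illustration of the pooling idea mentioned in this abstract, the sketch below implements standard Log-Sum-Exp pooling over a 2D saliency map, plus one way a learnable sharpness with a lower bound could be parameterized. The abstract gives no formula, so the form `r = r0 + exp(beta)` and the names `lse_pool`, `lse_lba_pool`, `r0`, and `beta` are assumptions made for illustration, not the paper's stated definition.

```python
import numpy as np


def lse_pool(scores, r: float) -> float:
    """Log-Sum-Exp pooling: (1/r) * log(mean(exp(r * s))).

    Small r behaves like average pooling, large r like max pooling.
    """
    s = np.asarray(scores, dtype=float)
    m = s.max()
    # Subtract the maximum before exponentiating for numerical stability.
    return float(m + np.log(np.mean(np.exp(r * (s - m)))) / r)


def lse_lba_pool(scores, beta: float, r0: float = 0.0) -> float:
    """LSE pooling whose sharpness is bounded below by r0 (assumed form).

    beta would be a learnable scalar; exp(beta) > 0 keeps r above r0.
    """
    r = r0 + np.exp(beta)
    return lse_pool(scores, r)


# Hypothetical 2x2 saliency map with one strong activation.
saliency = np.array([[0.0, 1.0], [2.0, 3.0]])
near_mean = lse_pool(saliency, 1e-6)   # close to the mean of the map
near_max = lse_pool(saliency, 100.0)   # close to the maximum of the map
bounded = lse_lba_pool(saliency, beta=0.0, r0=5.0)
```

Under this assumed parameterization, the bound `r0` sets a minimum sharpness, so pooling can never collapse all the way to plain averaging while `beta` is learned.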

Sentiment Predictability for Stocks

Jan 18, 2018

Abstract: In this work, we present our findings and experiments for stock-market prediction using various textual sentiment analysis tools, such as mood analysis and event extraction, as well as prediction models, such as LSTMs and specific convolutional architectures.
