Jonas Stapmanns

Runtime Construction of Large-Scale Spiking Neuronal Network Models on GPU Devices

Jun 16, 2023

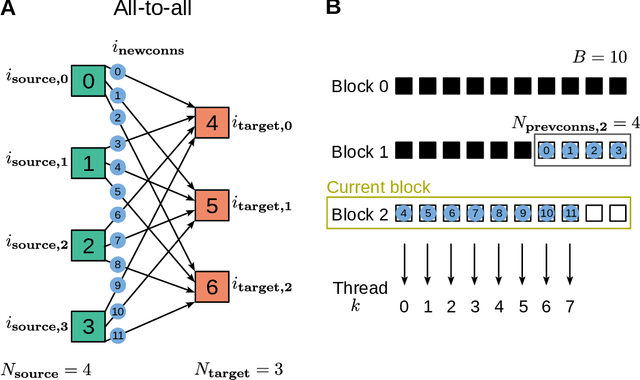

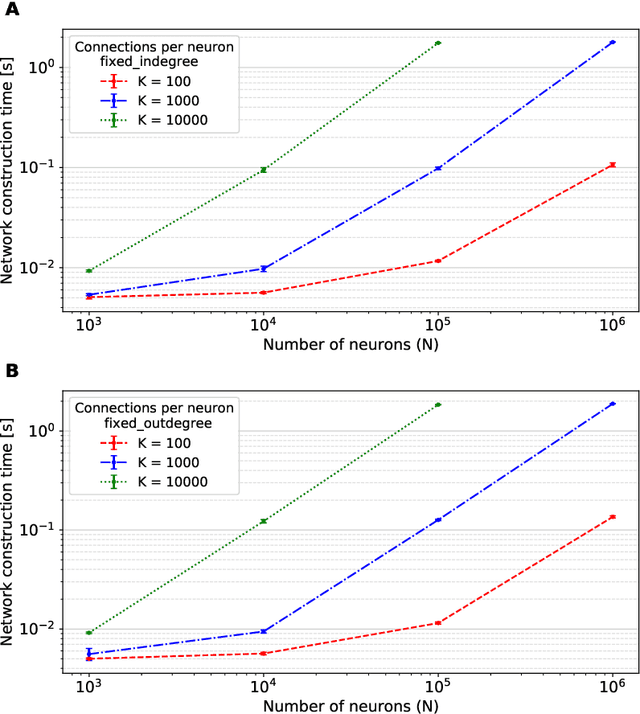

Abstract:Simulation speed matters for neuroscientific research: this includes not only how quickly the simulated model time of a large-scale spiking neuronal network progresses, but also how long it takes to instantiate the network model in computer memory. On the hardware side, acceleration via highly parallel GPUs is being increasingly utilized. On the software side, code generation approaches ensure highly optimized code, at the expense of repeated code regeneration and recompilation after modifications to the network model. Aiming for a greater flexibility with respect to iterative model changes, here we propose a new method for creating network connections interactively, dynamically, and directly in GPU memory through a set of commonly used high-level connection rules. We validate the simulation performance with both consumer and data center GPUs on two neuroscientifically relevant models: a cortical microcircuit of about 77,000 leaky-integrate-and-fire neuron models and 300 million static synapses, and a two-population network recurrently connected using a variety of connection rules. With our proposed ad hoc network instantiation, both network construction and simulation times are comparable or shorter than those obtained with other state-of-the-art simulation technologies, while still meeting the flexibility demands of explorative network modeling.

Phenomenological modeling of diverse and heterogeneous synaptic dynamics at natural density

Dec 10, 2022Abstract:This chapter sheds light on the synaptic organization of the brain from the perspective of computational neuroscience. It provides an introductory overview on how to account for empirical data in mathematical models, implement them in software, and perform simulations reflecting experiments. This path is demonstrated with respect to four key aspects of synaptic signaling: the connectivity of brain networks, synaptic transmission, synaptic plasticity, and the heterogeneity across synapses. Each step and aspect of the modeling and simulation workflow comes with its own challenges and pitfalls, which are highlighted and addressed in detail.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge