João G. Melo

Planning the path with Reinforcement Learning: Optimal Robot Motion Planning in RoboCup Small Size League Environments

Apr 23, 2024

Abstract: This work investigates the potential of Reinforcement Learning (RL) to tackle robot motion planning challenges in the dynamic RoboCup Small Size League (SSL). Using a heuristic control approach, we evaluate RL's effectiveness in obstacle-free and single-obstacle path-planning environments. Ablation studies reveal significant performance improvements. Our method achieved a 60% time gain in obstacle-free environments compared to baseline algorithms. Additionally, our findings demonstrated dynamic obstacle avoidance capabilities, adeptly navigating around moving blocks. These findings highlight the potential of RL to enhance robot motion planning in the challenging and unpredictable SSL environment.

An Embedded Monocular Vision Approach for Ground-Aware Objects Detection and Position Estimation

Jul 20, 2022

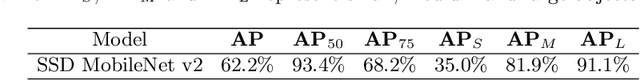

Abstract: In the RoboCup Small Size League (SSL), teams are encouraged to propose solutions for executing basic soccer tasks inside the SSL field using only embedded sensing information. Thus, this work proposes an embedded monocular vision approach for detecting objects and estimating relative positions inside the soccer field. Prior knowledge from the environment is exploited by assuming objects lie on the ground, and the onboard camera has its position fixed on the robot. We implemented the proposed method on an NVIDIA Jetson Nano and employed SSD MobileNet v2 for 2D Object Detection with TensorRT optimization, detecting balls, robots, and goals at distances up to 3.5 meters. Ball localization evaluation shows that the proposed solution outperforms the currently used SSL vision system for positions closer than 1 meter to the onboard camera, with a Root Mean Square Error of 14.37 millimeters. In addition, the proposed method achieves real-time performance with an average processing speed of 30 frames per second.
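The ground-plane assumption mentioned in the abstract can be illustrated with a minimal sketch. This is not the authors' implementation: it assumes an ideal pinhole camera with no lens distortion, mounted at a known height and pitched downward by a known angle, and the function name `pixel_to_ground` and all of its parameters are hypothetical. Under those assumptions, a pixel on a detected object's ground contact point defines a ray that intersects the floor at exactly one point, which gives the object's position relative to the robot.

```python
import math


def pixel_to_ground(u, v, fx, fy, cx, cy, cam_height, pitch):
    """Project pixel (u, v) onto the ground plane (hypothetical helper).

    Assumed conventions (not taken from the paper):
      - pinhole intrinsics fx, fy, cx, cy in pixels, no distortion
      - camera sits cam_height meters above the ground
      - camera is pitched down by `pitch` radians from horizontal
      - result is (forward, left) in meters, relative to the camera's
        footprint on the ground
    Returns None when the pixel's ray does not hit the ground.
    """
    # Normalized ray in the camera frame (x right, y down, z forward).
    xc = (u - cx) / fx
    yc = (v - cy) / fy

    # Downward component of the ray in the robot frame; it must be
    # positive for the ray to reach the floor.
    down = yc * math.cos(pitch) + math.sin(pitch)
    if down <= 1e-9:
        return None  # ray is at or above the horizon

    # Scale factor that brings the ray from the camera to height zero.
    t = cam_height / down

    forward = t * (math.cos(pitch) - yc * math.sin(pitch))
    left = -t * xc
    return forward, left


# Example: the image center seen by a camera 1 m high, pitched 45 degrees down.
point = pixel_to_ground(320, 240, 500.0, 500.0, 320.0, 240.0, 1.0, math.pi / 4)
```

Because the camera pose is fixed on the robot, the height and pitch only need to be measured once; each detection then costs a handful of arithmetic operations, which suits an embedded platform.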
