Jiwon Shin

SCORPION: Addressing Scanner-Induced Variability in Histopathology

Jul 28, 2025

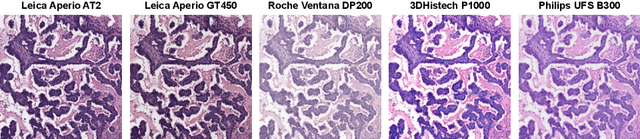

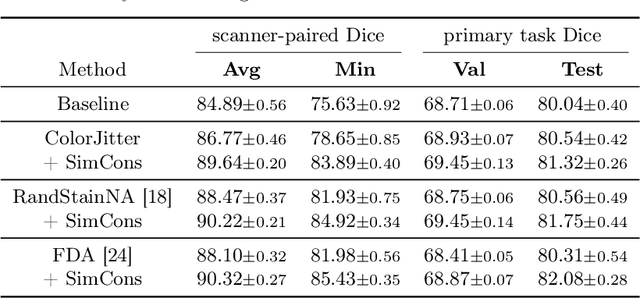

Abstract: Ensuring reliable model performance across diverse domains is a critical challenge in computational pathology. A particular source of variability in Whole-Slide Images is introduced by differences in digital scanners, thus calling for better scanner generalization. This is critical for the real-world adoption of computational pathology, where the scanning devices may differ per institution or hospital, and the model should not be dependent on scanner-induced details, which can ultimately affect the patient's diagnosis and treatment planning. However, past efforts have primarily focused on standard domain generalization settings, evaluating on scanners unseen during training, without directly evaluating consistency across scanners for the same tissue. To overcome this limitation, we introduce SCORPION, a new dataset explicitly designed to evaluate model reliability under scanner variability. SCORPION includes 480 tissue samples, each scanned with 5 scanners, yielding 2,400 spatially aligned patches. This scanner-paired design allows for the isolation of scanner-induced variability, enabling a rigorous evaluation of model consistency while controlling for differences in tissue composition. Furthermore, we propose SimCons, a flexible framework that combines augmentation-based domain generalization techniques with a consistency loss to explicitly address scanner generalization. We empirically show that SimCons improves model consistency across varying scanners without compromising task-specific performance. By releasing the SCORPION dataset and proposing SimCons, we provide the research community with a crucial resource for evaluating and improving model consistency across diverse scanners, setting a new standard for reliability testing.
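To make the scanner-paired idea concrete, the following is a minimal sketch of one way cross-scanner consistency could be scored: the fraction of tissue samples for which a model predicts the same label on every scanner. The function name `scanner_consistency`, the label strings, and the toy data are all hypothetical; the abstract does not specify the metric used in the paper, so this is an illustration rather than the authors' evaluation protocol.

```python
def scanner_consistency(predictions):
    """Return the fraction of samples with identical labels across all scanners.

    predictions: dict mapping a sample id to a list of predicted labels,
    one label per scanner (hypothetical layout, not from the paper).
    """
    if not predictions:
        raise ValueError("no samples given")
    # A sample counts as consistent when all its per-scanner labels coincide.
    agree = sum(1 for labels in predictions.values() if len(set(labels)) == 1)
    return agree / len(predictions)


# Toy, made-up predictions for four samples, each scanned with 5 scanners.
toy = {
    "sample_a": ["tumor"] * 5,
    "sample_b": ["tumor", "tumor", "stroma", "tumor", "tumor"],
    "sample_c": ["stroma"] * 5,
    "sample_d": ["stroma", "tumor", "stroma", "stroma", "stroma"],
}

print(scanner_consistency(toy))  # 0.5: two of the four samples agree everywhere

# Dataset size stated in the abstract: 480 samples x 5 scanners.
print(480 * 5)  # 2400 spatially aligned patches
```

Because every sample appears under every scanner, disagreement in such a score can be attributed to the scanner rather than to differences in tissue composition.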

AVCap: Leveraging Audio-Visual Features as Text Tokens for Captioning

Jul 11, 2024

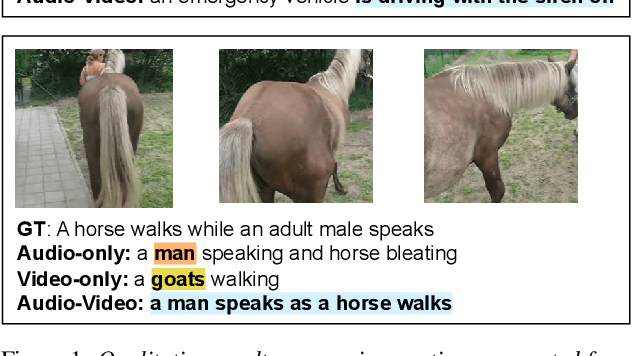

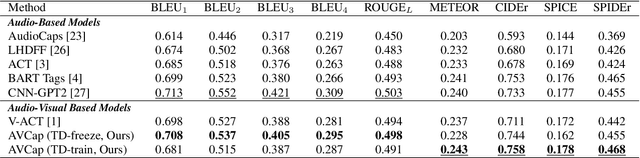

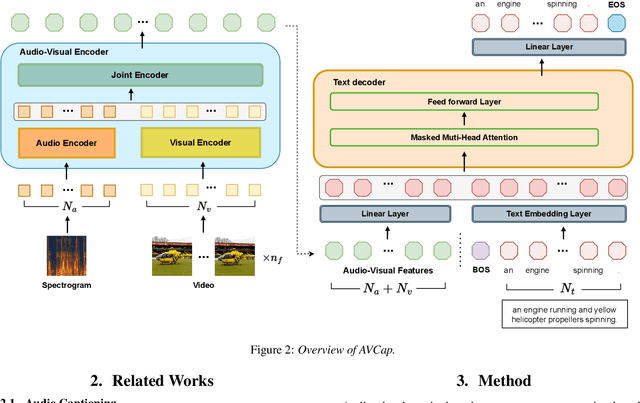

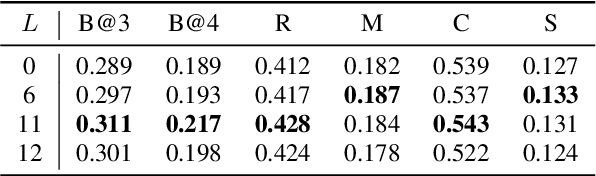

Abstract: In recent years, advancements in representation learning and language models have propelled Automated Captioning (AC) to new heights, enabling the generation of human-level descriptions. Leveraging these advancements, we propose AVCap, an Audio-Visual Captioning framework that serves as a simple yet powerful baseline for audio-visual captioning. AVCap utilizes audio-visual features as text tokens, which has many advantages not only in performance but also in the extensibility and scalability of the model. AVCap is designed around three pivotal dimensions: the exploration of optimal audio-visual encoder architectures, the adaptation of pre-trained models according to the characteristics of generated text, and the investigation into the efficacy of modality fusion in captioning. Our method outperforms existing audio-visual captioning methods across all metrics, and the code is available at https://github.com/JongSuk1/AVCap

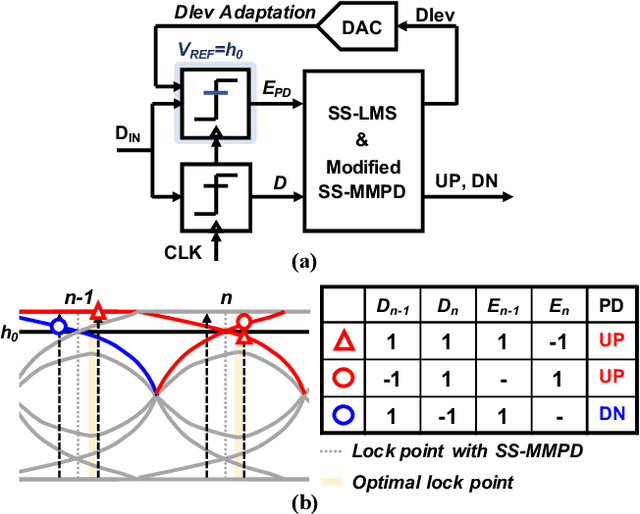

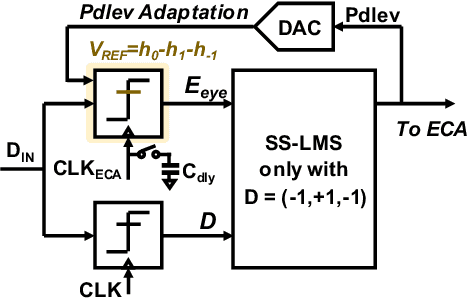

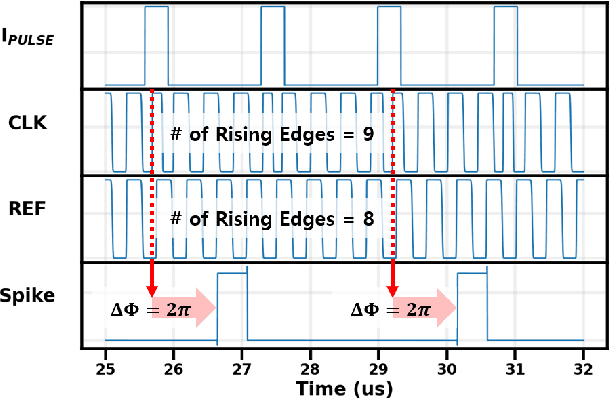

A 4x32Gb/s 1.8pJ/bit Collaborative Baud-Rate CDR with Background Eye-Climbing Algorithm and Low-Power Global Clock Distribution

Apr 10, 2024

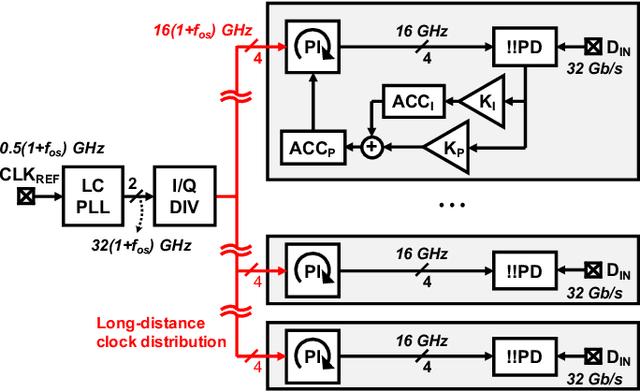

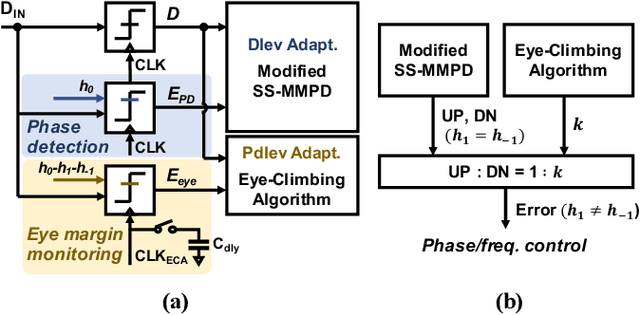

Abstract: This paper presents design techniques for an energy-efficient multi-lane receiver (RX) with baud-rate clock and data recovery (CDR), which is essential for high-throughput low-latency communication in high-performance computing systems. The proposed low-power global clock distribution not only significantly reduces power consumption across multi-lane RXs but is also capable of compensating for the frequency offset without any phase interpolators. To this end, a fractional divider controlled by the CDR is placed close to the global phase-locked loop. Moreover, in order to address the sub-optimal lock point of conventional baud-rate phase detectors, the proposed CDR employs a background eye-climbing algorithm, which optimizes the sampling phase and maximizes the vertical eye margin (VEM). Fabricated in a 28nm CMOS process, the proposed 4x32Gb/s RX shows a low integrated fractional spur of -40.4dBc at a 2500ppm frequency offset. Furthermore, it improves bit-error-rate performance by increasing the VEM by 17%. The entire RX achieves an energy efficiency of 1.8pJ/bit at an aggregate data rate of 128Gb/s.
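The headline figures can be cross-checked with simple arithmetic: four lanes at 32 Gb/s give the stated 128 Gb/s aggregate rate, and energy per bit multiplied by bit rate gives power. The sketch below assumes the 1.8 pJ/bit figure applies to the entire RX at the full aggregate rate, as the abstract states; the resulting power number is derived here and is not quoted in the abstract. The function names are illustrative only.

```python
LANES = 4
LANE_RATE_GBPS = 32.0      # per-lane data rate from the abstract
ENERGY_PJ_PER_BIT = 1.8    # energy efficiency from the abstract


def aggregate_rate_gbps(lanes, lane_rate_gbps):
    """Total throughput across all lanes, in Gb/s."""
    return lanes * lane_rate_gbps


def power_mw(energy_pj_per_bit, rate_gbps):
    """Power in mW: (1e-12 J/bit) * (1e9 bit/s) = 1e-3 W, so pJ/bit * Gb/s = mW."""
    return energy_pj_per_bit * rate_gbps


rate = aggregate_rate_gbps(LANES, LANE_RATE_GBPS)
print(f"aggregate rate: {rate:.0f} Gb/s")
print(f"implied RX power: {power_mw(ENERGY_PJ_PER_BIT, rate):.1f} mW")
```

Under that assumption, the implied total receiver power is roughly 230 mW.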

A 0.65-pJ/bit 3.6-TB/s/mm I/O Interface with XTalk Minimizing Affine Signaling for Next-Generation HBM with High Interconnect Density

Apr 08, 2024

Abstract: This paper presents an I/O interface with Xtalk Minimizing Affine Signaling (XMAS), which is designed to support high-speed data transmission in die-to-die communication over silicon interposers or similar high-density interconnects susceptible to crosstalk. The operating principles of XMAS are elucidated through rigorous analyses, and its advantages over existing signaling are validated through numerical experiments. XMAS not only demonstrates exceptional crosstalk-removing capabilities but also exhibits robustness against noise, especially simultaneous switching noise. Fabricated in a 28-nm CMOS process, the prototype XMAS transceiver achieves an edge density of 3.6TB/s/mm and an energy efficiency of 0.65pJ/b. Compared to single-ended signaling, the crosstalk-induced peak-to-peak jitter of the received eye with XMAS is reduced by 75% at a 10GS/s/pin data rate, and the horizontal eye opening extends to 0.2UI at a bit error rate < $10^{-12}$.

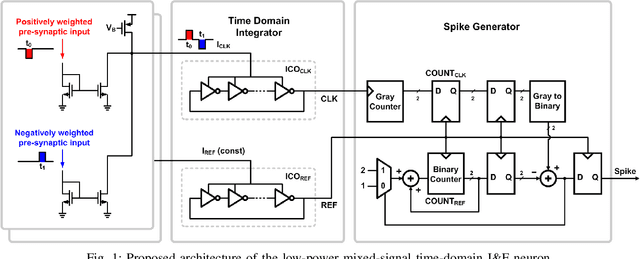

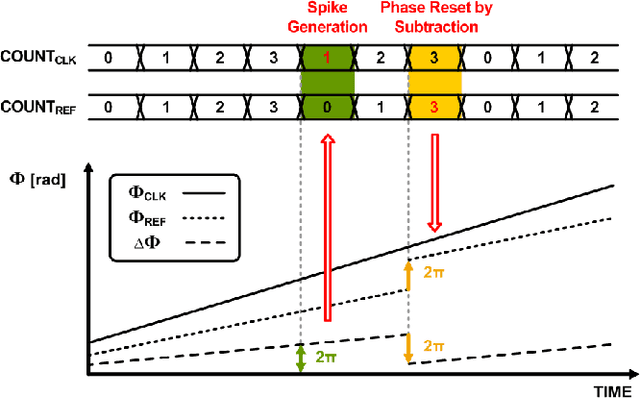

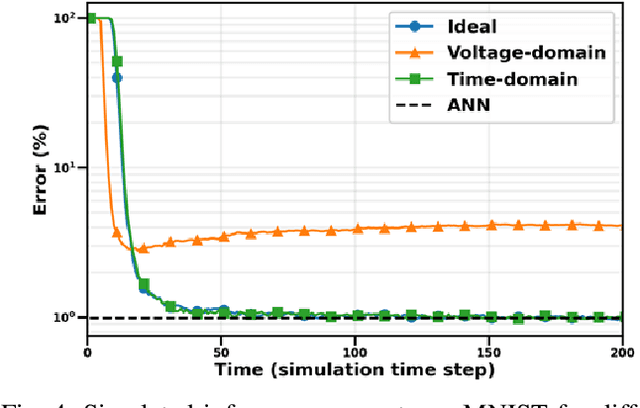

Energy-Efficient High-Accuracy Spiking Neural Network Inference Using Time-Domain Neurons

Feb 04, 2022

Abstract: Due to the limitations of realizing artificial neural networks on prevalent von Neumann architectures, recent studies have presented neuromorphic systems based on spiking neural networks (SNNs) to reduce power and computational cost. However, conventional analog voltage-domain integrate-and-fire (I&F) neuron circuits, based on either current mirrors or op-amps, pose serious issues such as nonlinearity or high power consumption, thereby degrading either inference accuracy or energy efficiency of the SNN. To achieve excellent energy efficiency and high accuracy simultaneously, this paper presents a low-power, highly linear time-domain I&F neuron circuit. Designed and simulated in a 28nm CMOS process, the proposed neuron achieves a more than 4.3x lower error rate on MNIST inference than conventional current-mirror-based neurons. In addition, the power consumed by the proposed neuron circuit is simulated to be 0.230uW per neuron, which is orders of magnitude lower than that of existing voltage-domain neurons.
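To illustrate why linearity matters for an I&F neuron, here is a minimal sketch of an idealized, discrete-time integrate-and-fire neuron. It is a generic textbook-style model, not the time-domain circuit proposed in the paper; the function name `spike_count`, the unit threshold, and the subtract-on-fire reset are assumptions chosen for illustration.

```python
def spike_count(input_current, steps, threshold=1.0):
    """Count spikes of an ideal integrate-and-fire neuron (hypothetical model).

    Each step adds the constant input to the membrane state; when the state
    reaches the threshold, the neuron fires and the threshold is subtracted.
    """
    membrane = 0.0
    spikes = 0
    for _ in range(steps):
        membrane += input_current       # integrate
        if membrane >= threshold:       # fire
            spikes += 1
            membrane -= threshold       # reset by subtraction
    return spikes


# Doubling the input doubles the spike count in the ideal (linear) case.
print(spike_count(0.25, 100))  # 25
print(spike_count(0.5, 100))   # 50
```

In this ideal model the spike rate is proportional to the input, which is the property a rate-coded SNN relies on; a circuit whose integration is nonlinear would distort that proportionality and can therefore degrade inference accuracy.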

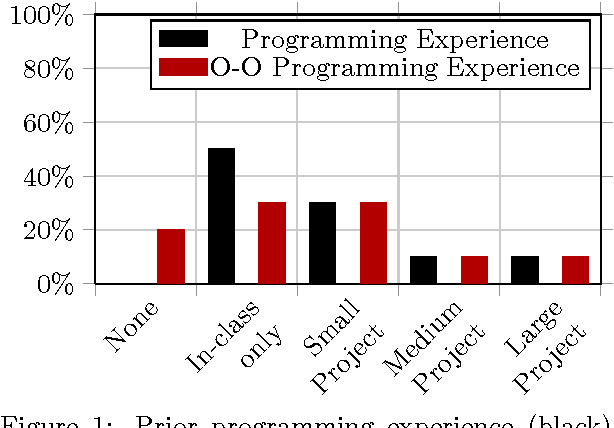

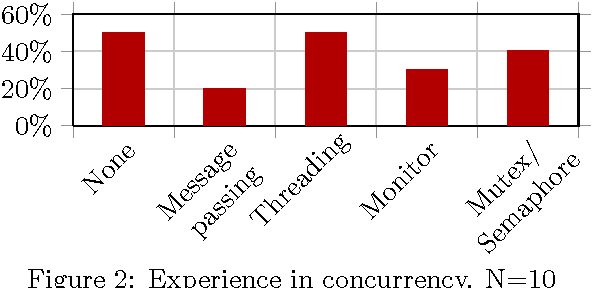

Teaching Software Engineering through Robotics

Jun 17, 2014

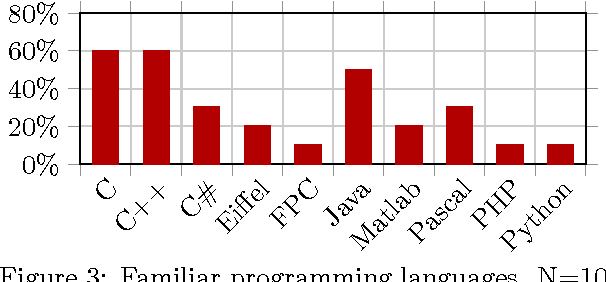

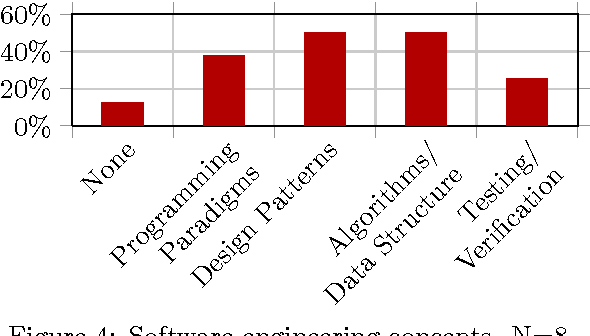

Abstract: This paper presents a newly developed robotics programming course and reports the initial results of software engineering education in a robotics context. Robotics programming, as a multidisciplinary course, puts equal emphasis on software engineering and robotics. It teaches students proper software engineering -- in particular, modularity and documentation -- by having them implement four core robotics algorithms for an educational robot. To evaluate the effect of software engineering education in a robotics context, we analyze pre- and post-class survey data and the four assignments our students completed for the course. The analysis suggests that the students acquired an understanding of software engineering techniques and principles.
