Jinshan Ding

RIS-Assisted Joint Uplink Communication and Imaging: Phase Optimization and Bayesian Echo Decoupling

Jan 10, 2023

Abstract: Achieving integrated sensing and communication (ISAC) via uplink transmission is challenging due to the unknown waveform and the coupling of communication and sensing echoes. In this paper, a joint uplink communication and imaging system is proposed for the first time, in which a reconfigurable intelligent surface (RIS) manipulates the electromagnetic signals so that the echoes can be decoupled at the base station (BS). To enhance the transmission gain in desired directions and generate the required radiation pattern in the region of interest (RoI), a phase optimization problem for the RIS is formulated; the problem is high-dimensional and nonconvex, with discrete constraints. To tackle it, a back-propagation-based phase design scheme is developed for both continuous and discrete phase models. Moreover, the echo decoupling problem is addressed with a Bayesian method based on the factor graph technique, where the problem is represented by a graph model containing difficult local functions. Based on this graph model, a message-passing algorithm is derived that cooperates efficiently with adaptive sparse Bayesian learning (SBL) to achieve joint communication and imaging. Numerical results show that the proposed method asymptotically approaches the relevant lower bound, and that communication performance can be enhanced by exploiting the imaging echoes.
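The phase design step can be illustrated with a toy sketch. Everything here is an assumption rather than the authors' formulation: a single desired direction, hypothetical cascaded BS-RIS-user coefficients a_n, the received power G(theta) = |sum_n a_n exp(j theta_n)|^2 as the objective, and plain gradient ascent with a hand-derived gradient standing in for back propagation. The discrete phase model is handled by simply quantizing the continuous solution to 2^b levels, which is not necessarily how the paper treats discrete phases.

```python
import cmath
import math
import random


def example_channel(n, seed=1):
    """Hypothetical cascaded coefficients a_n with |a_n| in [0.2, 1] (toy data, not from the paper)."""
    rng = random.Random(seed)
    return [cmath.rect(rng.uniform(0.2, 1.0), rng.uniform(0, 2 * math.pi)) for _ in range(n)]


def coherent_gain(theta, a):
    """Toy objective: received power G = |sum_n a_n e^{j theta_n}|^2 in one direction."""
    s = sum(an * cmath.exp(1j * t) for an, t in zip(a, theta))
    return abs(s) ** 2


def gain_gradient(theta, a):
    """dG/dtheta_n = -2 Im(conj(s) a_n e^{j theta_n}); this is what backprop would return."""
    s = sum(an * cmath.exp(1j * t) for an, t in zip(a, theta))
    return [-2.0 * (s.conjugate() * an * cmath.exp(1j * t)).imag for an, t in zip(a, theta)]


def optimize_phases(a, steps=2000, lr=0.01, seed=0):
    """Continuous phase model: gradient ascent from a random start, phases kept in [0, 2*pi)."""
    rng = random.Random(seed)
    theta = [rng.uniform(0, 2 * math.pi) for _ in a]
    for _ in range(steps):
        grad = gain_gradient(theta, a)
        theta = [(t + lr * g) % (2 * math.pi) for t, g in zip(theta, grad)]
    return theta


def quantize(theta, bits):
    """Discrete phase model (assumed): round each phase to the nearest of 2**bits uniform levels."""
    levels = 2 ** bits
    step = 2 * math.pi / levels
    return [(round(t / step) % levels) * step for t in theta]


if __name__ == "__main__":
    a = example_channel(8)
    theta = optimize_phases(a)
    bound = sum(abs(x) for x in a) ** 2  # co-phasing optimum (sum_n |a_n|)^2
    print(coherent_gain(theta, a) / bound, coherent_gain(quantize(theta, 2), a) / bound)
```

For this single-direction objective the optimum is known in closed form (co-phasing, which gives G = (sum_n |a_n|)^2), so the sketch can be checked against it. A gradient-based route is mainly of interest for objectives such as a full radiation pattern over the RoI, where no such closed form is available.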

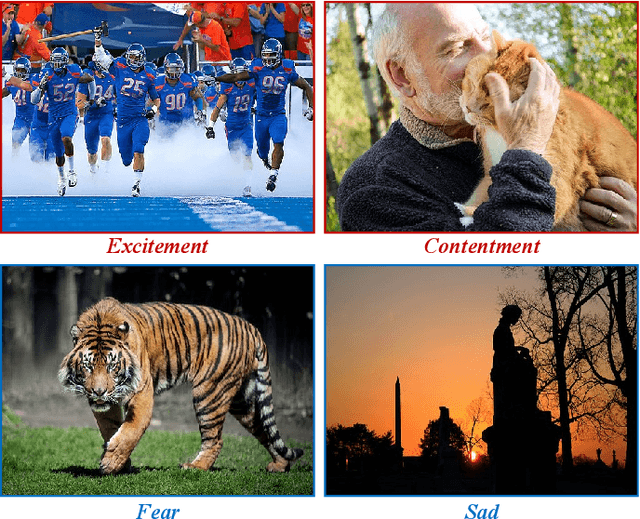

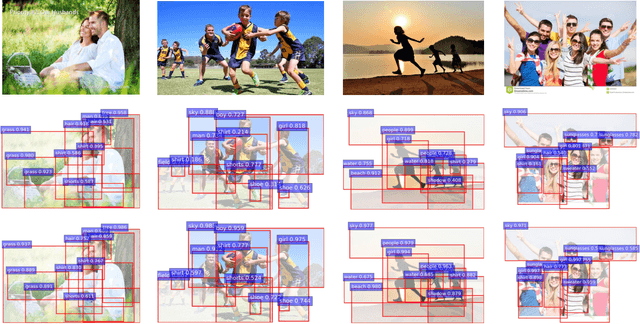

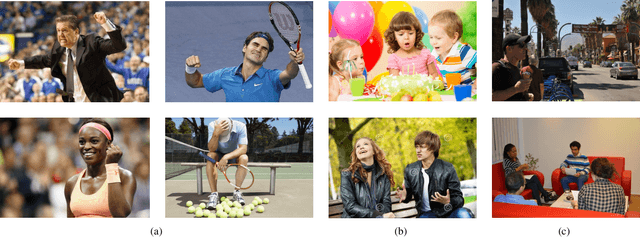

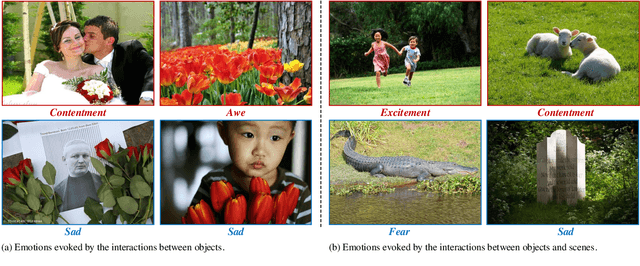

SOLVER: Scene-Object Interrelated Visual Emotion Reasoning Network

Oct 24, 2021

Abstract: Visual Emotion Analysis (VEA) aims to understand how people feel emotionally toward different visual stimuli, a topic that has attracted considerable attention recently with the prevalence of image sharing on social networks. Since human emotion involves a highly complex and abstract cognitive process, it is difficult to infer visual emotions directly from holistic or regional features in affective images. Psychology research has shown that visual emotions are evoked by interactions between objects, as well as between objects and scenes, within an image. Inspired by this, we propose a novel Scene-Object interreLated Visual Emotion Reasoning network (SOLVER) to predict emotions from images. To mine the emotional relationships between distinct objects, we first build an Emotion Graph based on semantic concepts and visual features. We then perform reasoning on the Emotion Graph using a Graph Convolutional Network (GCN), yielding emotion-enhanced object features. We also design a Scene-Object Fusion Module to integrate scenes and objects, which uses scene features to guide the fusion of object features through the proposed scene-based attention mechanism. Extensive experiments and comparisons on eight public visual emotion datasets demonstrate that SOLVER consistently outperforms state-of-the-art methods by a large margin. Ablation studies verify the effectiveness of our method, and visualizations demonstrate its interpretability, offering new insight into the mysteries of VEA. Notably, we further evaluate SOLVER on three other potential datasets with extended experiments, where we validate the robustness of our method and identify some of its limitations.

* Accepted by TIP
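The two reasoning steps named in the SOLVER abstract, graph convolution over object nodes and scene-guided fusion, can be sketched in a few lines. This is a generic illustration under stated assumptions, not SOLVER's implementation: the Emotion Graph is taken as a given binary adjacency matrix over detected objects, the layer is the standard GCN propagation ReLU(D^-1/2 (A + I) D^-1/2 H W) of Kipf and Welling, and the hypothetical `scene_guided_fusion` uses plain dot-product softmax attention between a scene vector and the object features, since the abstract does not specify the form of the scene-based attention.

```python
import math


def matmul(x, y):
    """Dense matrix product of nested lists."""
    return [[sum(x[i][k] * y[k][j] for k in range(len(y))) for j in range(len(y[0]))]
            for i in range(len(x))]


def normalize_adjacency(adj):
    """Standard GCN normalization D^{-1/2} (A + I) D^{-1/2} (assumed, not confirmed for SOLVER)."""
    n = len(adj)
    a_hat = [[adj[i][j] + (1.0 if i == j else 0.0) for j in range(n)] for i in range(n)]
    deg = [sum(row) for row in a_hat]
    return [[a_hat[i][j] / math.sqrt(deg[i] * deg[j]) for j in range(n)] for i in range(n)]


def gcn_layer(adj, features, weight):
    """One propagation step over the object graph: ReLU(A_norm @ H @ W)."""
    propagated = matmul(matmul(normalize_adjacency(adj), features), weight)
    return [[max(0.0, v) for v in row] for row in propagated]


def scene_guided_fusion(scene, objects):
    """Hypothetical scene-based attention: softmax over <scene, object_i>, then a weighted sum."""
    scores = [sum(s * o for s, o in zip(scene, obj)) for obj in objects]
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(sc - m) for sc in scores]
    total = sum(exps)
    weights = [e / total for e in exps]
    dim = len(objects[0])
    fused = [sum(w * obj[d] for w, obj in zip(weights, objects)) for d in range(dim)]
    return fused, weights


if __name__ == "__main__":
    adj = [[0, 1, 0], [1, 0, 1], [0, 1, 0]]  # three objects in a chain (toy graph)
    h = gcn_layer(adj, [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]], [[1.0, 0.0], [0.0, 1.0]])
    fused, weights = scene_guided_fusion([1.0, 0.0], h)
    print(h, fused, weights)
```

Adding self-loops keeps each object's own features in its update, and the symmetric normalization keeps the scale of the propagated features from growing with node degree.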
