Jinhe Zhang

Overcoming the Identity Mapping Problem in Self-Supervised Hyperspectral Anomaly Detection

Apr 05, 2025

Abstract:The surge of deep learning has catalyzed considerable progress in self-supervised Hyperspectral Anomaly Detection (HAD). The core premise of self-supervised HAD is that anomalous pixels are inherently harder to reconstruct, resulting in larger errors than for the background. However, owing to the powerful nonlinear fitting capabilities of neural networks, self-supervised models often suffer from the Identity Mapping Problem (IMP). The IMP manifests as a tendency for the model to overfit to the entire image, particularly with increasing network complexity or prolonged training. Consequently, the whole image can be precisely reconstructed, and even the anomalous pixels exhibit imperceptible errors, making them difficult to detect. Although several models have been proposed to address IMP-related issues, a unified descriptive framework and validation of solutions for IMP remain lacking. In this paper, we conduct an in-depth exploration of IMP and summarize a unified framework that describes IMP from the perspective of network optimization, which encompasses three aspects: perturbation, reconstruction, and regularization. Correspondingly, we introduce three solutions: superpixel pooling and uppooling for perturbation, error-adaptive convolution for reconstruction, and online background pixel mining for regularization. Extensive experiments validate the effectiveness of these solutions, and we hope that our work will provide valuable insights and inspire further research on self-supervised HAD. Code: https://github.com/yc-cui/Super-AD.
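The reconstruction-error premise and the identity mapping failure can be illustrated on toy data. The following is a minimal sketch, assuming a truncated PCA as a stand-in reconstructor rather than the paper's network; `reconstruction_error_map`, `make_toy_cube`, and the synthetic cube are hypothetical names and data, not taken from the Super-AD code.

```python
import numpy as np

def reconstruction_error_map(cube, rank):
    """Score each pixel by its spectral reconstruction error.

    cube: (H, W, B) hyperspectral image.
    rank: number of principal components kept by the stand-in
          reconstructor (truncated PCA, an assumption for illustration).
    """
    h, w, b = cube.shape
    pixels = cube.reshape(-1, b)
    mean = pixels.mean(axis=0)
    centered = pixels - mean
    # Right singular vectors are the principal spectral directions.
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    basis = vt[:rank]                              # (rank, B)
    recon = centered @ basis.T @ basis + mean      # project and restore
    return np.linalg.norm(pixels - recon, axis=1).reshape(h, w)

def make_toy_cube(seed=0):
    """Synthetic background (mixtures of two spectra) plus one anomaly."""
    rng = np.random.default_rng(seed)
    h, w, b = 20, 20, 30
    endmembers = rng.random((2, b))
    abundances = rng.random((h * w, 2))
    noise = 0.01 * rng.standard_normal((h * w, b))
    cube = (abundances @ endmembers + noise).reshape(h, w, b)
    anomaly_position = (5, 7)
    cube[anomaly_position] = rng.random(b)  # spectrum unrelated to background
    return cube, anomaly_position

cube, anomaly_position = make_toy_cube()
# Low-capacity reconstructor: the anomaly has the largest error.
low_capacity = reconstruction_error_map(cube, rank=2)
# Full-capacity reconstructor: an identity mapping, so every error vanishes.
full_capacity = reconstruction_error_map(cube, rank=cube.shape[2])
```

With `rank=2` the error map peaks at the anomalous pixel, while with `rank` equal to the number of bands the reconstructor becomes an identity mapping and the anomaly is no longer distinguishable by its error.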

NAS-BNN: Neural Architecture Search for Binary Neural Networks

Aug 28, 2024

Abstract:Binary Neural Networks (BNNs) have gained extensive attention for their superior inference efficiency and compression ratio compared to traditional full-precision networks. However, due to the unique characteristics of BNNs, designing a powerful binary architecture is challenging and often requires significant manual effort. A promising solution is to use Neural Architecture Search (NAS) to assist in designing BNNs, but current NAS methods for BNNs are relatively straightforward and leave a performance gap between the searched models and manually designed ones. To address this gap, we propose a novel neural architecture search scheme for binary neural networks, named NAS-BNN. We first carefully design a search space based on the unique characteristics of BNNs. Then, we present three training strategies, which significantly enhance the training of the supernet and boost the performance of all subnets. Our discovered binary model family outperforms previous BNNs for a wide range of operations (OPs) from 20M to 200M. For instance, we achieve 68.20% top-1 accuracy on ImageNet with only 57M OPs. In addition, we validate the transferability of these searched BNNs on the object detection task, and our binary detectors with the searched BNNs achieve a new state-of-the-art result, e.g., 31.6% mAP with 370M OPs, on the MS COCO dataset. The source code and models will be released at https://github.com/VDIGPKU/NAS-BNN.
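The efficiency of BNNs mentioned in the abstract is commonly attributed to replacing multiply-accumulate operations with bitwise ones. The following is a minimal sketch of that general idea, assuming sign binarization and an XOR-plus-popcount dot product; the helper names `binarize`, `pack_bits`, and `binary_dot` are hypothetical and are not part of the NAS-BNN code.

```python
import numpy as np

def binarize(x):
    """Map real values to {-1, +1} with the sign function (0 maps to +1)."""
    return np.where(np.asarray(x) >= 0, 1, -1).astype(np.int8)

def pack_bits(signs):
    """Encode +1 as bit 1 and -1 as bit 0 inside a single Python int."""
    value = 0
    for s in signs:
        value = (value << 1) | (1 if s > 0 else 0)
    return value

def binary_dot(a_bits, b_bits, n):
    """Dot product of two {-1, +1} vectors of length n from their bit codes.

    Matching positions contribute +1 and mismatching ones -1, so the
    result is n minus twice the number of mismatching bits.
    """
    mismatches = bin(a_bits ^ b_bits).count("1")
    return n - 2 * mismatches

rng = np.random.default_rng(0)
weights = binarize(rng.standard_normal(64))
activations = binarize(rng.standard_normal(64))
fast = binary_dot(pack_bits(weights), pack_bits(activations), 64)
reference = int(np.dot(weights.astype(int), activations.astype(int)))
```

Here `fast` and `reference` agree, which shows that a 64-element binary dot product reduces to one XOR and one bit count on a 64-bit word.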

DynamicDet: A Unified Dynamic Architecture for Object Detection

Apr 12, 2023

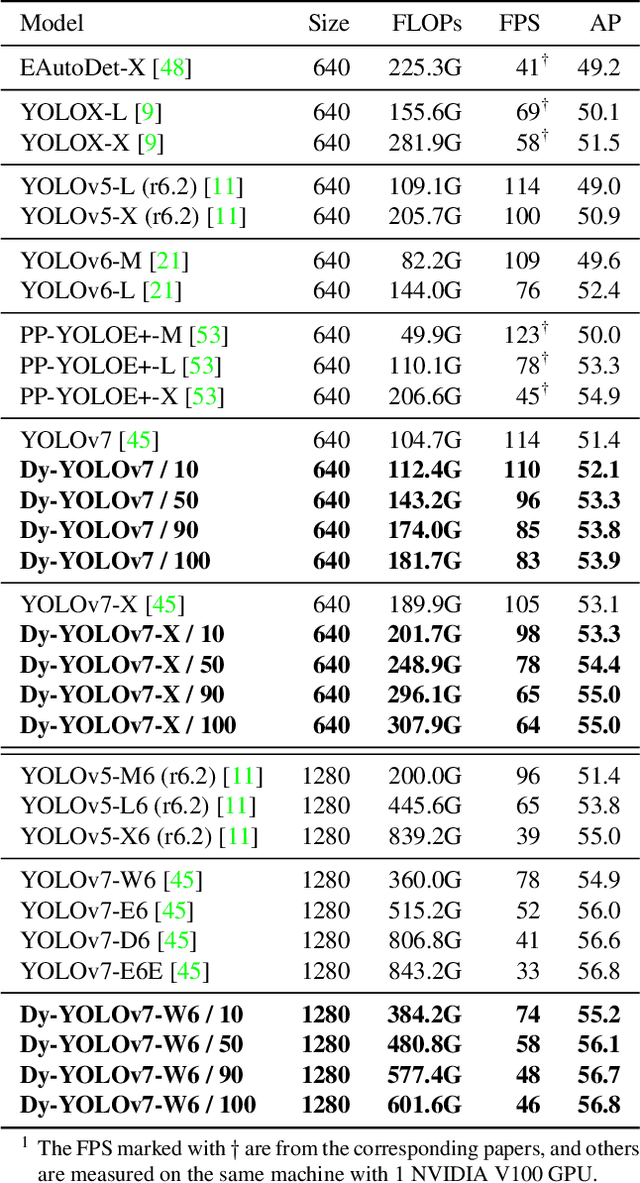

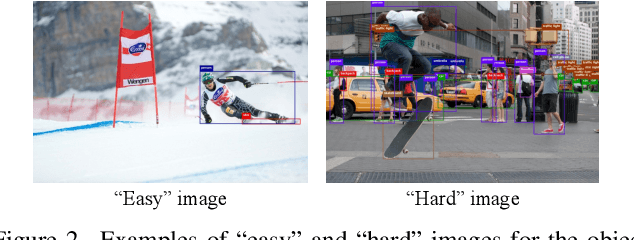

Abstract:Dynamic neural networks are an emerging research topic in deep learning. With adaptive inference, dynamic models can achieve remarkable accuracy and computational efficiency. However, it is challenging to design a powerful dynamic detector, because no suitable dynamic architecture or exiting criterion exists for object detection. To tackle these difficulties, we propose a dynamic framework for object detection, named DynamicDet. First, we carefully design a dynamic architecture based on the nature of the object detection task. Then, we propose an adaptive router to analyze the multi-scale information and to decide the inference route automatically. We also present a novel optimization strategy with an exiting criterion based on the detection losses for our dynamic detectors. Finally, we present a variable-speed inference strategy, which helps to realize a wide range of accuracy-speed trade-offs with only one dynamic detector. Extensive experiments conducted on the COCO benchmark demonstrate that the proposed DynamicDet achieves new state-of-the-art accuracy-speed trade-offs. For instance, with comparable accuracy, the inference speed of our dynamic detector Dy-YOLOv7-W6 surpasses YOLOv7-E6 by 12%, YOLOv7-D6 by 17%, and YOLOv7-E6E by 39%. The code is available at https://github.com/VDIGPKU/DynamicDet.
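The routing and variable-speed ideas in the abstract can be pictured as a thresholded early exit. The following is a minimal sketch, assuming the router emits one scalar difficulty score per image and that a single threshold selects the route; the abstract does not specify the actual router or exiting criterion, so `route`, `threshold_for_budget`, `average_cost`, and the cost values are hypothetical.

```python
import numpy as np

def route(difficulty_scores, threshold):
    """Return True where an image continues to the heavier second stage."""
    return np.asarray(difficulty_scores) > threshold

def threshold_for_budget(difficulty_scores, hard_fraction):
    """Pick a threshold so roughly hard_fraction of images take the heavy route."""
    return float(np.quantile(difficulty_scores, 1.0 - hard_fraction))

def average_cost(difficulty_scores, threshold, easy_cost, extra_cost):
    """Mean per-image cost: every image pays easy_cost, hard ones pay extra_cost too."""
    hard = route(difficulty_scores, threshold)
    return easy_cost + extra_cost * hard.mean()

# Hypothetical router outputs for 100 validation images.
scores = np.arange(100, dtype=float)
threshold = threshold_for_budget(scores, hard_fraction=0.25)
cost = average_cost(scores, threshold, easy_cost=1.0, extra_cost=2.0)
```

Moving the threshold trades accuracy for speed without retraining, which is the sense in which one dynamic detector can cover a range of operating points.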
