Jingjing Qian

GeoPredict: Leveraging Predictive Kinematics and 3D Gaussian Geometry for Precise VLA Manipulation

Dec 18, 2025

Abstract:Vision-Language-Action (VLA) models achieve strong generalization in robotic manipulation but remain largely reactive and 2D-centric, making them unreliable in tasks that require precise 3D reasoning. We propose GeoPredict, a geometry-aware VLA framework that augments a continuous-action policy with predictive kinematic and geometric priors. GeoPredict introduces a trajectory-level module that encodes motion history and predicts multi-step 3D keypoint trajectories of robot arms, and a predictive 3D Gaussian geometry module that forecasts workspace geometry with track-guided refinement along future keypoint trajectories. These predictive modules serve exclusively as training-time supervision through depth-based rendering, while inference requires only lightweight additional query tokens without invoking any 3D decoding. Experiments on RoboCasa Human-50, LIBERO, and real-world manipulation tasks show that GeoPredict consistently outperforms strong VLA baselines, especially in geometry-intensive and spatially demanding scenarios.

Universal Rate-Distortion-Perception Representations for Lossy Compression

Jun 18, 2021

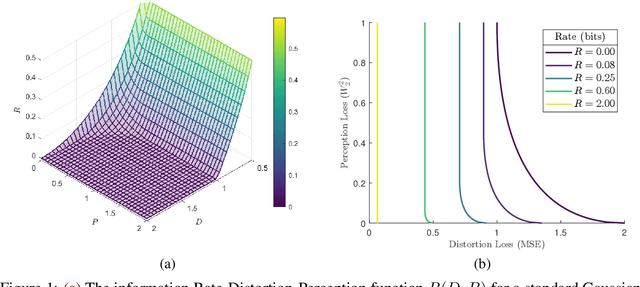

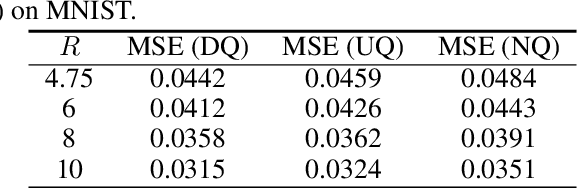

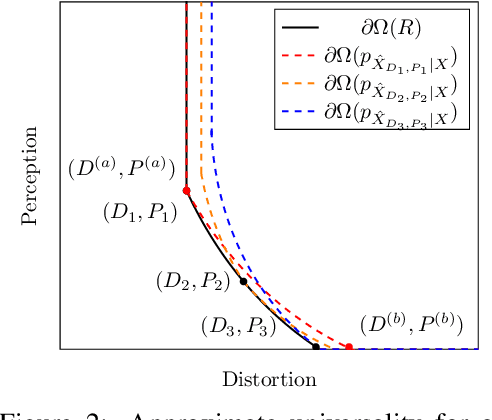

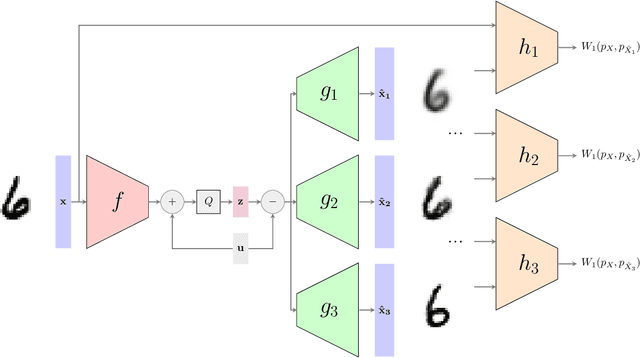

Abstract:In the context of lossy compression, Blau & Michaeli (2019) adopt a mathematical notion of perceptual quality and define the information rate-distortion-perception function, generalizing the classical rate-distortion tradeoff. We consider the notion of universal representations in which one may fix an encoder and vary the decoder to achieve any point within a collection of distortion and perception constraints. We prove that the corresponding information-theoretic universal rate-distortion-perception function is operationally achievable in an approximate sense. Under MSE distortion, we show that the entire distortion-perception tradeoff of a Gaussian source can be achieved by a single encoder of the same rate asymptotically. We then characterize the achievable distortion-perception region for a fixed representation in the case of arbitrary distributions, identify conditions under which the aforementioned results continue to hold approximately, and study the case when the rate is not fixed in advance. This motivates the study of practical constructions that are approximately universal across the RDP tradeoff, thereby alleviating the need to design a new encoder for each objective. We provide experimental results on MNIST and SVHN suggesting that on image compression tasks, the operational tradeoffs achieved by machine learning models with a fixed encoder suffer only a small penalty when compared to their variable encoder counterparts.
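For reference, the information rate-distortion-perception function of Blau & Michaeli (2019) that this abstract builds on is usually written as below. The symbols are assumptions of this note rather than notation taken from the abstract: $\Delta$ is a distortion measure (for example squared error), $d$ is a divergence between the source distribution $p_X$ and the reconstruction distribution $p_{\hat{X}}$, and $D$, $P$ are the distortion and perception budgets.

$$
R(D, P) \;=\; \min_{p_{\hat{X} \mid X}} \; I(X; \hat{X})
\quad \text{s.t.} \quad
\mathbb{E}\big[\Delta(X, \hat{X})\big] \le D,
\qquad
d\big(p_X, p_{\hat{X}}\big) \le P .
$$

Dropping the perception constraint (letting $P \to \infty$) recovers the classical rate-distortion function. The universal setting described in the abstract instead fixes one encoder and asks which $(D, P)$ pairs can be reached by changing only the decoder.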

Stripe-based and Attribute-aware Network: A Two-Branch Deep Model for Vehicle Re-identification

Oct 12, 2019

Abstract:Vehicle re-identification (Re-ID) has been attracting increasing interest in the field of computer vision due to the growing utilization of surveillance cameras in public security. However, vehicle Re-ID still suffers from a similarity challenge despite the efforts made to solve it: distinguishing different instances with nearly identical appearances. In this paper, we propose a novel two-branch stripe-based and attribute-aware deep convolutional neural network (SAN) to learn an efficient feature embedding for the vehicle Re-ID task. The two-branch neural network, consisting of a stripe-based branch and an attribute-aware branch, can adaptively extract discriminative features from the visual appearance of vehicles. Horizontal average pooling and dimension-reduced convolutional layers are inserted into the stripe-based branch to obtain part-level features. Meanwhile, the attribute-aware branch extracts the global feature under the supervision of vehicle attribute labels to separate similar vehicle identities with different attribute annotations. Finally, the part-level and global features are concatenated to form the final descriptor of the input image for vehicle Re-ID. The final descriptor can not only separate vehicles with different attributes but also distinguish vehicle identities with the same attributes. Extensive experiments on both the VehicleID and VeRi databases show that the proposed SAN method outperforms other state-of-the-art vehicle Re-ID approaches.
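The descriptor construction described in this abstract (horizontal average pooling into stripes, then concatenation with a global feature) can be sketched roughly as follows. This is a minimal NumPy illustration, not the authors' implementation: the function name `stripe_descriptor`, the stripe count of 4, and the array shapes are all hypothetical, and the dimension-reduced convolutional layers and attribute supervision mentioned in the abstract are omitted.

```python
import numpy as np


def stripe_descriptor(feature_map, global_feature, num_stripes=4):
    """Build a descriptor from part-level stripe features plus a global feature.

    feature_map:    array of shape (C, H, W), e.g. a backbone output (assumed).
    global_feature: array of shape (G,), standing in for the attribute-aware
                    branch output (assumed).
    num_stripes:    number of horizontal stripes (hypothetical default).
    """
    # Split the height axis into horizontal bands; array_split tolerates
    # heights that are not an exact multiple of num_stripes.
    bands = np.array_split(feature_map, num_stripes, axis=1)

    # Average-pool each band over its spatial extent -> one (C,) vector per stripe.
    part_features = [band.mean(axis=(1, 2)) for band in bands]

    # Concatenate the part-level features with the global feature.
    return np.concatenate(part_features + [global_feature])


if __name__ == "__main__":
    fmap = np.random.rand(8, 12, 6)   # C=8, H=12, W=6
    gfeat = np.random.rand(5)         # G=5
    print(stripe_descriptor(fmap, gfeat).shape)
```

With `C` channels, `num_stripes` stripes, and a `G`-dimensional global feature, the descriptor has length `num_stripes * C + G`.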
