Jin Seob Kim

Optical Fiber-Based Needle Shape Sensing in Real Tissue: Single Core vs. Multicore Approaches

Sep 08, 2023

Abstract: Flexible needle insertion procedures are common in minimally invasive surgeries for diagnosing and treating prostate cancer. Bevel-tip needles let physicians steer the needle during long insertions to avoid vital anatomical structures and reduce post-operative patient discomfort. To provide needle placement feedback to the physician, sensors are embedded into needles to determine the real-time 3D shape of the needle during operation without needing to visualize the needle intra-operatively. Extensive research in fiber optics has produced many bio-compatible, MRI-compatible optical shape sensors that provide real-time shape feedback, such as single-core and multicore fiber Bragg gratings. In this paper, we directly compare single-core fiber-based and multicore fiber-based needle shape-sensing using identically constructed, four-active-area sensorized bevel-tip needles inserted into phantom and ex vivo tissue on the same experimental platform. We found that for shape-sensing in phantom tissue, the two needles showed no statistically significant difference in performance ($p = 0.164 > 0.05$), but in ex vivo real tissue, the single-core fiber sensorized needle significantly outperformed the multicore fiber configuration ($p = 0.0005 < 0.05$). This paper also presents the experimental platform and method for directly comparing these optical shape sensors for the needle shape-sensing task, and provides direction, insight, and required considerations for future work in constructively optimizing sensorized needles.

Quotienting Impertinent Camera Kinematics for 3D Video Stabilization

Mar 21, 2019

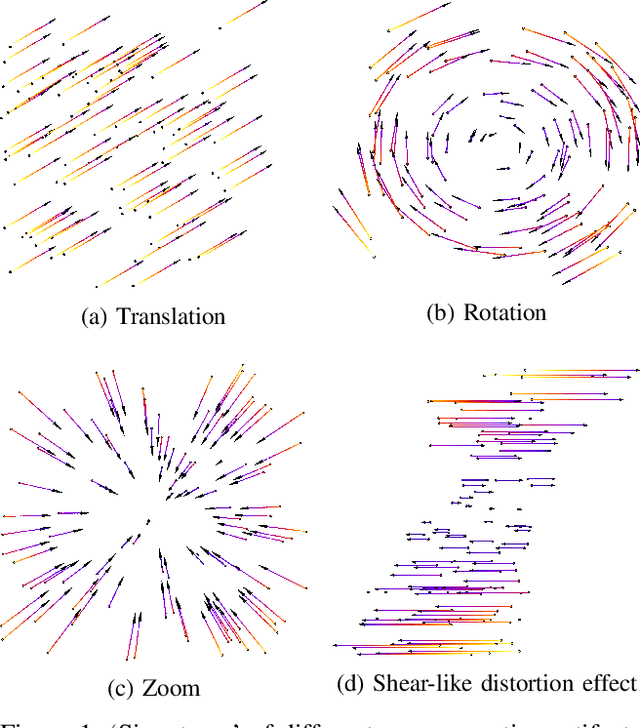

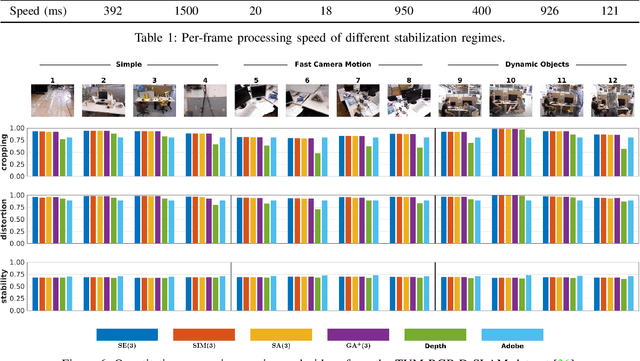

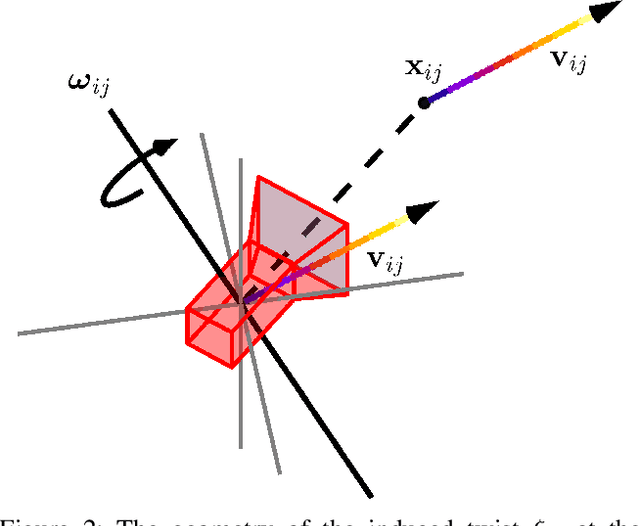

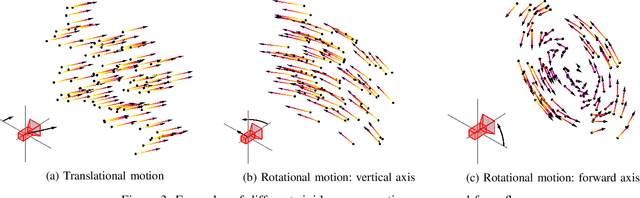

Abstract: With the recent advent of methods that allow for real-time computation, dense 3D flows have become a viable basis for fast camera motion estimation. Most importantly, dense flows are more robust than the sparse feature matching techniques used by existing 3D stabilization methods, able to better handle large camera displacements and occlusions similar to those often found in consumer videos. Here we introduce a framework for 3D video stabilization that relies on dense scene flow alone. The foundation of this approach is a novel camera motion model that allows for real-world camera poses to be recovered directly from 3D motion fields. Moreover, this model can be extended to describe certain types of non-rigid artifacts that are commonly found in videos, such as those resulting from zooms. This framework gives rise to several robust regimes that produce high-quality stabilization of the kind achieved by prior full 3D methods while avoiding the fragility typically present in feature-based approaches. As an added benefit, our framework is fast: the simplicity of our motion model and efficient flow calculations combine to enable stabilization at a high frame rate.
