Jimmy Hall

AMDet: A Tool for Mitotic Cell Detection in Histopathology Slides

Aug 08, 2021

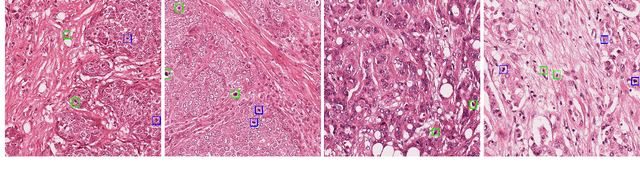

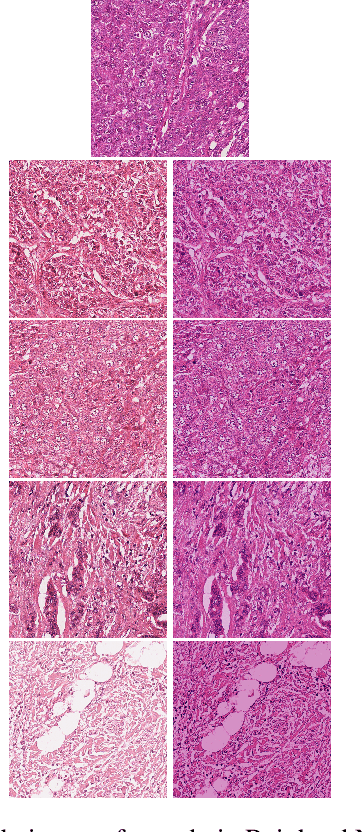

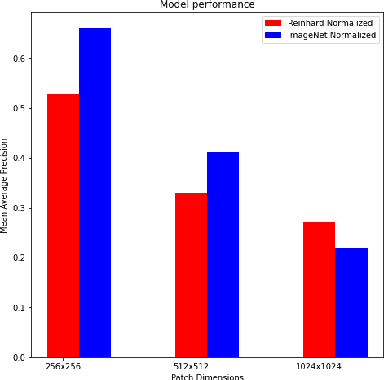

Abstract: Breast cancer is the most prevalent cancer in the world. The World Health Organization reports that the disease still affects a significant portion of the developing world, citing increased mortality rates in the majority of low- to middle-income countries. The most popular protocol pathologists use for diagnosing breast cancer is the Nottingham grading system, which grades the proliferation of tumors based on three major criteria, the most important being mitotic cell count. Pathologists evaluate mitotic cell count by subjectively and qualitatively analyzing the cells present in stained tissue slides and deciding whether each cell is mitotic or not. This process is extremely inefficient and tiring for pathologists, so an efficient, accurate, and fully automated tool to aid diagnosis is highly desirable. Fortunately, creating such a tool is made significantly easier by the AutoML tool available from Microsoft Azure; however, to the best of our knowledge, the AutoML tool has never been formally evaluated for mitotic cell detection in histopathology images. This paper evaluates the AutoML tool for this purpose and provides a first look at how the tool handles this challenging problem. All code is available at https://github.com/WaltAFWilliams/AMDet

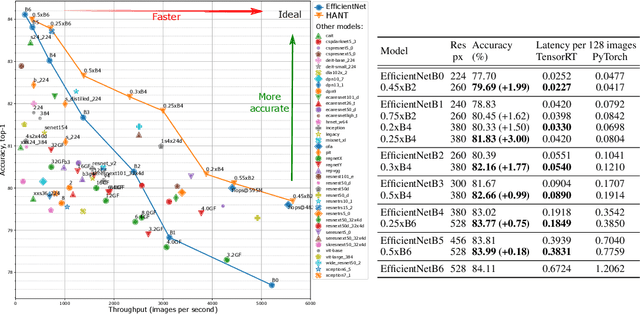

HANT: Hardware-Aware Network Transformation

Jul 12, 2021

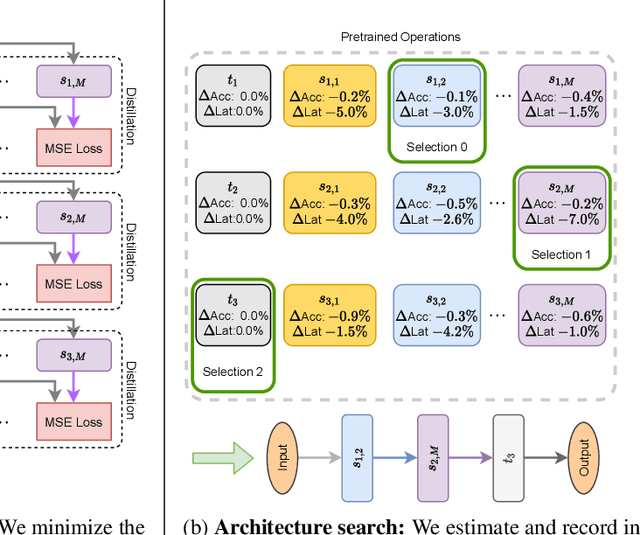

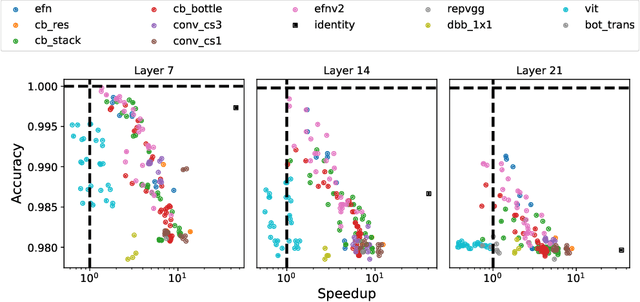

Abstract: Given a trained network, how can we accelerate it to meet efficiency needs for deployment on particular hardware? The commonly used hardware-aware network compression techniques address this question with pruning, kernel fusion, quantization, and lowering precision. However, these approaches do not change the underlying network operations. In this paper, we propose hardware-aware network transformation (HANT), which accelerates a network by replacing inefficient operations with more efficient alternatives using an approach similar to neural architecture search. HANT tackles the problem in two phases. In the first phase, a large number of alternative operations for every layer of the teacher model are trained using layer-wise feature map distillation. In the second phase, the combinatorial selection of efficient operations is relaxed to an integer optimization problem that can be solved in a few seconds. We extend HANT with kernel fusion and quantization to improve throughput even further. Our experimental results on accelerating the EfficientNet family show that HANT can accelerate them by up to 3.6x with a <0.4% drop in top-1 accuracy on the ImageNet dataset. At matched latency, HANT can accelerate EfficientNet-B4 to the same latency as EfficientNet-B1 while achieving 3% higher accuracy. We examine a large pool of operations, up to 197 per layer, and we provide insights into the selected operations and final architectures.
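The second phase described in the abstract, choosing one operation per layer, can be illustrated with a toy example. The sketch below is not the HANT implementation: it assumes each candidate operation has a distillation error and a latency (all names and numbers are hypothetical), and it uses plain exhaustive search where the abstract describes an integer optimization. It only shows the shape of the selection problem: minimize total error subject to a latency budget.

```python
from itertools import product


def select_operations(candidates, latency_budget):
    """Pick one candidate per layer, minimizing total error within a latency budget.

    candidates: one list per layer of (name, error, latency) tuples.
    Returns (names, total_error, total_latency), or None if no combination fits.
    Exhaustive search is only practical for tiny examples like this one.
    """
    best = None
    for combo in product(*candidates):
        total_latency = sum(latency for _, _, latency in combo)
        if total_latency > latency_budget:
            continue  # violates the latency constraint
        total_error = sum(error for _, error, _ in combo)
        if best is None or total_error < best[1]:
            best = ([name for name, _, _ in combo], total_error, total_latency)
    return best


# Hypothetical per-layer candidates: (name, distillation error, latency in ms).
layers = [
    [("original", 0.00, 5.0), ("cheap_a", 0.02, 3.0), ("cheap_b", 0.05, 2.0)],
    [("original", 0.00, 4.0), ("cheap_a", 0.01, 2.5)],
]

result = select_operations(layers, latency_budget=6.0)
print(result)
```

With up to 197 candidates per layer, enumerating every combination is infeasible, which is presumably why the abstract casts the selection as an integer optimization problem instead.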
