Jihoon Yang

RAMiT: Reciprocal Attention Mixing Transformer for Lightweight Image Restoration

May 22, 2023

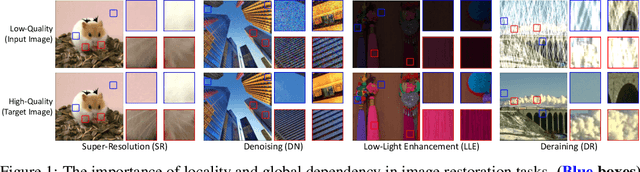

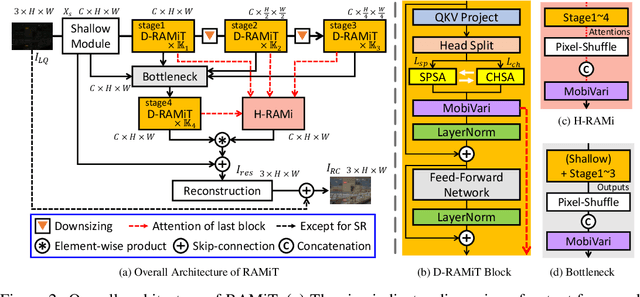

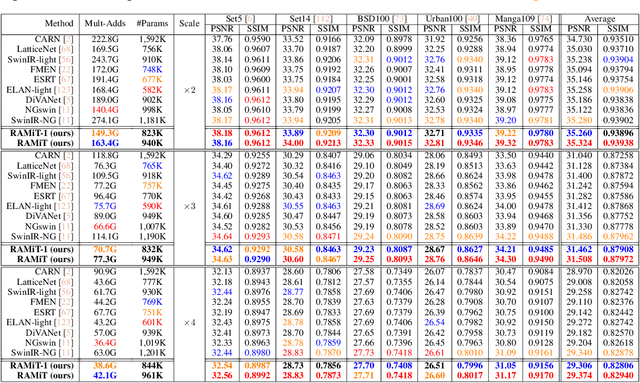

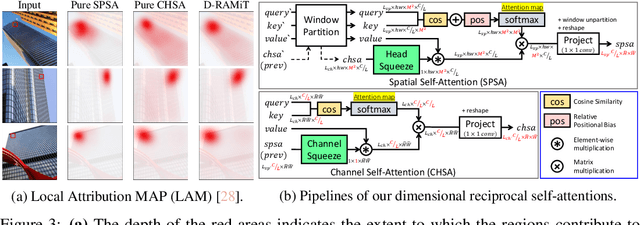

Abstract: Although many recent works have made advancements in the image restoration (IR) field, they often suffer from an excessive number of parameters. Another issue is that most Transformer-based IR methods focus only on either local or global features, leading to limited receptive fields or deficient parameter issues. To address these problems, we propose a lightweight IR network, Reciprocal Attention Mixing Transformer (RAMiT). It employs our proposed dimensional reciprocal attention mixing Transformer (D-RAMiT) blocks, which compute bi-dimensional (spatial and channel) self-attentions in parallel with different numbers of heads. The bi-dimensional attentions compensate for each other's drawbacks and are then mixed. Additionally, we introduce a hierarchical reciprocal attention mixing (H-RAMi) layer that compensates for pixel-level information losses and utilizes semantic information while maintaining an efficient hierarchical structure. Furthermore, we revisit and modify MobileNet V1 and V2 to attach efficient convolutions to our proposed components. The experimental results demonstrate that RAMiT achieves state-of-the-art performance on multiple lightweight IR tasks, including super-resolution, color denoising, grayscale denoising, low-light enhancement, and deraining. Code will be available soon.

Exploration of Lightweight Single Image Denoising with Transformers and Truly Fair Training

Apr 04, 2023

Abstract: As multimedia content often contains noise from intrinsic defects of digital devices, image denoising is an important step for high-level vision recognition tasks. Although several studies have advanced the denoising field by employing Transformers, these networks are too memory-intensive for real-world applications. Additionally, there is a lack of research on lightweight denoising (LWDN) with Transformers. To address this, this work provides seven comparative baseline Transformers for LWDN, serving as a foundation for future research. We also demonstrate that the regions selected as randomly cropped patches significantly affect denoising performance during training. While previous studies have overlooked this aspect, we aim to train our baseline Transformers in a truly fair manner. Furthermore, we conduct empirical analyses of various components to determine the key considerations for constructing LWDN Transformers. Code is available at https://github.com/rami0205/LWDN.

N-Gram in Swin Transformers for Efficient Lightweight Image Super-Resolution

Nov 21, 2022

Abstract: While some studies have proven that Swin Transformer (SwinT) with window self-attention (WSA) is suitable for single image super-resolution (SR), SwinT ignores the broad regions for reconstructing high-resolution images due to window and shift size. In addition, many deep learning SR methods suffer from intensive computations. To address these problems, we introduce the N-Gram context to the image domain for the first time in history. We define N-Gram as neighboring local windows in SwinT, which differs from text analysis that views N-Gram as consecutive characters or words. N-Grams interact with each other by sliding-WSA, expanding the regions seen to restore degraded pixels. Using the N-Gram context, we propose NGswin, an efficient SR network with SCDP bottleneck taking all outputs of the hierarchical encoder. Experimental results show that NGswin achieves competitive performance while keeping an efficient structure, compared with previous leading methods. Moreover, we also improve other SwinT-based SR methods with the N-Gram context, thereby building an enhanced model: SwinIR-NG. Our improved SwinIR-NG outperforms the current best lightweight SR approaches and establishes state-of-the-art results. Codes will be available soon.

StatAssist & GradBoost: A Study on Optimal INT8 Quantization-aware Training from Scratch

Jun 17, 2020

Abstract: This paper studies training quantization-aware training (QAT) models from scratch. QAT has been applied to lossless lower-bit conversion, especially INT8 quantization. Due to its training instability, QAT has required a full-precision (FP) pre-trained weight for fine-tuning, and its performance is bound to that of the original FP model with floating-point computations. Here, we propose critical but straightforward optimization methods that enable training from scratch: floating-point statistic assisting (StatAssist) and stochastic-gradient boosting (GradBoost). We find that QAT trained from scratch achieves performance comparable to, and often better than, its floating-point counterpart without any help from a pre-trained model, especially when the model becomes complicated. We also show that our method can even train with the minimax generation loss, which is very unstable and hence difficult to use with QAT fine-tuning. Through extensive experiments, we show that our method successfully enables QAT to train various deep models from scratch for classification, object detection, semantic segmentation, and style transfer, with comparable or often better performance than their FP baselines.

Abstractive Text Classification Using Sequence-to-convolution Neural Networks

Jun 24, 2018

Abstract: We propose a new deep neural network model and its training scheme for text classification. Our model, Sequence-to-convolution Neural Networks (Seq2CNN), consists of two blocks: a Sequential Block that summarizes input texts and a Convolution Block that receives the summary of the input and classifies it to a label. Seq2CNN is trained end-to-end to classify texts of various lengths without preprocessing inputs into a fixed length. We also present the Gradual Weight Shift (GWS) method, which stabilizes training. GWS is applied to our model's loss function. We compared our model with word-based TextCNN trained with different data preprocessing methods. We obtained significant improvement in classification accuracy over word-based TextCNN without any ensemble or data augmentation.
