Jihoon Jeong

Knowing When to Answer: Adaptive Confidence Refinement for Reliable Audio-Visual Question Answering

Feb 04, 2026

Abstract:We present a formal problem formulation for *Reliable* Audio-Visual Question Answering ($\mathcal{R}$-AVQA), in which abstaining is preferred over answering incorrectly. While recent AVQA models achieve high accuracy, their ability to identify when they are likely wrong, and to abstain accordingly, remains underexplored. To fill this gap, we explore several approaches and then propose Adaptive Confidence Refinement (ACR), a lightweight method that further improves performance on $\mathcal{R}$-AVQA. Our key insight is that the Maximum Softmax Probability (MSP) is Bayes-optimal only under strong calibration, a condition that deep neural networks, and multimodal models in particular, usually do not meet. Instead of replacing MSP, ACR keeps it as the primary confidence signal and applies input-adaptive residual corrections when MSP is deemed unreliable. ACR introduces two learned heads: i) a Residual Risk Head that predicts low-magnitude correctness residuals that MSP does not capture, and ii) a Confidence Gating Head that determines how trustworthy MSP is. Our experiments and theoretical analysis show that ACR consistently outperforms existing methods in in-distribution, out-of-distribution, and data-bias settings across three different AVQA architectures, establishing a solid foundation for the $\mathcal{R}$-AVQA task. The code and checkpoints will be available upon acceptance at https://github.com/PhuTran1005/R-AVQA.
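The abstract does not give ACR's equations, so the following is only a minimal sketch of the general idea: abstain when a confidence score falls below a threshold, with MSP as the base score and a gated residual correction on top. The combination rule `msp + (1 - gate) * residual`, the function names, and the use of `-1` to mark abstention are all assumptions made for illustration, not the authors' implementation. In practice the `residual` and `gate` values would come from the two learned heads; here they are passed in as plain arrays.

```python
import numpy as np

ABSTAIN = -1  # hypothetical marker for "no answer given"


def softmax(logits):
    """Numerically stable softmax over the last axis."""
    shifted = logits - logits.max(axis=-1, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=-1, keepdims=True)


def msp(logits):
    """Maximum Softmax Probability: the top class probability per sample."""
    return softmax(logits).max(axis=-1)


def refined_confidence(msp_conf, residual, gate):
    """Assumed combination rule (not taken from the paper).

    gate near 1 means MSP is trusted and the residual is ignored;
    gate near 0 means the residual correction is applied in full.
    """
    return np.clip(msp_conf + (1.0 - gate) * residual, 0.0, 1.0)


def selective_predict(logits, confidence, threshold):
    """Answer with the argmax class, or abstain when confidence is too low."""
    preds = logits.argmax(axis=-1)
    return np.where(confidence >= threshold, preds, ABSTAIN)


def coverage_and_risk(answers, labels):
    """Coverage: fraction answered. Risk: error rate among answered samples."""
    answered = answers != ABSTAIN
    coverage = float(answered.mean())
    if not answered.any():
        return coverage, 0.0
    risk = float((answers[answered] != labels[answered]).mean())
    return coverage, risk


logits = np.array([[4.0, 0.0, 0.0],
                   [1.0, 0.9, 0.8],
                   [0.0, 3.0, 0.0]])
labels = np.array([0, 2, 1])

base = msp(logits)
# With gate = 1 everywhere, the refined score reduces to plain MSP.
conf = refined_confidence(base, residual=np.zeros(3), gate=np.ones(3))
answers = selective_predict(logits, conf, threshold=0.5)
coverage, risk = coverage_and_risk(answers, labels)
```

In this toy batch the second sample has nearly uniform logits, so its confidence falls below the threshold and the model abstains on it. Raising the threshold trades coverage for lower risk, which is the trade-off a reliable question answering system has to manage.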

Time-Efficient and Identity-Consistent Virtual Try-On Using A Variant of Altered Diffusion Models

Mar 12, 2024

Abstract:This study discusses the critical issues of Virtual Try-On in contemporary e-commerce and the prospective metaverse, emphasizing the challenge of preserving intricate details of both the clothes and the target person across scenarios, such as clothing texture and identity characteristics like tattoos or accessories. Beyond the fidelity of the synthesized images, the efficiency of the synthesis process is a significant hurdle. Existing approaches are reviewed, highlighting their limitations and unresolved aspects, e.g., omission of identity information, uncontrollable artifacts, and low synthesis speed. The study then proposes a novel diffusion-based solution that addresses garment texture preservation and user identity retention during virtual try-on. The proposed network comprises two primary modules: a warping module that aligns clothing with individual features, and a try-on module that refines the attire and generates missing parts, integrated with a mask-aware post-processing technique that preserves the individual's identity. The method surpasses the state of the art in inference speed by nearly 20 times, with superior fidelity in qualitative assessments. Quantitative evaluations confirm performance comparable to the recent SOTA method on the VITON-HD and Dresscode datasets.

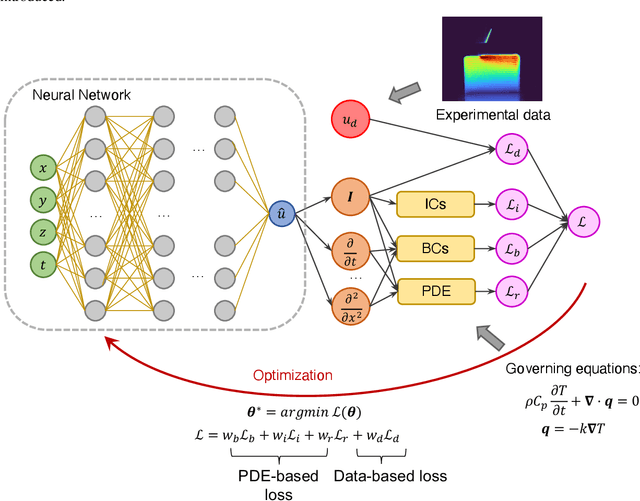

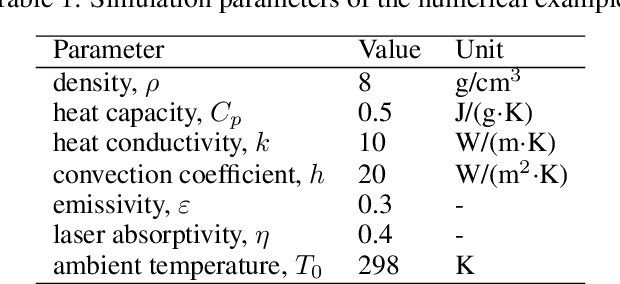

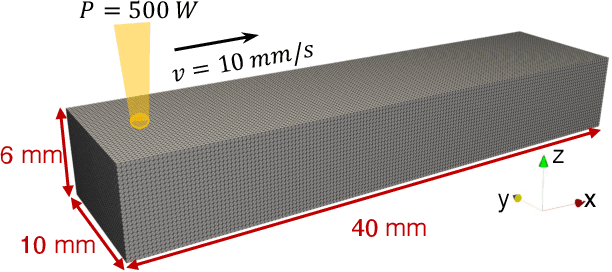

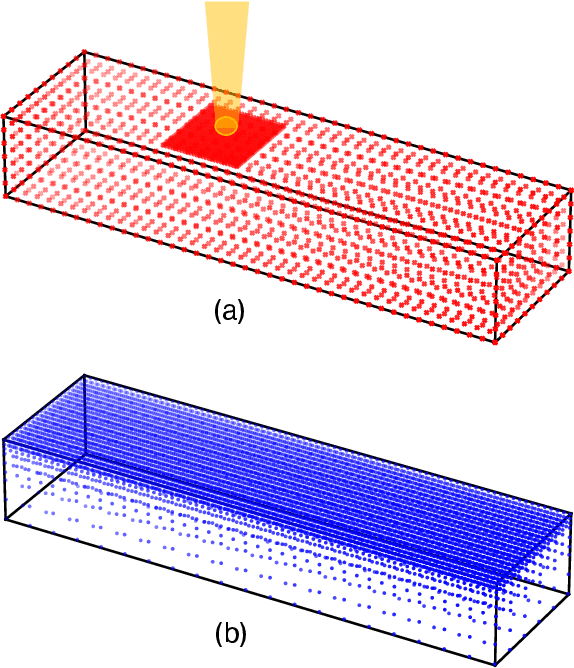

Hybrid full-field thermal characterization of additive manufacturing processes using physics-informed neural networks with data

Jun 15, 2022

Abstract:Understanding the thermal behavior of additive manufacturing (AM) processes is crucial for enhancing quality control and enabling customized process design. Most purely physics-based computational models incur high computational costs and are thus not suitable for online control and iterative design applications. Data-driven models that take advantage of recently developed computational tools can serve as more efficient surrogates, but they are usually trained on large amounts of simulation data and often fail to make effective use of small but high-quality experimental data. In this work, we developed a hybrid physics-based, data-driven thermal modeling approach for AM processes using physics-informed neural networks. Specifically, partially observed temperature data measured by an infrared camera is combined with physical laws to predict the full-field temperature history and to discover unknown material and process parameters. Numerical and experimental examples demonstrate the effect of adding auxiliary training data and of transfer learning on training efficiency and prediction accuracy, as well as the ability to identify unknown parameters from partially observed data. The results show that the hybrid thermal model can effectively identify unknown parameters and capture the full-field temperature accurately, and thus has the potential to be used in iterative process design and real-time process control of AM.
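The abstract does not state the governing equation or the loss, so the following is only a generic sketch of how a physics-informed network can combine sparse temperature measurements with a physical law. It assumes standard transient heat conduction; the symbols $T_\theta$ (network prediction), $\rho$, $c_p$, $k$, $Q$, the weight $\lambda$, and the point counts $N_d$, $N_r$ are all introduced here for illustration and are not taken from the paper.

$$
\mathcal{L}(\theta, k) =
\frac{1}{N_d} \sum_{i=1}^{N_d} \left| T_\theta(x_i, t_i) - T_i^{\mathrm{obs}} \right|^2
+ \frac{\lambda}{N_r} \sum_{j=1}^{N_r} \left| \rho c_p \frac{\partial T_\theta}{\partial t} - \nabla \cdot \left( k \nabla T_\theta \right) - Q \right|^2_{(x_j, t_j)}
$$

The first term fits the partially observed temperatures, and the second penalizes violation of the heat equation at collocation points across the full field. Treating an unknown parameter such as $k$ as trainable alongside $\theta$ is one common way such a formulation can recover material or process parameters. Boundary and initial condition terms are omitted here for brevity.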

Convolutional Attention Networks for Multimodal Emotion Recognition from Speech and Text Data

May 17, 2018

Abstract:Emotion recognition has become a popular topic of interest, especially in the field of human-computer interaction. Previous works involve unimodal analysis of emotion, while recent efforts focus on multimodal emotion recognition from vision and speech. In this paper, we propose a new method for learning the hidden representations between just speech and text data using convolutional attention networks. Compared to a shallow model that simply concatenates feature vectors, the proposed attention model performs much better at classifying emotion from the speech and text data contained in the CMU-MOSEI dataset.
