Jiayan Cao

LanePtrNet: Revisiting Lane Detection as Point Voting and Grouping on Curves

Mar 08, 2024

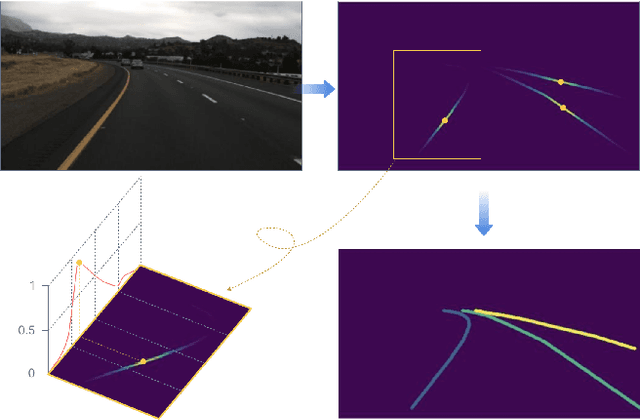

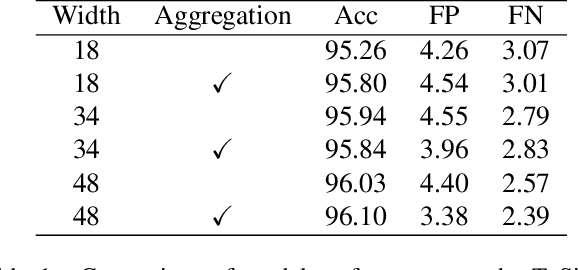

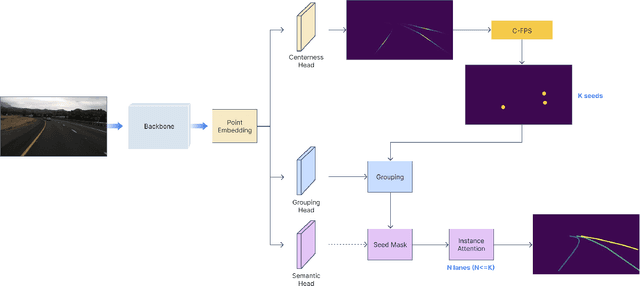

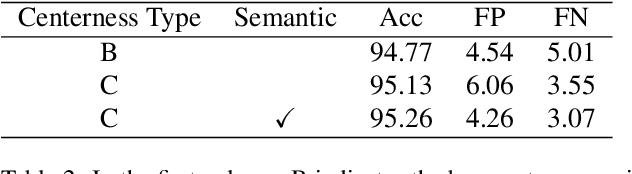

Abstract: Lane detection plays a critical role in autonomous driving. Prevailing methods generally adopt basic concepts (anchors, key points, etc.) from object detection and segmentation tasks, but these approaches require manual adjustments for curved objects, involve exhaustive searches over predefined anchors, require complex post-processing steps, and may lack flexibility when applied to real-world scenarios. In this paper, we propose a novel approach, LanePtrNet, which treats lane detection as a process of point voting and grouping on ordered sets. Our method takes backbone features as input and predicts a curve-aware centerness, which represents each lane as a point and assigns the most probable center point to it. A novel point sampling method is proposed to generate a set of candidate points based on the votes received. By leveraging features from local neighborhoods and cross-instance attention scores, we design a grouping module that further performs lane-wise clustering between neighboring and seeding points. Furthermore, our method can accommodate a point-based framework (the PointNet++ series, etc.) as an alternative to the backbone. This flexibility enables effortless extension to 3D lane detection tasks. We conduct comprehensive experiments to validate the effectiveness of our proposed approach, demonstrating its superior performance.
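The vote, sample, and group pipeline described in the abstract can be illustrated with a toy sketch. This is not the LanePtrNet implementation: the learned curve-aware centerness is replaced by a given `scores` array, the point sampling step by greedy score-ordered suppression, and the attention-based grouping by nearest-seed assignment in an embedding space. The names `sample_seeds`, `group_points`, and `min_dist` are hypothetical and chosen only for illustration.

```python
import numpy as np

def sample_seeds(features, scores, min_dist):
    """Greedy seed selection (assumed stand-in for the paper's sampling).

    Visit points from highest to lowest score and keep a point as a seed
    only if it is at least `min_dist` away from every seed kept so far.
    """
    order = np.argsort(-scores)
    seeds = []
    for i in order:
        if all(np.linalg.norm(features[i] - features[s]) >= min_dist for s in seeds):
            seeds.append(int(i))
    return seeds

def group_points(features, seeds):
    """Assign every point to its nearest seed in feature space."""
    diffs = features[:, None, :] - features[seeds][None, :, :]
    dists = np.linalg.norm(diffs, axis=-1)   # shape: (num_points, num_seeds)
    return dists.argmin(axis=1)

# Toy data: six points with 1-D embeddings that form two well-separated lanes.
features = np.array([[0.0], [0.1], [0.2], [5.0], [5.1], [4.9]])
scores = np.array([0.2, 0.9, 0.3, 0.4, 0.8, 0.1])  # pretend vote counts

seeds = sample_seeds(features, scores, min_dist=1.0)
labels = group_points(features, seeds)
```

In this toy setting each lane yields exactly one seed (its highest-scoring point), and every other point joins the lane of the closest seed. The real method operates on learned features and ordered point sets, so the sketch only conveys the control flow.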

Voxel-FPN: multi-scale voxel feature aggregation in 3D object detection from point clouds

Jul 16, 2019

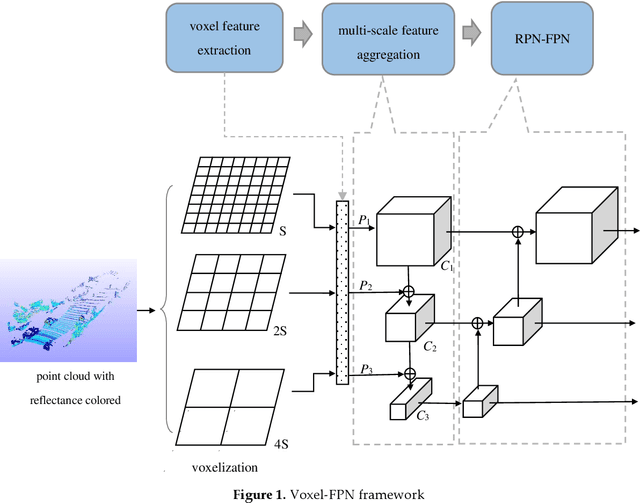

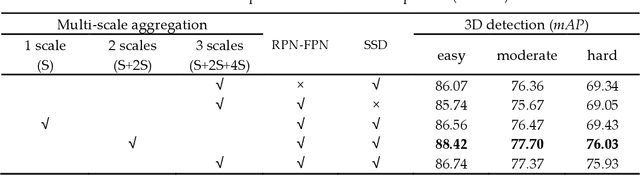

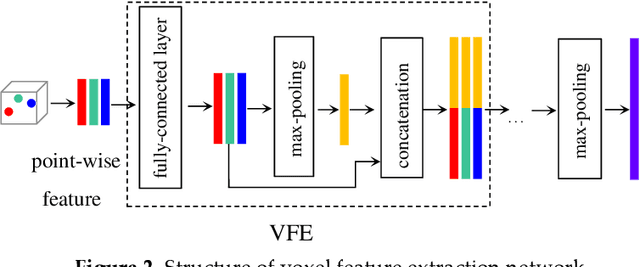

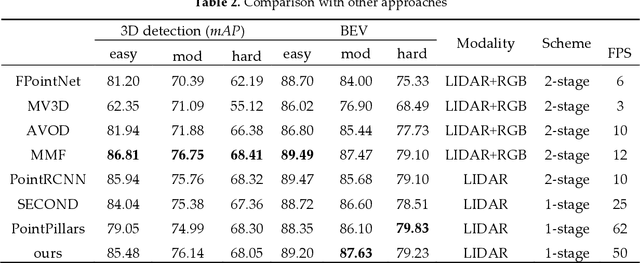

Abstract: Object detection in point cloud data is one of the key components in computer vision systems, especially for autonomous driving applications. In this work, we present Voxel-FPN, a novel one-stage 3D object detector that uses only raw data from LIDAR sensors. The core framework consists of an encoder network and a corresponding decoder, followed by a region proposal network. The encoder extracts multi-scale voxel information in a bottom-up manner, while the decoder fuses multiple feature maps from various scales in a top-down way. Extensive experiments show that the proposed method performs better at extracting features from point data and demonstrates its superiority over some baselines on the challenging KITTI-3D benchmark, obtaining good performance in both speed and accuracy in real-world scenarios.
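The bottom-up and top-down data flow described in the abstract can be sketched in a few lines of NumPy. This is a simplified illustration, not the Voxel-FPN network: per-cell point counts on a bird's-eye-view grid stand in for learned voxel features, and nearest-neighbour upsampling plus addition stands in for the learned decoder. The function names `voxel_counts` and `top_down_fuse`, the grid range, and the voxel sizes are all assumptions made for this example.

```python
import numpy as np

def voxel_counts(points, grid_range, voxel_size):
    """Count points per bird's-eye-view cell (stand-in for learned voxel features)."""
    (x_min, x_max), (y_min, y_max) = grid_range
    nx = int(round((x_max - x_min) / voxel_size))
    ny = int(round((y_max - y_min) / voxel_size))
    ix = np.floor((points[:, 0] - x_min) / voxel_size).astype(int)
    iy = np.floor((points[:, 1] - y_min) / voxel_size).astype(int)
    keep = (ix >= 0) & (ix < nx) & (iy >= 0) & (iy < ny)
    grid = np.zeros((nx, ny), dtype=np.float32)
    np.add.at(grid, (ix[keep], iy[keep]), 1.0)  # accumulates repeated indices
    return grid

def top_down_fuse(maps):
    """Fuse maps ordered fine -> coarse, each half the resolution of the previous.

    Starting from the coarsest map, upsample by 2 (nearest neighbour)
    and add the result to the next finer map.
    """
    fused = [maps[-1]]
    for finer in reversed(maps[:-1]):
        up = fused[0].repeat(2, axis=0).repeat(2, axis=1)
        fused.insert(0, finer + up)
    return fused

rng = np.random.default_rng(0)
points = rng.uniform(0.0, 8.0, size=(100, 3))   # toy point cloud (x, y, z)
grid_range = ((0.0, 8.0), (0.0, 8.0))

# Bottom-up: voxelize at three scales (8x8, 4x4, 2x2 grids).
maps = [voxel_counts(points, grid_range, s) for s in (1.0, 2.0, 4.0)]
# Top-down: propagate coarse context into the finer maps.
fused = top_down_fuse(maps)
```

After fusion, each cell of the finest map carries its own count plus the counts of the coarser cells that contain it, which is the intuition behind combining multi-scale context before a detection head.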
