Jianqing Wu

DynamicLight: Dynamically Tuning Traffic Signal Duration with DRL

Nov 02, 2022

Abstract: Deep reinforcement learning (DRL) is becoming increasingly popular for implementing traffic signal control (TSC). However, most existing DRL methods employ fixed control strategies, which makes traffic signal phase duration less flexible. Additionally, the trend toward more complex DRL models makes real-life deployment more challenging. To address these two challenges, we first propose a two-stage DRL framework, named DynamicLight, which uses Max Queue-Length to select the proper phase and employs a deep Q-learning network to determine the duration of the corresponding phase. Based on the design of DynamicLight, we also introduce two variants: (1) DynamicLight-Lite, which addresses the first challenge by using only 19 parameters to achieve dynamic phase duration settings; and (2) DynamicLight-Cycle, which tackles the second challenge by actuating a set of phases in a fixed cyclical order to implement flexible phase duration in the respective cyclical phase structure. Numerical experiments are conducted using both real-world and synthetic datasets, covering the four most commonly adopted traffic signal intersections in real life. Experimental results show that: (1) DynamicLight learns to determine the phase duration satisfactorily and achieves a new state of the art, with improvements of up to 6% over the baselines in terms of adjusted average travel time; (2) DynamicLight-Lite matches or outperforms most baseline methods with only 19 parameters; and (3) DynamicLight-Cycle demonstrates high performance for current TSC systems without substantial modification in an actual deployment. Our code is released on GitHub.
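The two-stage idea in this abstract (pick a phase by Max Queue-Length, then pick its duration with a learned Q-function) can be illustrated with a minimal Python sketch. Everything concrete here is an assumption for illustration and is not taken from the paper: the candidate duration set `DURATIONS`, the lane and phase names, the state encoding, and `toy_q_values`, which is only a hand-written stand-in for a trained deep Q-network.

```python
from typing import Callable, Dict, List, Sequence

# Hypothetical candidate durations in seconds; the abstract does not list the action set.
DURATIONS = (10, 15, 20, 25, 30)


def select_phase(queues: Dict[str, int], phase_lanes: Dict[str, List[str]]) -> str:
    """Stage 1: pick the phase whose served lanes hold the most queued vehicles."""
    return max(
        phase_lanes,
        key=lambda phase: sum(queues.get(lane, 0) for lane in phase_lanes[phase]),
    )


def select_duration(
    phase: str,
    queues: Dict[str, int],
    phase_lanes: Dict[str, List[str]],
    q_values: Callable[[Sequence[float]], Sequence[float]],
) -> int:
    """Stage 2: greedy choice over candidate durations using a Q-value function."""
    # Assumed state encoding: queue lengths of the lanes served by the chosen phase.
    state = [float(queues.get(lane, 0)) for lane in phase_lanes[phase]]
    scores = q_values(state)
    best = max(range(len(DURATIONS)), key=lambda i: scores[i])
    return DURATIONS[best]


def toy_q_values(state: Sequence[float]) -> List[float]:
    """Stand-in for a trained Q-network: favors roughly 2 s of green per queued vehicle."""
    target = 2.0 * sum(state)
    return [-abs(duration - target) for duration in DURATIONS]


if __name__ == "__main__":
    phase_lanes = {"NS": ["n", "s"], "EW": ["e", "w"]}
    queues = {"n": 3, "s": 4, "e": 6, "w": 5}
    phase = select_phase(queues, phase_lanes)
    print(phase, select_duration(phase, queues, phase_lanes, toy_q_values))
```

In a real controller the stand-in would be replaced by a trained network, and the state would likely carry more than raw queue lengths; the sketch only shows how the phase choice and the duration choice are decoupled.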

Expression is enough: Improving traffic signal control with advanced traffic state representation

Dec 19, 2021

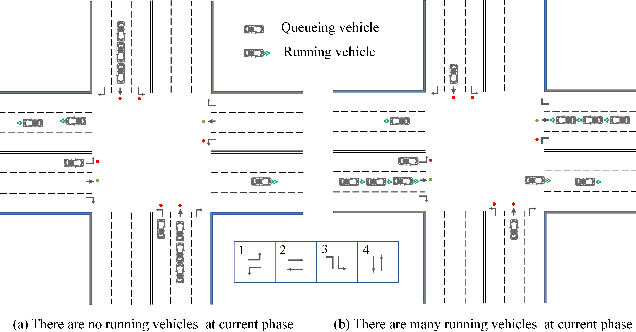

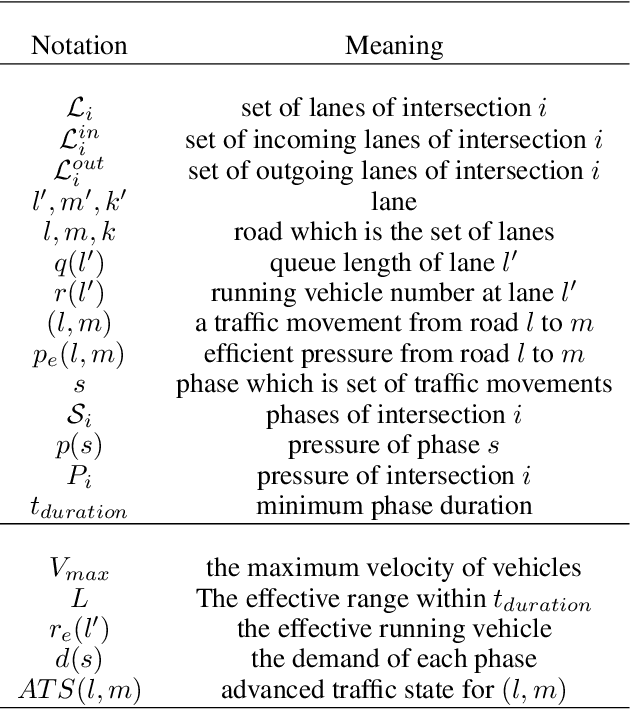

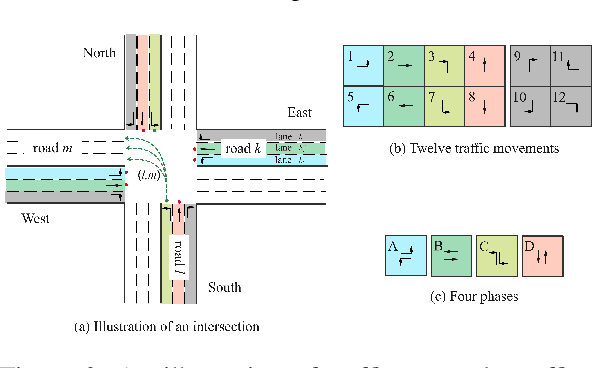

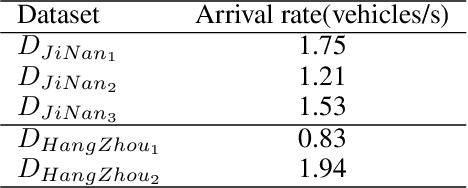

Abstract: Recently, finding fundamental properties for traffic state representation has become more critical than designing complex algorithms for traffic signal control (TSC). In this paper, we (1) present a novel, flexible, and straightforward method, advanced max pressure (Advanced-MP), which takes both running and queueing vehicles into consideration to decide whether to change the current phase; (2) design a novel traffic movement representation, namely advanced traffic state (ATS), from the efficient pressure and effective running vehicles of Advanced-MP; (3) develop an RL-based algorithm template, Advanced-XLight, by combining ATS with current RL approaches, and generate two RL algorithms, "Advanced-MPLight" and "Advanced-CoLight". Comprehensive experiments on multiple real-world datasets show that: (1) Advanced-MP outperforms baseline methods and is efficient and reliable for deployment; (2) Advanced-MPLight and Advanced-CoLight can achieve a new state of the art. Our code is released on GitHub.

Efficient Pressure: Improving efficiency for signalized intersections

Dec 04, 2021

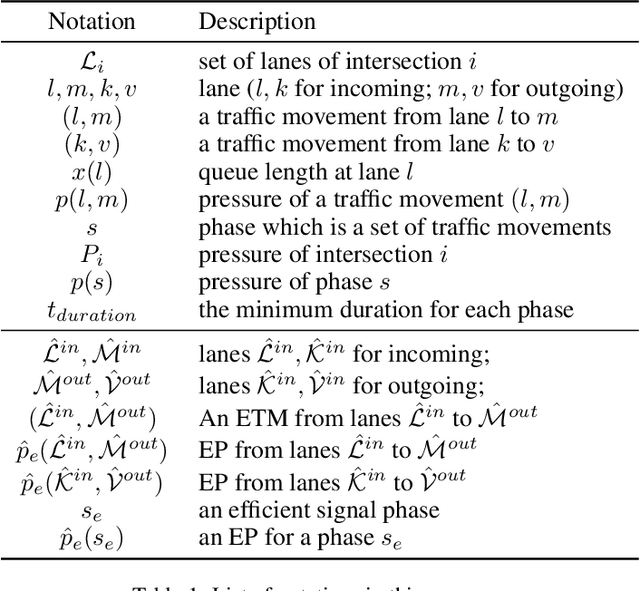

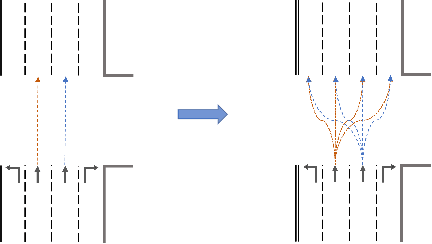

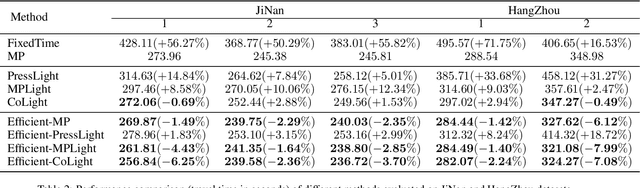

Abstract: Since conventional approaches cannot adapt to dynamic traffic conditions, reinforcement learning (RL) has attracted growing attention as a way to solve the traffic signal control (TSC) problem. However, existing RL-based methods are rarely deployed, because they are neither cost-effective in terms of computing resources nor more robust than traditional approaches. This raises a critical research question: how can an adaptive TSC controller be constructed with less training and reduced complexity using an RL-based approach? To address this question, in this paper, we (1) innovatively specify the traffic movement representation as a simple but efficient pressure of vehicle queues in a traffic network, namely efficient pressure (EP); (2) build a traffic signal settings protocol, including phase duration, signal phase number, and EP for TSC; (3) design a TSC approach based on the traditional max pressure (MP) approach, namely efficient max pressure (Efficient-MP), using the EP to capture the traffic state; and (4) develop a general RL-based TSC algorithm template, efficient XLight (Efficient-XLight), under EP. Through comprehensive experiments on multiple real-world datasets under our traffic signal settings protocol for TSC, we demonstrate that efficient pressure is complementary to traditional and RL-based modeling for designing better TSC methods. Our code is released on GitHub.
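A pressure-based controller of the kind described in this abstract can be sketched in a few lines of Python. The abstract does not give the formula for efficient pressure, so the definition used here is an assumption for illustration only: the pressure of a traffic movement is taken as the mean queue length on its upstream lanes minus the mean queue length on its downstream lanes. The function names and the movement and phase labels are likewise hypothetical.

```python
from statistics import mean
from typing import Dict, List, Sequence


def movement_pressure(upstream_queues: Sequence[float], downstream_queues: Sequence[float]) -> float:
    """Assumed definition: mean upstream queue minus mean downstream queue."""
    return mean(upstream_queues) - mean(downstream_queues)


def max_pressure_phase(
    phase_movements: Dict[str, List[str]],
    pressures: Dict[str, float],
) -> str:
    """Max-pressure rule: actuate the phase whose movements have the largest total pressure."""
    return max(
        phase_movements,
        key=lambda phase: sum(pressures[m] for m in phase_movements[phase]),
    )


if __name__ == "__main__":
    # Hypothetical two-phase intersection with one through movement per approach.
    pressures = {
        "north_through": movement_pressure([4, 6], [1, 3]),
        "south_through": movement_pressure([2, 2], [1, 1]),
        "east_through": movement_pressure([1, 1], [0, 2]),
        "west_through": movement_pressure([3, 5], [4, 4]),
    }
    phase_movements = {
        "NS": ["north_through", "south_through"],
        "EW": ["east_through", "west_through"],
    }
    print(max_pressure_phase(phase_movements, pressures))
```

Averaging over lanes, rather than summing, keeps movements with different lane counts comparable; whether that matches the paper's exact formulation should be checked against the released code.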
