Jianli Wei

A GIS Aided Approach for Geolocalizing an Unmanned Aerial System Using Deep Learning

Aug 25, 2022

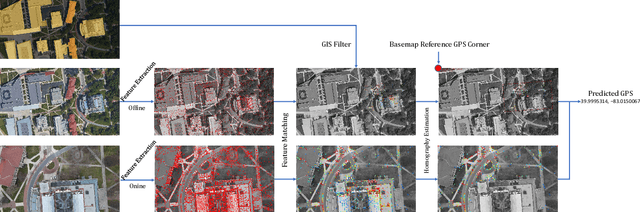

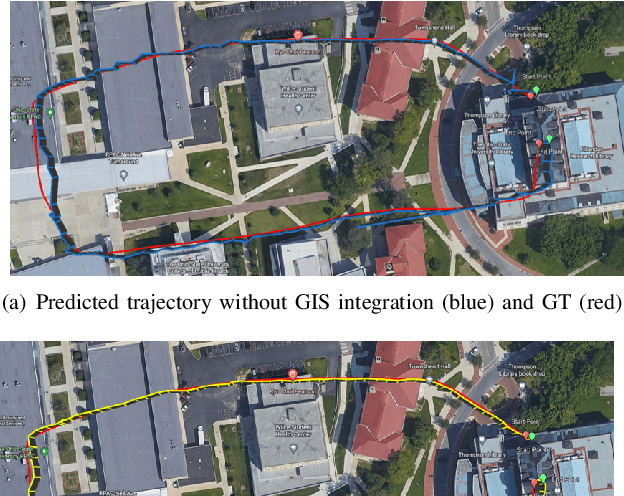

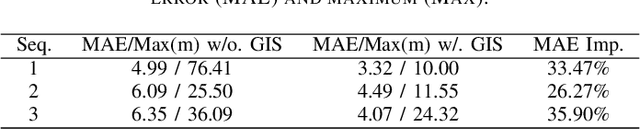

Abstract: The Global Positioning System (GPS) has become a part of our daily life with the primary goal of providing geopositioning service. For an unmanned aerial system (UAS), geolocalization is essential and is achieved using an Inertial Navigation System (INS) with GPS at its heart. Without geopositioning service, a UAS is unable to fly to its destination or come back home. Unfortunately, GPS signals can be jammed and suffer from multipath problems in urban canyons. Our goal is to propose an alternative approach to geolocalize a UAS when the GPS signal is degraded or denied. Considering that a UAS has a downward-looking camera on its platform that can acquire real-time images as the platform flies, we apply modern deep learning techniques to achieve geolocalization. In particular, we perform image matching to establish latent feature conjugates between UAS-acquired imagery and satellite orthophotos. A typical application of feature matching suffers from high-rise buildings and new constructions in the field, which introduce uncertainties into homography estimation and hence result in poor geolocalization performance. Instead, we extract GIS information from OpenStreetMap (OSM) to semantically segment matched features into building and terrain classes. The GIS mask works as a filter for selecting semantically matched features, which strengthens the coplanarity condition and improves UAS geolocalization accuracy. Once the paper is published, our code will be publicly available at https://github.com/OSUPCVLab/UbihereDrone2021.
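The mask-based filtering described in this abstract can be illustrated with a minimal sketch. It is not the authors' implementation: the function name `filter_matches_by_mask`, the boolean mask layout, and the choice to keep only terrain-class matches are all assumptions made for illustration. The idea is that matches falling on building pixels are dropped so the remaining points lie closer to a single ground plane before a homography is estimated.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]
# One match: (point in the UAS image, point in the orthophoto), both as (x, y).
Match = Tuple[Point, Point]


def filter_matches_by_mask(
    matches: Sequence[Match],
    building_mask: Sequence[Sequence[bool]],
) -> List[Match]:
    """Keep matches whose orthophoto point lies on a terrain (non-building) pixel.

    building_mask[row][col] is True where the GIS layer marks a building.
    This layout is a hypothetical choice, not taken from the paper.
    """
    height = len(building_mask)
    width = len(building_mask[0]) if height else 0
    kept: List[Match] = []
    for uas_pt, sat_pt in matches:
        col, row = int(round(sat_pt[0])), int(round(sat_pt[1]))
        # Matches outside the mask extent cannot be classified, so drop them.
        if not (0 <= row < height and 0 <= col < width):
            continue
        if not building_mask[row][col]:
            kept.append((uas_pt, sat_pt))
    return kept


# Toy example: a 3x3 mask with a single building pixel in the center.
toy_mask = [
    [False, False, False],
    [False, True, False],
    [False, False, False],
]
toy_matches = [
    ((10.0, 10.0), (1.0, 1.0)),  # lands on the building pixel
    ((20.0, 5.0), (0.0, 2.0)),   # lands on terrain
    ((3.0, 3.0), (5.0, 5.0)),    # outside the mask
]
terrain_matches = filter_matches_by_mask(toy_matches, toy_mask)
```

The surviving matches would then be passed to a robust homography estimator; that step is omitted here because the abstract does not specify which estimator is used.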

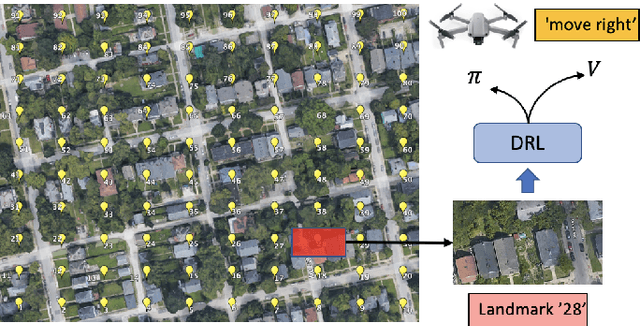

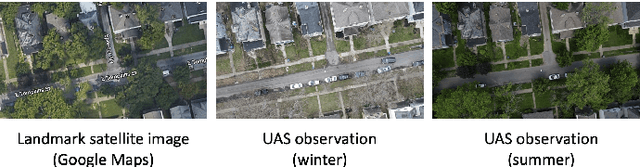

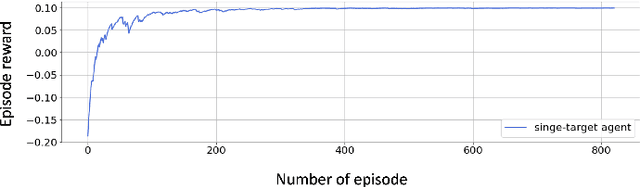

UAS Navigation in the Real World Using Visual Observation

Aug 25, 2022

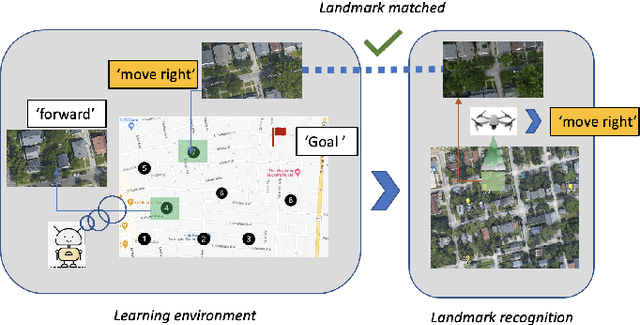

Abstract: This paper presents a novel end-to-end Unmanned Aerial System (UAS) navigation approach for long-range visual navigation in the real world. Inspired by the dual-process nature of human visual navigation, namely environment understanding and landmark recognition, we formulate the UAS navigation task as the same two phases. Our system combines reinforcement learning (RL) and image matching approaches. First, the agent learns the navigation policy using RL in the specified environment. To achieve this, we design an interactive UASNAV environment for the training process. Once the agent has learned the navigation policy, that is, has "familiarized itself with the environment", we let the UAS fly in the real world, recognize landmarks using an image matching method, and take actions according to the learned policy. During the navigation process, the UAS is equipped with a single camera as its only visual sensor. We demonstrate that the UAS can learn to navigate to a destination hundreds of meters away from the starting point along the shortest path in a real-world scenario.

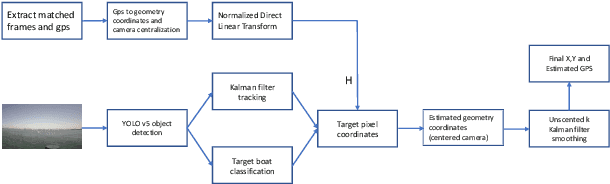

DeepTracks: Geopositioning Maritime Vehicles in Video Acquired from a Moving Platform

Sep 02, 2021

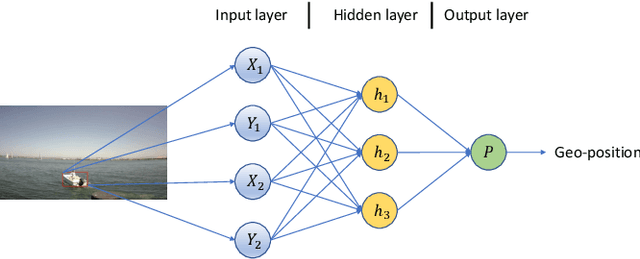

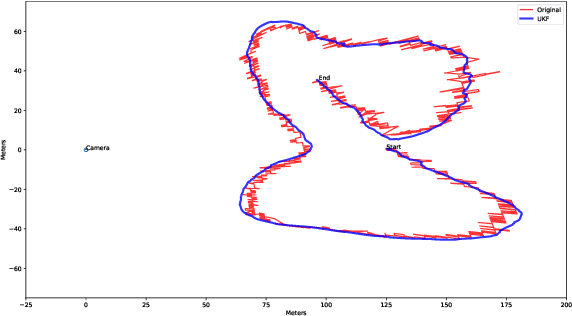

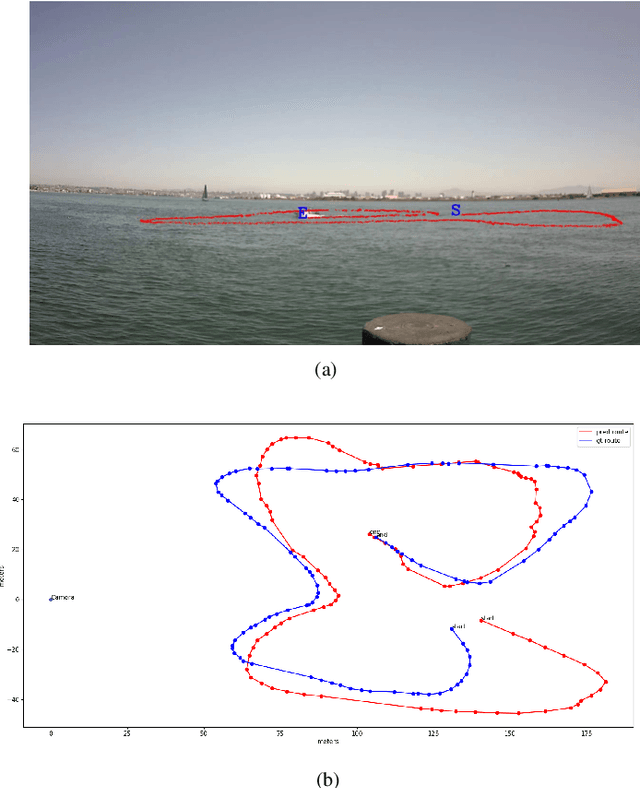

Abstract: Geopositioning and tracking a moving boat at sea is a very challenging problem, requiring boat detection, matching, and estimation of its GPS location from imagery with no common features. The problem can be stated as follows: given imagery from a camera mounted on a moving platform with known GPS location as the only valid sensor, we predict the geoposition of a target boat visible in the images. Our solution uses recent ML algorithms, the camera-scene geometry, and Bayesian filtering. The proposed pipeline first detects and tracks the target boat's location in the image using a tracking-by-detection strategy. This image location is then converted to local sea coordinates referenced to the camera GPS location using plane projective geometry. Finally, the target boat's local coordinates are transformed to global GPS coordinates to estimate the geoposition. To achieve a smooth geotrajectory, we apply an unscented Kalman filter (UKF), which implicitly overcomes small detection errors in the early stages of the pipeline. We tested the performance of our approach using GPS ground truth and show the accuracy and speed of the estimated geopositions. Our code is publicly available at https://github.com/JianliWei1995/AI-Track-at-Sea.
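The two geometric steps in this abstract, image point to local sea plane and local coordinates to GPS, can be sketched as follows. This is only an illustration under stated assumptions, not the released code: the function names `apply_homography` and `local_to_gps` are hypothetical, the local frame is assumed to be east/north offsets in meters from the camera, the homography values are made up, and the local-to-GPS step uses a spherical small-offset approximation that is reasonable only for short ranges.

```python
import math
from typing import Sequence, Tuple

# WGS84 equatorial radius, used here as a spherical approximation.
EARTH_RADIUS_M = 6378137.0


def apply_homography(
    H: Sequence[Sequence[float]], u: float, v: float
) -> Tuple[float, float]:
    """Map an image point (u, v) to plane coordinates with a 3x3 homography."""
    x = H[0][0] * u + H[0][1] * v + H[0][2]
    y = H[1][0] * u + H[1][1] * v + H[1][2]
    w = H[2][0] * u + H[2][1] * v + H[2][2]
    if abs(w) < 1e-12:
        raise ValueError("point maps to infinity under this homography")
    return x / w, y / w


def local_to_gps(
    cam_lat_deg: float, cam_lon_deg: float, east_m: float, north_m: float
) -> Tuple[float, float]:
    """Convert east/north offsets (meters) from the camera to latitude/longitude."""
    dlat = math.degrees(north_m / EARTH_RADIUS_M)
    dlon = math.degrees(
        east_m / (EARTH_RADIUS_M * math.cos(math.radians(cam_lat_deg)))
    )
    return cam_lat_deg + dlat, cam_lon_deg + dlon


# Hypothetical homography: scales pixels by 2 and shifts east by 1 meter.
H_example = [
    [2.0, 0.0, 1.0],
    [0.0, 2.0, 0.0],
    [0.0, 0.0, 1.0],
]
east, north = apply_homography(H_example, 1.0, 1.0)
boat_lat, boat_lon = local_to_gps(40.0, -83.0, east, north)
```

In a full pipeline the resulting latitude/longitude sequence would be smoothed by the UKF mentioned in the abstract; that filter is left out here because its state and noise models are not given.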
