Jessica Y. Bo

Steerable Chatbots: Personalizing LLMs with Preference-Based Activation Steering

May 07, 2025

Abstract:As large language models (LLMs) improve in their capacity to serve as personal AI assistants, their ability to output uniquely tailored, personalized responses that align with the soft preferences of their users is essential for enhancing user satisfaction and retention. However, untrained lay users have poor prompt specification abilities and often struggle with conveying their latent preferences to AI assistants. To address this, we leverage activation steering to guide LLMs to align with interpretable preference dimensions during inference. In contrast to memory-based personalization methods that require longer user history, steering is extremely lightweight and can be easily controlled by the user via a linear strength factor. We embed steering into three different interactive chatbot interfaces and conduct a within-subjects user study (n=14) to investigate how end users prefer to personalize their conversations. The results demonstrate the effectiveness of preference-based steering for aligning real-world conversations with hidden user preferences, and highlight further insights on how diverse values around control, usability, and transparency lead users to prefer different interfaces.
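A minimal sketch of what steering with a linear strength factor can look like, using plain NumPy arrays in place of real model activations. The difference-of-means construction of the steering vector is a common choice and an assumption here; the abstract does not say how the vectors are built. The names `steering_vector` and `steer` are hypothetical.

```python
import numpy as np

def steering_vector(pos_acts, neg_acts):
    """Assumed construction: difference of mean activations between
    two contrasting sets of examples (rows are examples)."""
    return pos_acts.mean(axis=0) - neg_acts.mean(axis=0)

def steer(hidden, vector, strength):
    """Shift every hidden state along the steering direction.
    `strength` is the user-controlled linear factor."""
    return hidden + strength * vector

# Toy activations with a hidden size of 2 (illustrative numbers only).
pos = np.array([[1.0, 2.0], [3.0, 4.0]])
neg = np.array([[0.0, 0.0], [2.0, 2.0]])
v = steering_vector(pos, neg)

hidden = np.zeros((3, 2))          # three token positions
steered = steer(hidden, v, 0.5)    # half-strength steering
unchanged = steer(hidden, v, 0.0)  # strength 0 leaves activations as-is
```

Because the shift is linear in `strength`, a slider or similar control can map directly onto it, and a negative value would push toward the opposite end of the preference dimension.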

Incremental XAI: Memorable Understanding of AI with Incremental Explanations

Apr 10, 2024

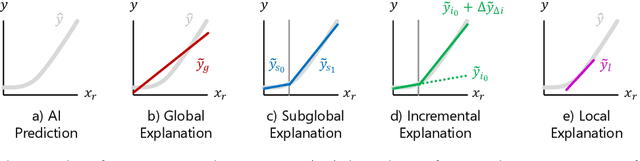

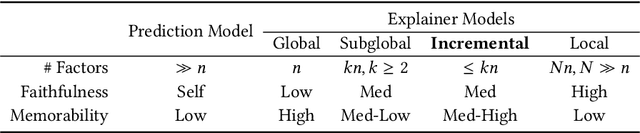

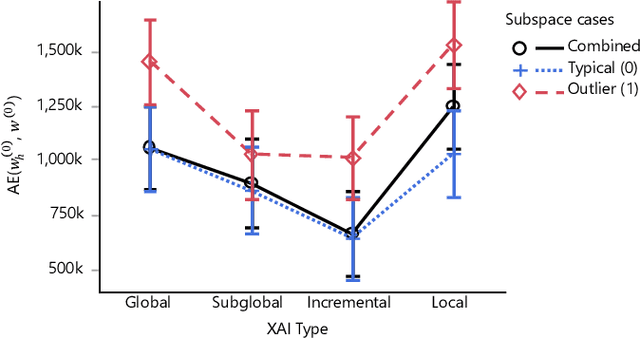

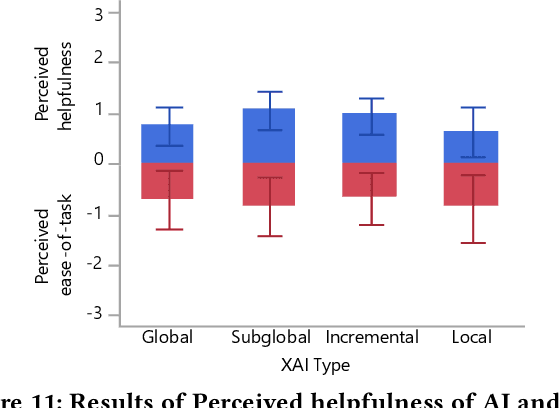

Abstract:Many explainable AI (XAI) techniques strive for interpretability by providing concise salient information, such as sparse linear factors. However, users either only see inaccurate global explanations, or highly-varying local explanations. We propose to provide more detailed explanations by leveraging the human cognitive capacity to accumulate knowledge by incrementally receiving more details. Focusing on linear factor explanations (factors $\times$ values = outcome), we introduce Incremental XAI to automatically partition explanations for general and atypical instances by providing Base + Incremental factors to help users read and remember more faithful explanations. Memorability is improved by reusing base factors and reducing the number of factors shown in atypical cases. In modeling, formative, and summative user studies, we evaluated the faithfulness, memorability and understandability of Incremental XAI against baseline explanation methods. This work contributes towards more usable explanations that users can better ingrain to facilitate intuitive engagement with AI.
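A toy illustration of the "factors $\times$ values = outcome" form with Base + Incremental factors. The numbers and the `explain` helper are hypothetical, and combining the two factor sets by simple addition is an assumption; how the method partitions instances and fits the factors is not shown here.

```python
import numpy as np

def explain(values, base, incremental=None):
    """Linear factor explanation: outcome = sum(factors * values).
    Atypical instances reuse the base factors and add a sparse
    incremental adjustment (assumed to be additive)."""
    factors = base if incremental is None else base + incremental
    return float(np.dot(factors, values))

base = np.array([2.0, 0.5])         # factors shown for general instances
incremental = np.array([0.0, 1.5])  # only one factor changes when atypical
values = np.array([3.0, 4.0])       # feature values of one instance

typical_outcome = explain(values, base)                # 2*3 + 0.5*4
atypical_outcome = explain(values, base, incremental)  # 2*3 + 2.0*4
```

Only the second factor differs between the two cases, which mirrors the idea of reusing base factors so that fewer new numbers need to be remembered.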

Pretraining ECG Data with Adversarial Masking Improves Model Generalizability for Data-Scarce Tasks

Nov 15, 2022

Abstract:Medical datasets often face the problem of data scarcity, as ground truth labels must be generated by medical professionals. One mitigation strategy is to pretrain deep learning models on large, unlabelled datasets with self-supervised learning (SSL). Data augmentations are essential for improving the generalizability of SSL-trained models, but they are typically handcrafted and tuned manually. We use an adversarial model to generate masks as augmentations for 12-lead electrocardiogram (ECG) data, where masks learn to occlude diagnostically-relevant regions of the ECGs. Compared to random augmentations, adversarial masking reaches better accuracy when transferring to two diverse downstream objectives: arrhythmia classification and gender classification. Compared to a state-of-the-art ECG augmentation method 3KG, adversarial masking performs better in data-scarce regimes, demonstrating the generalizability of our model.

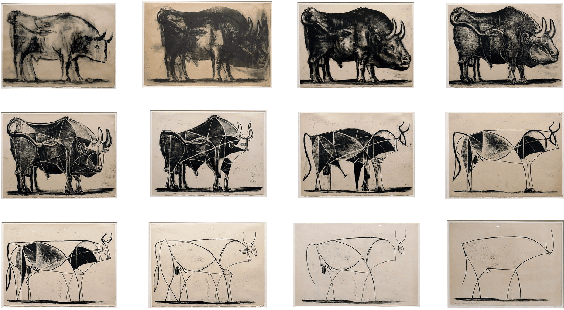

CLIPasso: Semantically-Aware Object Sketching

Feb 11, 2022

Abstract:Abstraction is at the heart of sketching due to the simple and minimal nature of line drawings. Abstraction entails identifying the essential visual properties of an object or scene, which requires semantic understanding and prior knowledge of high-level concepts. Abstract depictions are therefore challenging for artists, and even more so for machines. We present an object sketching method that can achieve different levels of abstraction, guided by geometric and semantic simplifications. While sketch generation methods often rely on explicit sketch datasets for training, we utilize the remarkable ability of CLIP (Contrastive-Language-Image-Pretraining) to distill semantic concepts from sketches and images alike. We define a sketch as a set of Bézier curves and use a differentiable rasterizer to optimize the parameters of the curves directly with respect to a CLIP-based perceptual loss. The abstraction degree is controlled by varying the number of strokes. The generated sketches demonstrate multiple levels of abstraction while maintaining recognizability, underlying structure, and essential visual components of the subject drawn.
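To make "a sketch as a set of Bézier curves" concrete, the following evaluates a single cubic Bézier stroke from its four control points. The cubic degree, the control points, and the `cubic_bezier` helper are assumptions for illustration; the rasterizer and the CLIP-based loss are not reproduced here.

```python
import numpy as np

def cubic_bezier(control_points, t):
    """Evaluate a cubic Bezier curve at parameter t in [0, 1].
    control_points has shape (4, 2); these coordinates are the
    parameters an optimizer would adjust."""
    p0, p1, p2, p3 = control_points
    s = 1.0 - t
    return (s**3) * p0 + 3 * (s**2) * t * p1 + 3 * s * (t**2) * p2 + (t**3) * p3

# One stroke; a sketch would hold several such (4, 2) arrays,
# and fewer strokes would give a more abstract drawing.
stroke = np.array([[0.0, 0.0], [0.0, 1.0], [1.0, 1.0], [1.0, 0.0]])

start = cubic_bezier(stroke, 0.0)
middle = cubic_bezier(stroke, 0.5)
end = cubic_bezier(stroke, 1.0)
```

The curve is a smooth function of its control points, which is what makes it possible to optimize the stroke parameters with gradients once a differentiable rasterizer is placed on top.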
