Jerrin Thomas Panachakel

Ethical Challenges of Using Artificial Intelligence in Judiciary

Apr 27, 2025

Abstract:Artificial intelligence (AI) has emerged as a ubiquitous concept in numerous domains, including the legal system. AI has the potential to revolutionize the functioning of the judiciary and the dispensation of justice. Incorporating AI into the legal system offers the prospect of enhancing decision-making for judges, lawyers, and legal professionals, while concurrently providing the public with more streamlined, efficient, and cost-effective services. The integration of AI into the legal landscape offers manifold benefits, encompassing tasks such as document review, legal research, contract analysis, case prediction, and decision-making. By automating laborious and error-prone procedures, AI has the capacity to alleviate the burden associated with these arduous tasks. Consequently, courts around the world have begun embracing AI technology as a means to enhance the administration of justice. However, alongside its potential advantages, the use of AI in the judiciary poses a range of ethical challenges. These ethical quandaries must be duly addressed to ensure the responsible and equitable deployment of AI systems. This article delineates the principal ethical challenges entailed in employing AI within the judiciary and provides recommendations to effectively address these issues.

Navigating AI Policy Landscapes: Insights into Human Rights Considerations Across IEEE Regions

Apr 27, 2025

Abstract:This paper explores the integration of human rights considerations into AI regulatory frameworks across different IEEE regions - specifically the United States (Region 1-6), Europe (Region 8), China (part of Region 10), and Singapore (part of Region 10). While all acknowledge the transformative potential of AI and the necessity of ethical guidelines, their regulatory approaches significantly differ. Europe exhibits a rigorous framework with stringent protections for individual rights, while the U.S. promotes innovation with less restrictive regulations. China emphasizes state control and societal order in its AI strategies. In contrast, Singapore's advisory framework encourages self-regulation and aligns closely with international norms. This comparative analysis underlines the need for ongoing global dialogue to harmonize AI regulations that safeguard human rights while promoting technological advancement, reflecting the diverse perspectives and priorities of each region.

Subject-independent Classification of Meditative State from the Resting State using EEG

Apr 25, 2025

Abstract:While it is beneficial to objectively determine whether a subject is meditating, most research in the literature reports good results only in a subject-dependent manner. This study aims to distinguish the modified state of consciousness experienced during Rajyoga meditation from the resting state of the brain in a subject-independent manner using EEG data. Three architectures have been proposed and evaluated: The CSP-LDA Architecture utilizes common spatial pattern (CSP) for feature extraction and linear discriminant analysis (LDA) for classification. The CSP-LDA-LSTM Architecture employs CSP for feature extraction, LDA for dimensionality reduction, and long short-term memory (LSTM) networks for classification, modeling the binary classification problem as a sequence learning problem. The SVD-NN Architecture uses singular value decomposition (SVD) to select the most relevant components of the EEG signals and a shallow neural network (NN) for classification. The CSP-LDA-LSTM architecture gives the best performance with 98.2% accuracy for intra-subject classification. The SVD-NN architecture provides significant performance with 96.4% accuracy for inter-subject classification. This is comparable to the best-reported accuracies in the literature for intra-subject classification. Both architectures are capable of capturing subject-invariant EEG features for effectively classifying the meditative state from the resting state. The high intra-subject and inter-subject classification accuracies indicate these systems' robustness and their ability to generalize across different subjects.
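The abstract names CSP as the feature extractor but does not give its formulation. The sketch below shows one common textbook variant (whitening the composite covariance, then an eigendecomposition) followed by log-variance features. It is an illustration under stated assumptions, not the authors' implementation: the function names, trial shapes, and the synthetic data in the usage note are all hypothetical.

```python
import numpy as np


def normalized_mean_cov(trials):
    """Average trace-normalised spatial covariance over trials.

    trials: array of shape (n_trials, n_channels, n_samples).
    """
    covs = []
    for x in trials:
        c = x @ x.T
        covs.append(c / np.trace(c))
    return np.mean(covs, axis=0)


def csp_filters(trials_a, trials_b):
    """Return CSP spatial filters (one per row) and class-A eigenvalues."""
    cov_a = normalized_mean_cov(trials_a)
    cov_b = normalized_mean_cov(trials_b)

    # Whiten the composite covariance so that cov_a + cov_b maps to identity.
    evals, evecs = np.linalg.eigh(cov_a + cov_b)
    whitening = np.diag(evals ** -0.5) @ evecs.T

    # Diagonalise the whitened class-A covariance; the same basis
    # diagonalises class B with eigenvalues 1 - d.
    d, b = np.linalg.eigh(whitening @ cov_a @ whitening.T)
    order = np.argsort(d)[::-1]  # largest class-A variance first
    return b[:, order].T @ whitening, d[order]


def log_variance_features(filters, trial, n_pairs=1):
    """Log of normalised variance for the first and last n_pairs filters."""
    selected = np.vstack([filters[:n_pairs], filters[-n_pairs:]])
    var = (selected @ trial).var(axis=1)
    return np.log(var / var.sum())
```

A usage sketch with synthetic data (hypothetical, for shape checking only): generate two sets of trials with `np.random.default_rng(0).standard_normal((20, 4, 256))`, scale one channel in one set, call `csp_filters`, and pass each trial through `log_variance_features` to obtain the vectors a classifier such as LDA would consume.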

Advancements in Myocardial Infarction Detection and Classification Using Wearable Devices: A Comprehensive Review

Nov 27, 2024

Abstract:Myocardial infarction (MI), commonly known as a heart attack, is a critical health condition caused by restricted blood flow to the heart. Early-stage detection through continuous ECG monitoring is essential to minimize irreversible damage. This review explores advancements in MI classification methodologies for wearable devices, emphasizing their potential in real-time monitoring and early diagnosis. It critically examines traditional approaches, such as morphological filtering and wavelet decomposition, alongside cutting-edge techniques, including Convolutional Neural Networks (CNNs) and VLSI-based methods. By synthesizing findings on machine learning, deep learning, and hardware innovations, this paper highlights their strengths, limitations, and future prospects. The integration of these techniques into wearable devices offers promising avenues for efficient, accurate, and energy-aware MI detection, paving the way for next-generation wearable healthcare solutions.

Emotion Detection from EEG using Transfer Learning

Jun 09, 2023

Abstract:The detection of emotions using an Electroencephalogram (EEG) is a crucial area in brain-computer interfaces and has valuable applications in fields such as rehabilitation and medicine. In this study, we employed transfer learning to overcome the challenge of limited data availability in EEG-based emotion detection. The base model used in this study was ResNet50. Additionally, we employed a novel feature combination in EEG-based emotion detection. The input to the model was in the form of an image matrix, which comprised Mean Phase Coherence (MPC) and Magnitude Squared Coherence (MSC) in the upper-triangular and lower-triangular matrices, respectively. We further improved the technique by incorporating features obtained from the Differential Entropy (DE) into the diagonal, which previously held little to no useful information for classifying emotions. The dataset used in this study, SEED EEG (62-channel EEG), comprises three classes (Positive, Neutral, and Negative). We calculated both subject-independent and subject-dependent accuracy. The subject-dependent accuracy was obtained using a 10-fold cross-validation method and was 93.1%, while the subject-independent classification was performed by employing the leave-one-subject-out (LOSO) strategy. The accuracy obtained in subject-independent classification was 71.6%. Both of these accuracies are at least twice the chance accuracy for classifying 3 classes (about 33.3%). The study found the use of MSC and MPC in EEG-based emotion detection promising for emotion classification. The future scope of this work includes the use of data augmentation techniques, enhanced classifiers, and better features for emotion classification.
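The image-matrix layout described above (MPC in the upper triangle, MSC in the lower triangle, DE on the diagonal) can be sketched as follows. This is a minimal illustration assuming the three feature sets are already computed per channel pair and per channel; the function name is hypothetical, and any scaling or resizing applied before feeding the matrix to ResNet50 is not specified in the abstract and is left out.

```python
import numpy as np


def build_feature_image(mpc, msc, de):
    """Combine pairwise and per-channel features into one square matrix.

    mpc, msc: (n_channels, n_channels) pairwise coherence matrices.
    de:       (n_channels,) per-channel differential entropy values.
    """
    mpc = np.asarray(mpc, dtype=float)
    msc = np.asarray(msc, dtype=float)
    de = np.asarray(de, dtype=float)
    n = de.shape[0]
    if mpc.shape != (n, n) or msc.shape != (n, n):
        raise ValueError("mpc and msc must be (n, n) where n == len(de)")
    # Strict upper triangle from MPC, strict lower triangle from MSC,
    # and the diagonal from DE.
    return np.triu(mpc, k=1) + np.tril(msc, k=-1) + np.diag(de)
```

For the 62-channel SEED recordings mentioned in the abstract, this would yield a 62 x 62 matrix per analysis window.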

A Novel Deep Learning Architecture for Decoding Imagined Speech from EEG

Mar 19, 2020

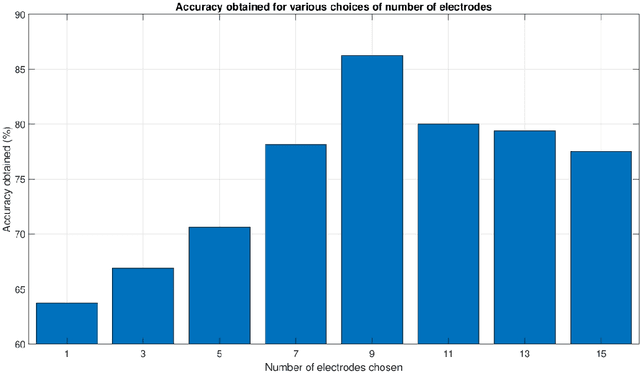

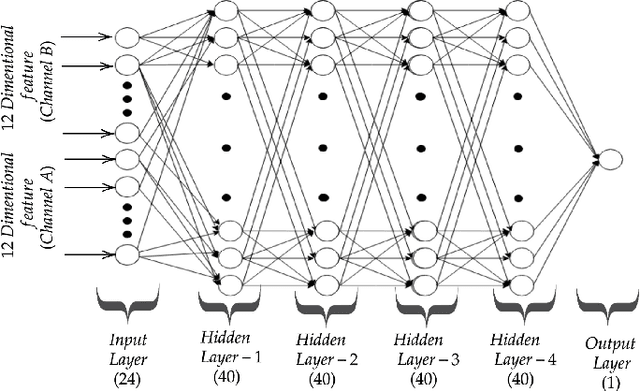

Abstract:The recent advances in the field of deep learning have not been fully utilised for decoding imagined speech primarily because of the unavailability of sufficient training samples to train a deep network. In this paper, we present a novel architecture that employs a deep neural network (DNN) for classifying the words "in" and "cooperate" from the corresponding EEG signals in the ASU imagined speech dataset. Nine EEG channels, which best capture the underlying cortical activity, are chosen using common spatial pattern (CSP) and are treated as independent data vectors. Discrete wavelet transform (DWT) is used for feature extraction. To the best of our knowledge, so far DNN has not been employed as a classifier in decoding imagined speech. Treating the selected EEG channels corresponding to each imagined word as independent data vectors helps in providing a sufficient number of samples to train a DNN. For each test trial, the final class label is obtained by applying majority voting on the classification results of the individual channels considered in the trial. We have achieved accuracies comparable to the state-of-the-art results. The results can be further improved by using a higher-density EEG acquisition system in conjunction with other deep learning techniques such as long short-term memory.

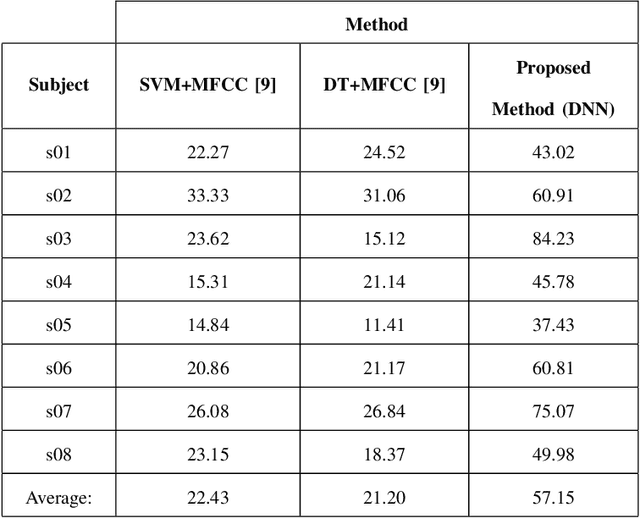

Decoding Imagined Speech using Wavelet Features and Deep Neural Networks

Mar 19, 2020

Abstract:This paper proposes a novel approach that uses deep neural networks for classifying imagined speech, significantly increasing the classification accuracy. The proposed approach employs only the EEG channels over specific areas of the brain for classification, and derives distinct feature vectors from each of those channels. This gives us more data to train a classifier, enabling us to use deep learning approaches. Wavelet and temporal domain features are extracted from each channel. The final class label of each test trial is obtained by applying majority voting on the classification results of the individual channels considered in the trial. This approach is used for classifying all the 11 prompts in the KaraOne dataset of imagined speech. The proposed architecture and the approach of treating the data have resulted in an average classification accuracy of 57.15%, which is an improvement of around 35% over the state-of-the-art results.
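The per-trial majority vote over channel-level predictions can be sketched as below. This is a minimal illustration, not the authors' code: the function name and example labels are hypothetical, and the tie-breaking rule (the tied label that appears first in the channel order wins) is an assumption, since the abstract does not say how ties are resolved.

```python
from collections import Counter


def majority_vote(channel_predictions):
    """Return the most frequent label among per-channel predictions.

    Ties are broken in favour of the tied label seen first in the
    input order (an assumption; the source does not specify this).
    """
    if not channel_predictions:
        raise ValueError("need at least one channel prediction")
    counts = Counter(channel_predictions)
    top = max(counts.values())
    for label in channel_predictions:
        if counts[label] == top:
            return label


# One test trial, one predicted label per selected channel (hypothetical).
trial_predictions = ["prompt_a", "prompt_b", "prompt_a", "prompt_c", "prompt_a"]
trial_label = majority_vote(trial_predictions)
```

Because each channel contributes its own training vector, the classifier sees several samples per trial during training, while the vote restores a single decision per trial at test time.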

Two Tier Prediction of Stroke Using Artificial Neural Networks and Support Vector Machines

Mar 19, 2020

Abstract:Cerebrovascular accident (CVA) or stroke is the rapid loss of brain function due to disturbance in the blood supply to the brain. Statistically, stroke is the second leading cause of death. This has motivated us to suggest a two-tier system for predicting stroke; the first tier makes use of an Artificial Neural Network (ANN) to predict the chances of a person suffering from stroke. The ANN is trained using the values of various risk factors of stroke of several patients who had stroke. Once a person is classified as having a high risk of stroke, s/he undergoes the tier-2 classification test, where his/her neuro MRI (magnetic resonance imaging) is analysed to predict the chances of stroke. Tier-2 uses Non-negative Matrix Factorization and Haralick Textural features for feature extraction and an SVM classifier for classification. We have obtained an accuracy of 96.67% in tier-1 and an accuracy of 70% in tier-2.

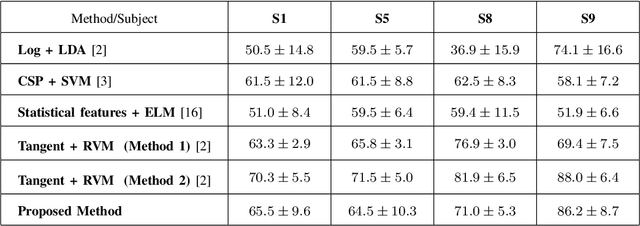

An Improved EEG Acquisition Protocol Facilitates Localized Neural Activation

Mar 13, 2020

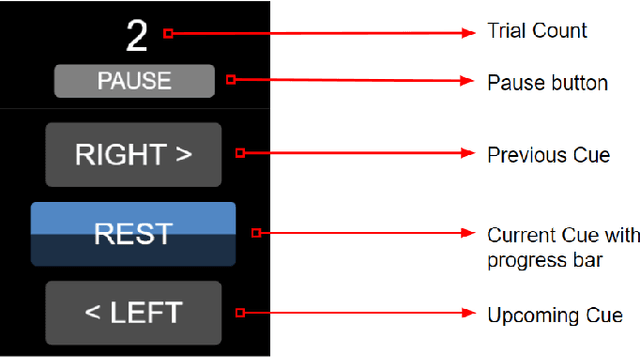

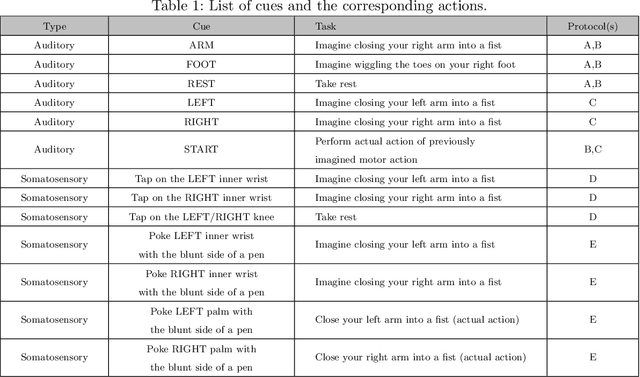

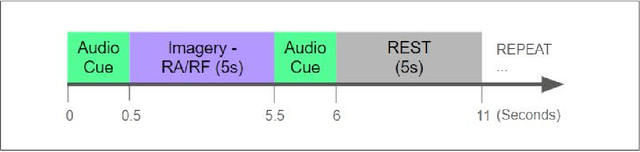

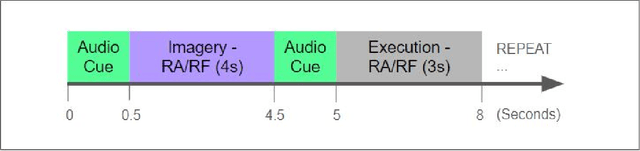

Abstract:This work proposes improvements in the electroencephalogram (EEG) recording protocols for motor imagery through the introduction of actual motor movement and/or somatosensory cues. The results obtained demonstrate the advantage of requiring the subjects to perform motor actions following the trials of imagery. By introducing motor actions in the protocol, the subjects are able to perform actual motor planning, rather than just visualizing the motor movement, thus greatly improving the ease with which the motor movements can be imagined. This study also probes the added advantage of administering somatosensory cues in the subject, as opposed to the conventional auditory/visual cues. These changes in the protocol show promise in terms of the aptness of the spatial filters obtained on the data, on application of the well-known common spatial pattern (CSP) algorithms. The regions highlighted by the spatial filters are more localized and consistent across the subjects when the protocol is augmented with somatosensory stimuli. Hence, we suggest that this may prove to be a better EEG acquisition protocol for detecting brain activation in response to intended motor commands in (clinically) paralyzed/locked-in patients.

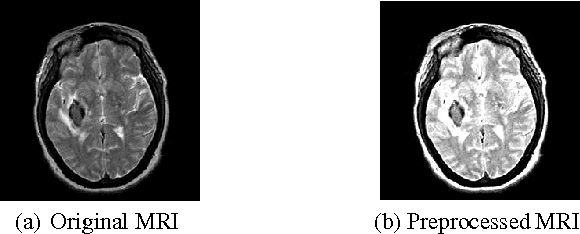

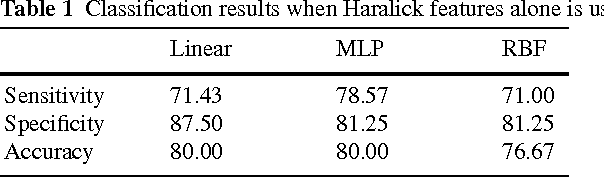

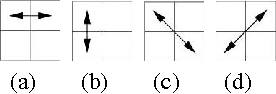

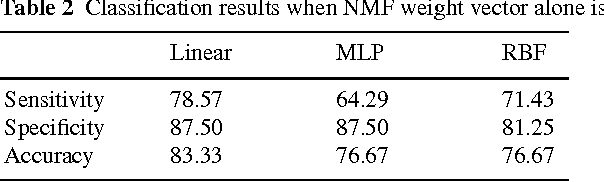

Multi-level SVM Based CAD Tool for Classifying Structural MRIs

Jun 26, 2017

Abstract:The revolutionary developments in the field of supervised machine learning have paved the way for the development of CAD tools for assisting doctors in diagnosis. Recently, the former has been employed in the prediction of neurological disorders such as Alzheimer's disease. We propose a CAD (Computer Aided Diagnosis) tool for differentiating neural lesions caused by CVA (Cerebrovascular Accident) from the lesions caused by other neural disorders by using Non-negative Matrix Factorisation (NMF) and Haralick features for feature extraction and SVM (Support Vector Machine) for pattern recognition. We also introduce a multi-level classification system that has better classification efficiency, sensitivity and specificity when compared to systems using NMF or Haralick features alone as features for classification. Cross-validation was performed using the LOOCV (Leave-One-Out Cross Validation) method and our proposed system has a classification accuracy of over 86%.
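The LOOCV procedure named above holds out one sample at a time, trains on the rest, and averages the per-sample outcomes. The sketch below illustrates only the evaluation loop. The nearest-mean classifier and the one-dimensional toy data are hypothetical stand-ins, not the NMF/Haralick features or the SVM used in the paper.

```python
def leave_one_out_splits(n_samples):
    """Yield (train_indices, test_indices) with one held-out sample each."""
    for held_out in range(n_samples):
        train = [i for i in range(n_samples) if i != held_out]
        yield train, [held_out]


def nearest_mean_predict(train_x, train_y, test_x):
    """Stand-in classifier: pick the class whose training mean is closest."""
    means = {}
    for label in set(train_y):
        values = [x for x, y in zip(train_x, train_y) if y == label]
        means[label] = sum(values) / len(values)
    return [min(means, key=lambda lab: abs(x - means[lab])) for x in test_x]


def loocv_accuracy(samples, labels, fit_predict):
    """Fraction of held-out samples classified correctly."""
    correct = 0
    for train, test in leave_one_out_splits(len(samples)):
        predicted = fit_predict(
            [samples[i] for i in train],
            [labels[i] for i in train],
            [samples[i] for i in test],
        )
        correct += int(predicted[0] == labels[test[0]])
    return correct / len(samples)


# Toy, well-separated data (hypothetical) to exercise the loop.
toy_x = [0.0, 0.1, 0.2, 1.0, 1.1, 1.2]
toy_y = [0, 0, 0, 1, 1, 1]
toy_accuracy = loocv_accuracy(toy_x, toy_y, nearest_mean_predict)
```

LOOCV is a natural choice when labelled scans are scarce, since every sample is used for testing exactly once while the training set stays as large as possible.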
