Jeffrey Fong

MIRROR: Differentiable Deep Social Projection for Assistive Human-Robot Communication

Mar 06, 2022

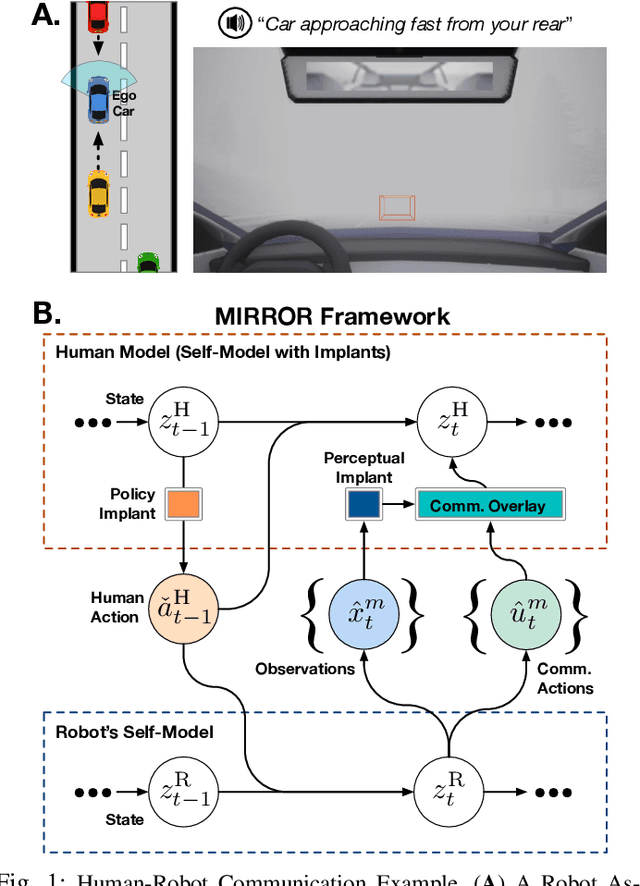

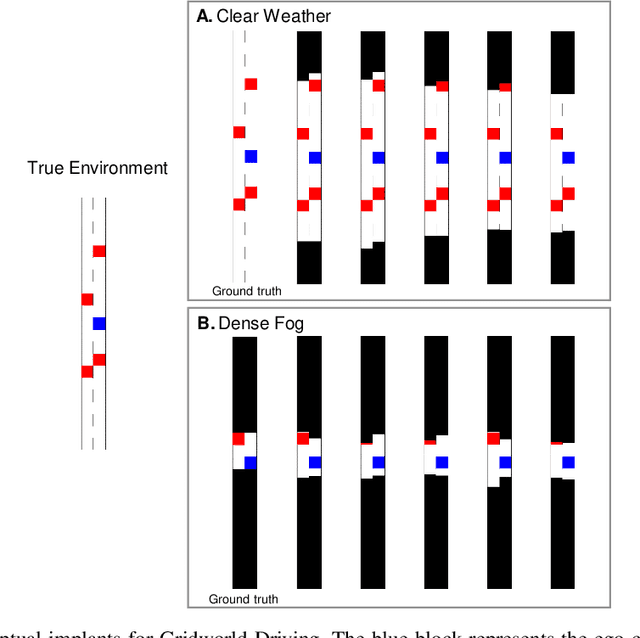

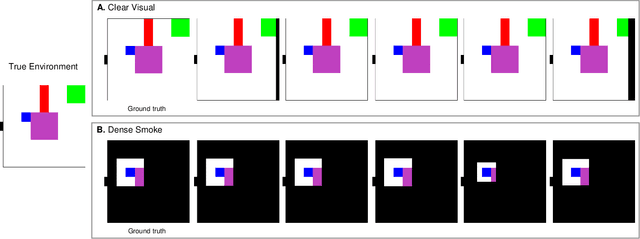

Abstract: Communication is a hallmark of intelligence. In this work, we present MIRROR, an approach to (i) quickly learn human models from human demonstrations, and (ii) use the models for subsequent communication planning in assistive shared-control settings. MIRROR is inspired by social projection theory, which hypothesizes that humans use self-models to understand others. Likewise, MIRROR leverages self-models learned using reinforcement learning to bootstrap human modeling. Experiments with simulated humans show that this approach leads to rapid learning and more robust models compared to existing behavioral cloning and state-of-the-art imitation learning methods. We also present a human-subject study using the CARLA simulator which shows that MIRROR (i) scales to complex domains with high-dimensional observations and complicated world physics and (ii) provides effective assistive communication that enabled participants to drive more safely in adverse weather conditions.

Improving Layer-wise Adaptive Rate Methods using Trust Ratio Clipping

Nov 27, 2020

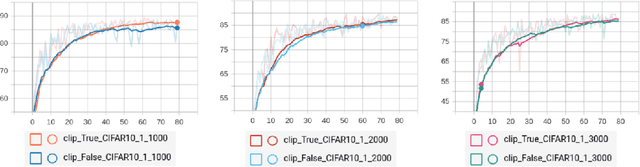

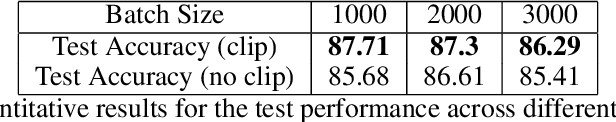

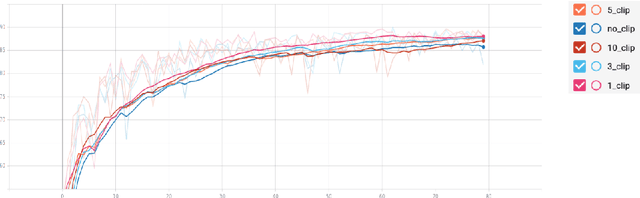

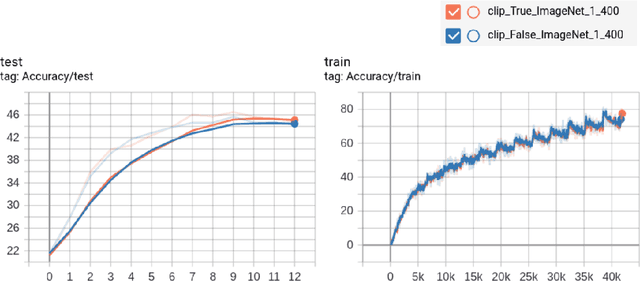

Abstract: Training neural networks with large batches is of fundamental significance to deep learning. Large-batch training remarkably reduces training time but has difficulty maintaining accuracy. Recent works have put forward optimization methods such as LARS and LAMB to tackle this issue through adaptive layer-wise optimization using trust ratios. Though widely used, such methods are observed to still suffer from unstable and extreme trust ratios, which degrade performance. In this paper, we propose a new variant of LAMB, called LAMBC, which employs trust ratio clipping to stabilize the trust ratio's magnitude and prevent extreme values. We conducted experiments on image classification tasks such as ImageNet and CIFAR-10, and our empirical results demonstrate promising improvements across different batch sizes.
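The idea of clipping a layer-wise trust ratio can be illustrated with a minimal sketch. This is not the authors' implementation: it assumes the trust ratio is the ratio of the weight norm to the update norm for one layer, and the function names, the `lower`/`upper` bounds, and the fallback value of 1.0 for zero norms are all hypothetical choices made for illustration.

```python
import math


def l2_norm(values):
    """Euclidean norm of a flat list of floats."""
    return math.sqrt(sum(v * v for v in values))


def clipped_trust_ratio(weights, update, lower=0.0, upper=1.0, eps=1e-12):
    """Layer-wise trust ratio ||w|| / ||u||, clipped to [lower, upper].

    The bounds are illustrative assumptions, not values from the paper.
    """
    w_norm = l2_norm(weights)
    u_norm = l2_norm(update)
    # Assumed fallback: use a neutral ratio when either norm is zero.
    if w_norm == 0.0 or u_norm == 0.0:
        return 1.0
    ratio = w_norm / (u_norm + eps)
    # Clipping keeps the per-layer step scale from taking extreme values.
    return min(max(ratio, lower), upper)


def apply_layer_update(weights, update, lr, lower=0.0, upper=1.0):
    """Scale one layer's update by its clipped trust ratio and apply it."""
    ratio = clipped_trust_ratio(weights, update, lower, upper)
    return [w - lr * ratio * u for w, u in zip(weights, update)]


if __name__ == "__main__":
    layer_weights = [3.0, 4.0]   # norm 5
    layer_update = [0.3, 0.4]    # norm 0.5, so the raw ratio is about 10
    print(clipped_trust_ratio(layer_weights, layer_update))
    print(apply_layer_update(layer_weights, layer_update, lr=0.1))
```

In this sketch, `update` stands for whatever per-layer direction the base optimizer produces; without the upper bound, the layer above would be stepped roughly ten times further than with it.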

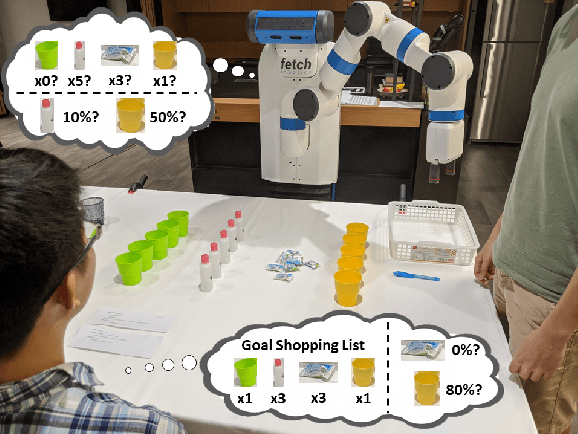

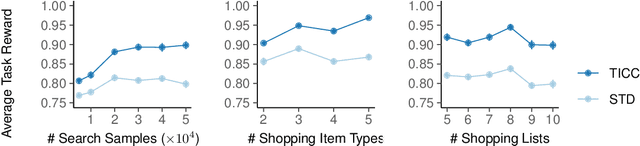

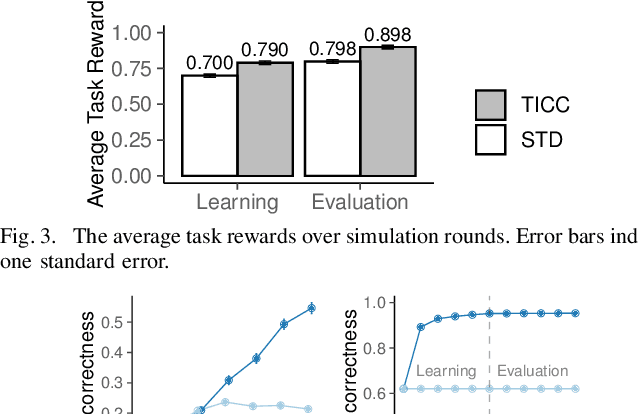

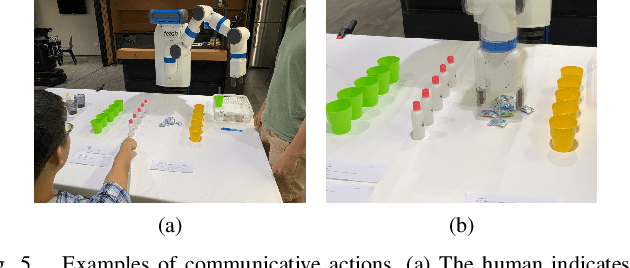

Getting to Know One Another: Calibrating Intent, Capabilities and Trust for Human-Robot Collaboration

Aug 03, 2020

Abstract: Common experience suggests that agents who know each other well are better able to work together. In this work, we address the problem of calibrating intention and capabilities in human-robot collaboration. In particular, we focus on scenarios where the robot is attempting to assist a human who is unable to directly communicate her intent. Moreover, both agents may have differing capabilities that are unknown to one another. We adopt a decision-theoretic approach and propose the TICC-POMDP for modeling this setting, with an associated online solver. Experiments show our approach leads to better team performance both in simulation and in a real-world study with human subjects.
