Jeewook Kim

Two-Stage Grid Optimization for Group-wise Quantization of LLMs

Feb 02, 2026

Abstract: Group-wise quantization is an effective strategy for mitigating accuracy degradation in low-bit quantization of large language models (LLMs). Among existing methods, GPTQ has been widely adopted due to its efficiency; however, it neglects input statistics and inter-group correlations when determining group scales, leading to a mismatch with its goal of minimizing layer-wise reconstruction loss. In this work, we propose a two-stage optimization framework for group scales that explicitly minimizes the layer-wise reconstruction loss. In the first stage, performed prior to GPTQ, we initialize each group scale to minimize the group-wise reconstruction loss, thereby incorporating input statistics. In the second stage, we freeze the integer weights obtained via GPTQ and refine the group scales to minimize the layer-wise reconstruction loss. To this end, we employ the coordinate descent algorithm and derive a closed-form update rule, which enables efficient refinement without costly numerical optimization. Notably, our derivation incorporates the quantization errors from preceding layers to prevent error accumulation. Experimental results demonstrate that our method consistently enhances group-wise quantization, achieving higher accuracy with negligible overhead.
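The second stage described in the abstract (frozen integers, scales refined by coordinate descent with a closed-form update) can be illustrated with a minimal pure-Python sketch. It is not the paper's implementation: it assumes symmetric quantization of a single weight row, uses plain round-to-nearest as a stand-in for GPTQ, and ignores the quantization errors of preceding layers. The update shown is the generic least-squares minimizer of (w - ŵ)ᵀ H (w - ŵ) along one scale, with H = XᵀX; all function names are hypothetical.

```python
import random

def quantize_group(w, bits=4):
    """Symmetric round-to-nearest for one group (stand-in for GPTQ)."""
    qmax = 2 ** (bits - 1) - 1
    max_abs = max(abs(v) for v in w)
    scale = max_abs / qmax if max_abs > 0 else 1.0
    q = [max(-qmax - 1, min(qmax, round(v / scale))) for v in w]
    return q, scale

def dequantize(q_groups, scales):
    """Rebuild the full weight row from integer codes and group scales."""
    return [s * v for q, s in zip(q_groups, scales) for v in q]

def recon_loss(w, w_hat, H):
    """Layer-wise reconstruction loss (w - w_hat)^T H (w - w_hat)."""
    d = [a - b for a, b in zip(w, w_hat)]
    n = len(d)
    return sum(d[i] * H[i][j] * d[j] for i in range(n) for j in range(n))

def refine_scales(w, q_groups, scales, H, sweeps=5):
    """Coordinate descent over group scales with the integers frozen."""
    scales = list(scales)
    n, G = len(w), len(q_groups[0])  # assumes equal-sized groups
    for _ in range(sweeps):
        for g, q in enumerate(q_groups):
            # e holds the integer codes of group g and zeros elsewhere.
            e = [0.0] * n
            for k, v in enumerate(q):
                e[g * G + k] = float(v)
            # t is the target left after removing the other groups' output.
            w_hat = dequantize(q_groups, scales)
            t = [w[i] - (w_hat[i] - scales[g] * e[i]) for i in range(n)]
            He = [sum(H[i][j] * e[j] for j in range(n)) for i in range(n)]
            denom = sum(e[i] * He[i] for i in range(n))
            if denom > 0:
                # Closed-form minimizer along this coordinate: e^T H t / e^T H e.
                scales[g] = sum(t[i] * He[i] for i in range(n)) / denom
    return scales

# Toy data: 32 calibration samples, 8 weights, groups of 4.
rng = random.Random(0)
n, G, samples = 8, 4, 32
X = [[rng.gauss(0, 1) for _ in range(n)] for _ in range(samples)]
H = [[sum(X[s][i] * X[s][j] for s in range(samples)) for j in range(n)]
     for i in range(n)]
w = [rng.gauss(0, 1) for _ in range(n)]

quantized = [quantize_group(w[i:i + G]) for i in range(0, n, G)]
q_groups = [q for q, _ in quantized]
init_scales = [s for _, s in quantized]

refined = refine_scales(w, q_groups, init_scales, H)
loss_before = recon_loss(w, dequantize(q_groups, init_scales), H)
loss_after = recon_loss(w, dequantize(q_groups, refined), H)
```

Because each update is the exact minimizer along one scale, `loss_after` cannot exceed `loss_before` (up to floating-point error). The off-diagonal blocks of `H` are what couple the groups, which is the inter-group correlation the abstract says GPTQ's scale selection ignores.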

All-in-One Deep Learning Framework for MR Image Reconstruction

May 06, 2024

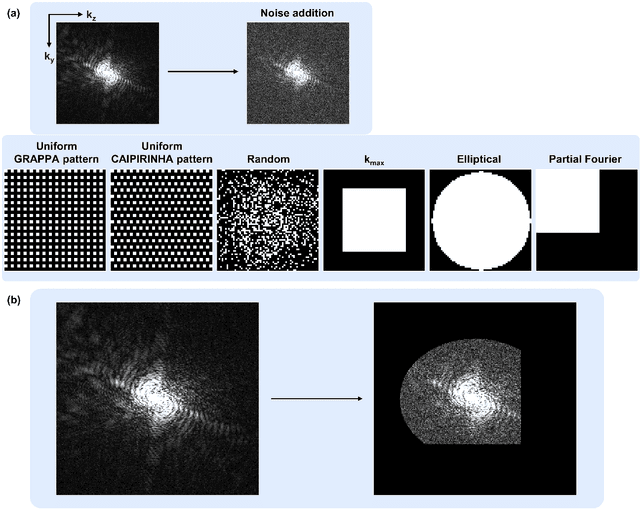

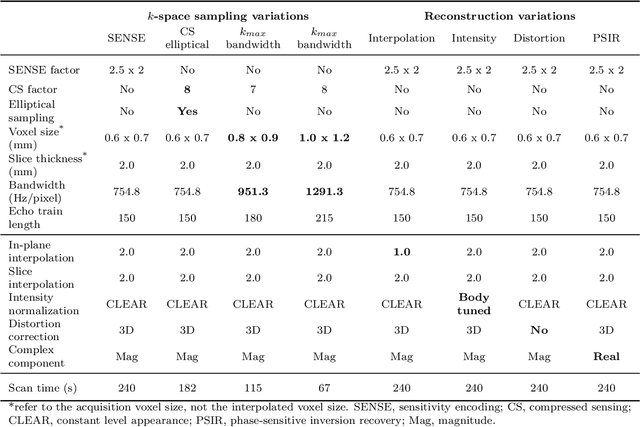

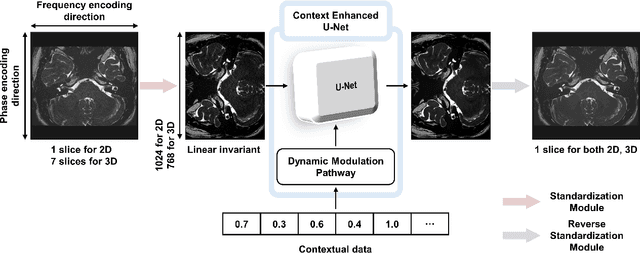

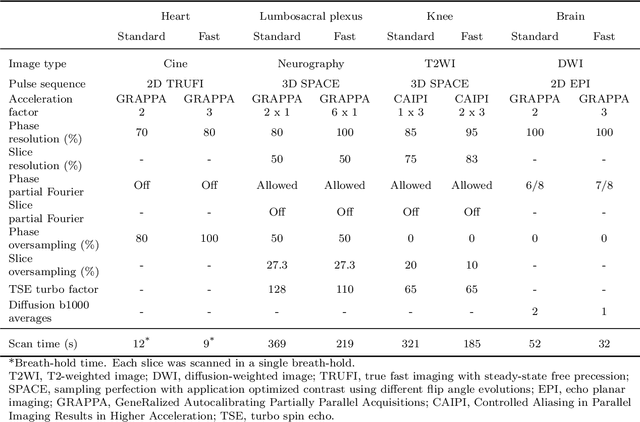

Abstract: We introduce a novel, all-in-one deep learning framework for MR image reconstruction, enabling a single model to enhance image quality across multiple aspects of k-space sampling and to be effective across a wide range of clinical and technical scenarios. This DICOM-based algorithm serves as the core of SwiftMR (AIRS Medical, Seoul, Korea), which is FDA-cleared, CE-certified, and commercially available. We first detail the comprehensive development process of the model, including data collection, training pair preparation, model architecture design, and DICOM inference. We then assess the model's capability to enhance image quality in a multi-dimensional manner, specifically across various aspects of k-space sampling. Subsequently, we evaluate several features of the multi-dimensional enhancement: the accuracy of tunable denoising, the effectiveness of super-resolution in each encoding direction, and the reduction of artifacts that become more prominent at lower spatial resolutions. Additionally, we assess its compatibility with various scan parameter sets and its generalizability across scanner vendors not seen during training. Finally, we present specific cases demonstrating the model's utility in reducing scan time across anatomical regions in conjunction with protocol optimization. The proposed model is compatible with a broad spectrum of scenarios, including various vendors, pulse sequences, scan parameters, and anatomical regions. Its DICOM-based operation makes it particularly practical for real-world use. Given its demonstrated effectiveness and versatility, we expect its use to expand in the field of clinical MRI.

Privacy Safe Representation Learning via Frequency Filtering Encoder

Aug 04, 2022

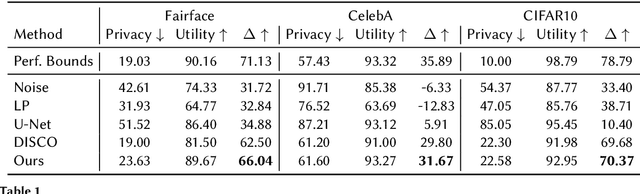

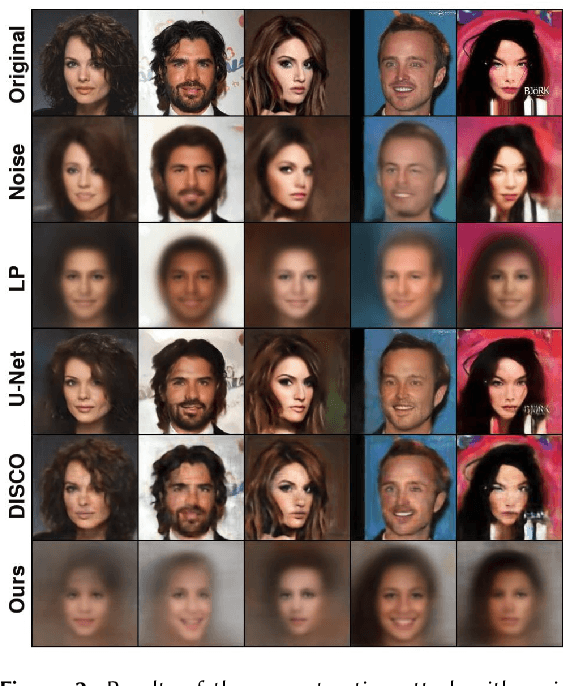

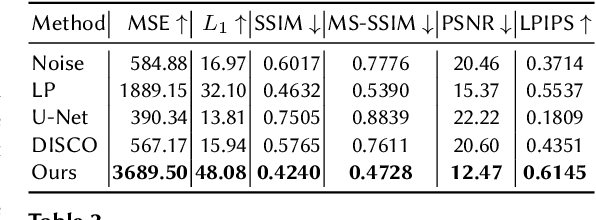

Abstract: Deep learning models are increasingly deployed in real-world applications. These models are often deployed on the server side and receive user data in an information-rich representation to solve a specific task, such as image classification. Since images can contain sensitive information that users might not be willing to share, privacy protection becomes increasingly important. Adversarial Representation Learning (ARL) is a common approach to train an encoder that runs on the client side and obfuscates an image. It is assumed that the obfuscated image can safely be transmitted and used for the task on the server without privacy concerns. However, in this work, we find that a trained reconstruction attacker can successfully recover the original images from the outputs of existing ARL methods. To address this, we introduce a novel ARL method enhanced through low-pass filtering, which limits the amount of information available for encoding in the frequency domain. Our experimental results reveal that our approach withstands reconstruction attacks while outperforming previous state-of-the-art methods regarding the privacy-utility trade-off. We further conduct a user study to qualitatively assess our defense against the reconstruction attack.
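To make the low-pass filtering idea concrete, here is a small pure-Python sketch that removes high spatial frequencies from a toy image using a naive 2-D discrete Fourier transform. The abstract does not say where the filter sits inside the encoder or how the cutoff is chosen, so this shows only the filtering step itself; the function names and the `cutoff` parameter are hypothetical.

```python
import cmath

def dft(x, inverse=False):
    """Naive 1-D discrete Fourier transform (or its inverse)."""
    n = len(x)
    sign = 1 if inverse else -1
    out = [sum(x[k] * cmath.exp(sign * 2j * cmath.pi * f * k / n)
               for k in range(n))
           for f in range(n)]
    return [v / n for v in out] if inverse else out

def dft2(img, inverse=False):
    """Separable 2-D DFT: transform every row, then every column."""
    rows = [dft(r, inverse) for r in img]
    cols = [dft(list(c), inverse) for c in zip(*rows)]
    return [list(r) for r in zip(*cols)]

def low_pass(img, cutoff):
    """Zero every frequency bin farther than `cutoff` from DC on either axis."""
    n_rows, n_cols = len(img), len(img[0])
    spec = dft2(img)
    for u in range(n_rows):
        for v in range(n_cols):
            # Distance from the zero frequency, accounting for wrap-around.
            fu = min(u, n_rows - u)
            fv = min(v, n_cols - v)
            if fu > cutoff or fv > cutoff:
                spec[u][v] = 0
    # The mask is symmetric, so the result is real up to rounding error.
    return [[val.real for val in row] for row in dft2(spec, inverse=True)]

# A checkerboard is pure highest-frequency detail plus a constant offset.
checker = [[(r + c) % 2 for c in range(4)] for r in range(4)]
smoothed = low_pass(checker, cutoff=0)   # keeps only the mean value
restored = low_pass(checker, cutoff=2)   # keeps every frequency
```

With `cutoff=0` only the mean intensity survives, so all fine detail in the checkerboard is gone; with `cutoff=2` nothing is removed and the image is returned unchanged. Lowering the cutoff is one way to cap how much information a representation can carry.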
