Jean-Louis Dessalles

INFRES, LTCI

Exploring Structures of Inferential Mechanisms through Simplistic Digital Circuits

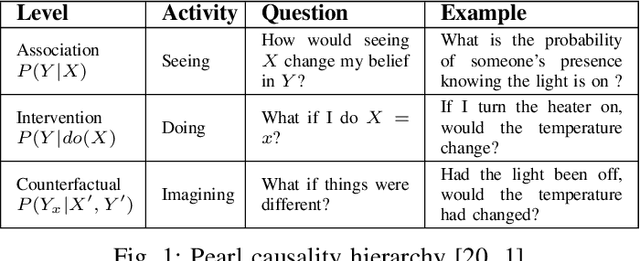

Oct 27, 2025

Abstract:Cognitive studies and artificial intelligence have developed distinct models for various inferential mechanisms (categorization, induction, abduction, causal inference, contrast, merge, ...). Yet both natural and artificial views of cognition apparently lack a unifying framework. This paper formulates a speculative answer to this gap. To postulate on higher-level activation processes from a material perspective, we consider inferential mechanisms informed by symbolic AI modelling techniques through the simplistic lens of electronic circuits based on logic gates. We observe that a logic gate view entails a different treatment of implication and negation compared to standard logic and logic programming. Then, by combinatorial exploration, we identify four main forms of dependencies that can be realized by these inferential circuits. Looking at how these forms are generally used in the context of logic programs, we identify eight common inferential patterns, exposing traditionally distinct inferential mechanisms in a unifying framework. Finally, following a probabilistic interpretation of logic programs, we unveil inner functional dependencies. The paper concludes by elaborating on the sense in which our observations, even if mostly informed by symbolic means and digital systems infrastructures, may point to more generally applicable structures.

Searching for actual causes: Approximate algorithms with adjustable precision

Jul 10, 2025

Abstract:Causality has gained popularity in recent years. It has helped improve the performance, reliability, and interpretability of machine learning models. However, recent literature on explainable artificial intelligence (XAI) has faced criticism. The classical XAI and causality literature focuses on understanding which factors contribute to which consequences. While such knowledge is valuable for researchers and engineers, it is not what non-expert users expect as explanations. Instead, these users often await facts that cause the target consequences, i.e., actual causes. Formalizing this notion is still an open problem. Additionally, identifying actual causes is reportedly an NP-complete problem, and there are too few practical solutions to approximate formal definitions. We propose a set of algorithms to identify actual causes with a polynomial complexity and an adjustable level of precision and exhaustiveness. Our experiments indicate that the algorithms (1) identify causes for different categories of systems that are not handled by existing approaches (i.e., non-boolean, black-box, and stochastic systems), (2) can be adjusted to gain more precision and exhaustiveness with more computation time.
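
To see why exhaustive search for causes gets expensive, the sketch below enumerates subsets of variables in a small boolean system and keeps the minimal sets whose joint flip changes the outcome, a simplified "but-for" criterion. This criterion is an assumption chosen for illustration, not the paper's definition of actual cause nor its polynomial algorithm; the function `minimal_counterfactual_sets` and the toy `alarm` system are hypothetical. The number of candidate subsets grows as 2^n, which is the kind of cost that approximate algorithms aim to avoid.

```python
from itertools import combinations
from typing import Callable, Dict, FrozenSet, List

State = Dict[str, bool]

def minimal_counterfactual_sets(system: Callable[[State], bool],
                                actual: State) -> List[FrozenSet[str]]:
    """Hypothetical but-for search: return the minimal sets of variables whose
    joint negation changes the system's output. Exponential in len(actual)."""
    baseline = system(actual)
    found: List[FrozenSet[str]] = []
    names = sorted(actual)
    for size in range(1, len(names) + 1):
        for subset in combinations(names, size):
            candidate = frozenset(subset)
            # Skip supersets of sets already found, so only minimal sets remain.
            if any(prev <= candidate for prev in found):
                continue
            flipped = {k: (not v if k in candidate else v) for k, v in actual.items()}
            if system(flipped) != baseline:
                found.append(candidate)
    return found

# Hypothetical system: the alarm rings on smoke, or when the door is open while armed.
def alarm(s: State) -> bool:
    return s["smoke"] or (s["door_open"] and s["armed"])

actual = {"smoke": True, "door_open": True, "armed": True}
print(minimal_counterfactual_sets(alarm, actual))
```

No single variable passes this test here, because smoke and the armed open door each suffice on their own; overdetermination of this kind is one reason simple counterfactual tests fall short as a definition of actual cause.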

Three Conjectures on Unexpectedness

Nov 15, 2023

Abstract:Unexpectedness is a central concept in Simplicity Theory, a theory of cognition relating various inferential processes to the computation of Kolmogorov complexities, rather than probabilities. Its predictive power has been confirmed by several experiments with human subjects, yet its theoretical basis remains largely unexplored: why does it work? This paper lays the groundwork for three theoretical conjectures. First, unexpectedness can be seen as a generalization of Bayes' rule. Second, the frequentist core of unexpectedness can be connected to the function of tracking ergodic properties of the world. Third, unexpectedness can be seen as a constituent of various measures of divergence between the entropy of the world (environment) and the variety of the observer (system). The resulting framework hints at research directions that go beyond the division between probabilistic and logical approaches, potentially bringing new insights into the extraction of causal relations and into the role of descriptive mechanisms in learning.

From Probabilistic Programming to Complexity-based Programming

Jul 28, 2023

Abstract:The paper presents the main characteristics and a preliminary implementation of a novel computational framework named CompLog. Inspired by probabilistic programming systems like ProbLog, CompLog builds upon the inferential mechanisms proposed by Simplicity Theory, relying on the computation of two Kolmogorov complexities (here implemented as min-path searches via ASP programs) rather than probabilistic inference. The proposed system enables users to compute ex-post and ex-ante measures of unexpectedness of a certain situation, mapping respectively to posterior and prior subjective probabilities. The computation is based on the specification of world and mental models by means of causal and descriptive relations between predicates weighted by complexity. The paper illustrates a few examples of application: generating relevant descriptions, and providing alternative approaches to disjunction and to negation.
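
The idea of computing complexities as min-path searches can be illustrated outside ASP. The Python sketch below treats causal relations (world model) and descriptive relations (mental model) as weighted edges, with weights in bits, takes the cheapest path to a situation as its complexity, and derives unexpectedness as U = C_w - C_d together with the subjective probability 2^-U. The graphs, node names, and weights are hypothetical, and plain Dijkstra search stands in for CompLog's ASP encoding; this is not CompLog's interface.

```python
import heapq
from typing import Dict, List, Tuple

Graph = Dict[str, List[Tuple[str, float]]]

def min_path_cost(graph: Graph, source: str, target: str) -> float:
    """Dijkstra search: cheapest total weight (in bits) from source to target.
    Complexities are non-negative, which Dijkstra requires."""
    dist = {source: 0.0}
    queue = [(0.0, source)]
    while queue:
        cost, node = heapq.heappop(queue)
        if node == target:
            return cost
        if cost > dist.get(node, float("inf")):
            continue  # stale queue entry
        for nxt, weight in graph.get(node, []):
            new_cost = cost + weight
            if new_cost < dist.get(nxt, float("inf")):
                dist[nxt] = new_cost
                heapq.heappush(queue, (new_cost, nxt))
    return float("inf")

# Hypothetical world model: causal steps needed to generate the situation.
world: Graph = {
    "lottery": [("draw", 1.0)],
    "draw": [("numbers_1_to_6", 22.0)],  # hypothetical cost of one specific draw
}
# Hypothetical mental model: how cheaply an observer can describe the situation.
mind: Graph = {
    "observer": [("consecutive", 3.0)],
    "consecutive": [("numbers_1_to_6", 2.0)],
}

c_w = min_path_cost(world, "lottery", "numbers_1_to_6")  # generation complexity
c_d = min_path_cost(mind, "observer", "numbers_1_to_6")  # description complexity
u = c_w - c_d                                           # unexpectedness, in bits
print(f"C_w={c_w}, C_d={c_d}, U={u}, p={2 ** -u:.3g}")
```

With these made-up weights the situation is much simpler to describe than to generate, so U is large and the derived probability small, which is the pattern Simplicity Theory associates with unexpected events.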

To do or not to do: finding causal relations in smart homes

May 20, 2021

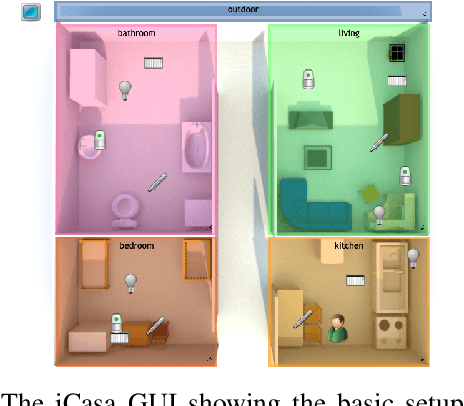

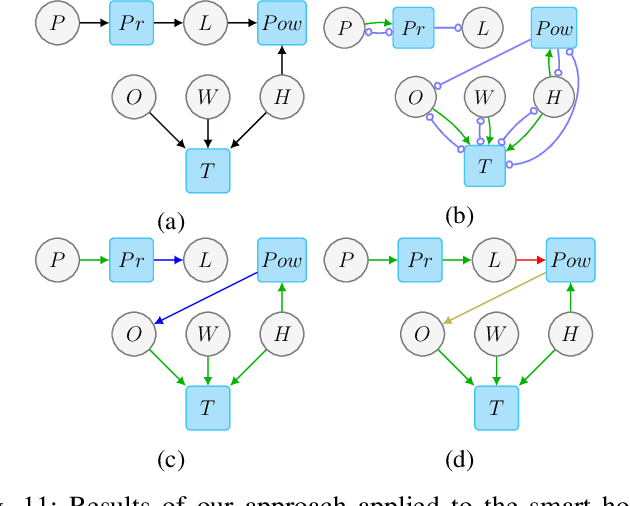

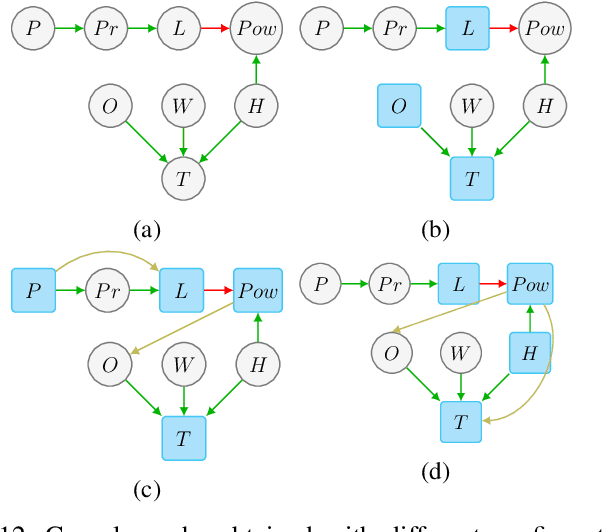

Abstract:Research in Cognitive Science suggests that humans understand and represent knowledge of the world through causal relationships. In addition to observations, they can rely on experimenting and counterfactual reasoning (i.e., referring to an alternative course of events) to identify causal relations and explain atypical situations. Different instances of control systems, such as smart homes, would benefit from having a similar causal model, as it would help the user understand the logic of the system and react better when needed. However, while data-driven methods achieve high levels of correlation detection, they mainly fall short of finding causal relations, notably because they are limited to observations only. In particular, they struggle to distinguish the cause from the effect when detecting a correlation between two variables. This paper introduces a new way to learn causal models from a mixture of experiments on the environment and observational data. The core of our method is the use of selected interventions; in particular, unlike other approaches, our learning takes into account the variables on which it is impossible to intervene. The causal model we obtain is then used to generate Causal Bayesian Networks, which can later be used to perform diagnostic and predictive inference. We apply our method to a smart home simulation, a use case where knowing causal relations paves the way towards explainable systems. Our algorithm succeeds in generating a Causal Bayesian Network close to the simulation's ground-truth causal interactions, showing encouraging prospects for application in real-life systems.
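
The point that observations alone cannot orient a correlation, while interventions can, is easy to simulate. In the hypothetical smart-home model below, a motion sensor causes a light to switch on; forcing each variable in turn (an intervention in the do-operator sense) changes the other variable only in the true causal direction. The model, its probabilities, and the helper names `simulate` and `rate` are assumptions for illustration, not the paper's learning algorithm.

```python
import random

def simulate(n, do_motion=None, do_light=None, seed=0):
    """Hypothetical smart home in which motion causes the light (10% noise).

    Passing do_motion or do_light forces that variable to a fixed value,
    cutting it off from its usual cause, as an intervention would.
    """
    rng = random.Random(seed)
    samples = []
    for _ in range(n):
        motion = (rng.random() < 0.3) if do_motion is None else do_motion
        if do_light is None:
            light = motion if rng.random() < 0.9 else not motion
        else:
            light = do_light
        samples.append((motion, light))
    return samples

def rate(samples, index):
    """Fraction of samples in which variable `index` (0=motion, 1=light) is on."""
    return sum(s[index] for s in samples) / len(samples)

n = 10_000
# Forcing motion on vs. off shifts the light rate by roughly 0.9 - 0.1 = 0.8 ...
light_gap = rate(simulate(n, do_motion=True), 1) - rate(simulate(n, do_motion=False), 1)
# ... whereas forcing the light on vs. off leaves the motion rate unchanged
# (with a shared seed the motion draws are identical, so the gap is exactly 0).
motion_gap = rate(simulate(n, do_light=True), 0) - rate(simulate(n, do_light=False), 0)
print(f"effect of do(motion) on light: {light_gap:.2f}")
print(f"effect of do(light) on motion: {motion_gap:.2f}")
```

Observationally, motion and light are strongly correlated whichever way one reads the dependency; only the asymmetry under intervention identifies motion as the cause.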

REMI: Mining Intuitive Referring Expressions on Knowledge Bases

Nov 04, 2019

Abstract:A referring expression (RE) is a description that identifies a set of instances unambiguously. Mining REs from data finds applications in natural language generation, algorithmic journalism, and data maintenance. Since there may exist multiple REs for a given set of entities, it is common to focus on the most intuitive ones, i.e., the most concise and informative. In this paper we present REMI, a system that can mine intuitive REs on large RDF knowledge bases. Our experimental evaluation shows that REMI finds REs deemed intuitive by users. Moreover, we show that REMI is several orders of magnitude faster than an approach based on inductive logic programming.
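
To make the notion of a referring expression concrete, the sketch below searches a toy set of triples for the shortest conjunction of (predicate, object) conditions whose matching subjects are exactly the target entities. The knowledge base, the entity names, and the use of condition count as the conciseness measure are hypothetical simplifications; REMI's own intuitiveness measure and search strategy are not reproduced, and this brute-force search would not scale to large RDF knowledge bases.

```python
from itertools import combinations

# Hypothetical toy knowledge base of (subject, predicate, object) triples.
triples = {
    ("Paris", "capitalOf", "France"),
    ("Paris", "type", "City"),
    ("Paris", "locatedIn", "France"),
    ("Lyon", "type", "City"),
    ("Lyon", "locatedIn", "France"),
    ("Berlin", "type", "City"),
    ("Berlin", "capitalOf", "Germany"),
}

def matches(condition):
    """Subjects s such that (s, predicate, object) is in the knowledge base."""
    predicate, obj = condition
    return {s for s, p, o in triples if p == predicate and o == obj}

def shortest_referring_expression(targets, max_len=3):
    """Brute force: return the first (shortest) conjunction of conditions whose
    extension equals the target set exactly, or None if there is none."""
    conditions = sorted({(p, o) for _, p, o in triples})
    for size in range(1, max_len + 1):
        for combo in combinations(conditions, size):
            extension = set.intersection(*(matches(c) for c in combo))
            if extension == set(targets):
                return combo
    return None

print(shortest_referring_expression({"Paris"}))
print(shortest_referring_expression({"Paris", "Lyon"}))
```

Some target sets, such as {Berlin, Lyon}, have no referring expression in this small vocabulary, in which case the function returns None.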

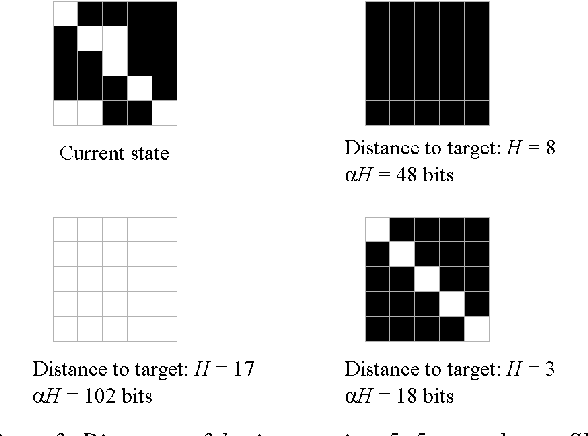

Role of Simplicity in Creative Behaviour: The Case of the Poietic Generator

Dec 22, 2016

Abstract:We propose to apply Simplicity Theory (ST) to model interest in creative situations. ST has been designed to describe and predict interest in communication. Here we use ST to derive a decision rule that we apply to a simplified version of a creative game, the Poietic Generator. The decision rule produces what can be regarded as an elementary form of creativity. This study is meant as a proof of principle. It suggests that some creative actions may be motivated by the search for unexpected simplicity.

* This study was supported by grants from the programme Futur&Ruptures and from the 'Chaire Modelisation des Imaginaires, Innovation et Creation', http://www.computationalcreativity.net/iccc2016/posters-and-demos/

Algorithmic Simplicity and Relevance

Aug 09, 2012

Abstract:The human mind is known to be sensitive to complexity. For instance, the visual system reconstructs hidden parts of objects following a principle of maximum simplicity. We suggest here that higher cognitive processes, such as the selection of relevant situations, are sensitive to variations of complexity. Situations are relevant to human beings when they appear simpler to describe than to generate. This definition offers a predictive (i.e. falsifiable) model for the selection of situations worth reporting (interestingness) and for what individuals consider an appropriate move in conversation.
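
In Simplicity Theory, this criterion is commonly written as a difference between two complexities: the generation complexity C_w(s) of a situation s (how complex it is for the world to produce it) and its description complexity C(s). The notation below is assumed for illustration rather than quoted from the paper:

```latex
U(s) = C_w(s) - C(s), \qquad s \text{ is relevant when } U(s) > 0
```

For example, a situation that would take 30 bits to generate but only 10 bits to describe has U = 20 bits and counts as relevant.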

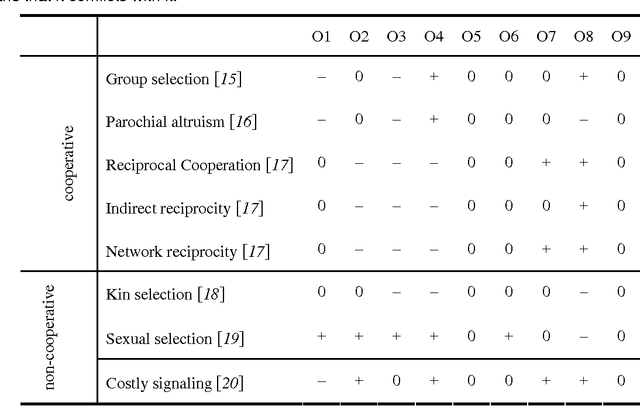

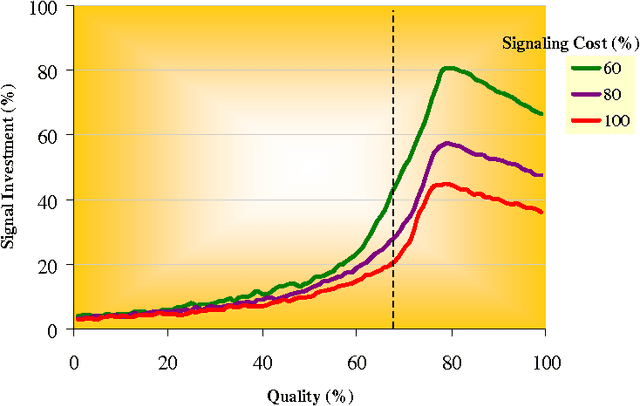

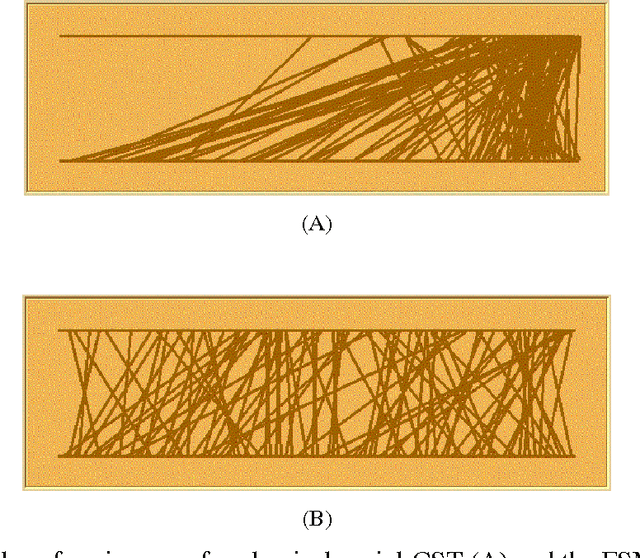

Providing information can be a stable non-cooperative evolutionary strategy

Aug 27, 2011

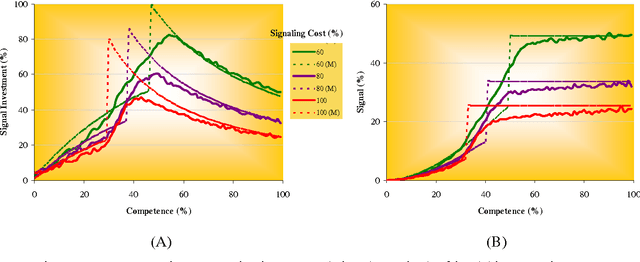

Abstract:Human language is still an embarrassment for evolutionary theory, as the speaker's benefit remains unclear. The willingness to communicate information is shown here to be an evolutionary stable strategy (ESS), even if acquiring original information from the environment involves significant cost and communicating it provides no material benefit to addressees. In this study, communication is used to advertise the emitter's ability to obtain novel information. We found that communication strategies can take two forms, competitive and uniform, that these two strategies are stable and that they necessarily coexist.
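
The stability claim rests on the standard ESS conditions for a symmetric game: a resident strategy S is an ESS if, against residents, it earns strictly more than any mutant M, or earns the same and also does strictly better against M than M does against itself. The sketch below checks these conditions on a 2×2 payoff table; the strategy names and payoff values are hypothetical and do not reproduce the paper's model, in which the benefit comes from advertising the ability to obtain novel information.

```python
from typing import Dict, Iterable, Tuple

Payoffs = Dict[Tuple[str, str], float]  # (focal strategy, opponent) -> payoff to focal

def is_ess(payoff: Payoffs, s: str, strategies: Iterable[str]) -> bool:
    """Maynard Smith conditions for a symmetric two-player game."""
    for m in strategies:
        if m == s:
            continue
        if payoff[(s, s)] > payoff[(m, s)]:
            continue  # mutant does strictly worse against residents
        if payoff[(s, s)] == payoff[(m, s)] and payoff[(s, m)] > payoff[(m, m)]:
            continue  # neutral against residents, but loses among mutants
        return False
    return True

# Hypothetical payoffs, chosen only for illustration.
strategies = ["talk", "silent"]
payoff: Payoffs = {
    ("talk", "talk"): 2.0,
    ("talk", "silent"): 1.0,
    ("silent", "talk"): 1.5,
    ("silent", "silent"): 1.0,
}
result = {s: is_ess(payoff, s, strategies) for s in strategies}
print(result)
```

With these numbers "talk" resists invasion by "silent", while "silent" ties against its own kind and then loses among the invading talkers, so it is not an ESS.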

Why is language well-designed for communication? (Commentary on Christiansen and Chater: 'Language as shaped by the brain')

Aug 22, 2011

Abstract:Selection through iterated learning explains no more than other non-functional accounts, such as universal grammar, why language is so well-designed for communicative efficiency. It does not predict several distinctive features of language like central embedding, large lexicons or the lack of iconicity, that seem to serve communication purposes at the expense of learnability.

* jld-08041101
