Ada Diaconescu

Searching for actual causes: Approximate algorithms with adjustable precision

Jul 10, 2025

Abstract: Causality has gained popularity in recent years. It has helped improve the performance, reliability, and interpretability of machine learning models. However, recent literature on explainable artificial intelligence (XAI) has faced criticism. The classical XAI and causality literature focuses on understanding which factors contribute to which consequences. While such knowledge is valuable for researchers and engineers, it is not what non-expert users expect as explanations. Instead, these users often await facts that cause the target consequences, i.e., actual causes. Formalizing this notion is still an open problem. Additionally, identifying actual causes is reportedly an NP-complete problem, and there are too few practical solutions to approximate formal definitions. We propose a set of algorithms to identify actual causes with a polynomial complexity and an adjustable level of precision and exhaustiveness. Our experiments indicate that the algorithms (1) identify causes for different categories of systems that are not handled by existing approaches (i.e., non-boolean, black-box, and stochastic systems), (2) can be adjusted to gain more precision and exhaustiveness with more computation time.

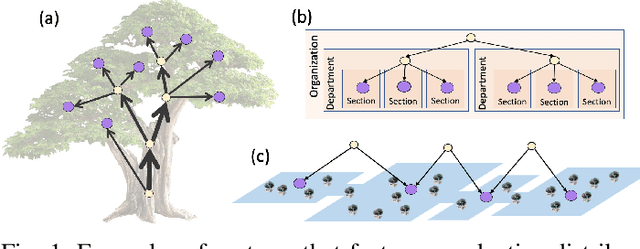

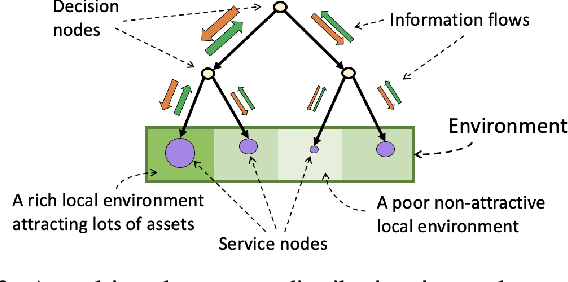

Multi-Scale Asset Distribution Model for Dynamic Environments

Jul 25, 2022

Abstract: In many self-organising systems the ability to extract necessary resources from the external environment is essential to the system's growth and survival. Examples include the extraction of sunlight and nutrients in organic plants, of monetary income in business organisations and of mobile robots in swarm intelligence actions. When operating within competitive, ever-changing environments, such systems must distribute their internal assets wisely so as to improve and adapt their ability to extract available resources. As the system size increases, the asset-distribution process often gets organised around a multi-scale control topology. This topology may be static (fixed) or dynamic (enabling growth and structural adaptation) depending on the system's internal constraints and adaptive mechanisms. In this paper, we expand on a plant-inspired asset-distribution model and introduce a more general multi-scale model applicable across a wider range of natural and artificial system domains. We study the impact that the topology of the multi-scale control process has upon the system's ability to self-adapt asset distribution when resource availability changes within the environment. Results show how different topological characteristics and different competition levels between system branches impact overall system profitability, adaptation delays and disturbances when environmental changes occur. These findings provide a basis for system designers to select the most suitable topology and configuration for their particular application and execution environment.

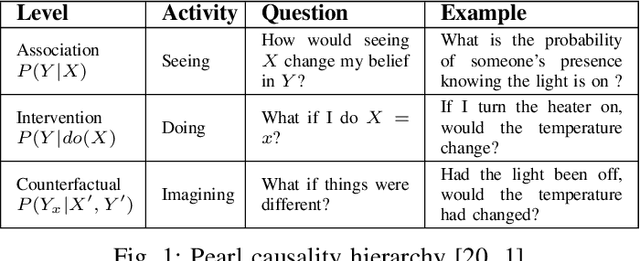

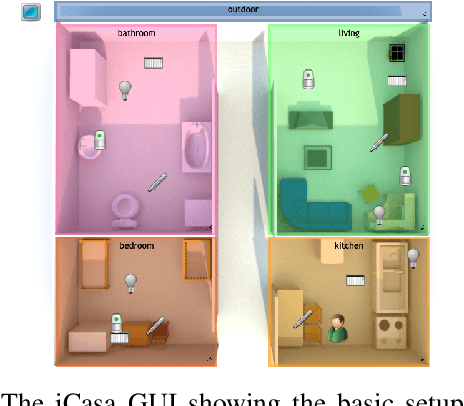

To do or not to do: finding causal relations in smart homes

May 20, 2021

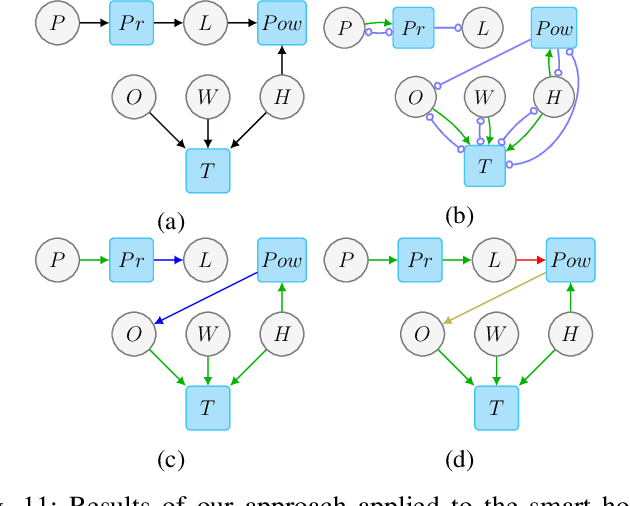

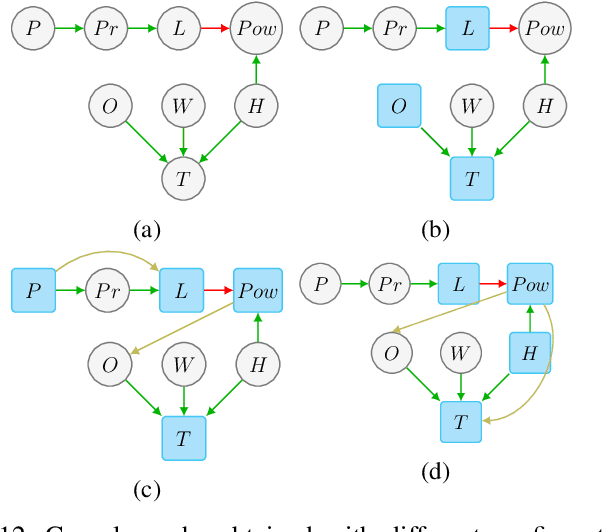

Abstract: Research in Cognitive Science suggests that humans understand and represent knowledge of the world through causal relationships. In addition to observations, they can rely on experimenting and counterfactual reasoning -- i.e. referring to an alternative course of events -- to identify causal relations and explain atypical situations. Different instances of control systems, such as smart homes, would benefit from having a similar causal model, as it would help the user understand the logic of the system and better react when needed. However, while data-driven methods achieve high levels of correlation detection, they mostly fall short of finding causal relations, being limited to observations only. In particular, they struggle to distinguish the cause from the effect when detecting a correlation between two variables. This paper introduces a new way to learn causal models from a mixture of experiments on the environment and observational data. The core of our method is the use of selected interventions; in particular, unlike other approaches, our learning takes into account the variables on which it is impossible to intervene. The causal model we obtain is then used to generate Causal Bayesian Networks, which can later be used to perform diagnostic and predictive inference. We use our method on a smart home simulation, a use case where knowing causal relations paves the way towards explainable systems. Our algorithm succeeds in generating a Causal Bayesian Network close to the simulation's ground truth causal interactions, showing encouraging prospects for application in real-life systems.

Holarchic Structures for Decentralized Deep Learning - A Performance Analysis

Sep 17, 2018

Abstract: Structure plays a key role in learning performance. In centralized computational systems, hyperparameter optimization and regularization techniques such as dropout are computational means to enhance learning performance by adjusting the deep hierarchical structure. However, in decentralized deep learning by the Internet of Things, the structure is an actual network of autonomous interconnected devices such as smart phones that interact via complex network protocols. Self-adaptation of the learning structure is a challenge. Uncertainties such as network latency, node and link failures or even bottlenecks by limited processing capacity and energy availability can significantly downgrade learning performance. Network self-organization and self-management is complex, while it requires additional computational and network resources that hinder the feasibility of decentralized deep learning. In contrast, this paper introduces a self-adaptive learning approach based on holarchic learning structures for exploring, mitigating and boosting learning performance in distributed environments with uncertainties. A large-scale performance analysis with 864000 experiments fed with synthetic and real-world data from smart grid and smart city pilot projects confirms the cost-effectiveness of holarchic structures for decentralized deep learning.
