Jayabrata Chowdhury

Deep Attention Driven Reinforcement Learning (DAD-RL) for Autonomous Vehicle Decision-Making in Dynamic Environment

Jul 12, 2024

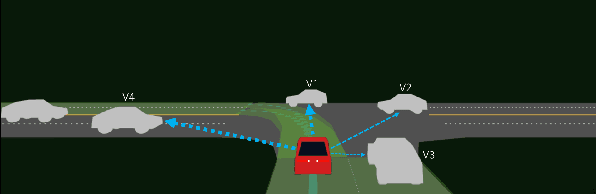

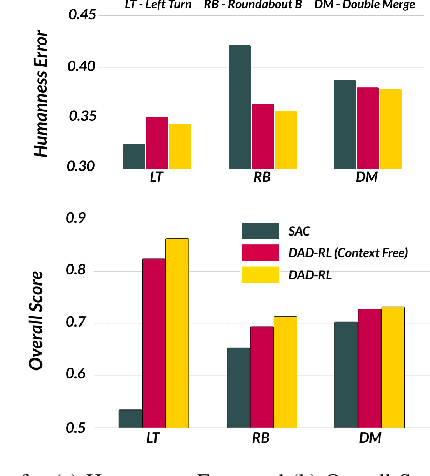

Abstract: Autonomous Vehicle (AV) decision making in urban environments is inherently challenging due to the dynamic interactions with surrounding vehicles. For safe planning, an AV must understand the relative importance of the various spatiotemporal interactions in a scene. Contemporary works use very large transformer architectures to encode interactions, mainly for trajectory prediction, which increases computational complexity. To address this issue without compromising spatiotemporal understanding and performance, we propose the simple Deep Attention Driven Reinforcement Learning (DAD-RL) framework, which dynamically assigns and incorporates the significance of surrounding vehicles into the ego vehicle's RL-driven decision-making process. We introduce an AV-centric spatiotemporal attention encoding (STAE) mechanism for learning the dynamic interactions with different surrounding vehicles. To understand map and route context, we employ a context encoder to extract features from context maps. The spatiotemporal representations combined with the contextual encoding provide a comprehensive state representation. The resulting model is trained using the Soft Actor-Critic (SAC) algorithm. We evaluate the proposed framework on the SMARTS urban benchmarking scenarios without traffic signals to demonstrate that DAD-RL outperforms recent state-of-the-art methods. Furthermore, an ablation study underscores the importance of the context encoder and the spatiotemporal attention encoder in achieving superior performance.
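As a rough illustration of how the significance of surrounding vehicles can be assigned dynamically, the sketch below applies plain scaled dot-product attention with the ego vehicle's feature vector as the query. This is not the STAE mechanism from the paper, whose details are not given in the abstract; the function names `attention_weights` and `attend`, the feature vectors, and the absence of learned projections are all assumptions made for illustration.

```python
import math


def attention_weights(ego_feature, neighbor_features):
    """Softmax over scaled dot products between the ego vector and each neighbor.

    Hypothetical helper: assumes at least one neighbor and equal-length vectors.
    """
    d = len(ego_feature)
    scores = [
        sum(e * n for e, n in zip(ego_feature, nb)) / math.sqrt(d)
        for nb in neighbor_features
    ]
    # Subtract the maximum score before exponentiating for numerical stability.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [x / total for x in exps]


def attend(ego_feature, neighbor_features):
    """Return the attention-weighted sum of the neighbor feature vectors."""
    weights = attention_weights(ego_feature, neighbor_features)
    d = len(ego_feature)
    return [
        sum(w * nb[i] for w, nb in zip(weights, neighbor_features))
        for i in range(d)
    ]


# Illustrative, made-up features: one ego vector and three surrounding vehicles.
weights = attention_weights([1.0, 0.0], [[2.0, 0.0], [0.0, 2.0], [-1.0, 0.0]])
```

In this toy setup, the neighbor whose features align most closely with the ego vector receives the largest weight, and the weights sum to one. A learned encoder would typically project the query, keys, and values before this step.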

Graph-based Prediction and Planning Policy Network (GP3Net) for scalable self-driving in dynamic environments using Deep Reinforcement Learning

Dec 10, 2023

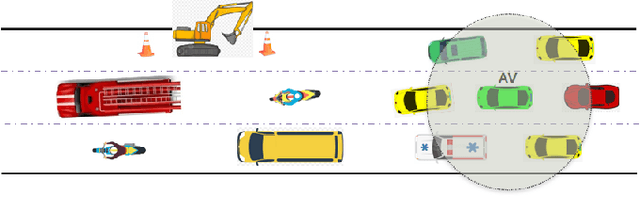

Abstract: Recent advancements in motion planning for Autonomous Vehicles (AVs) show great promise in using expert driver behaviors in non-stationary driving environments. However, learning only from expert drivers does not generalize well enough to recover from domain shifts and near-failure scenarios caused by the dynamic behavior of traffic participants and by weather conditions. A deep Graph-based Prediction and Planning Policy Network (GP3Net) framework is proposed for non-stationary environments; it encodes the interactions between traffic participants together with contextual information and provides a safe maneuver decision for the AV. A spatio-temporal graph models the interactions between traffic participants in order to predict their future trajectories. The predicted trajectories are used to generate a future occupancy map around the AV, with uncertainties embedded to anticipate the evolving non-stationary driving environment. The contextual information and future occupancy maps are then input to the policy network of the GP3Net framework, which is trained using the Proximal Policy Optimization (PPO) algorithm. The performance of the proposed GP3Net is evaluated on standard CARLA benchmarking scenarios with domain shifts in traffic patterns (urban, highway, and mixed). The results show that GP3Net outperforms previous state-of-the-art imitation learning-based planning models across different towns. Further, in unseen weather conditions, GP3Net completes the desired route with fewer traffic infractions. Finally, the results emphasize the advantage of including the prediction module to enhance safety in non-stationary environments.
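One simple way to picture a future occupancy map with embedded uncertainty is to spread each predicted position over nearby grid cells with an isotropic Gaussian. The sketch below does only that; it is not the GP3Net construction, which the abstract does not specify. The function name `occupancy_map`, the grid size, the cell size, and the single shared `sigma` are illustrative assumptions.

```python
import math


def occupancy_map(predicted_xy, sigma, size=5, cell=1.0):
    """Build a size x size grid centered on the AV at (0, 0).

    Each cell holds the largest unnormalized Gaussian score over all predicted
    positions, so values lie in (0, 1] and equal 1 exactly at a predicted point.
    Hypothetical layout: row index follows y, column index follows x.
    """
    half = size // 2
    grid = [[0.0] * size for _ in range(size)]
    for r in range(size):
        for c in range(size):
            # Coordinates of this cell's center relative to the AV.
            cx = (c - half) * cell
            cy = (r - half) * cell
            for px, py in predicted_xy:
                d2 = (cx - px) ** 2 + (cy - py) ** 2
                score = math.exp(-d2 / (2.0 * sigma ** 2))
                grid[r][c] = max(grid[r][c], score)
    return grid


# Illustrative input: one vehicle predicted to be 1 m to the side of the AV.
grid = occupancy_map([(1.0, 0.0)], sigma=0.5)
```

A larger `sigma` spreads the same prediction over more cells, which is one way to express lower confidence in a predicted trajectory point.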

Predictive Maneuver Planning with Deep Reinforcement Learning (PMP-DRL) for comfortable and safe autonomous driving

Jun 15, 2023

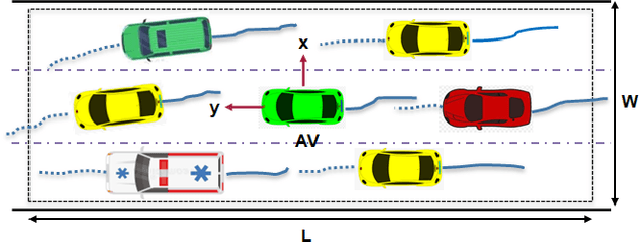

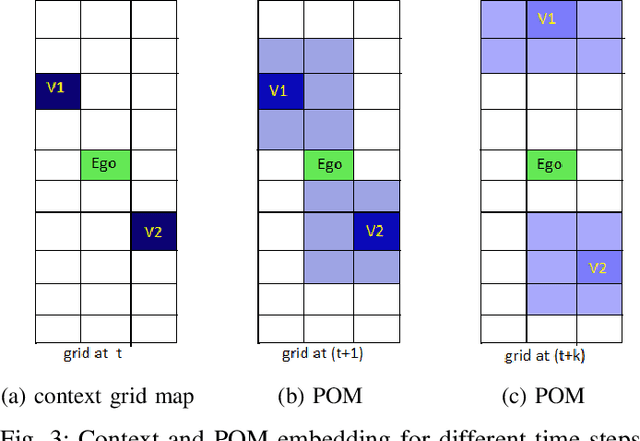

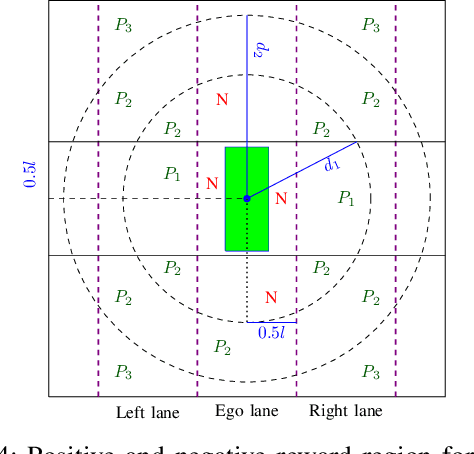

Abstract: This paper presents a Predictive Maneuver Planning with Deep Reinforcement Learning (PMP-DRL) model for maneuver planning. Traditional rule-based maneuver planning approaches often struggle to handle the variability of real-world driving scenarios. By learning from its experience, a Reinforcement Learning (RL)-based driving agent can adapt to changing driving conditions and improve its performance over time. Our proposed approach combines a predictive model and an RL agent to plan comfortable and safe maneuvers. The predictive model is trained using historical driving data to predict the future positions of surrounding vehicles. The surrounding vehicles' past and predicted future positions are embedded in context-aware grid maps, and the RL agent learns to make maneuvers based on this spatio-temporal context information. Performance evaluation of PMP-DRL has been carried out using simulated environments generated from the publicly available NGSIM US101 and I80 datasets. The training sequence shows continuous improvement in the driving experience and indicates that the proposed PMP-DRL can learn the trade-off between safety and comfort. The decisions generated by a recent imitation learning-based model are compared with those of the proposed PMP-DRL for unseen scenarios. The results show that PMP-DRL can handle complex real-world scenarios and make more comfortable and safer maneuver decisions than rule-based and imitative models.

An efficient Deep Spatio-Temporal Context Aware decision Network (DST-CAN) for Predictive Manoeuvre Planning

May 20, 2022

Abstract: To ensure the safety and efficiency of its maneuvers, an Autonomous Vehicle (AV) should anticipate the future intentions of surrounding vehicles using its sensor information. If an AV can predict its surrounding vehicles' future trajectories, it can make safe and efficient maneuver decisions. In this paper, we present a Deep Spatio-Temporal Context-Aware decision Network (DST-CAN) model for predictive maneuver planning of AVs. A memory neuron network is used to predict the future trajectories of the surrounding vehicles. The driving environment's spatio-temporal information (past, present, and predicted future trajectories) is embedded into a context-aware grid. The proposed DST-CAN model employs these context-aware grids as inputs to a convolutional neural network to understand the spatial relationships between the vehicles and determine a safe and efficient maneuver decision. The DST-CAN model also uses information on human driving behavior on a highway. Performance evaluation of DST-CAN has been carried out using two publicly available NGSIM datasets, US-101 and I-80. In addition, rule-based ground truth decisions have been compared with those generated by DST-CAN. The results show that DST-CAN, using 3 seconds of predicted trajectories of neighboring vehicles, makes much better decisions than existing methods that do not use this prediction.
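To make the idea of a context-aware grid concrete, the sketch below rasterizes vehicle positions at a single time step into an ego-centered occupancy grid. It is a minimal illustration under stated assumptions, not the DST-CAN grid itself: the function name `build_context_grid`, the 13 x 3 grid shape, and the cell dimensions are hypothetical, and a full spatio-temporal input would presumably stack one such grid per past, present, and predicted time step.

```python
import math


def build_context_grid(ego_xy, vehicles_xy, rows=13, cols=3,
                       cell_length=4.5, lane_width=3.7):
    """Mark the grid cells occupied by surrounding vehicles at one time step.

    Assumed convention: x is lateral (across lanes), y is longitudinal (along
    the road), both in meters. The ego vehicle sits in the center cell.
    """
    grid = [[0] * cols for _ in range(rows)]
    ego_row, ego_col = rows // 2, cols // 2
    for x, y in vehicles_xy:
        # Offsets from the ego vehicle, rounded to the nearest cell index.
        col = ego_col + math.floor((x - ego_xy[0]) / lane_width + 0.5)
        row = ego_row + math.floor((y - ego_xy[1]) / cell_length + 0.5)
        # Vehicles outside the grid extent are ignored.
        if 0 <= row < rows and 0 <= col < cols:
            grid[row][col] = 1
    return grid


# Illustrative positions: one vehicle ahead in the adjacent lane, one directly
# behind, and one far outside the grid.
grid = build_context_grid((0.0, 0.0), [(3.7, 9.0), (0.0, -4.5), (100.0, 0.0)])
```

A grid like this keeps the spatial layout of the neighbors intact, which is what lets a convolutional network reason about relative positions.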
