Jarred Jordan

Quasi Real-Time Autonomous Satellite Detection and Orbit Estimation

Apr 13, 2023

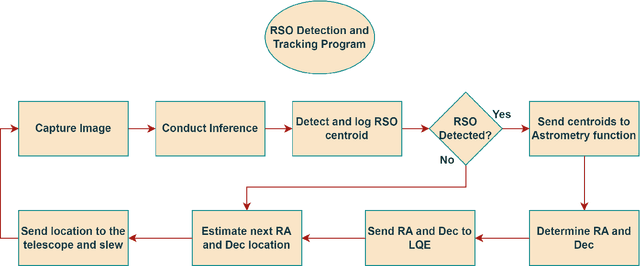

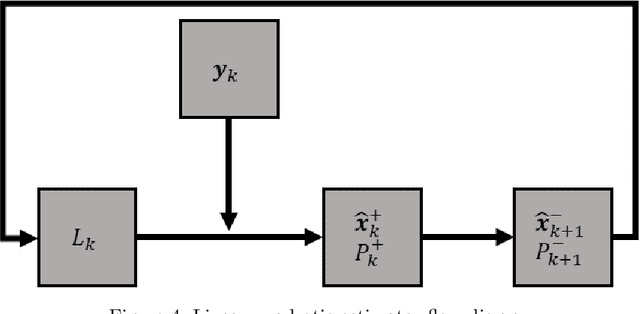

Abstract: A method for near real-time detection and tracking of resident space objects (RSOs) using a convolutional neural network (CNN) and a linear quadratic estimator (LQE) is proposed. Advances in machine learning architectures allow low-power, low-cost embedded devices to perform complex classification tasks. To reduce the cost of tracking systems, a low-cost embedded device will run a CNN detection model for RSOs in unresolved images captured by a grayscale camera and a small telescope. Detection results computed in near real time are then passed to the LQE, which computes tracking updates for the telescope mount, resulting in a fully autonomous method of optical RSO detection and tracking.

Keywords: Space Domain Awareness, Neural Networks, Real-Time, Object Detection, Embedded Systems.
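An LQE is another name for the Kalman filter, but the abstract does not give the filter's state model. The sketch below assumes a constant-velocity model in image (or mount-angle) coordinates, with the CNN supplying one (x, y) detection centroid per frame. The class name, time step, and noise levels are hypothetical illustrations, not the author's implementation.

```python
import numpy as np


class ConstantVelocityKF:
    """Minimal linear Kalman filter (LQE) with state [x, y, vx, vy].

    Assumption: each measurement is the (x, y) centroid of a CNN detection;
    dt, q and r are illustrative values, not tuned parameters.
    """

    def __init__(self, dt=1.0, q=1e-3, r=1.0):
        # State transition for constant velocity over one frame of length dt.
        self.F = np.array([[1, 0, dt, 0],
                           [0, 1, 0, dt],
                           [0, 0, 1, 0],
                           [0, 0, 0, 1]], dtype=float)
        # Only position is observed by the detector.
        self.H = np.array([[1, 0, 0, 0],
                           [0, 1, 0, 0]], dtype=float)
        self.Q = q * np.eye(4)    # process noise covariance
        self.R = r * np.eye(2)    # measurement noise covariance
        self.x = np.zeros(4)      # state estimate
        self.P = 1e3 * np.eye(4)  # large initial uncertainty

    def predict(self):
        """Propagate the state one frame ahead; returns the predicted state."""
        self.x = self.F @ self.x
        self.P = self.F @ self.P @ self.F.T + self.Q
        return self.x

    def update(self, z):
        """Correct the prediction with a detection centroid z = (x, y)."""
        y = np.asarray(z, dtype=float) - self.H @ self.x  # innovation
        S = self.H @ self.P @ self.H.T + self.R            # innovation covariance
        K = self.P @ self.H.T @ np.linalg.inv(S)           # Kalman gain
        self.x = self.x + K @ y
        self.P = (np.eye(4) - K @ self.H) @ self.P
        return self.x
```

A possible loop alternates `predict()` and `update()` once per frame; the position part of the next `predict()` is the point a mount controller could slew toward:

```python
kf = ConstantVelocityKF(dt=1.0)
for k in range(20):
    kf.predict()
    kf.update([10 + 2 * k, 50 - k])  # synthetic detections moving (2, -1) per frame
lead = kf.predict()[:2]              # approximately (50, 30)
```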

RGB-D Robotic Pose Estimation For a Servicing Robotic Arm

Jul 23, 2022

Abstract: A large number of robotic and human-assisted missions to the Moon and Mars are forecast. NASA's efforts to learn about the geology and composition of these celestial bodies rely heavily on robotic arms. Safety and redundancy will be crucial when humans work alongside robotic explorers. Robotic arms are also crucial to satellite servicing and planned orbital debris mitigation missions. The goal of this work is to create a custom Computer Vision (CV) based Artificial Neural Network (ANN) that can rapidly identify the posture of a 7 Degree of Freedom (DoF) robotic arm from a single RGB-D image, much as humans can easily tell whether an arm is pointing in some general direction. The Sawyer robotic arm is used to develop and train this algorithm. Because Sawyer's joint space spans 7 dimensions, covering the entire joint configuration space is infeasible. Instead, orthogonal arrays, similar to those of the Taguchi method, are used to span the joint space efficiently with a minimal number of training images. This "optimally" generated database is used to train the custom ANN, whose accuracy is, on average, equal to twice the smallest joint displacement step used to generate the database. A pre-trained ANN will be useful for estimating the postures of robotic manipulators on space stations, spacecraft, and rovers, as an auxiliary tool or for contingency plans.
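The abstract says orthogonal arrays are used "similar to the Taguchi method" but does not specify the array. As a minimal sketch, assuming seven evenly spaced levels per joint, the classic Bose construction below builds a strength-2 orthogonal array for a prime number of levels: every pair of joints sees every pair of levels exactly once. This is one standard construction, not necessarily the author's, and the joint limits in the usage are placeholders rather than Sawyer's actual limits.

```python
from itertools import combinations, product


def bose_oa(p, n_factors):
    """Strength-2 orthogonal array OA(p^2, n_factors, p, 2) for prime p.

    Row (i, j) has columns i, j, i + j, i + 2j, ..., i + (p - 1)j (mod p),
    which supports at most p + 1 factors.
    """
    if n_factors > p + 1:
        raise ValueError("Bose construction supports at most p + 1 factors")
    rows = []
    for i, j in product(range(p), repeat=2):
        row = [i, j] + [(i + m * j) % p for m in range(1, p)]
        rows.append(row[:n_factors])
    return rows


def is_strength_two(oa, p):
    """True if every pair of columns contains all p*p level pairs."""
    n = len(oa[0])
    for a, b in combinations(range(n), 2):
        if len({(row[a], row[b]) for row in oa}) != p * p:
            return False
    return True


def levels_to_angles(oa, p, joint_limits):
    """Map levels 0..p-1 to evenly spaced angles within each joint's (lo, hi)."""
    return [[lo + level * (hi - lo) / (p - 1)
             for level, (lo, hi) in zip(row, joint_limits)]
            for row in oa]


# Hypothetical example: 7 joints, 7 levels, placeholder limits in radians.
oa = bose_oa(7, 7)
poses = levels_to_angles(oa, 7, [(-3.0, 3.0)] * 7)
```

For seven joints at seven levels this yields 49 poses, compared with 7**7 = 823,543 for a full factorial grid at the same resolution.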

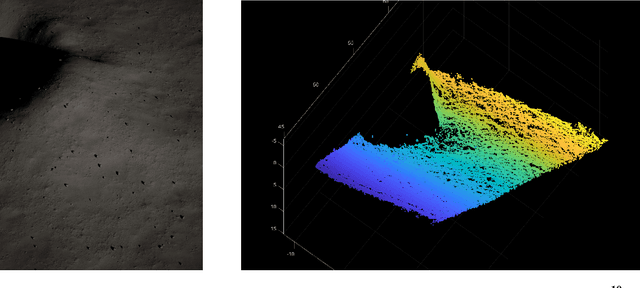

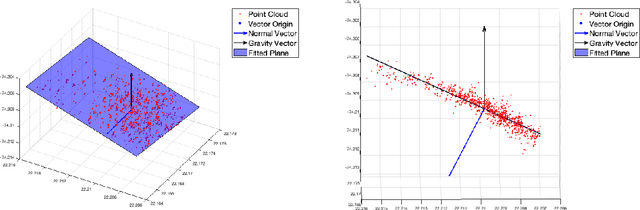

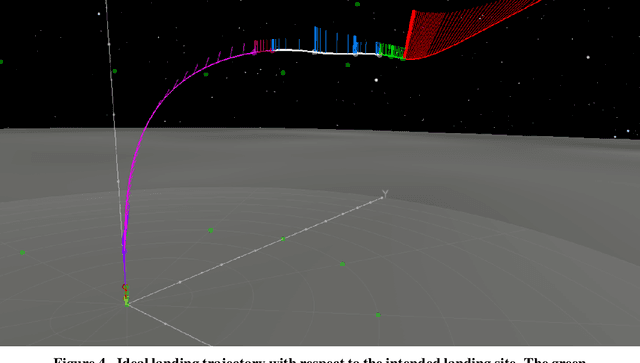

Detection and Initial Assessment of Lunar Landing Sites Using Neural Networks

Jul 23, 2022

Abstract: Robotic and human lunar landings are a focus of future NASA missions. Precision landing capabilities are vital to guarantee the success of the mission and the safety of the lander and crew. During the approach to the surface, there are multiple challenges associated with using Hazard Relative Navigation to ensure safe landings. This paper focuses on a passive, autonomous hazard detection and avoidance subsystem that generates an initial assessment of possible landing regions for the guidance system. The system uses a single camera and the MobileNetV2 neural network architecture to detect and discriminate between safe landing sites and hazards such as rocks, shadows, and craters. Monocular structure from motion then reconstructs the surface to provide slope and roughness analysis.
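The abstract states that the reconstructed surface feeds a slope and roughness analysis but does not define either metric. A minimal sketch, assuming the structure-from-motion output is a local patch of 3D points in a frame with +z up: fit a least-squares plane, take slope as the tilt of its normal from vertical, and take roughness as the RMS of the residuals. The function names and the safety thresholds are hypothetical.

```python
import numpy as np


def slope_and_roughness(points):
    """Fit z = a*x + b*y + c to an (N, 3) point patch.

    Returns (slope_deg, roughness): the angle between the plane normal and
    vertical, and the RMS vertical residual about the fitted plane.
    """
    pts = np.asarray(points, dtype=float)
    A = np.column_stack([pts[:, 0], pts[:, 1], np.ones(len(pts))])
    (a, b, c), *_ = np.linalg.lstsq(A, pts[:, 2], rcond=None)
    normal = np.array([-a, -b, 1.0])  # normal of the plane z = a*x + b*y + c
    slope = np.degrees(np.arccos(normal[2] / np.linalg.norm(normal)))
    residuals = pts[:, 2] - A @ np.array([a, b, c])
    roughness = float(np.sqrt(np.mean(residuals ** 2)))
    return float(slope), roughness


def is_safe(points, max_slope_deg=10.0, max_roughness=0.1):
    """Hypothetical pass/fail check; thresholds are illustrative only."""
    slope, rough = slope_and_roughness(points)
    return slope <= max_slope_deg and rough <= max_roughness
```

A flat patch tilted by 5 degrees passes, while the same patch tilted by 20 degrees fails:

```python
xs, ys = np.meshgrid(np.linspace(0, 5, 11), np.linspace(0, 5, 11))
patch = np.column_stack([xs.ravel(), ys.ravel(),
                         (np.tan(np.radians(5.0)) * xs).ravel()])
slope, rough = slope_and_roughness(patch)  # slope ~ 5.0, rough ~ 0.0
```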

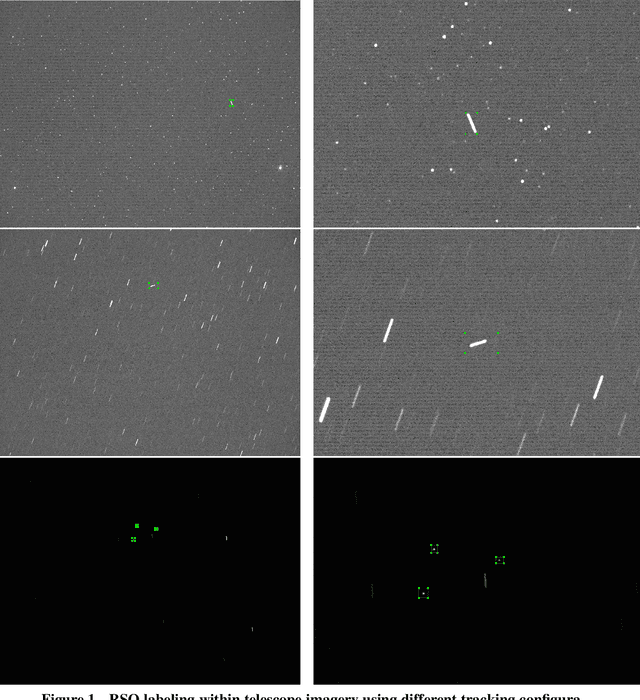

Satellite Detection in Unresolved Space Imagery for Space Domain Awareness Using Neural Networks

Jul 23, 2022

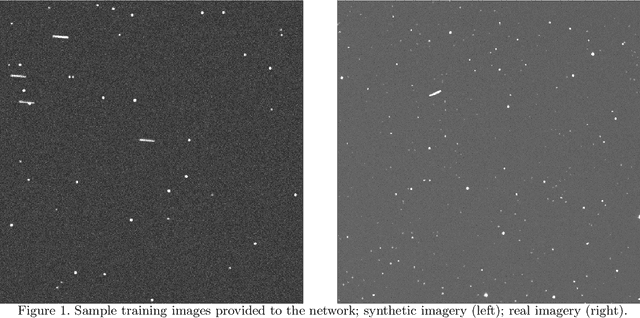

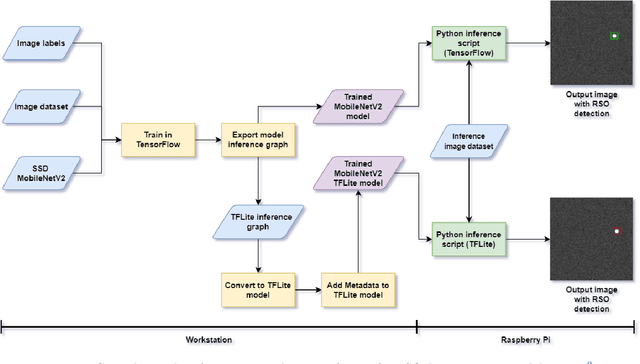

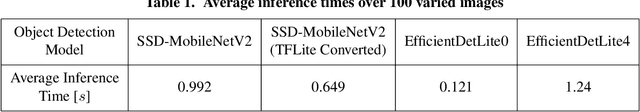

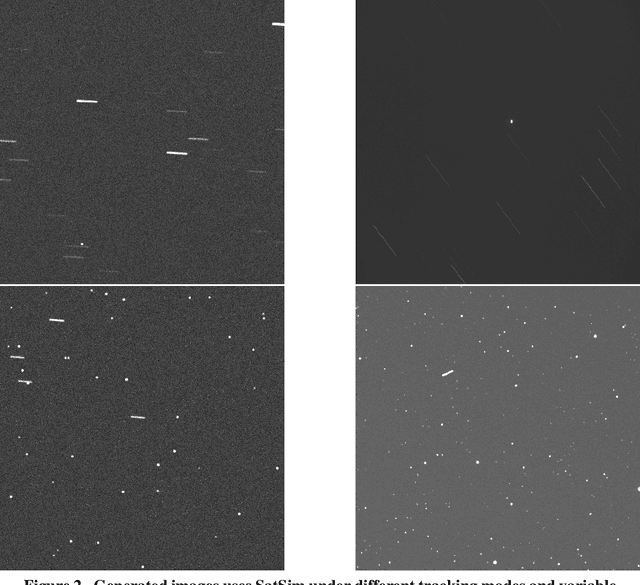

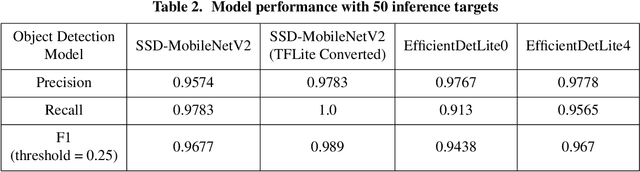

Abstract: This work uses a MobileNetV2 Convolutional Neural Network (CNN) for fast, mobile detection of satellites, and rejection of stars, in cluttered unresolved space imagery. First, a custom database is created from the output of a synthetic satellite image program, and "satellite-positive" images are labeled with bounding boxes over the satellites. The CNN is then trained on this database, and its inference is validated by measuring the model's accuracy on an external dataset of real telescope imagery. The trained CNN thus provides a method of rapid satellite identification for subsequent use in ground-based orbit estimation.
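The abstract says the model is validated by its accuracy on real telescope imagery but does not name the metric. A minimal sketch, assuming the common object-detection convention of matching predicted boxes one-to-one to labeled satellite boxes by intersection over union (IoU) at a 0.5 threshold; under that convention a star boxed as a satellite counts as a false positive. The function names and the threshold are assumptions, not the author's evaluation code.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def match_detections(preds, truths, thresh=0.5):
    """Greedy one-to-one matching of predictions to ground-truth boxes.

    preds: list of (score, box); truths: list of boxes.
    Higher-scoring predictions are matched first.
    Returns (true_positives, false_positives, false_negatives).
    """
    unmatched = list(range(len(truths)))
    tp = fp = 0
    for _, box in sorted(preds, key=lambda p: p[0], reverse=True):
        best, best_iou = None, thresh
        for t in unmatched:
            overlap = iou(box, truths[t])
            if overlap >= best_iou:
                best, best_iou = t, overlap
        if best is None:
            fp += 1  # e.g. a star or noise boxed as a satellite
        else:
            unmatched.remove(best)
            tp += 1
    return tp, fp, len(unmatched)  # leftover truths are missed satellites
```

From the returned counts, precision is `tp / (tp + fp)` and recall is `tp / (tp + fn)`; reporting both separates star rejection (false positives) from missed satellites (false negatives).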
