Jannis Chemseddine

Adapting Noise to Data: Generative Flows from 1D Processes

Oct 14, 2025

Abstract:We introduce a general framework for constructing generative models using one-dimensional noising processes. Beyond diffusion processes, we outline examples that demonstrate the flexibility of our approach. Motivated by this, we propose a novel framework in which the 1D processes themselves are learnable, achieved by parameterizing the noise distribution through quantile functions that adapt to the data. Our construction integrates seamlessly with standard objectives, including Flow Matching and consistency models. Learning quantile-based noise naturally captures heavy tails and compact supports when present. Numerical experiments highlight both the flexibility and the effectiveness of our method.
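The idea of defining a noise distribution through its quantile function can be illustrated with inverse transform sampling: draw $u \sim \mathrm{Uniform}(0,1)$ and return $Q(u)$. The following is a minimal sketch, not the paper's implementation. The function names and the piecewise-linear parametrization (`piecewise_linear_quantile`, with softplus increments to enforce monotonicity) are assumptions made for illustration only.

```python
import numpy as np


def sample_from_quantile(quantile_fn, n, rng):
    """Inverse transform sampling: push uniform draws through a quantile function."""
    u = rng.uniform(0.0, 1.0, size=n)
    return quantile_fn(u)


def cauchy_quantile(u):
    """Quantile function of the standard Cauchy distribution (heavy tails)."""
    return np.tan(np.pi * (u - 0.5))


def uniform_quantile(u):
    """Quantile function of the uniform distribution on [-1, 1] (compact support)."""
    return 2.0 * u - 1.0


def piecewise_linear_quantile(raw_params, lo=-1.0):
    """Hypothetical learnable quantile function (an assumption, not from the paper).

    Knots sit at equally spaced levels in [0, 1]. Softplus makes every
    increment positive, so the resulting function is strictly increasing.
    """
    increments = np.log1p(np.exp(np.asarray(raw_params, dtype=float)))  # softplus > 0
    values = lo + np.concatenate([[0.0], np.cumsum(increments)])
    levels = np.linspace(0.0, 1.0, values.size)
    return lambda u: np.interp(u, levels, values)


rng = np.random.default_rng(0)
heavy_tailed_noise = sample_from_quantile(cauchy_quantile, 1000, rng)
bounded_noise = sample_from_quantile(uniform_quantile, 1000, rng)
adaptive_noise = sample_from_quantile(piecewise_linear_quantile(np.zeros(8)), 1000, rng)
```

In a learned setting, `raw_params` would be trained jointly with the generative model. Here they are fixed, and the sketch only shows that the same sampling routine covers heavy-tailed, compactly supported, and parametrized noise.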

Neural Sampling from Boltzmann Densities: Fisher-Rao Curves in the Wasserstein Geometry

Oct 04, 2024

Abstract:We deal with the task of sampling from an unnormalized Boltzmann density $\rho_D$ by learning a Boltzmann curve given by energies $f_t$ starting in a simple density $\rho_Z$. First, we examine conditions under which Fisher-Rao flows are absolutely continuous in the Wasserstein geometry. Second, we address specific interpolations $f_t$ and the learning of the related density/velocity pairs $(\rho_t,v_t)$. It was numerically observed that the linear interpolation, which requires only a parametrization of the velocity field $v_t$, suffers from a "teleportation-of-mass" issue. Using tools from the Wasserstein geometry, we give an analytical example, where we can precisely measure the explosion of the velocity field. Inspired by Máté and Fleuret, who parametrize both $f_t$ and $v_t$, we propose an interpolation which parametrizes only $f_t$ and fixes an appropriate $v_t$. This corresponds to the Wasserstein gradient flow of the Kullback-Leibler divergence related to Langevin dynamics. We demonstrate by numerical examples that our model provides a well-behaved flow field which successfully solves the above sampling task.

Conditional Wasserstein Distances with Applications in Bayesian OT Flow Matching

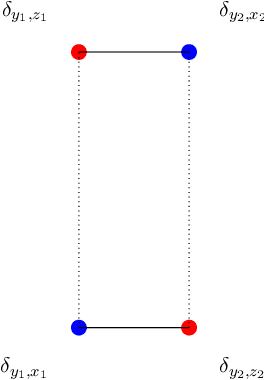

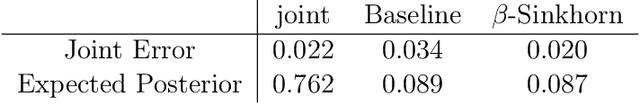

Mar 27, 2024

Abstract:In inverse problems, many conditional generative models approximate the posterior measure by minimizing a distance between the joint measure and its learned approximation. While this approach also controls the distance between the posterior measures in the case of the Kullback-Leibler divergence, this does not hold in general for the Wasserstein distance. In this paper, we introduce a conditional Wasserstein distance via a set of restricted couplings that equals the expected Wasserstein distance of the posteriors. Interestingly, the dual formulation of the conditional Wasserstein-1 flow resembles losses in the conditional Wasserstein GAN literature in a quite natural way. We derive theoretical properties of the conditional Wasserstein distance, characterize the corresponding geodesics and velocity fields as well as the flow ODEs. Subsequently, we propose to approximate the velocity fields by relaxing the conditional Wasserstein distance. Based on this, we propose an extension of OT Flow Matching for solving Bayesian inverse problems and demonstrate its numerical advantages on an inverse problem and class-conditional image generation.

Y-Diagonal Couplings: Approximating Posteriors with Conditional Wasserstein Distances

Oct 20, 2023

Abstract:In inverse problems, many conditional generative models approximate the posterior measure by minimizing a distance between the joint measure and its learned approximation. While this approach also controls the distance between the posterior measures in the case of the Kullback-Leibler divergence, this does not hold for the Wasserstein distance. We will introduce a conditional Wasserstein distance with a set of restricted couplings that equals the expected Wasserstein distance of the posteriors. By deriving its dual, we find a rigorous way to motivate the loss of conditional Wasserstein GANs. We outline conditions under which the vanilla and the conditional Wasserstein distance coincide. Furthermore, we will show numerical examples where training with the conditional Wasserstein distance yields favorable properties for posterior sampling.
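The construction summarized above can be written out as follows. This is a sketch in notation assumed here, not taken from the abstract: $P_{Y,X}$ and $P_{Y,Z}$ are joint measures with a common $Y$-marginal $P_Y$, $\Gamma(\cdot,\cdot)$ denotes the set of couplings, and $\pi_{y_1}, \pi_{y_2}$ project onto the two $Y$-components. The restricted set keeps only couplings whose $Y$-components coincide, so that mass is transported within each observation $y$:

$$
\Gamma_Y(P_{Y,X}, P_{Y,Z}) = \bigl\{ \alpha \in \Gamma(P_{Y,X}, P_{Y,Z}) : (\pi_{y_1}, \pi_{y_2})_\# \alpha = (\mathrm{id}, \mathrm{id})_\# P_Y \bigr\},
$$

$$
W_{p,Y}^p(P_{Y,X}, P_{Y,Z}) = \inf_{\alpha \in \Gamma_Y} \int \|x - z\|^p \, \mathrm{d}\alpha\bigl((y_1,x),(y_2,z)\bigr) = \mathbb{E}_{y \sim P_Y}\bigl[ W_p^p(P_{X|Y=y}, P_{Z|Y=y}) \bigr].
$$

The last equality is the identity the abstract states. The precise assumptions under which it holds are given in the paper.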

Posterior Sampling Based on Gradient Flows of the MMD with Negative Distance Kernel

Oct 04, 2023

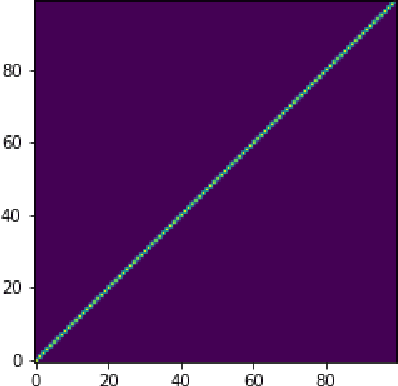

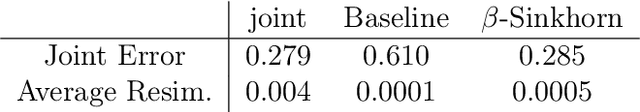

Abstract:We propose conditional flows of the maximum mean discrepancy (MMD) with the negative distance kernel for posterior sampling and conditional generative modeling. This MMD, which is also known as energy distance, has several advantageous properties like efficient computation via slicing and sorting. We approximate the joint distribution of the ground truth and the observations using discrete Wasserstein gradient flows and establish an error bound for the posterior distributions. Further, we prove that our particle flow is indeed a Wasserstein gradient flow of an appropriate functional. The power of our method is demonstrated by numerical examples including conditional image generation and inverse problems like superresolution, inpainting and computed tomography in low-dose and limited-angle settings.
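To illustrate why sorting makes this MMD cheap in one dimension, the following sketch computes the squared MMD with kernel $K(x,y) = -|x-y|$ between two 1D samples, i.e. $2\,\mathbb{E}|X-Y| - \mathbb{E}|X-X'| - \mathbb{E}|Y-Y'|$, in $O(n \log n)$ time. It is a minimal illustration, not the paper's implementation. The function names are hypothetical, and the slicing step (projecting higher-dimensional samples onto random directions) is omitted.

```python
import numpy as np


def pairwise_abs_sum(v):
    """Sum of |v_i - v_j| over all ordered pairs (i, j), computed via sorting.

    For sorted values s_0 <= ... <= s_{n-1}, the value s_k is the larger
    element in k unordered pairs and the smaller one in n - 1 - k pairs,
    so it enters the sum with weight 2k - n + 1.
    """
    s = np.sort(np.asarray(v, dtype=float))
    n = s.size
    weights = 2 * np.arange(n) - n + 1
    return 2.0 * float(np.dot(weights, s))


def energy_distance_1d(x, y):
    """Squared MMD with negative distance kernel between two 1D samples."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    n, m = x.size, y.size
    s_xx = pairwise_abs_sum(x)
    s_yy = pairwise_abs_sum(y)
    # The pooled sum counts every cross pair twice, plus both within-sample sums.
    s_xy = 0.5 * (pairwise_abs_sum(np.concatenate([x, y])) - s_xx - s_yy)
    return 2.0 * s_xy / (n * m) - s_xx / n**2 - s_yy / m**2


def energy_distance_bruteforce(x, y):
    """Reference O(n * m) implementation used only to check the sorted version."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    d_xy = np.abs(x[:, None] - y[None, :]).mean()
    d_xx = np.abs(x[:, None] - x[None, :]).mean()
    d_yy = np.abs(y[:, None] - y[None, :]).mean()
    return 2.0 * d_xy - d_xx - d_yy
```

The cross term is recovered from the pooled sample, so only three sorts are needed and no pairwise distance matrix is formed.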
