Jan Hajič Jr.

Understanding Optical Music Recognition

Aug 14, 2019

Abstract: For over 50 years, researchers have been trying to teach computers to read music notation, a task referred to as Optical Music Recognition (OMR). However, this field is still difficult to access for new researchers, especially those without a significant musical background: few introductory materials are available, and the field has struggled to define itself and build a shared terminology. In this tutorial, we address these shortcomings by (1) providing a robust definition of OMR and its relationship to related fields, (2) analyzing how OMR inverts the music encoding process to recover the musical notation and the musical semantics from documents, and (3) proposing a taxonomy of OMR, most notably a novel taxonomy of applications. Additionally, we discuss how deep learning affects modern OMR research, as opposed to the traditional pipeline. Based on this work, the reader should be able to attain a basic understanding of OMR: its objectives, its inherent structure, its relationship to other fields, the state of the art, and the research opportunities it affords.

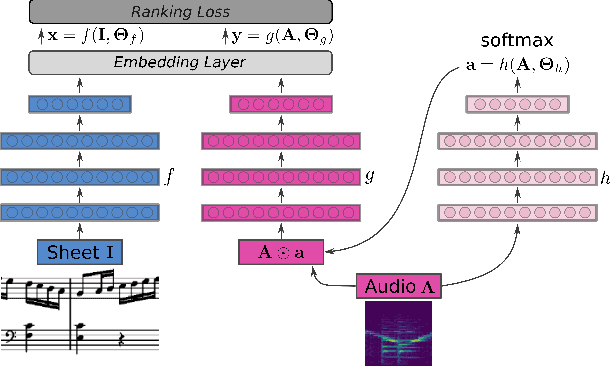

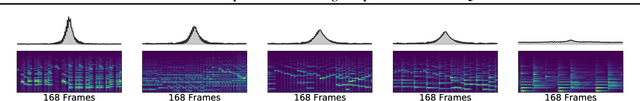

Attention as a Perspective for Learning Tempo-invariant Audio Queries

Sep 15, 2018

Abstract: Current models for audio-sheet music retrieval via multimodal embedding space learning use convolutional neural networks with a fixed-size window for the input audio. Depending on the tempo of a query performance, this window captures more or less musical content, while notehead density in the score is largely tempo-independent. In this work, we address this disparity with a soft attention mechanism, which allows the model to encode only those parts of an audio excerpt that are most relevant with respect to efficient query codes. Empirical results on classical piano music indicate that attention is beneficial for retrieval performance and exhibits intuitively appealing behavior.

Detecting Noteheads in Handwritten Scores with ConvNets and Bounding Box Regression

Aug 05, 2017

Abstract: Noteheads are the interface between the written score and music. Each notehead on the page signifies one note to be played, and detecting noteheads is thus an unavoidable step for Optical Music Recognition. Noteheads are clearly distinct objects; however, the variety of music notation handwriting makes them harder to identify. While handwritten music notation symbol *classification* is a well-studied task, symbol *detection* has usually been limited to heuristics and rule-based systems instead of machine learning methods better suited to deal with the uncertainties in handwriting. We present ongoing work on a simple notehead detector using convolutional neural networks for pixel classification and bounding box regression that achieves a detection F-score of 0.97 on binary score images in the MUSCIMA++ dataset, does not require staff removal, and is applicable to a variety of handwriting styles and levels of musical complexity.
