Jamshid Mozafari

PARSE: An Open-Domain Reasoning Question Answering Benchmark for Persian

Feb 01, 2026

Abstract: Reasoning-focused Question Answering (QA) has advanced rapidly with Large Language Models (LLMs), yet high-quality benchmarks for low-resource languages remain scarce. Persian, spoken by roughly 130 million people, lacks a comprehensive open-domain resource for evaluating reasoning-capable QA systems. We introduce PARSE, the first open-domain Persian reasoning QA benchmark, containing 10,800 questions across Boolean, multiple-choice, and factoid formats, with diverse reasoning types, difficulty levels, and answer structures. The benchmark is built via a controlled LLM-based generation pipeline and validated through human evaluation. We also ensure linguistic and factual quality through multi-stage filtering, annotation, and consistency checks. We benchmark multilingual and Persian LLMs under multiple prompting strategies and show that Persian prompts and structured prompting (CoT for Boolean/multiple-choice; few-shot for factoid) improve performance. Fine-tuning further boosts results, especially for Persian-specialized models. These findings highlight how PARSE supports both fair comparison and practical model adaptation. PARSE fills a critical gap in Persian QA research and provides a strong foundation for developing and evaluating reasoning-capable LLMs in low-resource settings.

Inferential Question Answering

Feb 01, 2026

Abstract: Despite extensive research on a wide range of question answering (QA) systems, most existing work focuses on answer containment, i.e., it assumes that answers can be directly extracted and/or generated from documents in the corpus. However, some questions require inference, i.e., deriving answers that are not explicitly stated but can be inferred from the available information. We introduce Inferential QA, a new task that challenges models to infer answers from answer-supporting passages which provide only clues. To study this problem, we construct the QUIT (QUestions requiring Inference from Texts) dataset, comprising 7,401 questions and 2.4M passages built from high-convergence human- and machine-authored hints, labeled across three relevance levels using LLM-based answerability and human verification. Through comprehensive evaluation of retrievers, rerankers, and LLM-based readers, we show that methods effective on traditional QA tasks struggle in inferential QA: retrievers underperform, rerankers offer limited gains, and fine-tuning provides inconsistent improvements. Even reasoning-oriented LLMs fail to outperform smaller general-purpose models. These findings reveal that current QA pipelines are not yet ready for inference-based reasoning. Inferential QA thus establishes a new class of QA tasks that move towards understanding and reasoning from indirect textual evidence.

DeAR: Dual-Stage Document Reranking with Reasoning Agents via LLM Distillation

Aug 23, 2025

Abstract: Large Language Models (LLMs) have transformed listwise document reranking by enabling global reasoning over candidate sets, yet single models often struggle to balance fine-grained relevance scoring with holistic cross-document analysis. We propose DeepAgentRank (DeAR), an open-source framework that decouples these tasks through a dual-stage approach, achieving superior accuracy and interpretability. In Stage 1, we distill token-level relevance signals from a frozen 13B LLaMA teacher into a compact 3B or 8B student model using a hybrid of cross-entropy, RankNet, and KL divergence losses, ensuring robust pointwise scoring. In Stage 2, we attach a second LoRA adapter and fine-tune on 20K GPT-4o-generated chain-of-thought permutations, enabling listwise reasoning with natural-language justifications. Evaluated on TREC-DL19/20, eight BEIR datasets, and NovelEval-2306, DeAR surpasses open-source baselines by +5.1 nDCG@5 on DL20 and achieves 90.97 nDCG@10 on NovelEval, outperforming GPT-4 by +3.09. Without fine-tuning on Wikipedia, DeAR also excels in open-domain QA, achieving 54.29 Top-1 accuracy on Natural Questions, surpassing baselines like MonoT5, UPR, and RankGPT. Ablations confirm that dual-loss distillation ensures stable calibration, making DeAR a highly effective and interpretable solution for modern reranking systems. Dataset and code are available at https://github.com/DataScienceUIBK/DeAR-Reranking.
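The Stage 1 objective mentioned in the DeAR abstract combines cross-entropy, RankNet, and KL divergence terms. The following is a minimal sketch of how such a hybrid loss could be assembled for one query, not the authors' implementation: the function names, the equal default weights (`w_ce`, `w_rank`, `w_kl`), the use of binary labels for the cross-entropy term, and the choice of teacher scores to define the RankNet pair ordering are all assumptions made for illustration.

```python
import math

def softplus(x):
    # Numerically stable log(1 + exp(x)).
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))

def softmax(scores):
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def cross_entropy(student, labels):
    # Pointwise binary cross-entropy on sigmoid(student score) vs. 0/1 labels.
    return sum(softplus(s) - y * s for s, y in zip(student, labels)) / len(student)

def ranknet(student, teacher):
    # Pairwise logistic loss over pairs the teacher ranks as i above j (assumed ordering source).
    losses = [
        softplus(-(student[i] - student[j]))
        for i in range(len(student))
        for j in range(len(student))
        if teacher[i] > teacher[j]
    ]
    return sum(losses) / len(losses) if losses else 0.0

def kl_divergence(teacher, student):
    # KL(softmax(teacher) || softmax(student)) over the candidate list.
    p, q = softmax(teacher), softmax(student)
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)

def hybrid_loss(student, teacher, labels, w_ce=1.0, w_rank=1.0, w_kl=1.0):
    # Weighted sum of the three terms; the weights are hypothetical hyperparameters.
    return (
        w_ce * cross_entropy(student, labels)
        + w_rank * ranknet(student, teacher)
        + w_kl * kl_divergence(teacher, student)
    )

teacher_scores = [3.0, 1.0, -2.0]
relevance_labels = [1, 0, 0]
aligned_student = [2.5, 0.5, -1.5]
reversed_student = [-1.5, 0.5, 2.5]
print(hybrid_loss(aligned_student, teacher_scores, relevance_labels))
print(hybrid_loss(reversed_student, teacher_scores, relevance_labels))
```

In this sketch, a student whose scores follow the teacher's ordering receives a lower loss than one that reverses it, which is the behaviour a distillation objective of this kind is meant to reward.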

RankArena: A Unified Platform for Evaluating Retrieval, Reranking and RAG with Human and LLM Feedback

Aug 07, 2025

Abstract: Evaluating the quality of retrieval-augmented generation (RAG) and document reranking systems remains challenging due to the lack of scalable, user-centric, and multi-perspective evaluation tools. We introduce RankArena, a unified platform for comparing and analysing the performance of retrieval pipelines, rerankers, and RAG systems using structured human and LLM-based feedback, as well as for collecting such feedback. RankArena supports multiple evaluation modes: direct reranking visualisation, blind pairwise comparisons with human or LLM voting, supervised manual document annotation, and end-to-end RAG answer quality assessment. It captures fine-grained relevance feedback through both pairwise preferences and full-list annotations, along with auxiliary metadata such as movement metrics, annotation time, and quality ratings. The platform also integrates LLM-as-a-judge evaluation, enabling comparison between model-generated rankings and human ground truth annotations. All interactions are stored as structured evaluation datasets that can be used to train rerankers, reward models, judgment agents, or retrieval strategy selectors. Our platform is publicly available at https://rankarena.ngrok.io/, and a demo video is provided at https://youtu.be/jIYAP4PaSSI.

It's High Time: A Survey of Temporal Information Retrieval and Question Answering

May 26, 2025

Abstract: Time plays a critical role in how information is generated, retrieved, and interpreted. In this survey, we provide a comprehensive overview of Temporal Information Retrieval and Temporal Question Answering, two research areas aimed at handling and understanding time-sensitive information. As the amount of time-stamped content from sources like news articles, web archives, and knowledge bases increases, systems must address challenges such as detecting temporal intent, normalizing time expressions, ordering events, and reasoning over evolving or ambiguous facts. These challenges are critical across many dynamic and time-sensitive domains, from news and encyclopedias to science, history, and social media. We review both traditional approaches and modern neural methods, including those that use transformer models and Large Language Models (LLMs). We also review recent advances in temporal language modeling, multi-hop reasoning, and retrieval-augmented generation (RAG), alongside benchmark datasets and evaluation strategies that test temporal robustness, recency awareness, and generalization.

From Retrieval to Generation: Comparing Different Approaches

Feb 27, 2025

Abstract: Knowledge-intensive tasks, particularly open-domain question answering (ODQA), document reranking, and retrieval-augmented language modeling, require a balance between retrieval accuracy and generative flexibility. Traditional retrieval models such as BM25 and Dense Passage Retrieval (DPR) efficiently retrieve from large corpora but often lack semantic depth. Generative models like GPT-4o provide richer contextual understanding but face challenges in maintaining factual consistency. In this work, we conduct a systematic evaluation of retrieval-based, generation-based, and hybrid models, with a primary focus on their performance in ODQA and related retrieval-augmented tasks. Our results show that dense retrievers, particularly DPR, achieve strong performance in ODQA with a top-1 accuracy of 50.17% on NQ, while hybrid models improve nDCG@10 scores on BEIR from 43.42 (BM25) to 52.59, demonstrating their strength in document reranking. Additionally, we analyze language modeling tasks using WikiText-103, showing that retrieval-based approaches like BM25 achieve lower perplexity compared to generative and hybrid methods, highlighting their utility in retrieval-augmented generation. By providing detailed comparisons and practical insights into the conditions where each approach excels, we aim to facilitate future optimizations in retrieval, reranking, and generative models for ODQA and related knowledge-intensive applications.
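For readers unfamiliar with the two metrics quoted in the abstract above, the following is a minimal sketch of nDCG@k and top-k accuracy. It is an illustration only, not the evaluation code used in the paper: the function names are hypothetical, the linear gain (`rel / log2(rank + 1)`) is one of two common variants (the other uses `2**rel - 1`), and the optional `judged_relevances` argument is an assumption about how the ideal ranking would be supplied. Scores computed this way lie in [0, 1]; the abstract's values appear to be scaled by 100.

```python
import math

def dcg_at_k(relevances, k):
    # Discounted cumulative gain with linear gain (an assumed variant).
    return sum(
        rel / math.log2(rank + 1)
        for rank, rel in enumerate(relevances[:k], start=1)
    )

def ndcg_at_k(ranked_relevances, k, judged_relevances=None):
    # The ideal ranking is built from all judged documents when provided;
    # falling back to the ranked list itself is a simplification.
    pool = judged_relevances if judged_relevances is not None else ranked_relevances
    ideal = dcg_at_k(sorted(pool, reverse=True), k)
    return dcg_at_k(ranked_relevances, k) / ideal if ideal > 0 else 0.0

def top_k_accuracy(hits_per_question, k):
    # hits_per_question[i][r] is True if the passage at rank r+1 for
    # question i contains a gold answer.
    answered = sum(1 for hits in hits_per_question if any(hits[:k]))
    return answered / len(hits_per_question)

print(ndcg_at_k([1, 3, 0, 2], k=3))
print(top_k_accuracy([[True, False], [False, True]], k=1))
```

Passing `judged_relevances` matters when relevant documents are missing from the ranked list, since otherwise the ideal DCG is underestimated and the score is inflated.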

MultiOCR-QA: Dataset for Evaluating Robustness of LLMs in Question Answering on Multilingual OCR Texts

Feb 24, 2025

Abstract: Optical Character Recognition (OCR) plays a crucial role in digitizing historical and multilingual documents, yet OCR errors (imperfect extraction of the text, including character insertion, deletion, and permutation) can significantly impact downstream tasks like question answering (QA). In this work, we introduce a multilingual QA dataset, MultiOCR-QA, designed to analyze the effects of OCR noise on QA systems' performance. The MultiOCR-QA dataset comprises 60K question-answer pairs covering three languages: English, French, and German. The dataset is curated from OCR-ed old documents, allowing for the evaluation of OCR-induced challenges on question answering. We evaluate MultiOCR-QA on various levels and types of OCR errors to assess the robustness of LLMs in handling real-world digitization errors. Our findings show that QA systems are highly prone to OCR-induced errors and exhibit performance degradation on noisy OCR text.

Wrong Answers Can Also Be Useful: PlausibleQA -- A Large-Scale QA Dataset with Answer Plausibility Scores

Feb 22, 2025

Abstract: Large Language Models (LLMs) are revolutionizing information retrieval, with chatbots becoming an important source for answering user queries. Because LLMs are designed to prioritize generating correct answers, the value of highly plausible yet incorrect answers (candidate answers) tends to be overlooked. However, such answers can still prove useful; for example, they can play a crucial role in tasks like Multiple-Choice Question Answering (MCQA) and QA Robustness Assessment (QARA). Existing QA datasets primarily focus on correct answers without explicit consideration of the plausibility of other candidate answers, limiting the opportunity for more nuanced evaluations of models. To address this gap, we introduce PlausibleQA, a large-scale dataset comprising 10,000 questions and 100,000 candidate answers, each annotated with plausibility scores and justifications for their selection. Additionally, the dataset includes 900,000 justifications for pairwise comparisons between candidate answers, further refining plausibility assessments. We evaluate PlausibleQA through human assessments and empirical experiments, demonstrating its utility in MCQA and QARA analysis. Our findings show that plausibility-aware approaches are effective for MCQA distractor generation and QARA. We release PlausibleQA as a resource for advancing QA research and enhancing LLM performance in distinguishing plausible distractors from correct answers.

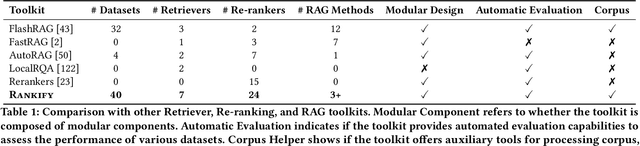

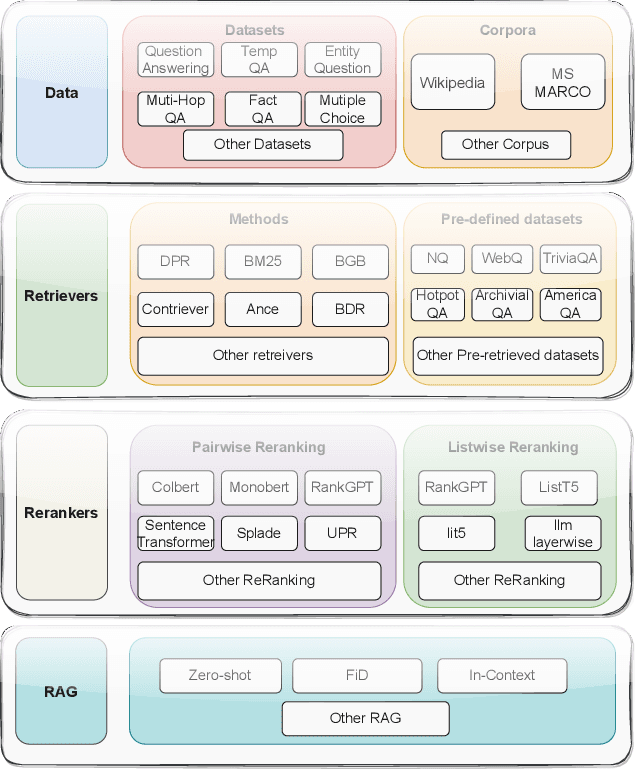

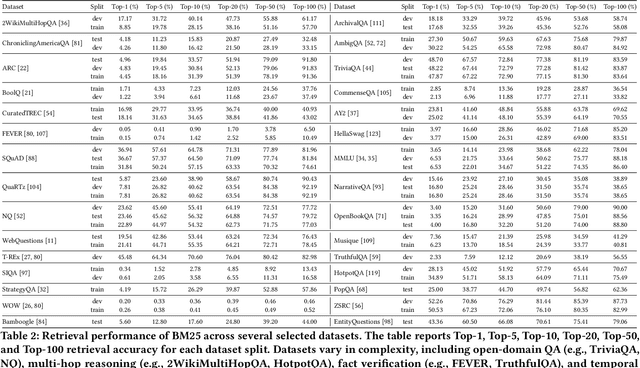

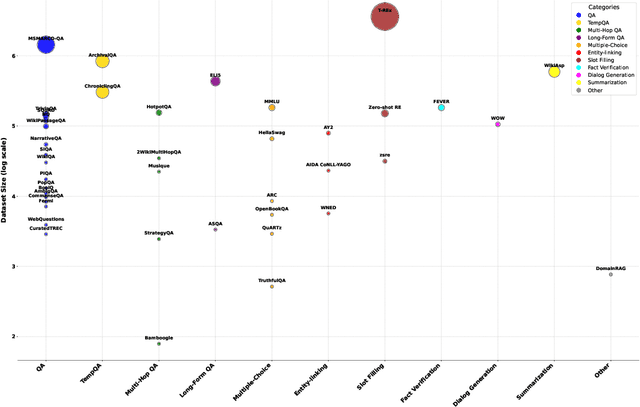

Rankify: A Comprehensive Python Toolkit for Retrieval, Re-Ranking, and Retrieval-Augmented Generation

Feb 04, 2025

Abstract: Retrieval, re-ranking, and retrieval-augmented generation (RAG) are critical components of modern natural language processing (NLP) applications in information retrieval, question answering, and knowledge-based text generation. However, existing solutions are often fragmented, lacking a unified framework that easily integrates these essential processes. The absence of a standardized implementation, coupled with the complexity of retrieval and re-ranking workflows, makes it challenging for researchers to compare and evaluate different approaches in a consistent environment. While existing toolkits such as Rerankers and RankLLM provide general-purpose reranking pipelines, they often lack the flexibility required for fine-grained experimentation and benchmarking. In response to these challenges, we introduce Rankify, a powerful and modular open-source toolkit designed to unify retrieval, re-ranking, and RAG within a cohesive framework. Rankify supports a wide range of retrieval techniques, including dense and sparse retrievers, while incorporating state-of-the-art re-ranking models to enhance retrieval quality. Additionally, Rankify includes a collection of pre-retrieved datasets to facilitate benchmarking, available on Hugging Face (https://huggingface.co/datasets/abdoelsayed/reranking-datasets). To encourage adoption and ease of integration, we provide comprehensive documentation (http://rankify.readthedocs.io/), an open-source implementation on GitHub (https://github.com/DataScienceUIBK/rankify), and a PyPI package for effortless installation (https://pypi.org/project/rankify/). By providing a unified and lightweight framework, Rankify allows researchers and practitioners to advance retrieval and re-ranking methodologies while ensuring consistency, scalability, and ease of use.

HintEval: A Comprehensive Framework for Hint Generation and Evaluation for Questions

Feb 02, 2025

Abstract: Large Language Models (LLMs) are transforming how people find information, and many users now turn to chatbots to obtain answers to their questions. Despite the instant access to abundant information that LLMs offer, it is still important to promote critical thinking and problem-solving skills. Automatic hint generation is a new task that aims to support humans in answering questions by themselves by creating hints that guide users toward answers without directly revealing them. In this context, hint evaluation focuses on measuring the quality of hints, helping to improve hint generation approaches. However, resources for hint research currently span different formats and datasets, while evaluation tools are missing or incompatible, making it hard for researchers to compare and test their models. To overcome these challenges, we introduce HintEval, a Python library that makes it easy to access diverse datasets and provides multiple approaches to generate and evaluate hints. HintEval aggregates the scattered resources into a single toolkit that supports a range of research goals and enables a clear, multi-faceted, and reliable evaluation. The proposed library also includes detailed online documentation, helping users quickly explore its features and get started. By reducing barriers to entry and encouraging consistent evaluation practices, HintEval offers a major step forward for facilitating hint generation and analysis research within the NLP/IR community.
