Jakub Smékal

Towards a theory of learning dynamics in deep state space models

Jul 10, 2024

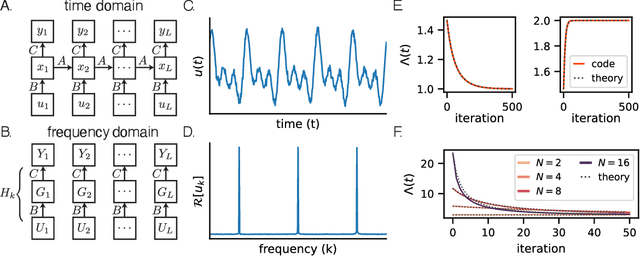

Abstract: State space models (SSMs) have shown remarkable empirical performance on many long sequence modeling tasks, but a theoretical understanding of these models is still lacking. In this work, we study the learning dynamics of linear SSMs to understand how covariance structure in data, latent state size, and initialization affect the evolution of parameters throughout learning with gradient descent. We show that focusing on the learning dynamics in the frequency domain affords analytical solutions under mild assumptions, and we establish a link between one-dimensional SSMs and the dynamics of deep linear feed-forward networks. Finally, we analyze how latent state over-parameterization affects convergence time and describe future work in extending our results to the study of deep SSMs with nonlinear connections. This work is a step toward a theory of learning dynamics in deep state space models.

Decentralized Technologies for AI Hubs

Jun 07, 2023

Abstract: AI requires large amounts of storage and compute, with assets that are commonly stored in AI Hubs. AI Hubs have contributed significantly to the democratization of AI. However, existing implementations are associated with certain benefits and limitations that stem from the underlying infrastructure and governance systems with which they are built. These limitations include high costs, lack of monetization and reward, lack of control, and difficulty of reproducibility. In the current work, we explore the potential of decentralized technologies (such as Web3 wallets, peer-to-peer marketplaces, storage and compute, and DAOs) to address some of these issues. We suggest that these infrastructural components can be used in combination in the design and construction of decentralized AI Hubs.

* arXiv admin note: substantial text overlap with arXiv:2210.16651
