Jairo Cugliari

Monitoring geometrical properties of word embeddings for detecting the emergence of new topics

Nov 05, 2021

Abstract:Slow emerging topic detection is a task between event detection, where we aggregate behaviors of different words on short period of time, and language evolution, where we monitor their long term evolution. In this work, we tackle the problem of early detection of slowly emerging new topics. To this end, we gather evidence of weak signals at the word level. We propose to monitor the behavior of words representation in an embedding space and use one of its geometrical properties to characterize the emergence of topics. As evaluation is typically hard for this kind of task, we present a framework for quantitative evaluation. We show positive results that outperform state-of-the-art methods on two public datasets of press and scientific articles.

Transfer Learning for Linear Regression: a Statistical Test of Gain

Feb 18, 2021

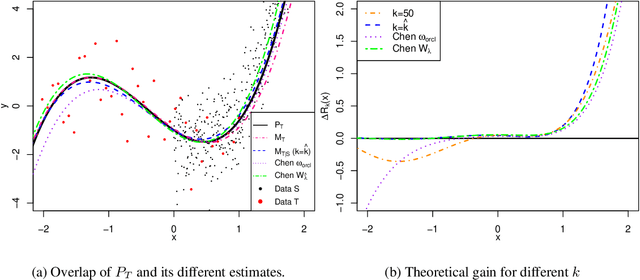

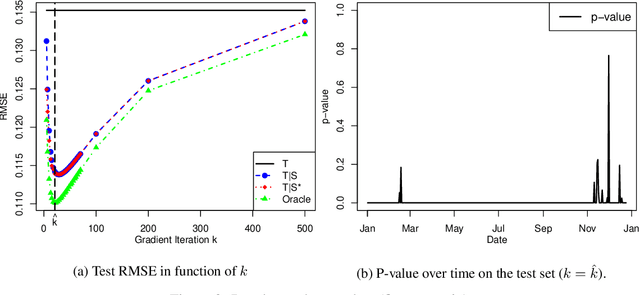

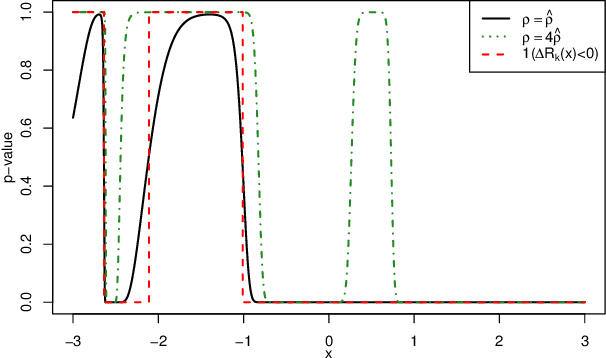

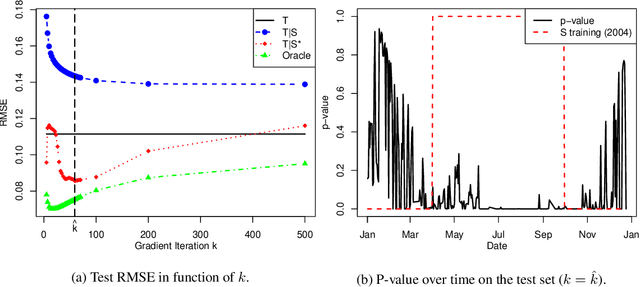

Abstract:Transfer learning, also referred as knowledge transfer, aims at reusing knowledge from a source dataset to a similar target one. While many empirical studies illustrate the benefits of transfer learning, few theoretical results are established especially for regression problems. In this paper a theoretical framework for the problem of parameter transfer for the linear model is proposed. It is shown that the quality of transfer for a new input vector $x$ depends on its representation in an eigenbasis involving the parameters of the problem. Furthermore a statistical test is constructed to predict whether a fine-tuned model has a lower prediction quadratic risk than the base target model for an unobserved sample. Efficiency of the test is illustrated on synthetic data as well as real electricity consumption data.

Textual Data for Time Series Forecasting

Oct 29, 2019

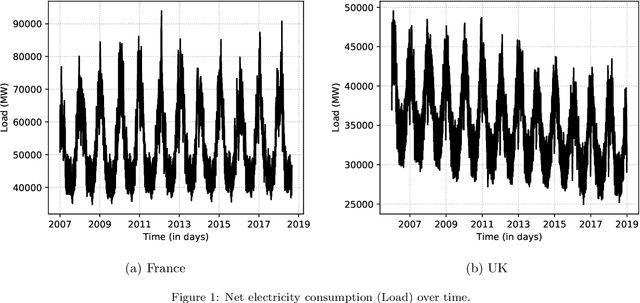

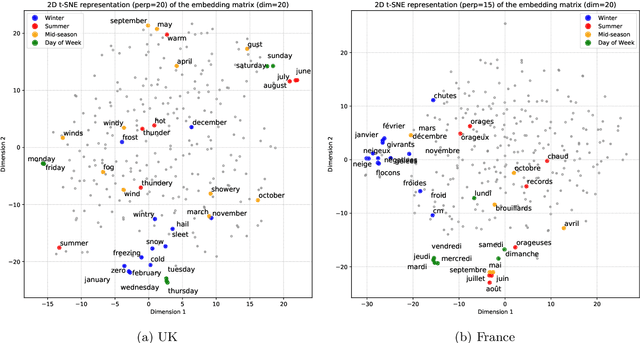

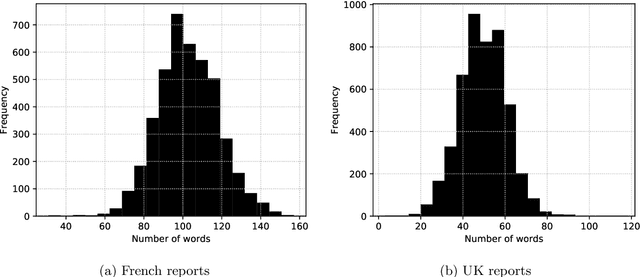

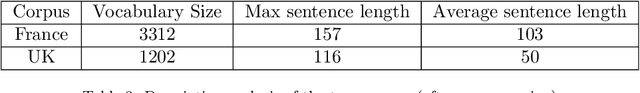

Abstract:While ubiquitous, textual sources of information such as company reports, social media posts, etc. are hardly included in prediction algorithms for time series, despite the relevant information they may contain. In this work, openly accessible daily weather reports from France and the United-Kingdom are leveraged to predict time series of national electricity consumption, average temperature and wind-speed with a single pipeline. Two methods of numerical representation of text are considered, namely traditional Term Frequency - Inverse Document Frequency (TF-IDF) as well as our own neural word embedding. Using exclusively text, we are able to predict the aforementioned time series with sufficient accuracy to be used to replace missing data. Furthermore the proposed word embeddings display geometric properties relating to the behavior of the time series and context similarity between words.

How to detect novelty in textual data streams? A comparative study of existing methods

Sep 11, 2019

Abstract:Since datasets with annotation for novelty at the document and/or word level are not easily available, we present a simulation framework that allows us to create different textual datasets in which we control the way novelty occurs. We also present a benchmark of existing methods for novelty detection in textual data streams. We define a few tasks to solve and compare several state-of-the-art methods. The simulation framework allows us to evaluate their performances according to a set of limited scenarios and test their sensitivity to some parameters. Finally, we experiment with the same methods on different kinds of novelty in the New York Times Annotated Dataset.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge