Jaelle Scheuerman

Proceedings of 1st Workshop on Advancing Artificial Intelligence through Theory of Mind

Apr 28, 2025

Abstract: This volume includes a selection of papers presented at the Workshop on Advancing Artificial Intelligence through Theory of Mind, held at AAAI 2025 in Philadelphia, US, on 3rd March 2025. The purpose of this volume is to provide an open-access, curated anthology for the ToM and AI research community.

Multi-Criteria Comparison as a Method of Advancing Knowledge-Guided Machine Learning

Mar 18, 2024

Abstract: This paper describes a generalizable model evaluation method that can be adapted to evaluate AI/ML models across multiple criteria, including core scientific principles and more practical outcomes. Emerging from prediction competitions in Psychology and Decision Science, the method evaluates a group of candidate models of varying type and structure across multiple scientific, theoretic, and practical criteria. Ordinal rankings of criteria scores are evaluated using voting rules from the field of computational social choice, which allows divergent measures and types of models to be compared in a holistic evaluation. Additional advantages and applications are discussed.
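As a rough illustration of aggregating ordinal rankings with a voting rule, the sketch below applies the Borda count to per-criterion rankings of candidate models. The abstract does not say which voting rules the paper uses, so the Borda rule, the alphabetical tie-break, and the model and criterion names are all assumptions made for this example.

```python
from collections import defaultdict


def borda_rank(criteria_rankings):
    """Aggregate per-criterion rankings (best model first) with the Borda rule.

    Assumption: every criterion ranks the same set of models, with no ties.
    """
    scores = defaultdict(int)
    for ranking in criteria_rankings.values():
        n = len(ranking)
        for position, model in enumerate(ranking):
            # The top-ranked model earns n - 1 points, the last earns 0.
            scores[model] += n - 1 - position
    # Sort by descending score; break ties alphabetically (an arbitrary choice).
    return sorted(scores.items(), key=lambda item: (-item[1], item[0]))


# Hypothetical criteria and models, for illustration only.
rankings = {
    "predictive_accuracy": ["model_a", "model_b", "model_c"],
    "theoretical_grounding": ["model_b", "model_a", "model_c"],
    "runtime_cost": ["model_b", "model_c", "model_a"],
}
result = borda_rank(rankings)
print(result)
```

Because each criterion contributes only a ranking, criteria measured on incompatible scales can be combined without normalizing their raw scores.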

Modeling Voters in Multi-Winner Approval Voting

Dec 04, 2020

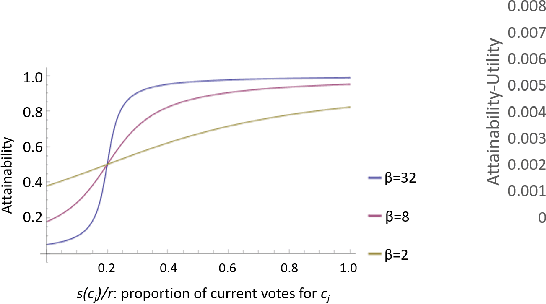

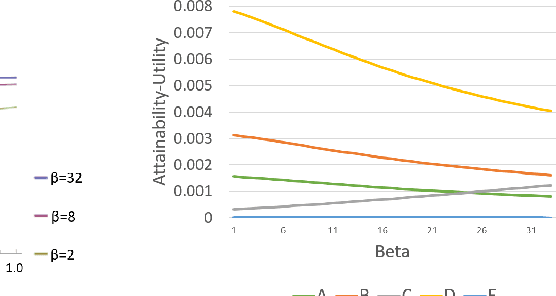

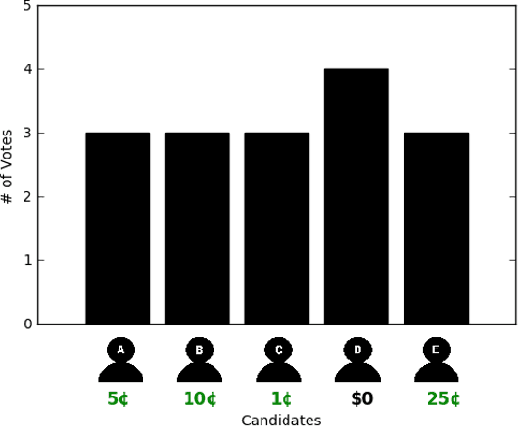

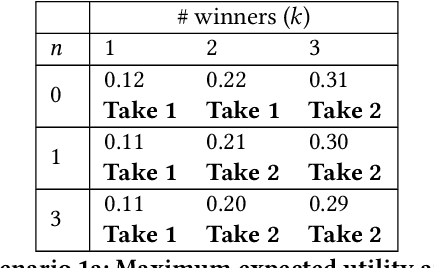

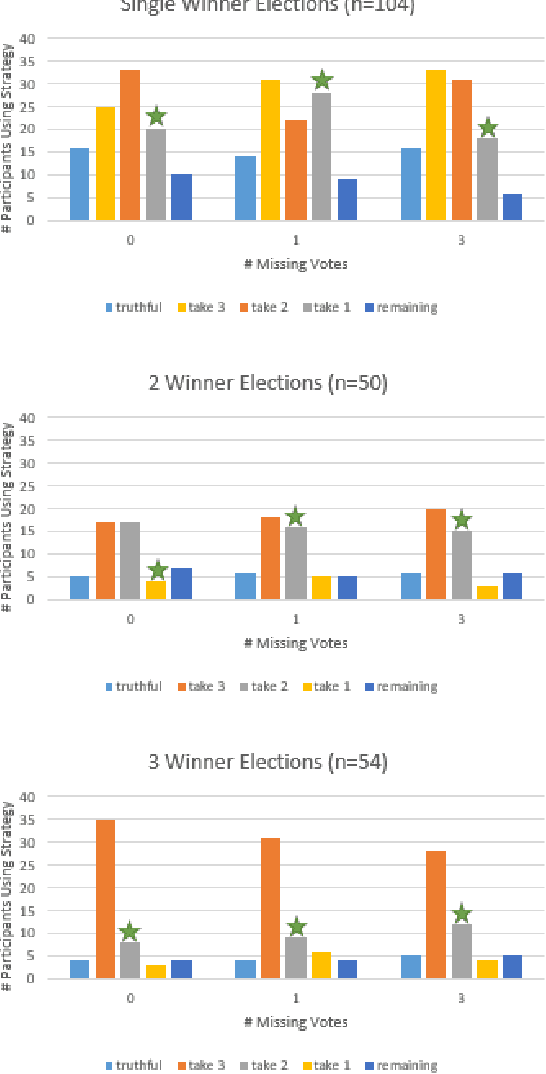

Abstract: In many real-world situations, collective decisions are made using voting and, in scenarios such as committee or board elections, employing voting rules that return multiple winners. In multi-winner approval voting (AV), an agent submits a ballot consisting of approvals for as many candidates as they wish, and winners are chosen by tallying up the votes and choosing the top-$k$ candidates receiving the most approvals. In many scenarios, an agent may manipulate the ballot they submit in order to achieve a better outcome by voting in a way that does not reflect their true preferences. In complex and uncertain situations, agents may use heuristics instead of incurring the additional effort required to compute the manipulation which most favors them. In this paper, we examine voting behavior in single-winner and multi-winner approval voting scenarios with varying degrees of uncertainty using behavioral data obtained from Mechanical Turk. We find that people generally manipulate their vote to obtain a better outcome, but often do not identify the optimal manipulation. There are a number of predictive models of agent behavior in the COMSOC and psychology literature that are based on cognitively plausible heuristic strategies. We show that the existing approaches do not adequately model real-world data. We propose a novel model that takes into account the size of the winning set and human cognitive constraints, and demonstrate that this model is more effective at capturing real-world behaviors in multi-winner approval voting scenarios.
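The tallying step described in this abstract can be sketched in a few lines. This is a minimal illustration, not code from the paper: the function name `approval_winners`, the sample ballots, and the alphabetical tie-breaking rule are assumptions introduced here.

```python
from collections import Counter


def approval_winners(ballots, k):
    """Return the k candidates with the most approvals.

    Each ballot is a collection of approved candidates. Ties are broken
    alphabetically, which is an assumption of this sketch. Candidates that
    receive no approvals never appear in the tally.
    """
    tally = Counter()
    for ballot in ballots:
        # A voter can approve each candidate at most once.
        tally.update(set(ballot))
    ranked = sorted(tally, key=lambda candidate: (-tally[candidate], candidate))
    return ranked[:k]


# Hypothetical ballots from four voters over candidates a-d.
ballots = [{"a", "b"}, {"b", "c"}, {"b"}, {"a", "d"}]
winners = approval_winners(ballots, k=2)
print(winners)
```

A manipulation in this setting amounts to submitting a ballot that differs from the voter's sincere approvals in the hope of shifting which candidates land in the top $k$.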

On Interactive Machine Learning and the Potential of Cognitive Feedback

Mar 23, 2020

Abstract: In order to increase productivity, capability, and data exploitation, numerous defense applications are experiencing an integration of state-of-the-art machine learning and AI into their architectures. Especially for defense applications, having a human analyst in the loop is of high interest due to quality control, accountability, and complex subject matter expertise not readily automated or replicated by AI. However, many applications are suffering from a very slow transition. This may be in large part due to lack of trust, usability, and productivity, especially when adapting to unforeseen classes and changes in mission context. Interactive machine learning is a newly emerging field in which machine learning implementations are trained, optimized, evaluated, and exploited through an intuitive human-computer interface. In this paper, we introduce interactive machine learning and explain its advantages and limitations within the context of defense applications. Furthermore, we address several of the shortcomings of interactive machine learning by discussing how cognitive feedback may inform features, data, and results in the state of the art. We define the three techniques by which cognitive feedback may be employed: self-reporting, implicit cognitive feedback, and modeled cognitive feedback. The advantages and disadvantages of each technique are discussed.

Heuristic Strategies in Uncertain Approval Voting Environments

Nov 29, 2019

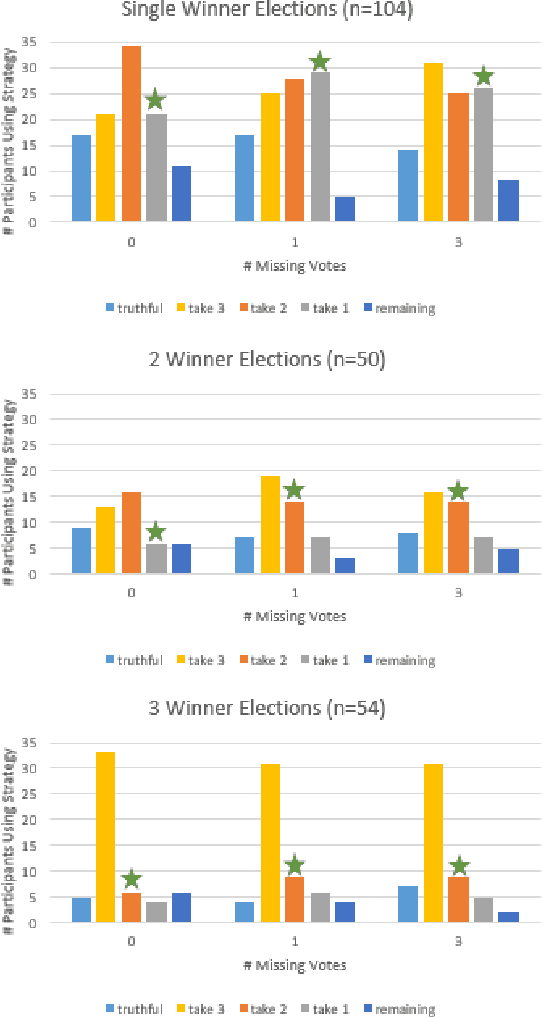

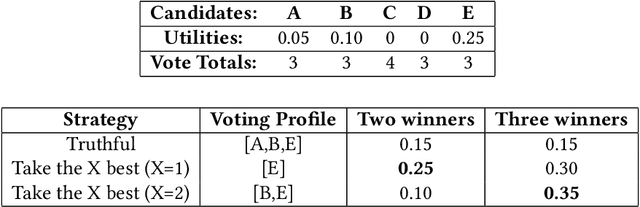

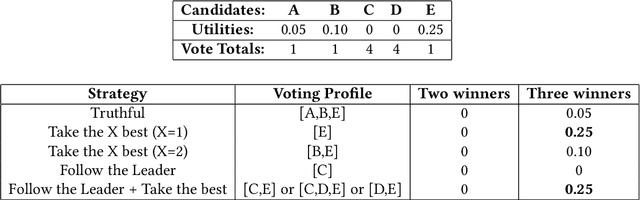

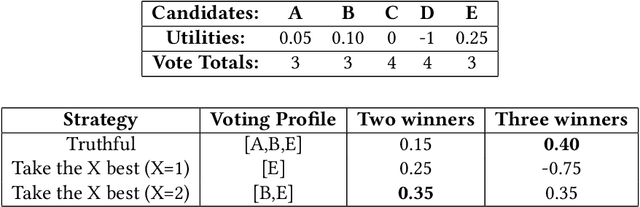

Abstract: In many collective decision-making situations, agents vote to choose an alternative that best represents the preferences of the group. Agents may manipulate the vote to achieve a better outcome by voting in a way that does not reflect their true preferences. In real-world voting scenarios, people often do not have complete information about other voter preferences and it can be computationally complex to identify a strategy that will maximize their expected utility. In such situations, it is often assumed that voters will vote truthfully rather than expending the effort to strategize. However, being truthful is just one possible heuristic that may be used. In this paper, we examine the effectiveness of heuristics in single-winner and multi-winner approval voting scenarios with missing votes. In particular, we look at heuristics where a voter ignores information about other voting profiles and makes their decisions based solely on how much they like each candidate. In a behavioral experiment, we show that people vote truthfully in some situations and prioritize high-utility candidates in others. We examine when these behaviors maximize expected utility and show how the structure of the voting environment affects both how well each heuristic performs and how humans employ these heuristics.

Heuristics in Multi-Winner Approval Voting

May 30, 2019

Abstract: In many real-world situations, collective decisions are made using voting. Moreover, scenarios such as committee or board elections require voting rules that return multiple winners. In multi-winner approval voting (AV), an agent may vote for as many candidates as they wish. Winners are chosen by tallying up the votes and choosing the top-$k$ candidates receiving the most votes. An agent may manipulate the vote to achieve a better outcome by voting in a way that does not reflect their true preferences. In complex and uncertain situations, agents may use heuristics to strategize, instead of incurring the additional effort required to compute the manipulation which most favors them. In this paper, we examine voting behavior in multi-winner approval voting scenarios with complete information. We show that people generally manipulate their vote to obtain a better outcome, but often do not identify the optimal manipulation. Instead, voters tend to prioritize the candidates with the highest utilities. Using simulations, we demonstrate the effectiveness of these heuristics in situations where agents only have access to partial information.
