Jack Felag

myAURA: Personalized health library for epilepsy management via knowledge graph sparsification and visualization

May 08, 2024

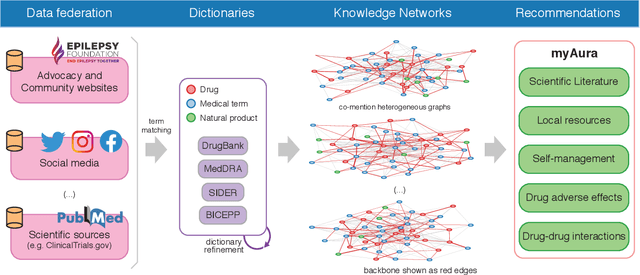

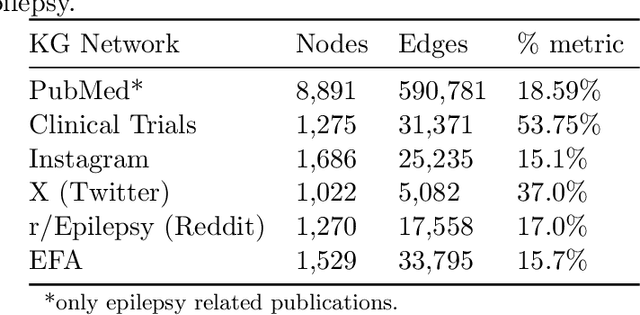

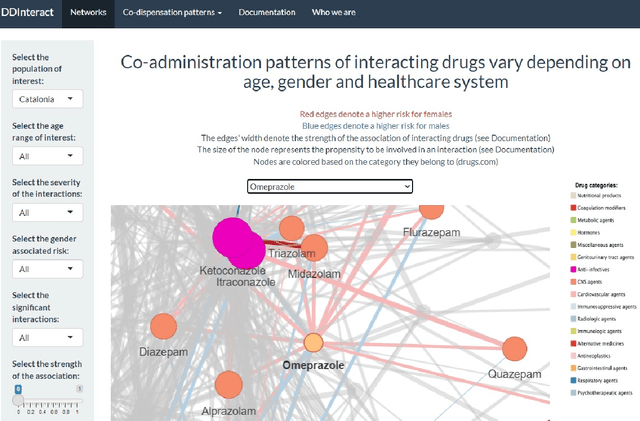

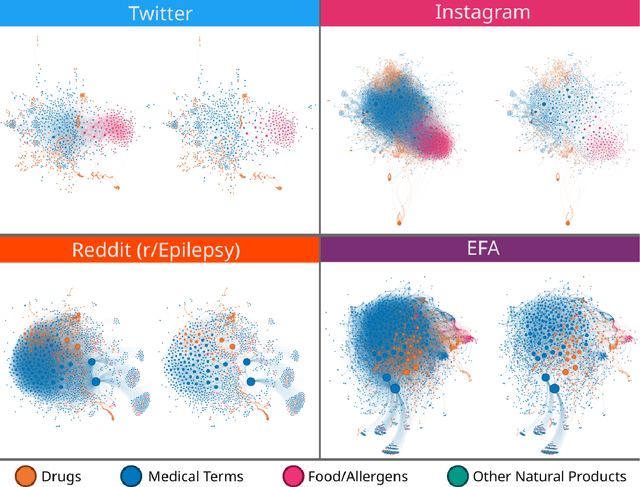

Abstract: Objective: We report the development of the patient-centered myAURA application and suite of methods designed to aid epilepsy patients, caregivers, and researchers in making decisions about care and self-management. Materials and Methods: myAURA rests on the federation of an unprecedented collection of heterogeneous data resources relevant to epilepsy, such as biomedical databases, social media, and electronic health records. A generalizable, open-source methodology was developed to compute a multi-layer knowledge graph linking all this heterogeneous data via the terms of a human-centered biomedical dictionary. Results: The power of the approach is first exemplified in the study of the drug-drug interaction phenomenon. Furthermore, we employ a novel network sparsification methodology using the metric backbone of weighted graphs, which reveals the most important edges for inference, recommendation, and visualization, such as pharmacology factors patients discuss on social media. The network sparsification approach also allows us to extract focused digital cohorts from social media whose discourse is more relevant to epilepsy or other biomedical problems. Finally, we present our patient-centered design and pilot-testing of myAURA, including its user interface, based on focus groups and other stakeholder input. Discussion: The ability to search and explore myAURA's heterogeneous data sources via a sparsified multi-layer knowledge graph, and the combination of those layers in a single map, are useful features for integrating relevant information for epilepsy. Conclusion: Our stakeholder-driven, scalable approach to integrating traditional and non-traditional data sources enables biomedical discovery and data-powered patient self-management in epilepsy, and is generalizable to other chronic conditions.
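
The metric backbone idea referenced in this abstract can be illustrated with a minimal sketch. It assumes edge weights are already distances (how myAURA converts its proximity weights into distances is not described here) and uses plain Dijkstra shortest paths with summed edge lengths. The function name `metric_backbone`, the dict-of-edges input format, and the `tol` parameter are hypothetical illustrations, not myAURA's code.

```python
import heapq
from collections import defaultdict


def metric_backbone(edges, tol=1e-12):
    """Keep only the edges of an undirected distance graph that are metric.

    An edge (u, v) is metric when its direct distance equals the shortest-path
    distance between u and v; otherwise some indirect path is shorter and the
    edge is redundant for shortest-path inference.

    edges: dict mapping (u, v) tuples to positive distances (hypothetical format).
    """
    # Build a symmetric adjacency map from the edge list.
    adj = defaultdict(dict)
    for (u, v), w in edges.items():
        adj[u][v] = w
        adj[v][u] = w

    def shortest_paths(src):
        # Standard Dijkstra: shortest distances from src to every reachable node.
        dist = {src: 0.0}
        heap = [(0.0, src)]
        while heap:
            d, node = heapq.heappop(heap)
            if d > dist[node]:
                continue  # stale heap entry
            for nbr, w in adj[node].items():
                nd = d + w
                if nd < dist.get(nbr, float("inf")):
                    dist[nbr] = nd
                    heapq.heappush(heap, (nd, nbr))
        return dist

    cache = {}  # source node -> shortest-path distances, computed once per source
    backbone = {}
    for (u, v), w in edges.items():
        if u not in cache:
            cache[u] = shortest_paths(u)
        # The direct edge guarantees cache[u][v] <= w; equality means metric.
        if w - cache[u][v] <= tol:
            backbone[(u, v)] = w
    return backbone


# Triangle where a-b-c (length 2) is shorter than the direct a-c edge (3).
example = {("a", "b"): 1.0, ("b", "c"): 1.0, ("a", "c"): 3.0}
print(metric_backbone(example))  # prints {('a', 'b'): 1.0, ('b', 'c'): 1.0}
```

In this sketch the a-c edge is dropped because an indirect path is shorter, while edges that already lie on a shortest path survive. Node labels should be mutually comparable (e.g. all strings), since heap ties fall back to comparing node labels.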

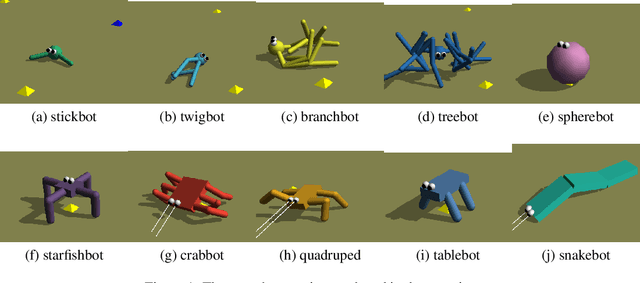

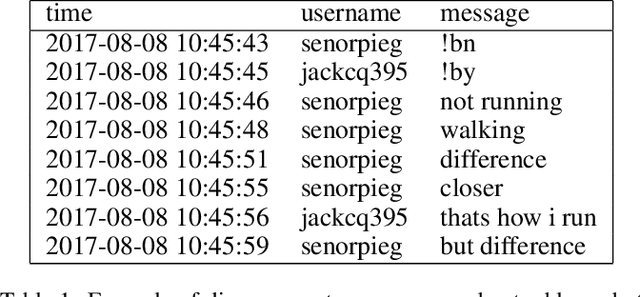

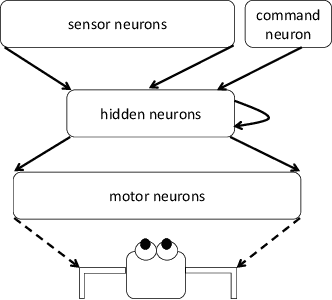

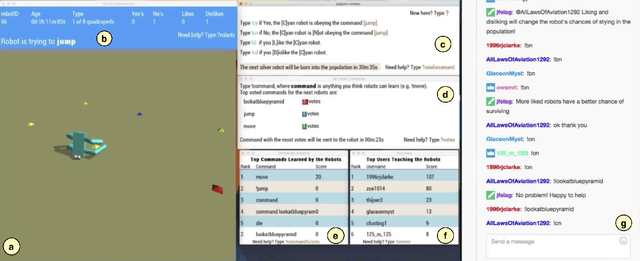

Morphology dictates a robot's ability to ground crowd-proposed language

Dec 20, 2017

Abstract: As more robots act in physical proximity to people, it is essential to ensure they make decisions and execute actions that align with human values. To do so, robots need to understand the true intentions behind human-issued commands. In this paper, we define a safe robot as one that receives a natural-language command from humans, considers an action in response to that command, and accurately predicts how humans will judge that action if it is executed in reality. Our contribution is two-fold: First, we introduce a web platform for human users to propose commands to simulated robots. The robots receive commands and act based on those proposed commands, and then the users provide positive and/or negative reinforcement. Next, we train a critic for each robot to predict the crowd's responses to one of the crowd-proposed commands. Second, we show that the morphology of a robot plays a role in the way it grounds language: the critics show that two of the robots used in the experiment achieve a lower prediction error than the others. Thus, those two robots are safer, according to our definition, since they ground the proposed command more accurately.