Morphology dictates a robot's ability to ground crowd-proposed language


Dec 20, 2017

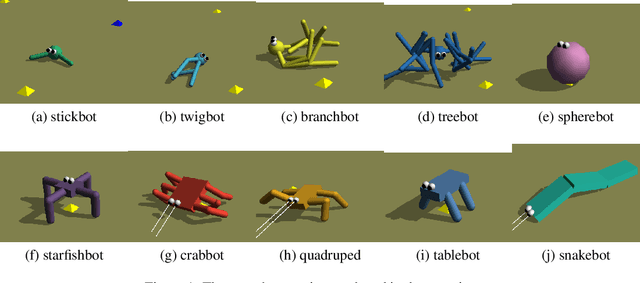

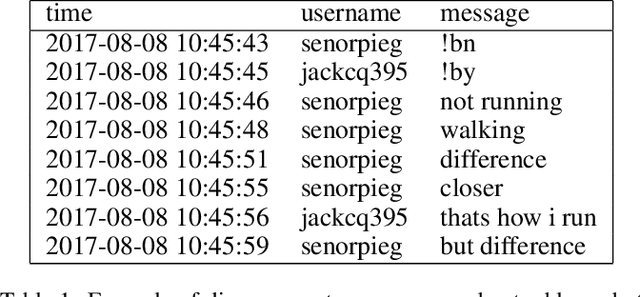

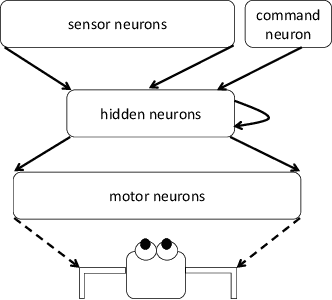

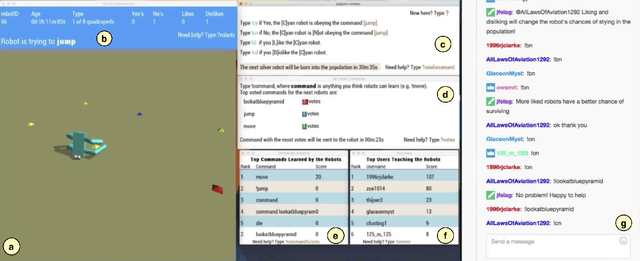

As more robots act in physical proximity to people, it is essential to ensure that they make decisions and execute actions that align with human values. To do so, robots need to understand the true intentions behind human-issued commands. In this paper, we define a safe robot as one that receives a natural-language command from humans, considers an action in response to that command, and accurately predicts how humans will judge that action if it is executed in reality. Our contribution is twofold. First, we introduce a web platform on which human users propose commands to simulated robots. The robots act on those commands, and the users then provide positive and/or negative reinforcement. We then train a critic for each robot to predict the crowd's responses to one of the crowd-proposed commands. Second, we show that a robot's morphology plays a role in how it grounds language: the critics for two of the robots used in the experiment achieve a lower prediction error than the critics for the others. By our definition, those two robots are therefore safer, since they ground the proposed command more accurately.
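The comparison described above, ranking robots by how well their critics predict crowd feedback, can be sketched roughly as follows. This is an illustrative sketch only, not the paper's implementation: the robot names, the numeric encoding of feedback (+1 for positive, -1 for negative), the sample values, and the choice of mean squared error as the error measure are all assumptions made for the example.

```python
from statistics import mean


def prediction_error(predicted, observed):
    """Mean squared error between a critic's predictions and crowd feedback.

    MSE is an assumed error measure; the abstract only says "prediction error".
    """
    if len(predicted) != len(observed):
        raise ValueError("predicted and observed must have the same length")
    return mean((p - o) ** 2 for p, o in zip(predicted, observed))


def rank_robots_by_error(results):
    """Return (robot, error) pairs sorted from lowest to highest error."""
    errors = {
        name: prediction_error(predicted, observed)
        for name, (predicted, observed) in results.items()
    }
    return sorted(errors.items(), key=lambda item: item[1])


# Hypothetical data: each robot maps to (critic predictions, crowd feedback),
# with feedback encoded as +1 (positive) or -1 (negative).
results = {
    "robot_a": ([0.8, -0.6, 0.9], [1, -1, 1]),
    "robot_b": ([0.1, 0.2, -0.3], [1, -1, 1]),
}

ranking = rank_robots_by_error(results)
for name, error in ranking:
    print(f"{name}: {error:.3f}")
```

Under the abstract's definition, the robot whose critic has the lowest error would count as the safest, because its critic best anticipates how people judge its actions.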
