Ivon Arroyo

Understanding Users' Privacy Reasoning and Behaviors During Chatbot Use to Support Meaningful Agency in Privacy

Jan 26, 2026

Abstract: Conversational agents (CAs) (e.g., chatbots) are increasingly used in settings where users disclose sensitive information, raising significant privacy concerns. Because privacy judgments are highly contextual, supporting users to engage in privacy-protective actions during chatbot interactions is essential. However, enabling meaningful engagement requires a deeper understanding of how users currently reason about and manage sensitive information during realistic chatbot use scenarios. To investigate this, we qualitatively examined computer science (undergraduate and master's) students' in-the-moment disclosure and protection behaviors, as well as the reasoning underlying these behaviors, across a range of realistic chatbot tasks. Participants used a simulated ChatGPT interface with and without a privacy notice panel that intercepts message submissions, highlights potentially sensitive information, and offers privacy-protective actions. The panel supports anonymization through retracting, faking, and generalizing, and surfaces two of ChatGPT's built-in privacy controls to improve their discoverability. Drawing on interaction logs, think-alouds, and survey responses, we analyzed how the panel fostered privacy awareness, encouraged protective actions, and supported context-specific reasoning about what information to protect and how. We further discuss design opportunities for tools that provide users greater and more meaningful agency in protecting sensitive information during CA interactions.

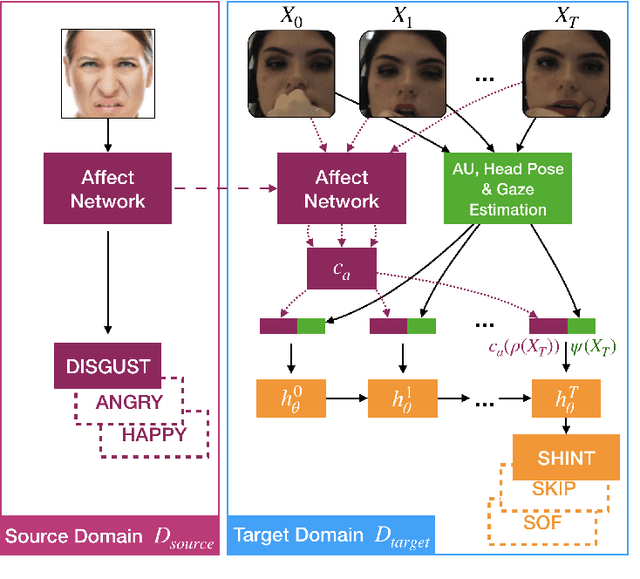

Leveraging Affect Transfer Learning for Behavior Prediction in an Intelligent Tutoring System

Feb 12, 2020

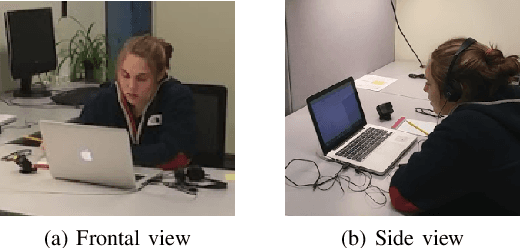

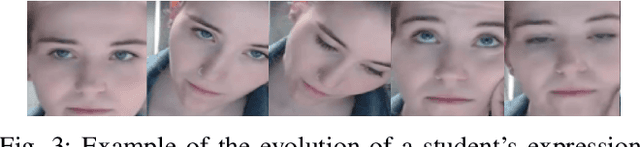

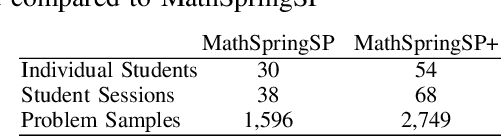

Abstract: In the context of building an intelligent tutoring system (ITS), which improves student learning outcomes by intervention, we set out to improve prediction of student problem outcome. In essence, we want to predict the outcome of a student answering a problem in an ITS from a video feed by analyzing their face and gestures. For this, we present a novel transfer learning facial affect representation and a user-personalized training scheme that unlocks the potential of this representation. We model the temporal structure of video sequences of students solving math problems using a recurrent neural network architecture. Additionally, we extend the largest dataset of student interactions with an intelligent online math tutor by a factor of two. Our final model, coined ATL-BP (Affect Transfer Learning for Behavior Prediction), achieves an increase in mean F-score over state-of-the-art of 45% on this new dataset in the general case and 50% in a more challenging leave-users-out experimental setting when we use a user-personalized training scheme.
