Ivanna Kramer

SLD: Segmentation-Based Landmark Detection for Spinal Ligaments

Jan 23, 2026

Abstract: In biomechanical modeling, the representation of ligament attachments is crucial for a realistic simulation of the forces acting between the vertebrae. These forces are typically modeled as vectors connecting ligament landmarks on adjacent vertebrae, making precise identification of these landmarks a key requirement for constructing reliable spine models. Existing automated detection methods are either limited to specific spinal regions or lack sufficient accuracy. This work presents a novel approach for detecting spinal ligament landmarks, which first performs shape-based segmentation of 3D vertebrae and subsequently applies domain-specific rules to identify different types of attachment points. The proposed method outperforms existing approaches by achieving high accuracy and demonstrating strong generalization across all spinal regions. Validation on two independent spinal datasets from multiple patients yielded a mean absolute error (MAE) of 0.7 mm and a root mean square error (RMSE) of 1.1 mm.
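The two error metrics reported above can be illustrated with a short sketch. It assumes that the per-landmark error is the Euclidean distance in millimetres between a detected landmark and its reference position; the abstract does not state the exact error definition, and the function names are hypothetical.

```python
import math

def landmark_errors(predicted, reference):
    """Euclidean distance between each predicted and reference 3D landmark.

    Assumption: both lists hold (x, y, z) tuples in mm, in matching order.
    """
    if len(predicted) != len(reference):
        raise ValueError("predicted and reference must have the same length")
    return [math.dist(p, r) for p, r in zip(predicted, reference)]

def mean_absolute_error(errors):
    """Average magnitude of the per-landmark errors."""
    return sum(abs(e) for e in errors) / len(errors)

def root_mean_square_error(errors):
    """Square root of the mean squared error; penalizes outliers more than MAE."""
    return math.sqrt(sum(e * e for e in errors) / len(errors))

# Toy data (not from the paper): one landmark is off by 5 mm, one is exact.
predicted = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
reference = [(3.0, 4.0, 0.0), (1.0, 0.0, 0.0)]
errors = landmark_errors(predicted, reference)
mae = mean_absolute_error(errors)
rmse = root_mean_square_error(errors)
```

Because RMSE squares each error before averaging, it is never smaller than MAE on the same data, which is consistent with the reported 1.1 mm versus 0.7 mm.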

Spinal ligaments detection on vertebrae meshes using registration and 3D edge detection

Dec 06, 2024

Abstract: Spinal ligaments are crucial elements in complex biomechanical simulation models, as they transfer forces to the bony structure, guide and limit movements, and stabilize the spine. The spinal ligaments comprise seven major groups that are responsible for maintaining functional interrelationships among the other spinal components. Determining the ligament origin and insertion points on 3D vertebra models is an essential step in building accurate and complex biomechanical spine models. In our paper, we propose a pipeline that detects 66 spinal ligament attachment points using a step-wise approach. Our method incorporates a fast vertebra registration that strategically extracts only 15 3D points to compute the transformation, and edge detection for a precise projection of the registered ligaments onto any given patient-specific vertebra model. Our method shows high accuracy, particularly in identifying landmarks on the anterior part of the vertebra, with an average distance of 2.24 mm for anterior longitudinal ligament landmarks and 1.26 mm for posterior longitudinal ligament landmarks. The landmark detection requires approximately 3.0 seconds per vertebra, providing a substantial improvement over existing methods. Clinical relevance: using the proposed method, the landmarks that represent origin and insertion points for forces in biomechanical spine models can be localized automatically in an accurate and time-efficient manner.
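To make the projection step more concrete, the sketch below snaps registered landmarks onto a patient-specific mesh by picking the nearest mesh vertex, then reports the average distance to reference landmarks. Nearest-vertex snapping is a deliberately simple stand-in and is not the edge-detection projection described in the abstract; all names and the toy coordinates are hypothetical.

```python
import math

def snap_to_mesh(landmarks, vertices):
    """Move each landmark to its nearest mesh vertex.

    Stand-in for the paper's edge-detection projection (an assumption,
    chosen only because it is the simplest possible projection).
    """
    return [min(vertices, key=lambda v: math.dist(v, lm)) for lm in landmarks]

def mean_distance(points_a, points_b):
    """Average Euclidean distance between paired 3D points."""
    return sum(math.dist(a, b) for a, b in zip(points_a, points_b)) / len(points_a)

# Toy mesh with three vertices and two registered landmarks (values in mm).
vertices = [(0.0, 0.0, 0.0), (10.0, 0.0, 0.0), (0.0, 10.0, 0.0)]
registered = [(1.0, 1.0, 0.0), (9.0, 0.0, 1.0)]
snapped = snap_to_mesh(registered, vertices)

# Compare against hypothetical reference annotations.
reference = [(0.0, 0.0, 0.0), (10.0, 0.0, 2.0)]
average_error = mean_distance(snapped, reference)
```

A brute-force search like this is linear in the number of vertices per landmark; a spatial index would be the usual choice for large meshes.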

Reconstruction of 3D lumbar spine models from incomplete segmentations using landmark detection

Dec 06, 2024

Abstract: Patient-specific 3D spine models serve as a foundation for spinal treatment and surgery planning as well as analysis of loading conditions in biomechanical and biomedical research. Despite advancements in imaging technologies, the reconstruction of complete 3D spine models often faces challenges due to limitations in imaging modalities such as planar X-ray and to certain spinal structures, such as the spinal or transverse processes, missing from volumetric medical images and the resulting segmentations. In this study, we present a novel, accurate, and time-efficient method to reconstruct complete 3D lumbar spine models from incomplete 3D vertebral bodies obtained from segmented magnetic resonance images (MRI). In our method, we use an affine transformation to align artificial vertebra models with patient-specific incomplete vertebrae. The transformation matrix is derived from vertebra landmarks, which are automatically detected on the vertebra endplates. The results of our evaluation demonstrate the high accuracy of the performed registration, achieving an average point-to-model distance of 1.95 mm. Additionally, in assessing the morphological properties of the vertebrae and intervertebral characteristics, our method demonstrated a mean absolute error (MAE) of 3.4° in the angles of functional spine units (FSUs), emphasizing its effectiveness in maintaining important spinal features throughout the transformation process of individual vertebrae. Our method achieves the registration of the entire lumbar spine, spanning segments L1 to L5, in just 0.14 seconds, showcasing its time-efficiency. Clinical relevance: the fast and accurate reconstruction of spinal models from incomplete input data such as segmentations provides a foundation for many applications in spine diagnostics, treatment planning, and the development of spinal healthcare solutions.
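Deriving an affine transformation from matched landmarks can be sketched as a least-squares fit. The example below assumes that corresponding landmark pairs on the artificial and patient-specific vertebra are already available and that they are not coplanar; it solves the normal equations in plain Python. The function names and toy points are hypothetical, and this is not necessarily how the authors compute their matrix.

```python
def solve_linear(a, b):
    """Solve a @ x = b by Gauss-Jordan elimination with partial pivoting.

    a is n x n and b is n x m, both given as lists of lists.
    """
    n = len(a)
    m = [row_a[:] + row_b[:] for row_a, row_b in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("degenerate landmarks (for example, coplanar)")
        m[col], m[pivot] = m[pivot], m[col]
        pivot_value = m[col][col]
        m[col] = [v / pivot_value for v in m[col]]
        for r in range(n):
            if r != col:
                factor = m[r][col]
                m[r] = [vr - factor * vc for vr, vc in zip(m[r], m[col])]
    return [row[n:] for row in m]

def fit_affine(source, target):
    """Least-squares 3 x 4 affine matrix [A | t] mapping source to target."""
    x = [list(p) + [1.0] for p in source]  # homogeneous coordinates, n x 4
    xtx = [[sum(r[i] * r[j] for r in x) for j in range(4)] for i in range(4)]
    xtq = [[sum(r[i] * q[k] for r, q in zip(x, target)) for k in range(3)]
           for i in range(4)]
    b = solve_linear(xtx, xtq)  # 4 x 3 solution of the normal equations
    return [[b[i][k] for i in range(4)] for k in range(3)]  # transpose to 3 x 4

def apply_affine(matrix, point):
    """Apply a 3 x 4 affine matrix to a single 3D point."""
    h = list(point) + [1.0]
    return tuple(sum(m * v for m, v in zip(row, h)) for row in matrix)

# Toy landmarks: the target is the source scaled by 2 along x and shifted by (1, 2, 3).
source = [(0, 0, 0), (1, 0, 0), (0, 1, 0), (0, 0, 1), (1, 1, 1)]
target = [(2 * x + 1, y + 2, z + 3) for x, y, z in source]
matrix = fit_affine(source, target)
moved = apply_affine(matrix, (2, 3, 4))
```

At least four non-coplanar landmark pairs are needed for a unique 3D affine fit; additional pairs are averaged in the least-squares sense.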

3D Vertebrae Measurements: Assessing Vertebral Dimensions in Human Spine Mesh Models Using Local Anatomical Vertebral Axes

Feb 02, 2024

Abstract: Vertebral morphological measurements are important across various disciplines, including spinal biomechanics and clinical applications, pre- and post-operatively. These measurements also play a crucial role in anthropological longitudinal studies, where spinal metrics are repeatedly documented over extended periods. Traditionally, such measurements have been manually conducted, a process that is time-consuming. In this study, we introduce a novel, fully automated method for measuring vertebral morphology using 3D meshes of lumbar and thoracic spine models. Our experimental results demonstrate the method's capability to accurately measure low-resolution patient-specific vertebral meshes with mean absolute error (MAE) of 1.09 mm and those derived from artificially created lumbar spines, where the average MAE value was 0.7 mm. Our qualitative analysis indicates that measurements obtained using our method on 3D spine models can be accurately reprojected back onto the original medical images if these images are available.

HealthWalk: Promoting Health and Mobility through Sensor-Based Rollator Walker Assistance

Oct 11, 2023

Abstract: Rollator walkers allow people with physical limitations to increase their mobility and give them the confidence and independence to participate in society for longer. However, rollator walker users often have poor posture, leading to further health problems and, in the worst case, falls. Integrating sensors into rollator walker designs can help to address this problem and results in a platform that allows several other interesting use cases. This paper briefly reviews existing systems and the current research directions and challenges in this field. We also present our early HealthWalk rollator walker prototype for data collection with older people, patients with rheumatism, multiple sclerosis, or Parkinson's disease, and individuals with visual impairments.

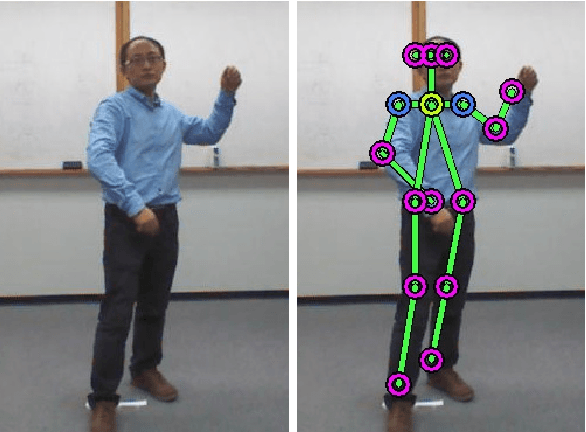

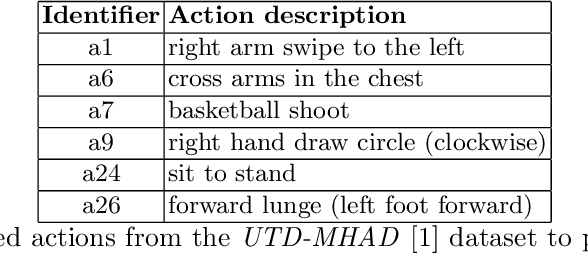

Gesture Recognition in RGB Videos Using Human Body Keypoints and Dynamic Time Warping

Jun 25, 2019

Abstract: Gesture recognition opens up new ways for humans to intuitively interact with machines. Especially for service robots, gestures can be a valuable addition to the means of communication to, for example, draw the robot's attention to someone or something. Extracting a gesture from video data and classifying it is a challenging task, and a variety of approaches have been proposed throughout the years. This paper presents a method for gesture recognition in RGB videos using OpenPose to extract the pose of a person and Dynamic Time Warping (DTW) in conjunction with One-Nearest-Neighbor (1NN) for time-series classification. The main features of this approach are the independence of any specific hardware and high flexibility, because a new gesture can be added to the classifier by adding only a few examples of it. We utilize the robustness of the Deep Learning-based OpenPose framework while avoiding the data-intensive task of training a neural network ourselves. We demonstrate the classification performance of our method using a public dataset.
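The DTW plus 1NN classification idea can be sketched in a few lines. The example assumes each gesture is a sequence of per-frame feature vectors (for instance keypoint coordinates already extracted by a pose estimator) and uses the textbook DTW recurrence with a Euclidean frame distance. The labels, sequences, and function names are hypothetical, and the paper's own feature preprocessing is not reproduced here.

```python
import math

def dtw_distance(a, b):
    """Dynamic Time Warping distance between two sequences of feature vectors."""
    n, m = len(a), len(b)
    inf = float("inf")
    # cost[i][j] = best cumulative cost aligning a[:i] with b[:j]
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            frame_cost = math.dist(a[i - 1], b[j - 1])
            cost[i][j] = frame_cost + min(
                cost[i - 1][j],      # a advances, b repeats a frame
                cost[i][j - 1],      # b advances, a repeats a frame
                cost[i - 1][j - 1],  # both advance
            )
    return cost[n][m]

def classify_1nn(query, examples):
    """Return the label of the stored example closest to the query under DTW.

    examples is a list of (label, sequence) pairs.
    """
    label, _ = min(examples, key=lambda ex: dtw_distance(query, ex[1]))
    return label

# Toy one-dimensional "keypoint" trajectories standing in for real pose data.
examples = [
    ("wave", [(0,), (1,), (0,), (1,), (0,)]),
    ("point", [(0,), (1,), (2,), (3,)]),
]
# A slower version of the "point" trajectory: DTW absorbs the repeated frames.
query = [(0,), (0,), (1,), (2,), (2,), (3,)]
predicted = classify_1nn(query, examples)
```

Adding a new gesture only requires appending labelled sequences to `examples`, which mirrors the flexibility the abstract highlights: no retraining step is involved.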
