Ivan Shanin

Exploring Transformer-Based Music Overpainting for Jazz Piano Variations

Dec 05, 2024

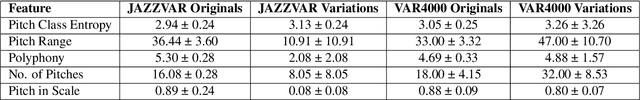

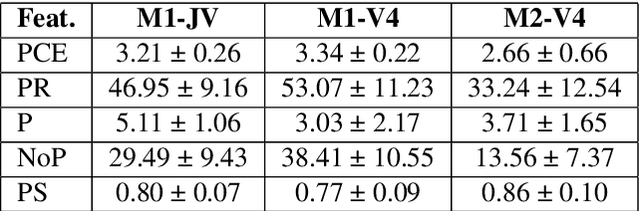

Abstract: This paper explores transformer-based models for music overpainting, focusing on jazz piano variations. Music overpainting generates new variations while preserving the melodic and harmonic structure of the input. Existing approaches are limited by small datasets, restricting scalability and diversity. We introduce VAR4000, a subset of a larger dataset for jazz piano performances, consisting of 4,352 training pairs. Using a semi-automatic pipeline, we evaluate two transformer configurations on VAR4000, comparing their performance with the smaller JAZZVAR dataset. Preliminary results show promising improvements in generalisation and performance with the larger dataset configuration, highlighting the potential of transformer models to scale effectively for music overpainting on larger and more diverse datasets.

Semi-supervised cross-lingual speech emotion recognition

Jul 14, 2022

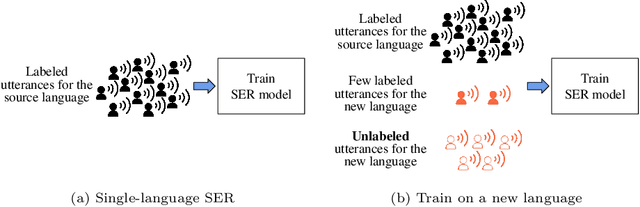

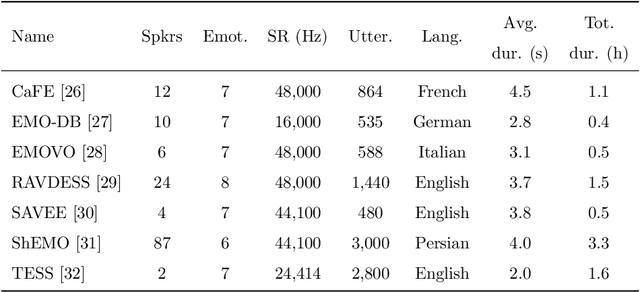

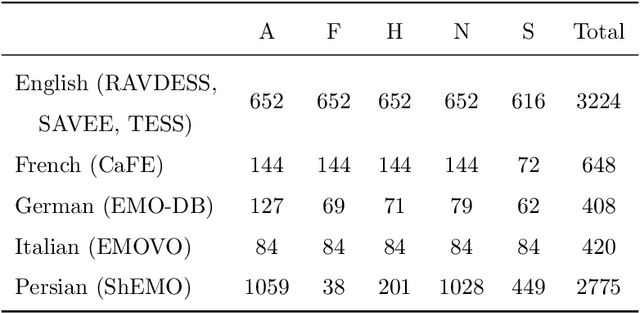

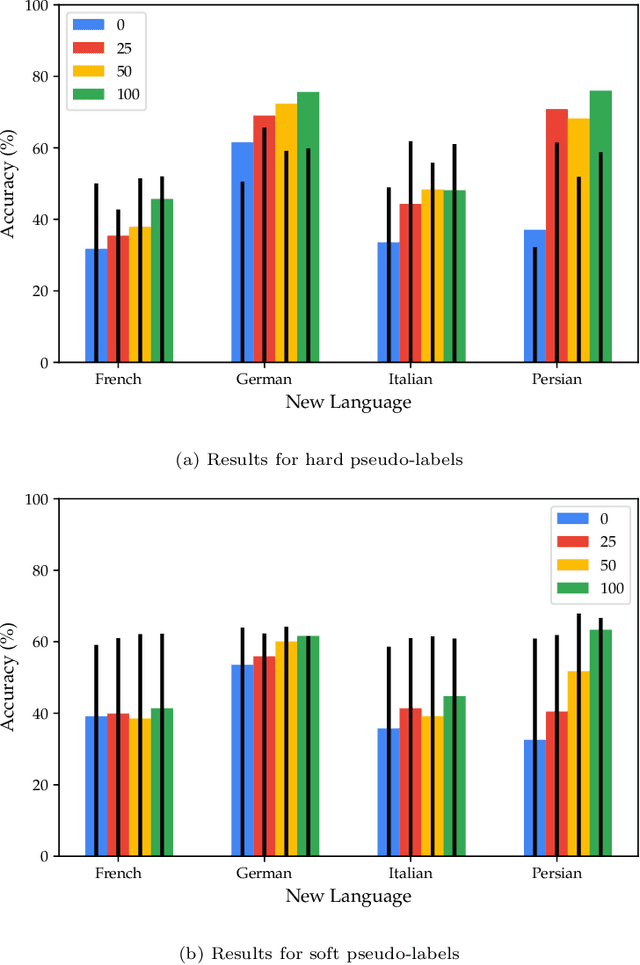

Abstract: Speech emotion recognition (SER) on a single language has achieved remarkable results through deep learning approaches over the last decade. However, cross-lingual SER remains a challenge in real-world applications due to (i) a large difference between the source and target domain distributions, and (ii) the availability of few labeled and many unlabeled utterances for the new language. Taking these aspects into account, we propose a Semi-Supervised Learning (SSL) method for cross-lingual emotion recognition when a few labels from the new language are available. Based on a Convolutional Neural Network (CNN), our method adapts to a new language by exploiting a pseudo-labeling strategy for the unlabeled utterances. In particular, we investigate the use of both hard and soft pseudo-labels. We thoroughly evaluate the performance of the method in a speaker-independent setup on both the source and the new language and show its robustness across five languages belonging to different linguistic strains.
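The distinction between hard and soft pseudo-labels mentioned in the abstract can be illustrated with a minimal sketch. This is not the authors' implementation: the function names, the confidence `threshold`, and the sharpening `temperature` are all assumptions chosen for illustration, and the abstract does not say whether the method uses either of them.

```python
import math


def softmax(logits):
    """Convert raw classifier scores into a probability distribution."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def hard_pseudo_label(probs, threshold=0.9):
    """Hypothetical hard labeling: a one-hot target for the most likely class.

    Returns None when the model is not confident enough, so the
    utterance can be skipped. The threshold is an assumed parameter.
    """
    best = max(range(len(probs)), key=probs.__getitem__)
    if probs[best] < threshold:
        return None
    return [1.0 if i == best else 0.0 for i in range(len(probs))]


def soft_pseudo_label(probs, temperature=0.5):
    """Hypothetical soft labeling: keep the full distribution as the target.

    A temperature below 1 sharpens the distribution; a temperature of 1
    leaves it unchanged. The temperature is an assumed parameter.
    """
    powered = [p ** (1.0 / temperature) for p in probs]
    total = sum(powered)
    return [p / total for p in powered]


# Example: model scores for one unlabeled utterance over three emotion classes.
probs = softmax([2.0, 0.5, 0.1])
hard = hard_pseudo_label(probs, threshold=0.5)
soft = soft_pseudo_label(probs)
```

A hard label commits to a single emotion class, while a soft label retains the model's uncertainty across classes, which is the trade-off the abstract says is investigated.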
