Ishaan Bhat

Effect of latent space distribution on the segmentation of images with multiple annotations

Apr 26, 2023

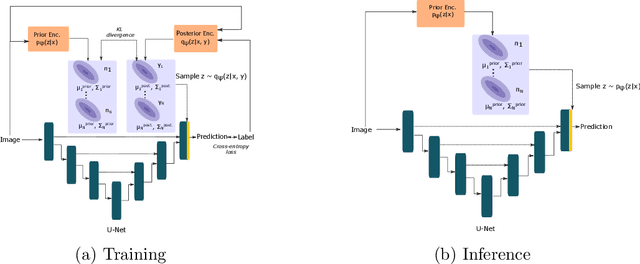

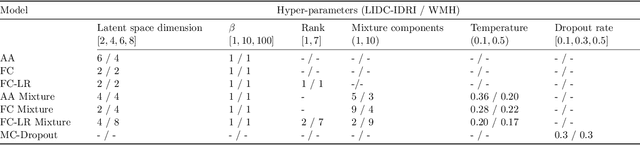

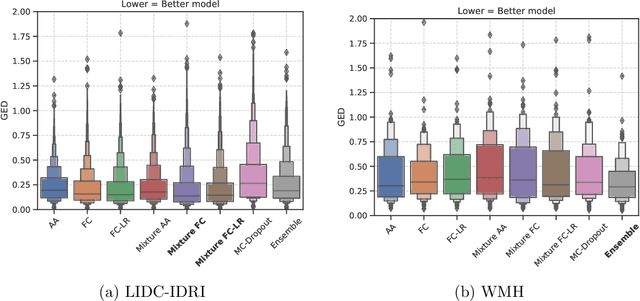

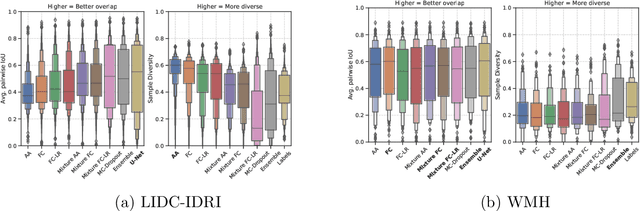

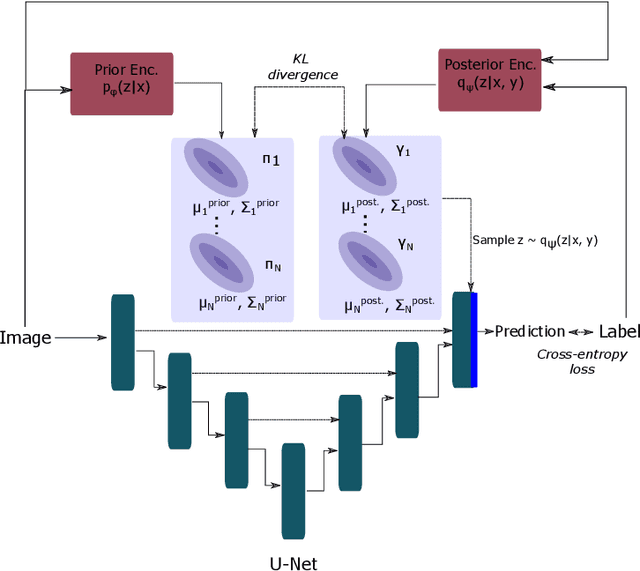

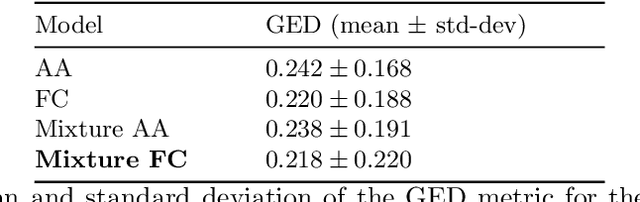

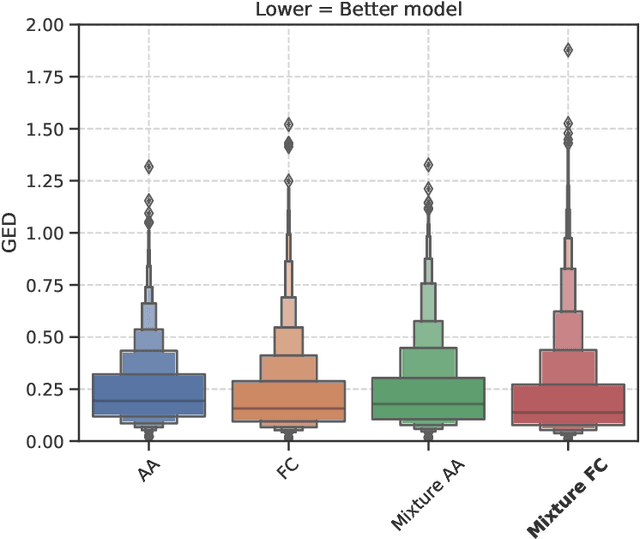

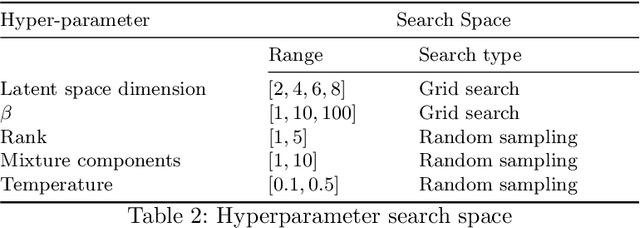

Abstract: We propose the Generalized Probabilistic U-Net, which extends the Probabilistic U-Net by allowing more general forms of the Gaussian distribution as the latent space distribution that can better approximate the uncertainty in the reference segmentations. We study the effect the choice of latent space distribution has on capturing the variation in the reference segmentations for lung tumors and white matter hyperintensities in the brain. We show that the choice of distribution affects the sample diversity of the predictions and their overlap with respect to the reference segmentations. We have made our implementation available at https://github.com/ishaanb92/GeneralizedProbabilisticUNet

* Accepted for publication at the Journal of Machine Learning for Biomedical Imaging (MELBA) https://melba-journal.org/2023:005. arXiv admin note: text overlap with arXiv:2207.12872

Generalized Probabilistic U-Net for medical image segmentation

Jul 26, 2022

Abstract: We propose the Generalized Probabilistic U-Net, which extends the Probabilistic U-Net by allowing more general forms of the Gaussian distribution as the latent space distribution that can better approximate the uncertainty in the reference segmentations. We study the effect the choice of latent space distribution has on capturing the uncertainty in the reference segmentations using the LIDC-IDRI dataset. We show that the choice of distribution affects the sample diversity of the predictions and their overlap with respect to the reference segmentations. For the LIDC-IDRI dataset, we show that using a mixture of Gaussians results in a statistically significant improvement in the generalized energy distance (GED) metric with respect to the standard Probabilistic U-Net. We have made our implementation available at https://github.com/ishaanb92/GeneralizedProbabilisticUNet
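
The GED metric named in the abstract is commonly computed as the squared generalized energy distance, 2·E[d(S, Y)] − E[d(S, S′)] − E[d(Y, Y′)], with d = 1 − IoU, where S are predicted samples and Y are reference segmentations. The sketch below assumes that definition and binary masks. The function names are hypothetical and this is not taken from the authors' repository.

```python
import numpy as np


def iou_distance(a, b):
    """Return 1 - IoU between two binary masks."""
    a = np.asarray(a, dtype=bool)
    b = np.asarray(b, dtype=bool)
    union = np.logical_or(a, b).sum()
    if union == 0:
        # Assumption: two empty masks are treated as identical (distance 0).
        return 0.0
    intersection = np.logical_and(a, b).sum()
    return 1.0 - intersection / union


def mean_pairwise_distance(xs, ys):
    """Average distance over all pairs (x, y), including self-pairs."""
    return float(np.mean([iou_distance(x, y) for x in xs for y in ys]))


def generalized_energy_distance(samples, references):
    """Squared GED between predicted samples and reference masks."""
    cross = mean_pairwise_distance(samples, references)
    within_samples = mean_pairwise_distance(samples, samples)
    within_references = mean_pairwise_distance(references, references)
    return 2.0 * cross - within_samples - within_references


if __name__ == "__main__":
    refs = [np.array([[1, 1], [0, 0]]), np.array([[1, 0], [0, 0]])]
    preds = [np.array([[1, 1], [0, 0]]), np.array([[0, 0], [1, 1]])]
    print(generalized_energy_distance(preds, refs))
```

Lower values indicate that the distribution of sampled segmentations is closer to the distribution of reference annotations. The value is 0 when the two sets of masks coincide.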

Influence of uncertainty estimation techniques on false-positive reduction in liver lesion detection

Jun 22, 2022

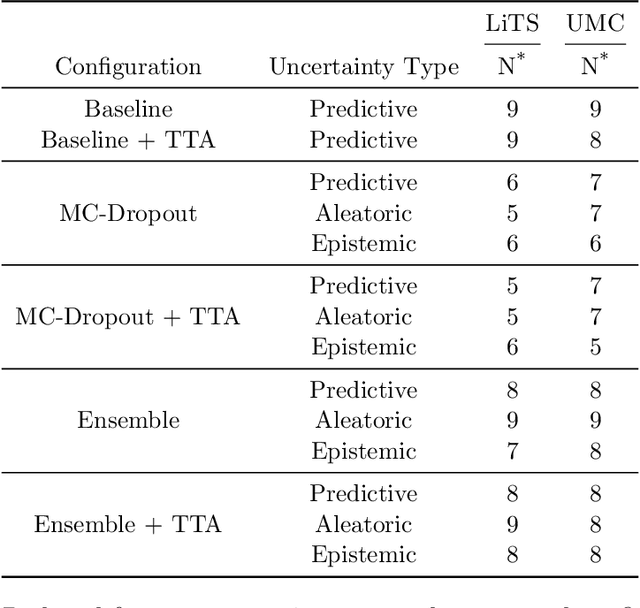

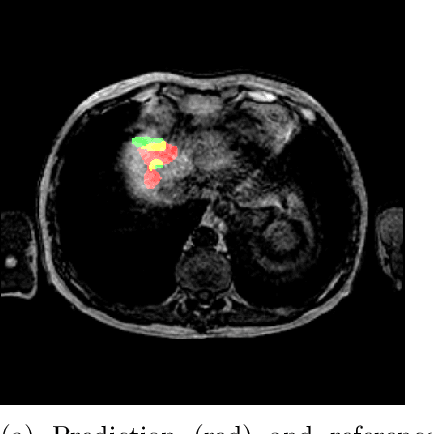

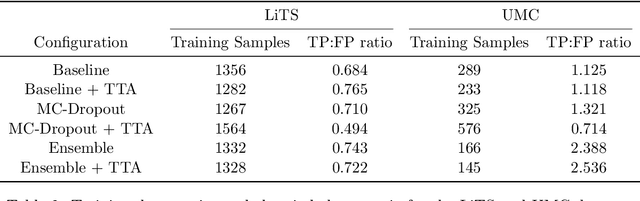

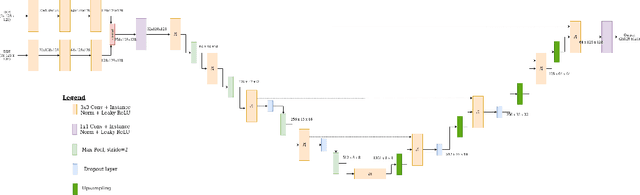

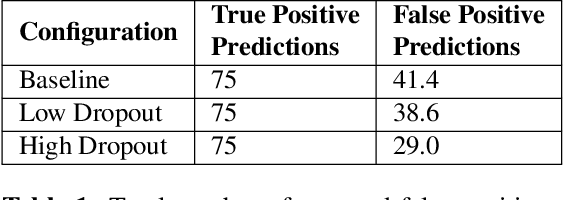

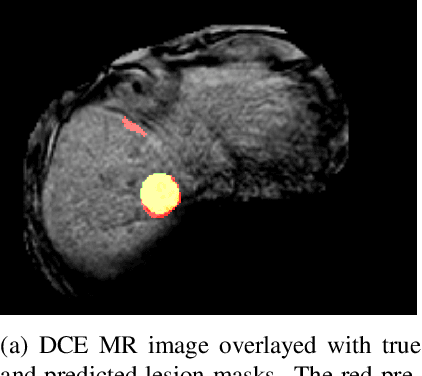

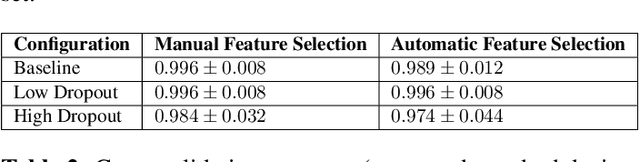

Abstract: Deep learning techniques show success in detecting objects in medical images, but still suffer from false-positive predictions that may hinder accurate diagnosis. The estimated uncertainty of the neural network output has been used to flag incorrect predictions. We study the role played by features computed from neural network uncertainty estimates and shape-based features computed from binary predictions in reducing false positives in liver lesion detection by developing a classification-based post-processing step for different uncertainty estimation methods. We demonstrate an improvement in the lesion detection performance of the neural network (with respect to F1-score) for all uncertainty estimation methods on two datasets, comprising abdominal MR and CT images respectively. We show that features computed from neural network uncertainty estimates tend not to contribute much toward reducing false positives. Our results show that factors like class imbalance (the ratio of true to false positives) and shape-based features extracted from uncertainty maps play an important role in distinguishing false-positive from true-positive predictions.

Using uncertainty estimation to reduce false positives in liver lesion detection

Jan 26, 2021

Abstract: Despite the successes of deep learning techniques at detecting objects in medical images, false-positive detections occur which may hinder an accurate diagnosis. We propose a technique to reduce false-positive detections made by a neural network using an SVM classifier trained with features derived from the uncertainty map of the neural network prediction. We demonstrate the effectiveness of this method for the detection of liver lesions on a dataset of abdominal MR images. We find that a dropout rate of 0.5 produces the fewest false positives in the neural network predictions, and that the trained classifier filters out approximately 90% of these false-positive detections in the test set.
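
As an illustration of what "features derived from the uncertainty map" could look like, the sketch below assumes the uncertainty map is the predictive entropy of the mean foreground probability over several stochastic (dropout-enabled) forward passes, and that per-lesion features are simple statistics of that map. The abstract does not specify these choices, so the function names and the feature set are hypothetical and are not the authors' exact method.

```python
import numpy as np


def predictive_entropy(prob_samples, eps=1e-12):
    """Per-voxel binary entropy of the mean foreground probability.

    prob_samples: array of shape (T, ...) holding T stochastic predictions.
    """
    mean_p = np.mean(np.asarray(prob_samples, dtype=float), axis=0)
    return -(mean_p * np.log(mean_p + eps)
             + (1.0 - mean_p) * np.log(1.0 - mean_p + eps))


def lesion_uncertainty_features(entropy_map, lesion_mask):
    """Summary statistics of the entropy inside one detected lesion.

    The choice of mean/max/std is an assumption made for illustration.
    """
    values = np.asarray(entropy_map)[np.asarray(lesion_mask, dtype=bool)]
    if values.size == 0:
        raise ValueError("lesion_mask selects no voxels")
    return {
        "mean": float(values.mean()),
        "max": float(values.max()),
        "std": float(values.std()),
    }


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    samples = rng.uniform(size=(10, 4, 4))  # stand-in for 10 dropout passes
    entropy = predictive_entropy(samples)
    mask = np.zeros((4, 4), dtype=bool)
    mask[1:3, 1:3] = True
    print(lesion_uncertainty_features(entropy, mask))
```

Feature vectors of this kind, one per detected lesion, could then be passed to a classifier such as an SVM to separate true-positive from false-positive detections.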

The GCE in a New Light: Disentangling the $\gamma$-ray Sky with Bayesian Graph Convolutional Neural Networks

Jun 22, 2020

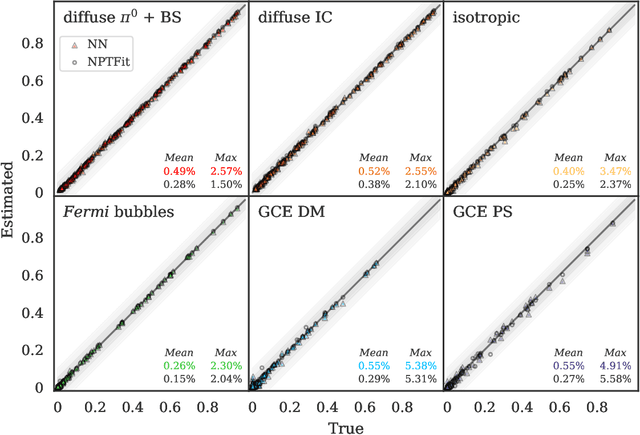

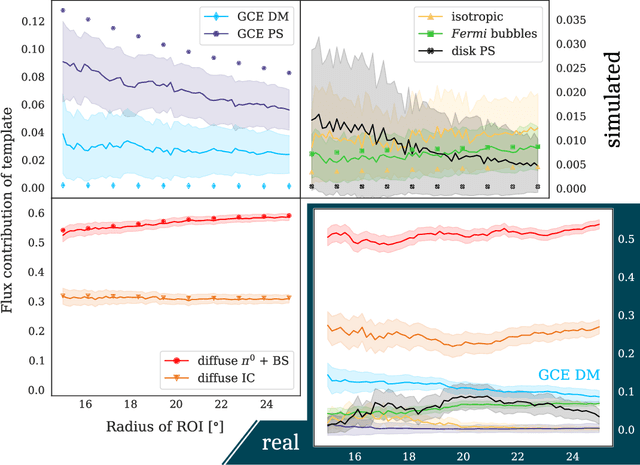

Abstract: A fundamental question regarding the Galactic Center Excess (GCE) is whether the underlying structure is point-like or smooth. This debate, often framed in terms of a millisecond pulsar or annihilating dark matter (DM) origin for the emission, awaits a conclusive resolution. In this work we weigh in on the problem using Bayesian graph convolutional neural networks. In simulated data, our neural network (NN) is able to reconstruct the flux of inner galaxy emission components to on average $\sim$0.5%, comparable to the non-Poissonian template fit (NPTF). When applied to the actual $\textit{Fermi}$-LAT data, we find that the NN estimates for the flux fractions from the background templates are consistent with the NPTF; however, the GCE is almost entirely attributed to smooth emission. While suggestive, we do not claim a definitive resolution for the GCE, as the NN tends to underestimate the flux of point-sources peaked near the 1$\sigma$ detection threshold. Yet the technique displays robustness to a number of systematics, including reconstructing injected DM, diffuse mismodeling, and unmodeled north-south asymmetries. So while the NN is hinting at a smooth origin for the GCE at present, with further refinements we argue that Bayesian Deep Learning is well placed to resolve this DM mystery.
