Isaac Squires

SAMBA: A Trainable Segmentation Web-App with Smart Labelling

Dec 07, 2023

Abstract: Segmentation is the assignment of a semantic class to every pixel in an image and is a prerequisite for various statistical analysis tasks in materials science, such as phase quantification, physics simulations and morphological characterization. The wide range of length scales, imaging techniques and materials studied in materials science means any segmentation algorithm must generalise to unseen data and support abstract, user-defined semantic classes. Trainable segmentation is a popular interactive segmentation paradigm in which a classifier is trained to map from image features to user-drawn labels. SAMBA is a trainable segmentation tool that uses Meta's Segment Anything Model (SAM) for fast, high-quality label suggestions and a random forest classifier for robust, generalizable segmentations. It is accessible in the browser (https://www.sambasegment.com/) without the need to download any external dependencies. The segmentation backend runs in the cloud, so the user does not need powerful hardware.
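The trainable segmentation paradigm described in this abstract can be illustrated with a minimal sketch. This is not SAMBA's code: the feature set (raw intensity plus a 3x3 local mean), the function names `pixel_features` and `train_and_segment`, the synthetic image and the use of scikit-learn's `RandomForestClassifier` are all assumptions made for illustration, and the SAM-based label suggestion step is omitted entirely.

```python
import numpy as np
from sklearn.ensemble import RandomForestClassifier


def pixel_features(image):
    """Per-pixel features (illustrative choice): raw intensity and 3x3 local mean."""
    padded = np.pad(image, 1, mode="edge")
    h, w = image.shape
    # Average the nine shifted views of the padded image to get a box-filtered copy.
    local_mean = sum(
        padded[dy:dy + h, dx:dx + w] for dy in range(3) for dx in range(3)
    ) / 9.0
    return np.stack([image, local_mean], axis=-1)


def train_and_segment(image, labels):
    """Fit on labelled pixels only, then predict a class for every pixel.

    labels: integer array, 0 = unlabelled, 1..K = user-drawn class ids.
    """
    feats = pixel_features(image)
    mask = labels > 0
    clf = RandomForestClassifier(n_estimators=50, random_state=0)
    clf.fit(feats[mask], labels[mask])
    flat = feats.reshape(-1, feats.shape[-1])
    return clf.predict(flat).reshape(image.shape)


# Synthetic two-phase image: dark left half, bright right half, mild noise.
rng = np.random.default_rng(0)
image = np.zeros((32, 32))
image[:, 16:] = 1.0
image += rng.normal(0, 0.05, image.shape)

# Sparse "user-drawn" labels: one small patch per phase.
labels = np.zeros((32, 32), dtype=int)
labels[2:6, 2:6] = 1
labels[2:6, 26:30] = 2

segmentation = train_and_segment(image, labels)
```

Only the labelled pixels are used for fitting, so the classifier generalises from a few scribbles to the whole image. A practical tool would typically use a richer feature stack than the two features assumed here.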

HRTF upsampling with a generative adversarial network using a gnomonic equiangular projection

Jun 09, 2023

Abstract: An individualised head-related transfer function (HRTF) is essential for creating realistic virtual reality (VR) and augmented reality (AR) environments. However, acoustically measuring high-quality HRTFs requires expensive equipment and an acoustic lab setting. To overcome these limitations and make the measurement more efficient, HRTF upsampling has been used in the past, whereby a high-resolution HRTF is created from a low-resolution one. This paper demonstrates how generative adversarial networks (GANs) can be applied to HRTF upsampling. We propose a novel approach that transforms the HRTF data for convenient use with a convolutional super-resolution generative adversarial network (SRGAN). This new approach is benchmarked against two baselines: barycentric upsampling and an HRTF selection approach. Experimental results show that the proposed method outperforms both baselines in terms of log-spectral distortion (LSD) and localisation performance using perceptual models when the input HRTF is sparse.
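For reference, log-spectral distortion is commonly defined per measurement direction as the root-mean-square difference, in decibels, between two magnitude spectra. The form below is the standard textbook definition, not one taken from this abstract. The symbols are assumptions: $H_{\mathrm{HR}}$ is the measured high-resolution HRTF, $H_{\mathrm{US}}$ the upsampled one, and $W$ the number of frequency bins $f_w$. The paper's exact normalisation and averaging over directions may differ.

$$
\mathrm{LSD} = \sqrt{\frac{1}{W} \sum_{w=1}^{W} \left( 20 \log_{10} \frac{\lvert H_{\mathrm{HR}}(f_w) \rvert}{\lvert H_{\mathrm{US}}(f_w) \rvert} \right)^{2}}
$$

A value of 0 dB means the two magnitude spectra are identical, and larger values indicate greater spectral error.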

Two approaches to inpainting microstructure with deep convolutional generative adversarial networks

Oct 13, 2022

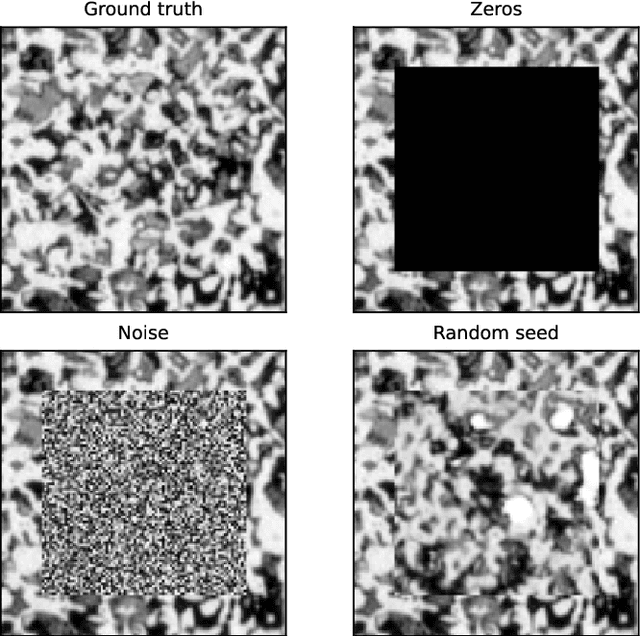

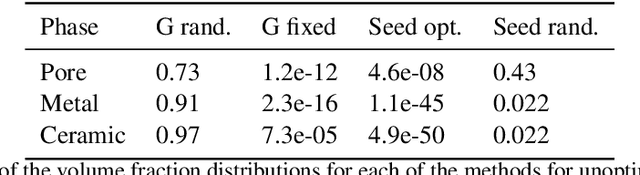

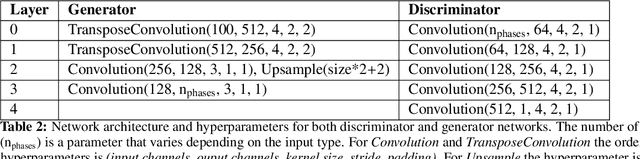

Abstract: Imaging is critical to the characterisation of materials. However, even with careful sample preparation and microscope calibration, imaging techniques are often prone to defects and unwanted artefacts. This is particularly problematic for applications where the micrograph is to be used for simulation or feature analysis, as defects are likely to lead to inaccurate results. Microstructural inpainting is a method to alleviate this problem by replacing occluded regions with synthetic microstructure with matching boundaries. In this paper, we introduce two methods that use generative adversarial networks to generate contiguous inpainted regions of arbitrary shape and size by learning the microstructural distribution from the unoccluded data. We find that one benefits from high speed and simplicity, whilst the other gives smoother boundaries at the inpainting border. We also outline the development of a graphical user interface that allows users to utilise these machine learning methods in a 'no-code' environment.

MicroLib: A library of 3D microstructures generated from 2D micrographs using SliceGAN

Oct 12, 2022

Abstract: 3D microstructural datasets are commonly used to define the geometrical domains used in finite element modelling. This has proven a useful tool for understanding how complex material systems behave under applied stresses, temperatures and chemical conditions. However, 3D imaging of materials is challenging for a number of reasons, including limited field of view, low resolution and difficult sample preparation. Recently, a machine learning method, SliceGAN, was developed to statistically generate 3D microstructural datasets of arbitrary size using a single 2D input slice as training data. In this paper, we present the results from applying SliceGAN to 87 different microstructures, ranging from biological materials to high-strength steels. To demonstrate the accuracy of the synthetic volumes created by SliceGAN, we compare three microstructural properties between the 2D training data and 3D generations, which show good agreement. This new microstructure library both provides valuable 3D microstructures that can be used in models and demonstrates the broad applicability of the SliceGAN algorithm.

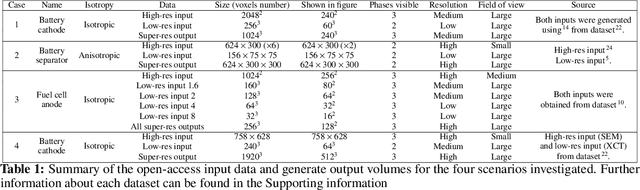

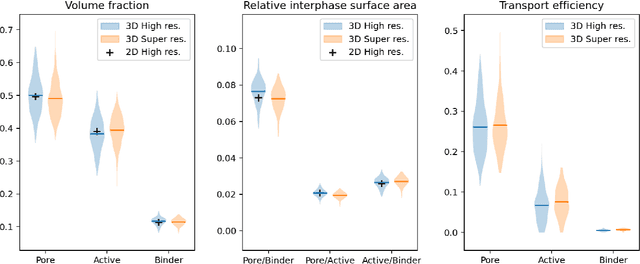

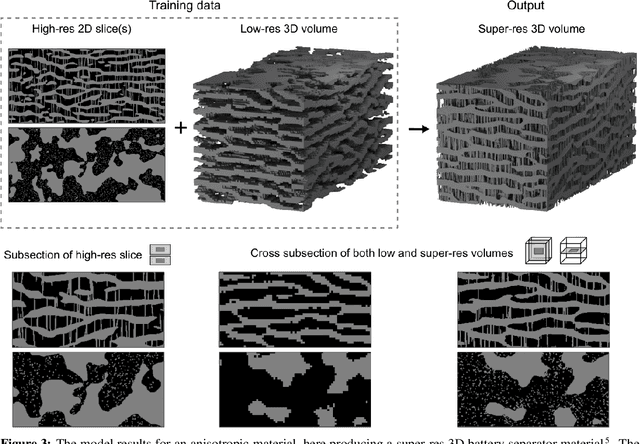

Super-resolution of multiphase materials by combining complementary 2D and 3D image data using generative adversarial networks

Oct 22, 2021

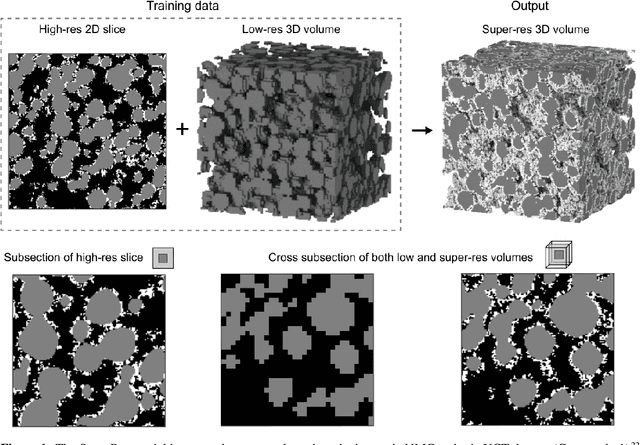

Abstract: Modelling the impact of a material's mesostructure on device-level performance typically requires access to 3D image data containing all the relevant information to define the geometry of the simulation domain. This image data must include sufficient contrast between phases to distinguish each material, be of high enough resolution to capture the key details, and have a large enough field of view to be representative of the material in general. It is rarely possible to obtain data with all of these properties from a single imaging technique. In this paper, we present a method for combining information from pairs of distinct but complementary imaging techniques in order to accurately reconstruct the desired multi-phase, high-resolution, representative 3D images. Specifically, we use deep convolutional generative adversarial networks to implement super-resolution, style transfer and dimensionality expansion. To demonstrate the widespread applicability of this tool, two pairs of datasets are used to validate the quality of the volumes generated by fusing the information from paired imaging techniques. Three key mesostructural metrics are calculated in each case to show the accuracy of this method. Having confidence in the accuracy of our method, we then demonstrate its power by applying it to a real data pair from a lithium-ion battery electrode, where the required 3D high-resolution image data is not available anywhere in the literature. We believe this approach is superior to previously reported statistical material reconstruction methods both in terms of its fidelity and ease of use. Furthermore, much of the data required to train this algorithm already exists in the literature, waiting to be combined. As such, our open-access code could precipitate a step change by generating the hard-to-obtain, high-quality image volumes necessary to simulate behaviour at the mesoscale.
