Irene Cheng

Feature CAM: Interpretable AI in Image Classification

Mar 08, 2024

Abstract: Deep Neural Networks have often been called black boxes because of their complex, deep architecture and the non-transparency of their inner layers. As a result, there is a lack of trust in using Artificial Intelligence in critical and high-precision fields such as security, finance, health, and manufacturing. A lot of focused work has been done to provide interpretable models, intending to deliver meaningful insights into the thoughts and behavior of neural networks. In our research, we compare the state-of-the-art Activation-based methods (ABM) for interpreting predictions of CNN models, specifically in the application of Image Classification. We then extend the comparison to eight CNN-based architectures to examine the differences in visualization and thus interpretability. We introduce a novel technique, Feature CAM, which falls in the perturbation-activation combination, to create fine-grained, class-discriminative visualizations. The resulting saliency maps from our experiments proved to be 3-4 times more human-interpretable than the state-of-the-art in ABM. At the same time, it preserves machine interpretability, measured as the average confidence score in classification.

Subjective and Objective Visual Quality Assessment of Textured 3D Meshes

Feb 08, 2021

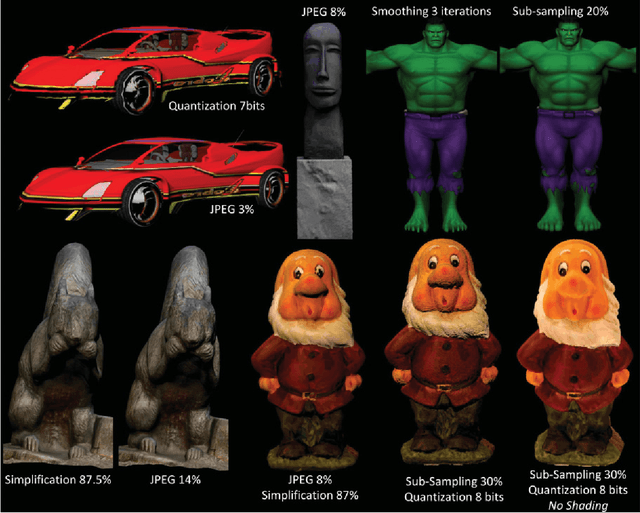

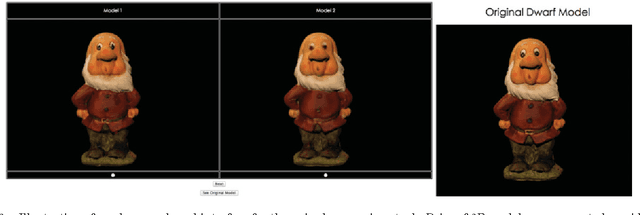

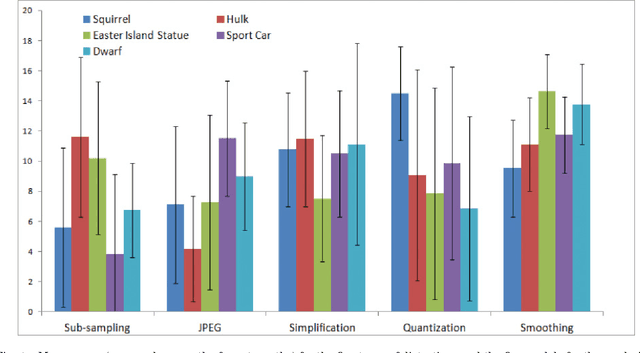

Abstract: Objective visual quality assessment of 3D models is a fundamental issue in computer graphics. Quality assessment metrics may allow a wide range of processes to be guided and evaluated, such as level of detail creation, compression, filtering, and so on. Most computer graphics assets are composed of geometric surfaces on which several texture images can be mapped to make the rendering more realistic. While some quality assessment metrics exist for geometric surfaces, almost no research has been conducted on the evaluation of texture-mapped 3D models. In this context, we present a new subjective study to evaluate the perceptual quality of textured meshes, based on a paired comparison protocol. We introduce both texture and geometry distortions on a set of 5 reference models to produce a database of 136 distorted models, evaluated using two rendering protocols. Based on analysis of the results, we propose two new metrics for visual quality assessment of textured meshes, as optimized linear combinations of accurate geometry and texture quality measurements. These proposed perceptual metrics outperform their counterparts in terms of correlation with human opinion. The database, along with the associated subjective scores, will be made publicly available online.

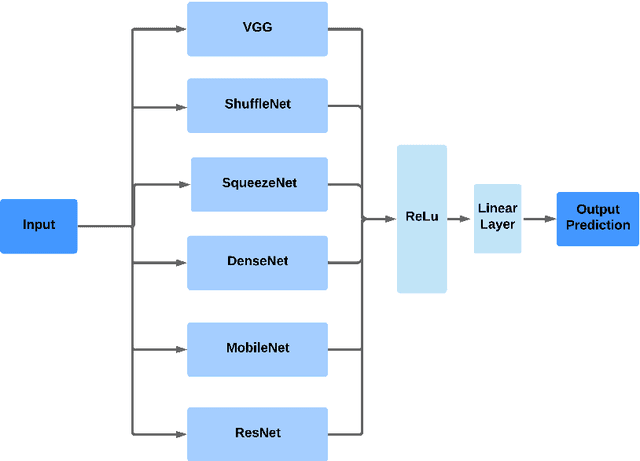

Parkinson's Disease Detection with Ensemble Architectures based on ILSVRC Models

Jul 23, 2020

Abstract: In this work, we explore various neural network architectures using Magnetic Resonance (MR) T1 images of the brain to identify Parkinson's Disease (PD), which is one of the most common neurodegenerative and movement disorders. We propose three ensemble architectures combining some winning Convolutional Neural Network models of the ImageNet Large Scale Visual Recognition Challenge (ILSVRC). All of our proposed architectures outperform existing approaches to detecting PD from MR images, achieving up to 95% detection accuracy. We also find that when we construct our ensemble architecture using models pretrained on the ImageNet dataset, which is unrelated to PD, the detection performance is significantly better than with models without any prior training. Our finding suggests a promising direction when no or insufficient training data is available.

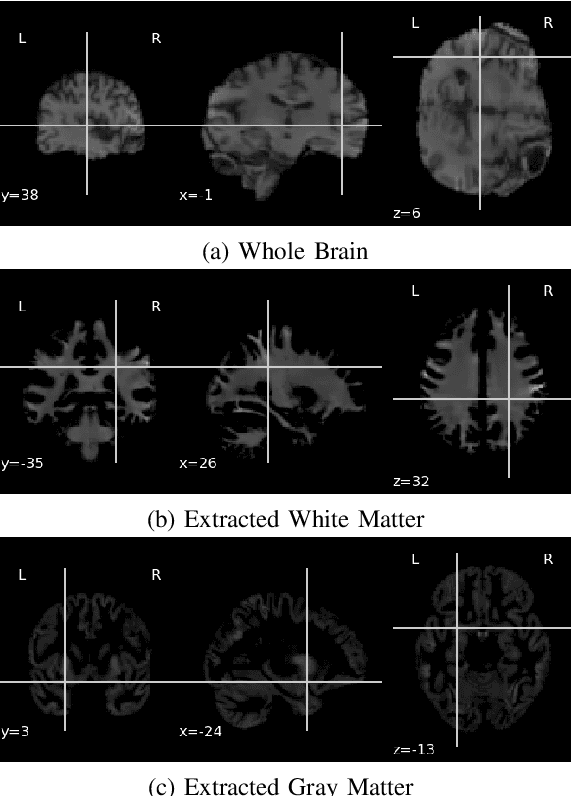

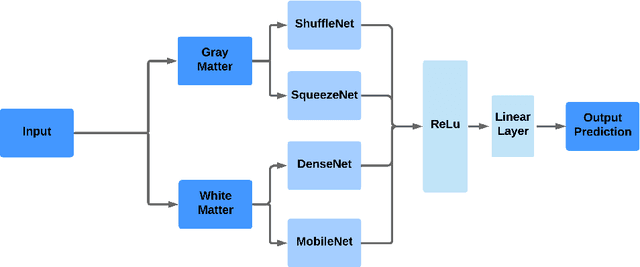

Parkinson's Disease Detection Using Ensemble Architecture from MR Images

Jul 01, 2020

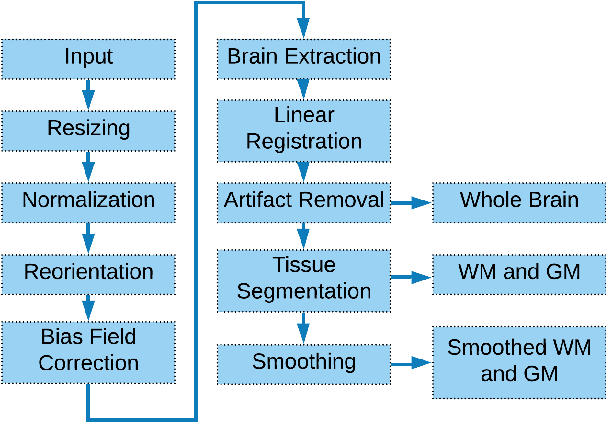

Abstract: Parkinson's Disease (PD) is one of the major nervous system disorders that affect people over 60. PD can cause cognitive impairments. In this work, we explore various approaches to identify Parkinson's using Magnetic Resonance (MR) T1 images of the brain. We experiment with ensemble architectures combining some winning Convolutional Neural Network models of the ImageNet Large Scale Visual Recognition Challenge (ILSVRC) and propose two architectures. We find that detection accuracy increases drastically when we focus on the Gray Matter (GM) and White Matter (WM) regions from the MR images instead of using whole MR images. We achieved an average accuracy of 94.7% using smoothed GM and WM extracts and one of our proposed architectures. We also perform occlusion analysis to determine which brain areas are relevant in the architecture's decision-making process.
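
Occlusion analysis, mentioned at the end of the abstract above, can be illustrated generically: hide one patch of the input at a time and record how much the model's score drops. The following is a minimal sketch of that general idea, not the authors' pipeline. The function names, the patch size, the fill value, and the toy scoring function are all assumptions made for illustration.

```python
def occlusion_map(image, score_fn, patch=2, fill=0.0):
    """Return a heat map of score drops caused by occluding each patch.

    image    : 2D list of floats (a grayscale slice, for illustration)
    score_fn : hypothetical callable mapping an image to a scalar score
    patch    : side length of the square occluder (assumed value)
    fill     : value written into the occluded region (assumed value)
    """
    height, width = len(image), len(image[0])
    baseline = score_fn(image)
    heat = [[0.0] * width for _ in range(height)]
    for top in range(0, height, patch):
        for left in range(0, width, patch):
            # Copy the image and blank out one patch.
            occluded = [row[:] for row in image]
            rows = range(top, min(top + patch, height))
            cols = range(left, min(left + patch, width))
            for y in rows:
                for x in cols:
                    occluded[y][x] = fill
            # A large drop means the model relied on this region.
            drop = baseline - score_fn(occluded)
            for y in rows:
                for x in cols:
                    heat[y][x] = drop
    return heat


def toy_score(image):
    """Stand-in 'model' that only looks at the top-left 2x2 corner."""
    return sum(image[y][x] for y in range(2) for x in range(2)) / 4.0


heat = occlusion_map([[1.0] * 4 for _ in range(4)], toy_score)
```

With the toy scorer, only the top-left patch produces a non-zero drop, which is the behaviour one would expect from a model that ignores the rest of the image.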

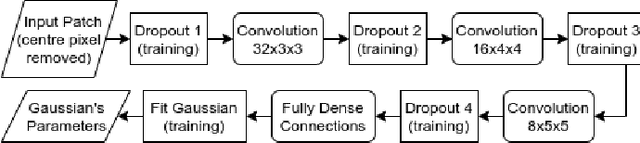

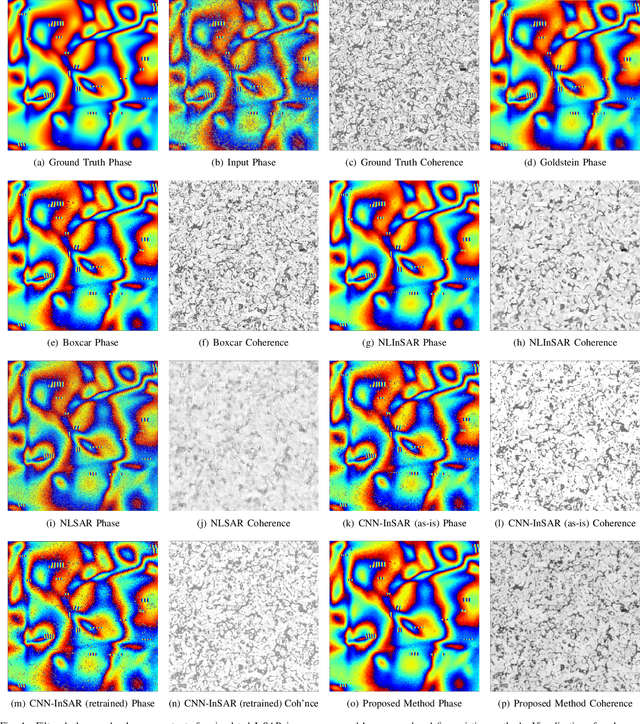

A Novel Generative Neural Approach for InSAR Joint Phase Filtering and Coherence Estimation

Jan 27, 2020

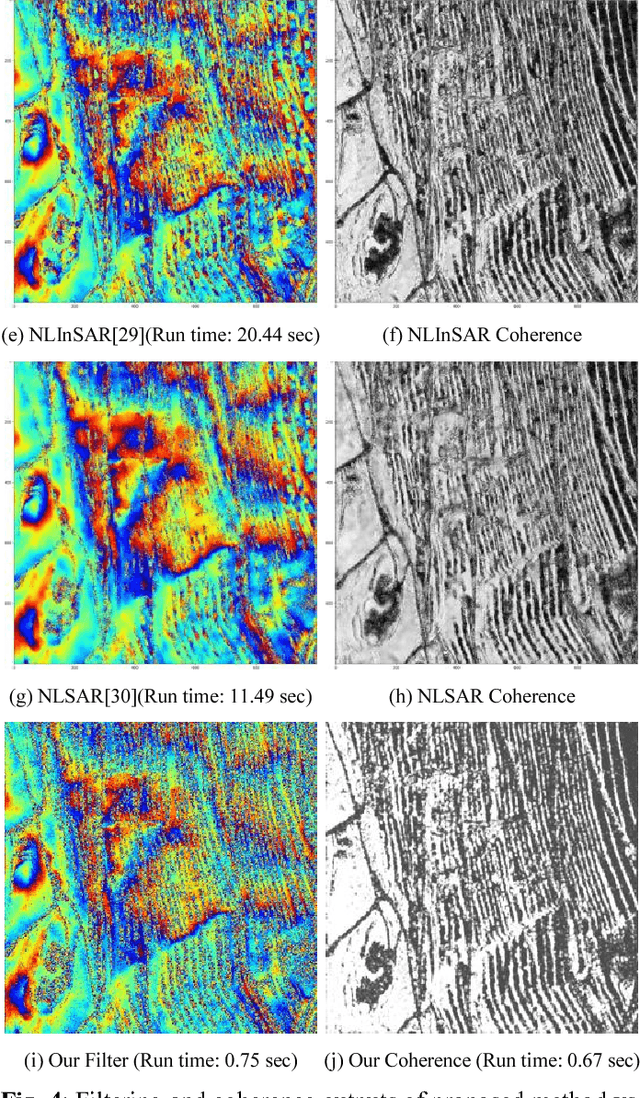

Abstract: Earth's physical properties like atmosphere, topography and ground instability can be determined by differencing billions of phase measurements (pixels) in subsequent matching Interferometric Synthetic Aperture Radar (InSAR) images. Quality (coherence) of each pixel can vary from perfect information (1) to complete noise (0), which needs to be quantified, alongside filtering information-bearing pixels. Phase filtering is thus critical to InSAR's Digital Elevation Model (DEM) production pipeline, as it removes spatial inconsistencies (residues), immensely improving the subsequent unwrapping. The recent explosion in the quantity of available InSAR data can facilitate Wide Area Monitoring (WAM) over several geographical regions, if effective and efficient automated processing can obviate manual quality control. Advances in parallel computing architectures, and in the Convolutional Neural Networks (CNNs) that thrive on them to rival human performance on visual pattern recognition, make this approach ideal for InSAR phase filtering for WAM, but it remains largely unexplored. We propose "GenInSAR", a CNN-based generative model for joint phase filtering and coherence estimation. We use satellite and simulated InSAR images to show overall superior performance of GenInSAR over five algorithms qualitatively, and quantitatively using Phase and Coherence Root-Mean-Squared-Error, Residue Reduction Percentage, and Phase Cosine Error.

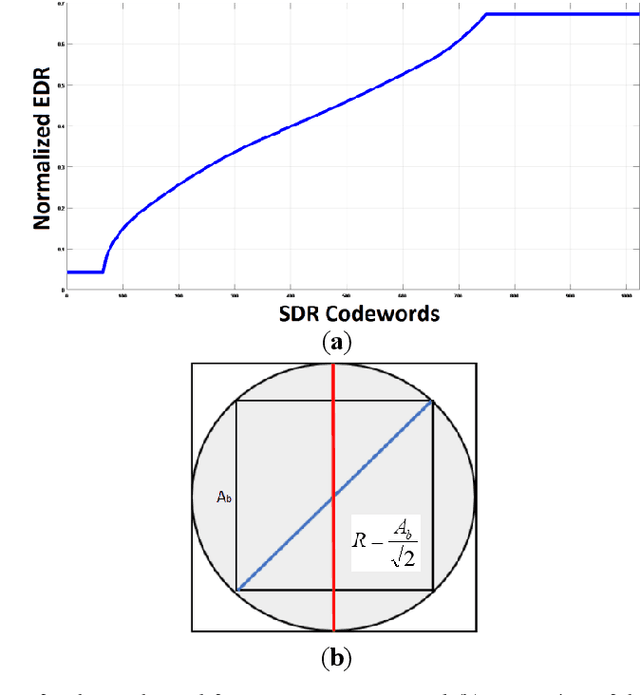

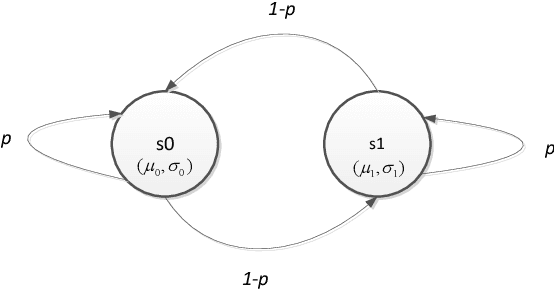

Adaptive Dithering Using Curved Markov-Gaussian Noise in the Quantized Domain for Mapping SDR to HDR Image

Jan 20, 2020

Abstract: High Dynamic Range (HDR) imaging is gaining increased attention due to its realistic content, for not only regular displays but also smartphones. Until sufficient HDR content is distributed, HDR visualization still relies mostly on converting Standard Dynamic Range (SDR) content. SDR images are often quantized, or bit-depth reduced, before SDR-to-HDR conversion, e.g. for video transmission. Quantization can easily lead to banding artefacts. In environments with limited computing and/or memory I/O, the traditional solution using spatial neighborhood information is not feasible. Our method includes noise generation (offline) and noise injection (online), and operates on pixels of the quantized image. We vary the magnitude and structure of the noise pattern adaptively based on the luma of the quantized pixel and the slope of the inverse-tone mapping function. Subjective user evaluations confirm the superior performance of our technique.

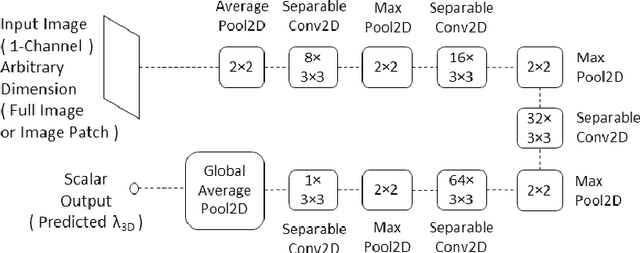

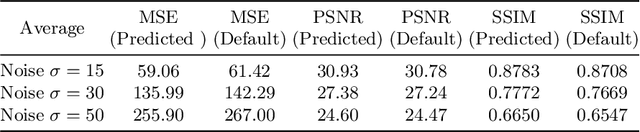

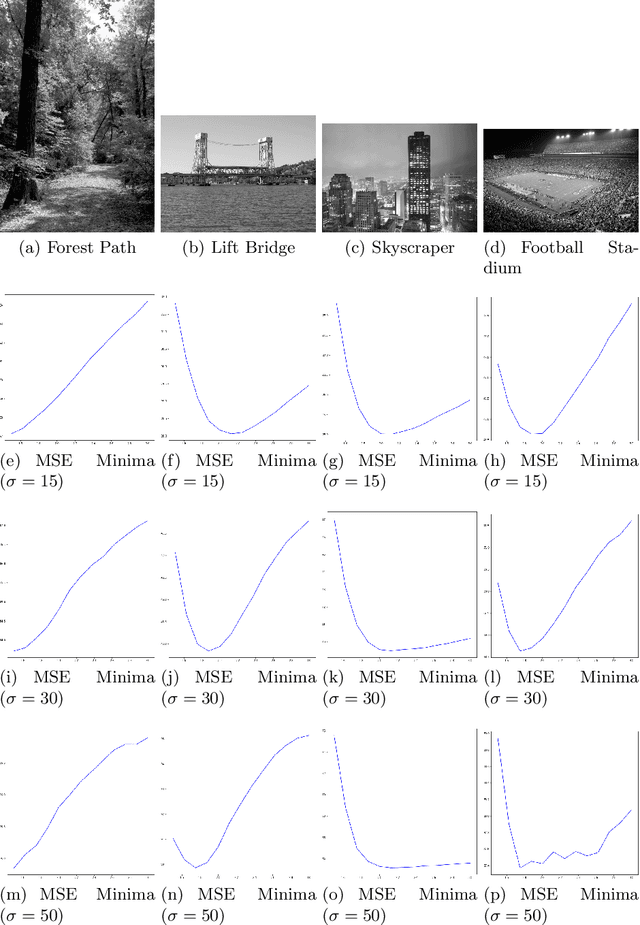

CNN-Based Real-Time Parameter Tuning for Optimizing Denoising Filter Performance

Jan 20, 2020

Abstract: We propose a novel direction to improve the denoising quality of filtering-based denoising algorithms in real time by predicting the best filter parameter value using a Convolutional Neural Network (CNN). We take the use case of BM3D, the state-of-the-art filtering-based denoising algorithm, to demonstrate and validate our approach. We propose and train a simple, shallow CNN to predict, in real time, the optimum filter parameter value given the input noisy image. Each training example consists of a noisy input image (training data) and the filter parameter value that produces the best output (training label). Both qualitative and quantitative results using the widely used PSNR and SSIM metrics on the popular BSD68 dataset show that the CNN-guided BM3D outperforms the original, unguided BM3D across different noise levels. Thus, our proposed method is a CNN-based improvement on the original BM3D, which uses a fixed, default parameter value for all images.
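
The abstract above describes training labels as the filter parameter value that produces the best output, and names PSNR as one of the quality metrics. A minimal sketch of how such a label could be chosen by exhaustive search over candidate values is shown below. This is an illustration under stated assumptions, not the authors' procedure: `best_parameter`, the candidate list, and `toy_denoise` (a stand-in that is not BM3D) are hypothetical, and only the PSNR formula itself is standard.

```python
import math


def psnr(reference, test, max_value=255.0):
    """Peak signal-to-noise ratio in dB between two same-sized 2D lists."""
    if len(reference) != len(test) or any(
        len(r) != len(t) for r, t in zip(reference, test)
    ):
        raise ValueError("images must have the same shape")
    squared_error = 0.0
    count = 0
    for ref_row, test_row in zip(reference, test):
        for r, t in zip(ref_row, test_row):
            squared_error += (r - t) ** 2
            count += 1
    mse = squared_error / count
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(max_value ** 2 / mse)


def best_parameter(noisy, clean, candidates, denoise):
    """Hypothetical label builder: the candidate with the highest PSNR."""
    return max(candidates, key=lambda p: psnr(clean, denoise(noisy, p)))


def toy_denoise(image, strength):
    """Stand-in filter (NOT BM3D): blend each pixel toward the global mean."""
    pixels = [v for row in image for v in row]
    mean = sum(pixels) / len(pixels)
    return [[(1 - strength) * v + strength * mean for v in row] for row in image]


clean = [[100.0, 100.0], [100.0, 100.0]]
noisy = [[90.0, 110.0], [110.0, 90.0]]
label = best_parameter(noisy, clean, [0.0, 0.5, 1.0], toy_denoise)
```

In this toy setting the clean image is flat, so the strongest blend recovers it exactly and is selected as the label. A real setup would swap in the actual denoiser and a finer grid of candidate values.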

CNN-based InSAR Coherence Classification

Jan 20, 2020

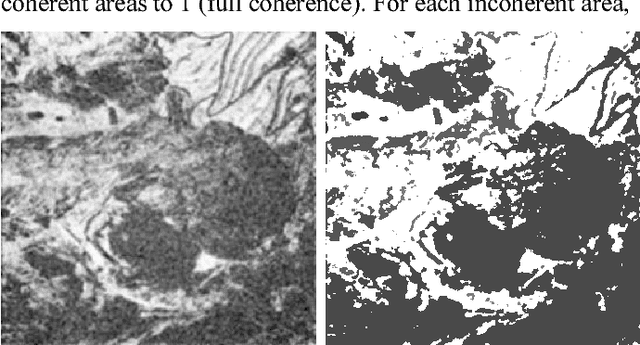

Abstract: Interferometric Synthetic Aperture Radar (InSAR) imagery based on microwaves reflected off ground targets is becoming increasingly important in remote sensing for ground movement estimation. However, the reflections are contaminated by noise, which distorts the signal's wrapped phase. Demarcation of image regions based on degree of contamination ("coherence") is an important component of the InSAR processing pipeline. We introduce Convolutional Neural Networks (CNNs) to this problem domain and show their effectiveness in improving coherence-based demarcation and reducing misclassifications in completely incoherent regions through intelligent preprocessing of training data. Quantitative and qualitative comparisons demonstrate the superiority of the proposed method over three established methods.

CNN-based InSAR Denoising and Coherence Metric

Jan 20, 2020

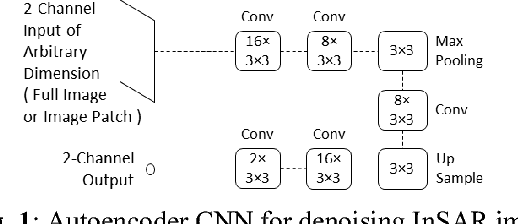

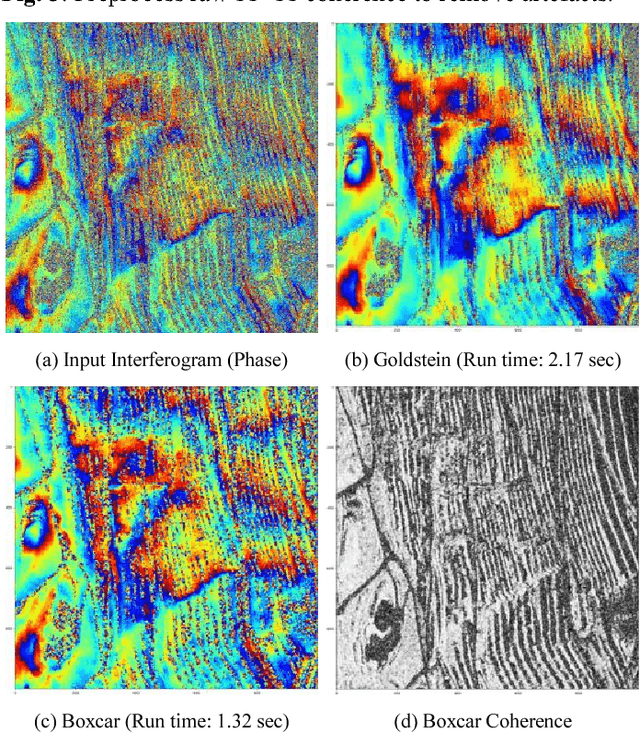

Abstract: Interferometric Synthetic Aperture Radar (InSAR) imagery for estimating ground movement, based on microwaves reflected off ground targets, is gaining increasing importance in remote sensing. However, noise corrupts the microwave reflections received at the satellite and contaminates the signal's wrapped phase. We introduce Convolutional Neural Networks (CNNs) to this problem domain and show the effectiveness of autoencoder CNN architectures in learning InSAR image denoising filters in the absence of clean ground truth images, and in reducing artefacts in estimated coherence through intelligent preprocessing of training data. We compare our results with four established methods to illustrate the superiority of the proposed method.

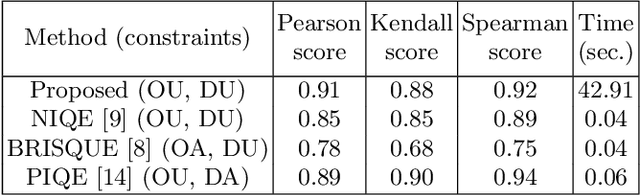

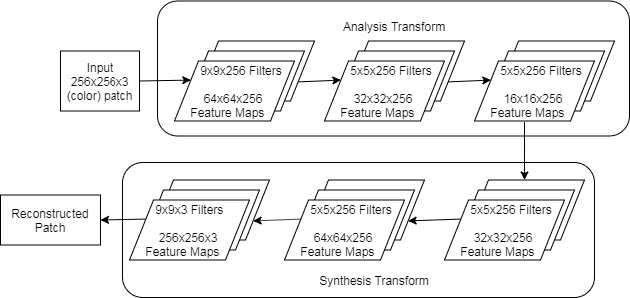

Potential of deep features for opinion-unaware, distortion-unaware, no-reference image quality assessment

Nov 27, 2019

Abstract: Image Quality Assessment (IQA) algorithms predict a quality score for a pristine or distorted input image, such that it correlates with human opinion. Traditional methods required a non-distorted "reference" version of the input image to compare with, in order to predict this score. Recent "no-reference" methods circumvent this requirement by modelling the distribution of clean image features, thereby making them more suitable for practical use. However, the majority of such methods either use hand-crafted features or require training on human opinion scores (supervised learning), which are difficult to obtain and standardise. We explore the possibility of using deep features instead, in particular the encoded (bottleneck) feature maps of a Convolutional Autoencoder neural network architecture. Also, we do not train the network on subjective scores (unsupervised learning). The primary requirements for an IQA method are a monotonic increase in predicted scores with increasing degree of input image distortion, and consistent ranking of images with the same distortion type and content, but different distortion levels. Quantitative experiments using the Pearson, Kendall and Spearman correlation scores on a diverse set of images show that our proposed method meets the above requirements better than the state-of-the-art method (which uses hand-crafted features) for three types of distortions: blurring, noise and compression artefacts. This demonstrates the potential for future research in this relatively unexplored sub-area within IQA.
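
The monotonicity requirement in the abstract above is what rank correlations measure: a score that rises with every increase in distortion level gets a Spearman and Kendall value of 1 even when the relationship is not linear. The sketch below shows the three coefficients in plain Python under stated assumptions. The helper names and the example numbers are made up for illustration, and the Kendall variant shown is tau-a, which does not correct for ties; the paper's exact variant is not stated in the abstract.

```python
import math


def pearson(x, y):
    """Pearson linear correlation coefficient."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    std_x = math.sqrt(sum((a - mean_x) ** 2 for a in x))
    std_y = math.sqrt(sum((b - mean_y) ** 2 for b in y))
    return cov / (std_x * std_y)


def average_ranks(values):
    """1-based ranks, with tied values sharing their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        shared = (i + j) / 2.0 + 1.0
        for k in range(i, j + 1):
            ranks[order[k]] = shared
        i = j + 1
    return ranks


def spearman(x, y):
    """Spearman rank correlation: Pearson applied to the ranks."""
    return pearson(average_ranks(x), average_ranks(y))


def kendall_tau_a(x, y):
    """Kendall tau-a: (concordant - discordant) / number of pairs."""
    n = len(x)
    concordant = discordant = 0
    for i in range(n):
        for j in range(i + 1, n):
            sign = (x[i] - x[j]) * (y[i] - y[j])
            if sign > 0:
                concordant += 1
            elif sign < 0:
                discordant += 1
    return (concordant - discordant) / (n * (n - 1) / 2)


# Made-up example: predicted scores rise monotonically but not linearly.
levels = [1, 2, 3, 4, 5]
scores = [0.1, 0.4, 0.5, 2.0, 9.0]
```

For these made-up numbers, `spearman(levels, scores)` and `kendall_tau_a(levels, scores)` both reach 1 because the ordering is perfectly preserved, while `pearson(levels, scores)` is lower because the growth is not linear.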
