Inha Cha

Uncovering Factor Level Preferences to Improve Human-Model Alignment

Oct 09, 2024

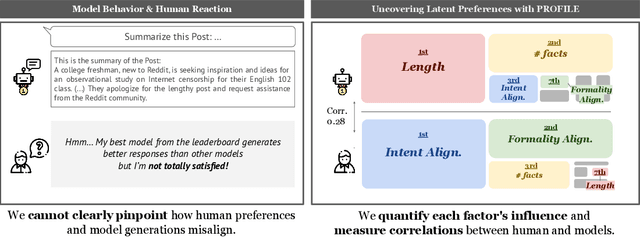

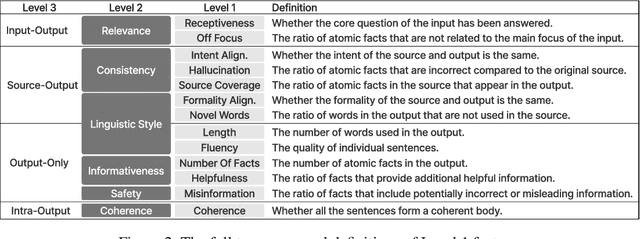

Abstract:Despite advancements in Large Language Model (LLM) alignment, understanding the reasons behind LLM preferences remains crucial for bridging the gap between desired and actual behavior. LLMs often exhibit biases or tendencies that diverge from human preferences, such as favoring certain writing styles or producing overly verbose outputs. However, current methods for evaluating preference alignment often lack explainability, relying on coarse-grained comparisons. To address this, we introduce PROFILE (PRObing Factors of InfLuence for Explainability), a novel framework that uncovers and quantifies the influence of specific factors driving preferences. PROFILE's factor level analysis explains the 'why' behind human-model alignment and misalignment, offering insights into the direction of model improvement. We apply PROFILE to analyze human and LLM preferences across three tasks: summarization, helpful response generation, and document-based question-answering. Our factor level analysis reveals a substantial discrepancy between human and LLM preferences in generation tasks, whereas LLMs show strong alignment with human preferences in evaluation tasks. We demonstrate how leveraging factor level insights, including addressing misaligned factors or exploiting the generation-evaluation gap, can improve alignment with human preferences. This work underscores the importance of explainable preference analysis and highlights PROFILE's potential to provide valuable training signals, driving further improvements in human-model alignment.

The Generative AI Paradox on Evaluation: What It Can Solve, It May Not Evaluate

Feb 09, 2024

Abstract:This paper explores the assumption that Large Language Models (LLMs) skilled in generation tasks are equally adept as evaluators. We assess the performance of three LLMs and one open-source LM in Question-Answering (QA) and evaluation tasks using the TriviaQA (Joshi et al., 2017) dataset. Results indicate a significant disparity, with LLMs exhibiting lower performance in evaluation tasks compared to generation tasks. Intriguingly, we discover instances of unfaithful evaluation where models accurately evaluate answers in areas where they lack competence, underscoring the need to examine the faithfulness and trustworthiness of LLMs as evaluators. This study contributes to the understanding of "the Generative AI Paradox" (West et al., 2023), highlighting a need to explore the correlation between generative excellence and evaluation proficiency, and the necessity to scrutinize the faithfulness aspect in model evaluations.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge