Indra Priyadarsini

LLM-Fusion: A Novel Multimodal Fusion Model for Accelerated Material Discovery

Mar 02, 2025

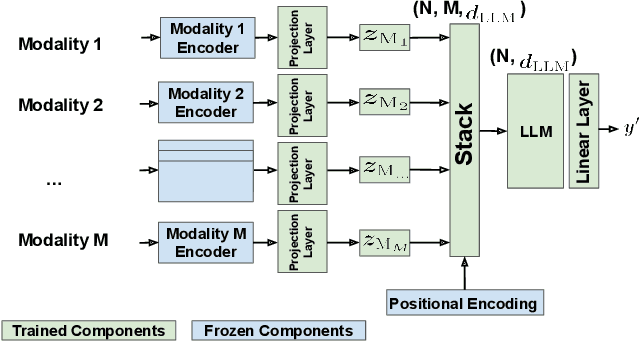

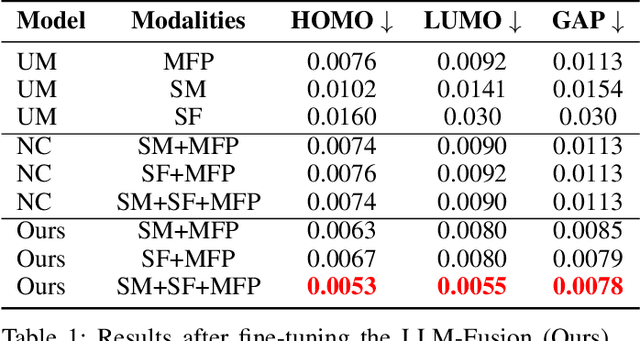

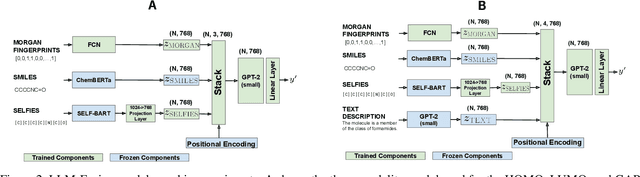

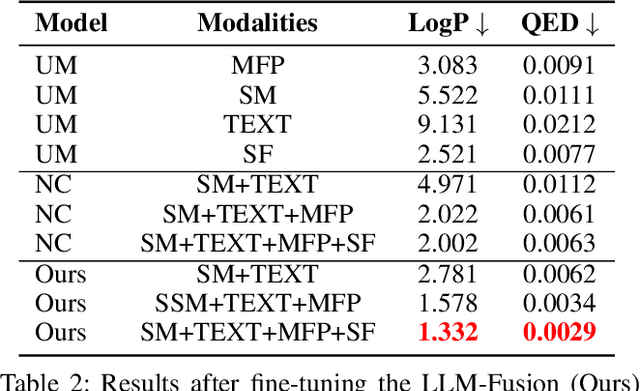

Abstract: Discovering materials with desirable properties efficiently remains a significant problem in materials science. Many studies have tackled this problem by using different sets of information available about the materials. Among them, multimodal approaches have proven promising because they can combine different sources of information. However, fusion algorithms to date remain simple and lack a mechanism for building a rich representation of multiple modalities. This paper presents LLM-Fusion, a novel multimodal fusion model that leverages large language models (LLMs) to integrate diverse representations, such as SMILES, SELFIES, text descriptions, and molecular fingerprints, for accurate property prediction. Our approach introduces a flexible LLM-based architecture that supports multimodal input processing and enables material property prediction with higher accuracy than traditional methods. We validate our model on two datasets across five prediction tasks and demonstrate its effectiveness compared to unimodal and naive concatenation baselines.

Improving Performance Prediction of Electrolyte Formulations with Transformer-based Molecular Representation Model

Jun 28, 2024

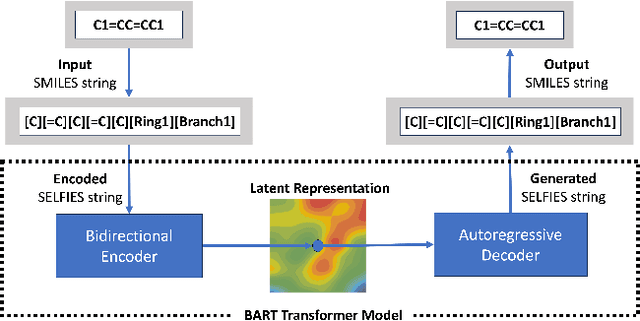

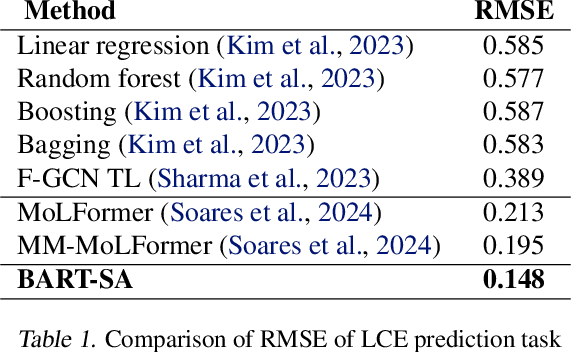

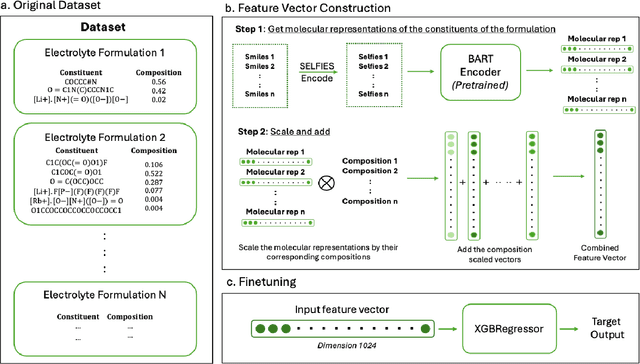

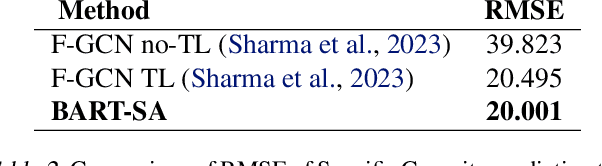

Abstract: Development of efficient and high-performing electrolytes is crucial for advancing energy storage technologies, particularly in batteries. Predicting the performance of battery electrolytes relies on complex interactions between the individual constituents. Consequently, a strategy that adeptly captures these relationships and forms a robust representation of the formulation is essential for integrating with machine learning models to predict properties accurately. In this paper, we introduce a novel approach that leverages a transformer-based molecular representation model to capture the representation of electrolyte formulations effectively and efficiently. The proposed approach is evaluated on two battery property prediction tasks, and the results show superior performance compared to state-of-the-art methods.

MHG-GNN: Combination of Molecular Hypergraph Grammar with Graph Neural Network

Sep 28, 2023

Abstract: Property prediction plays an important role in material discovery. As an initial step toward developing a foundation model for materials science, we introduce a new autoencoder called MHG-GNN, which combines a graph neural network (GNN) with Molecular Hypergraph Grammar (MHG). Results on a variety of property prediction tasks with diverse materials show that MHG-GNN is promising.
